The information and communication technology industry today faces a global challenge: to develop new services with improved quality of service (QoS) while reducing its environmental impact. In addition, the development towards 5G and beyond-5G (B5G) technologies, the exponential growth in the number of connected objects …

DARPA thrust on Machine Learning Applied to Military Radiofrequency applications

The US considers the electromagnetic domain, that is, the electromagnetic spectrum portion of the information environment, a domain of warfare. Assured access to the RF portion of the electromagnetic spectrum is critical to communications, radar sensing, command and control, time transfer, and geo-location, and therefore to conducting military operations. The …

DARPA OFFSET developing offensive swarm tactics with hundreds of drones and ground robots for diverse missions in complex urban environments

Artificial intelligence is being employed in a plethora of defense applications including novel weaponry development, command and control of military operations, logistics and maintenance optimization, and force training and sustainment. AI also enhances the autonomy of unmanned air, ground, and underwater vehicles. It is also assisting in new concepts of …

Software Defined Radio (SDR) standards for commercial and Military

A radio is any kind of device that wirelessly transmits or receives signals in the radio frequency (RF) part of the electromagnetic spectrum to facilitate the communication or transfer of information. In today’s world, radios exist in a multitude of items such as cell phones, computers, car door openers, vehicles, …

Robots rapidly advancing from assistants, to becoming our close companions to replacing us and merging with us as Robot-human hybrids

A robot is any automatically operated machine that replaces human effort, though it may not resemble human beings in appearance or perform functions in a humanlike manner. A robot is a machine, especially one programmable by a computer, capable of carrying out a complex series of actions automatically. A robot can be …

Artificial-Intelligence Race among Countries to strengthen economy, solve societal challenge and transform industries

The term artificial intelligence (AI) was coined by John McCarthy, who defined it as “the science and engineering of making intelligent machines”. The field was founded on the claim that a central property of humans, intelligence, can be so precisely described that a machine can be made to simulate it. The general …

Robotics simulator

Modelling is the process of producing a model (e.g., a physical, mathematical, or logical representation of a system, entity, phenomenon, or process), including its construction and working. This model is similar to a real system, which helps the analyst predict the effect of changes to the system. Simulation of a …

Accurate, realtime Geospatial intelligence (GEOINT) critical for Military and Security operations, experiencing many new technology trends

GEOINT is imagery and information that relates human activity to geography. It is typically collected from satellites and aircraft and can illuminate patterns not easily detectable by other means. GEOINT (GEOspatial INTelligence) is the foundation of intelligence; it is the process by which skilled analysts evaluate and correlate information, either …

Enterprise architecture

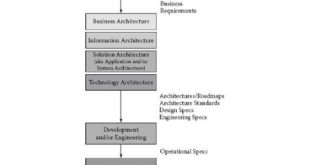

The term enterprise can be defined as describing an organizational unit, organization, or collection of organizations that share a set of common goals and collaborate to provide specific products or services to customers. In that sense, the term enterprise covers various types of organizations, regardless of their size, ownership model, operational …

Satellite Remote Sensing

Remote sensing is the science of acquiring information about the Earth’s surface without actually being in contact with it. This is done by sensing and recording reflected or emitted energy and processing, analyzing, and applying that information. Some examples of remote sensing are special cameras on satellites and airplanes taking …

International Defense Security & Technology Your trusted Source for News, Research and Analysis
