Alexander Graham Bell discovered photoacoustics by accident while developing the photophone, a follow-up to his recently developed telephone that operated via modulated sunlight instead of electricity. To operate a photophone, you would speak into its transmitter toward a mirror placed inside it. The vibrations of your voice would cause similar vibrations in the mirror. Sunlight directed onto the mirror would capture the vibrations and project them into the photophone’s receiver, where they would be turned back into audible sound.

Bell observed that when he illuminated a solid material with a rapidly pulsed beam of light, the material emitted sound at the same frequency as the pulsing of the beam. Bell called the effect “photophonic phenomena” or “radiophony”; we now know it as photoacoustics.

Nowadays, the photoacoustic effect and photoacoustic imaging are useful for nearly any application area that involves generating images of opaque material samples. Photoacoustics combines the capabilities of optical and acoustical imaging. This combination offers the best of both worlds for biomedicine, as it makes photoacoustic imaging a multiscale, high-resolution, and noninvasive technique.

Some potential applications of photoacoustics for biomedicine include generating images of vasculature that show the level of oxygen in the blood vessels, photoacoustics-based mammography systems for more accurate results and a patient-friendly experience, and locating cancerous tumors without any interaction that could alter the cancer’s course of action.

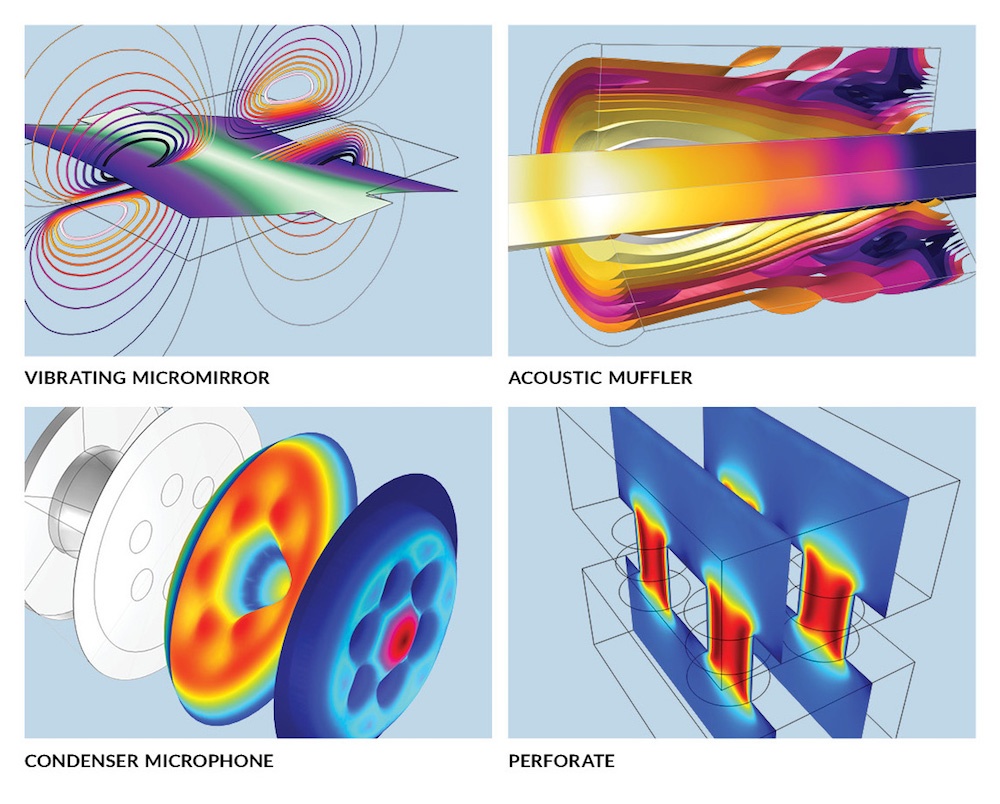

Using acoustics modeling software, it’s possible to study this phenomenon and optimize devices that rely on it. Photoacoustic effects can be modeled with the thermoviscous acoustics equations, which account for the acoustic perturbations in pressure, velocity, and temperature. Thermoviscous acoustics is involved in the study of many different devices, including condenser microphones, miniature loudspeakers, hearing aids, mobile devices, and MEMS structures.

Photoacoustic Imaging

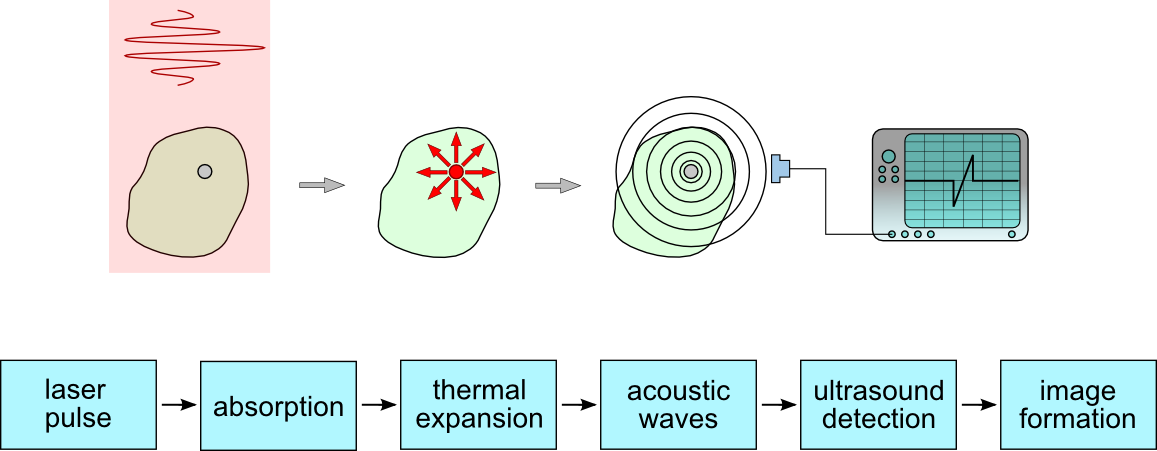

Photoacoustics is the study of vibrations induced in matter by light. Laser light causes localised heating when it is absorbed by a surface. This in turn makes the target area expand and sends a pressure wave through the rest of the material. This effect enables imaging of opaque systems, particularly biological samples.

Photoacoustic imaging (PAI) is an emerging imaging technique that bridges the gap between pure optical and acoustic techniques to provide images with optical contrast at the acoustic penetration depth.

Photoacoustic imaging (optoacoustic imaging) is a biomedical imaging modality based on the photoacoustic effect. In photoacoustic imaging, non-ionizing laser pulses are delivered into biological tissues (when radio frequency pulses are used, the technology is referred to as thermoacoustic imaging). Some of the delivered energy will be absorbed and converted into heat, leading to transient thermoelastic expansion and thus wideband (i.e. MHz) ultrasonic emission. The generated ultrasonic waves are detected by ultrasonic transducers and then analyzed to produce images. It is known that optical absorption is closely associated with physiological properties, such as hemoglobin concentration and oxygen saturation. As a result, the magnitude of the ultrasonic emission (i.e. photoacoustic signal), which is proportional to the local energy deposition, reveals physiologically specific optical absorption contrast. 2D or 3D images of the targeted areas can then be formed.
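The proportionality between photoacoustic signal and local energy deposition is commonly written as p0 = Γ · μa · F, where Γ is the dimensionless Grüneisen parameter, μa the optical absorption coefficient, and F the local laser fluence. The sketch below evaluates that relation. The symbols, the function name, and all numeric values are illustrative assumptions, not figures from the text.

```python
def initial_pressure_pa(grueneisen, mu_a_per_cm, fluence_mj_per_cm2):
    """Initial photoacoustic pressure rise p0 = Gamma * mu_a * F, in pascals."""
    # mu_a [1/cm] times F [mJ/cm^2] is the absorbed energy density in mJ/cm^3.
    energy_density_mj_per_cm3 = mu_a_per_cm * fluence_mj_per_cm2
    # 1 mJ/cm^3 = 1e-3 J / 1e-6 m^3 = 1e3 J/m^3, and 1 J/m^3 = 1 Pa.
    return grueneisen * energy_density_mj_per_cm3 * 1e3


# Assumed, order-of-magnitude inputs for soft tissue (hypothetical values).
p0 = initial_pressure_pa(grueneisen=0.2, mu_a_per_cm=2.0, fluence_mj_per_cm2=10.0)
print(f"Initial pressure rise: {p0 / 1e3:.1f} kPa")
```

Because the relation is linear in μa, a region that absorbs twice as strongly at the chosen wavelength produces twice the initial pressure, which is the origin of the absorption contrast described above.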

The two key components that have allowed PAI to attain high-resolution images at deeper penetration depths are the photoacoustic signal generator, which is typically implemented as a pulsed laser, and the detector that receives the generated acoustic signals. Many types of acoustic sensors have been explored as detectors for PAI, including Fabry–Perot interferometers (FPIs), micro ring resonators (MRRs), piezoelectric transducers, and capacitive micromachined ultrasound transducers (CMUTs).

In 2018, Chinese researchers developed a photoacoustic technology that pairs fiber laser light and ultrasound to image tissue

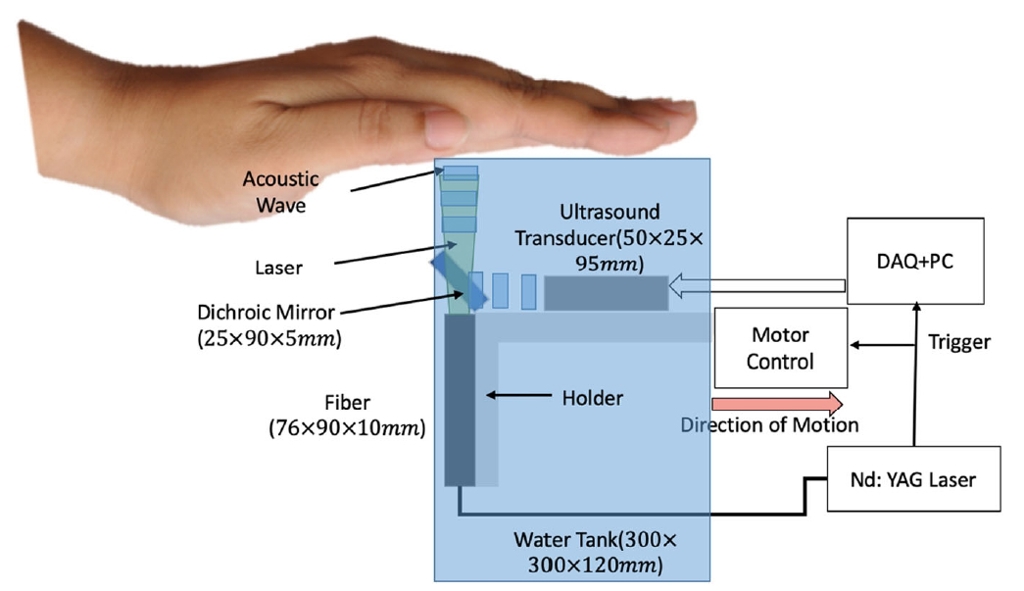

Researchers at Jinan University’s Institute of Photonics Technology (Guangzhou, China) have developed a photoacoustic imaging technique that may have potential applications in wearable devices, instrumentation, and medical diagnostics. It uses fiber-optic ultrasound detection, exploiting the acoustic effects on laser pulses via the thermoelastic effect—temperature changes that occur as a result of the elastic strain.

“Conventional fiber-optic sensors detect extremely weak signals by taking advantage of their high sensitivity via phase measurement,” says Long Jin, the lead researcher. These same sorts of sensors are used in military applications to detect low-frequency (kilohertz) acoustic waves. However, they don’t work so well for ultrasound waves at the megahertz frequencies used for medical purposes because ultrasound waves typically propagate as spherical waves and have a very limited interaction length with optical fibers. The new sensors were specifically developed for medical imaging, Jin says, and can provide better sensitivity than piezoelectric transducers currently used.

The research team designed an ultrasound sensor that is essentially a compact laser built within the 8-µm-diameter core of a single-mode optical fiber. “It has a typical length of only 8 mm,” Jin says. “To build up the laser, two highly reflective grating mirrors are UV-written into the fiber core to provide optical feedback.” This fiber then gets doped with ytterbium and erbium to provide sufficient optical gain at 1530 nm. They use a 980 nm semiconductor laser as the pump laser. “Such fiber lasers with a kilohertz-order linewidth—the width of the optical spectrum—can be exploited as sensors because they offer a high signal-to-noise ratio,” says research team member Yizhi Liang, an assistant professor at the Institute of Photonics Technology.

The ultrasound detection benefits from the combined technique because side-incident ultrasound waves deform the fiber, modulating the lasing frequency. “By detecting the frequency shift, we can reconstruct the acoustic waveform,” Liang says. To demodulate the ultrasound signal (that is, to extract the original information), the team does not use conventional interferometry-based methods or any additive frequency locking. Rather, they use a method called “self-heterodyning,” in which the result of mixing two frequencies is detected. Here, they measure the radio frequency-domain beat note given by two orthogonal polarization modes of the fiber cavity. This demodulation also intrinsically guarantees a stable signal output.
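To illustrate how an acoustic waveform can be recovered from the frequency shift of a beat note, here is a minimal NumPy sketch. It is not the researchers' demodulation chain: it simulates a frequency-modulated beat signal and estimates its instantaneous frequency from the analytic signal. The beat frequency, acoustic frequency, deviation, and sample rate are all hypothetical values chosen for illustration.

```python
import numpy as np


def instantaneous_frequency(signal, sample_rate_hz):
    """Estimate instantaneous frequency (Hz) of a real signal via its analytic signal."""
    n = len(signal)
    spectrum = np.fft.fft(signal)
    # Analytic signal: keep DC (and Nyquist), double positive bins, zero negative bins.
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    analytic = np.fft.ifft(spectrum * weights)
    phase = np.unwrap(np.angle(analytic))
    # Phase difference per sample, converted to cycles per second.
    return np.diff(phase) * sample_rate_hz / (2.0 * np.pi)


# Hypothetical parameters, for illustration only.
sample_rate_hz = 100e6   # digitizer sample rate
beat_hz = 20e6           # unperturbed beat-note frequency
acoustic_hz = 1e6        # ultrasound tone acting on the fiber
deviation_hz = 0.5e6     # peak frequency shift caused by the ultrasound
n_samples = 2000

t = np.arange(n_samples) / sample_rate_hz
# Frequency modulation: f(t) = beat_hz + deviation_hz * cos(2*pi*acoustic_hz*t).
phase = (2 * np.pi * beat_hz * t
         + (deviation_hz / acoustic_hz) * np.sin(2 * np.pi * acoustic_hz * t))
beat_note = np.cos(phase)

# The frequency shift relative to the unperturbed beat note tracks the acoustic waveform.
recovered_shift = instantaneous_frequency(beat_note, sample_rate_hz) - beat_hz
t_mid = t[:-1] + 0.5 / sample_rate_hz
expected_shift = deviation_hz * np.cos(2 * np.pi * acoustic_hz * t_mid)
print("correlation:", np.corrcoef(recovered_shift, expected_shift)[0, 1])
```

In this toy setup the recovered frequency shift follows the simulated ultrasound tone closely; a real system would also have to contend with noise and a finite detection bandwidth.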

The fiber laser-based ultrasound sensors offer opportunities for use in photoacoustic microscopy. The researchers used a focused 532 nm nanosecond pulse laser to illuminate a sample and excite ultrasound signals. They place a sensor in a stationary position near the biological sample to detect optically induced ultrasound waves.

“By raster scanning the laser spot, we can obtain a photoacoustic image of the vessels and capillaries of a mouse’s ear,” Jin says. “This method can also be used to structurally image other tissues and functionally image oxygen distribution by using other excitation wavelengths—which takes advantage of the characteristic absorption spectra of different target tissues.” “The development of our laser sensor is very encouraging because of its potential for endoscopes and wearable applications,” Jin says. “But current commercial endoscopic products are typically millimeters in dimension, which can cause pain, and they don’t work well within hollow organs with limited space.”

Photoacoustic Imaging Could Enable Hard-to-Fool Biometrics

The technique of photoacoustic tomography (PAT), an acousto-optical hybrid technique that combines the sensitivity of optical imaging with the penetration of ultrasound, can produce breathtakingly detailed images of cells and tissues for biomedical study. Now, a U.S.-based research team offers what could be a practical way to put PAT into service in another area: biometric scanning to verify people’s identity (Appl. Opt., doi: 10.1364/AO.400550).

The researchers have built a compact prototype scanner that collects photoacoustic data from a test subject’s fingers, pipes the data into a sophisticated modeling algorithm, and spits out a detailed 3D image of the pattern of blood vessels inside the finger. Such patterns—inherently unique to the individual, and buried beneath the skin—are effectively impossible to “spoof” or falsify. And, the researchers report, initial studies suggest that the new compact system they’ve developed can correctly tag an identity as much as 99% of the time using this internal fingerprint.

PAT works by combining optical excitation with acoustic reporting. A near-infrared, ns-to-ps laser pulse is fired into the tissue to be examined. When the pulse strikes a molecule in the tissue of interest, such as a vein, the molecule jumps to an excited state; as it relaxes, some of the energy released by the molecule is in the form of heat. The sudden burst of heat creates a pressure wave inside the tissue that propagates at around 1500 m/s back out of the sample, where it can be read by an ultrasound transducer. The ultrasound signals resulting from repeated laser pulses are then sent to a computer for tomographic processing into a 3D image.
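Because the pressure wave starts at the absorber and travels one way to the transducer, its arrival time maps directly to distance. The sketch below uses the roughly 1500 m/s sound speed quoted above; the function name and the example delay are hypothetical, and the near-instantaneous arrival of the laser pulse is taken as time zero.

```python
SPEED_OF_SOUND_M_PER_S = 1500.0  # approximate value quoted in the text


def absorber_distance_mm(arrival_time_us, speed_m_per_s=SPEED_OF_SOUND_M_PER_S):
    """One-way distance from absorber to transducer for a given acoustic delay."""
    # The light arrives effectively instantly, so the whole delay is acoustic travel time.
    distance_m = speed_m_per_s * arrival_time_us * 1e-6
    return distance_m * 1e3


# A signal arriving 2 microseconds after the laser pulse (hypothetical delay).
print(f"{absorber_distance_mm(2.0):.2f} mm")
```

At this sound speed each microsecond of delay corresponds to about 1.5 mm of tissue, which is how the time axis of each recorded signal becomes a depth axis during reconstruction.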

Because different biological tissues absorb at different optical wavelengths, PAT can provide unusual specificity—operators can target the tissue to be examined by selecting the right wavelength for the excitation beam. And because the scattering of acoustic waves in tissue is orders of magnitude weaker than optical scattering, the ultrasound delivery of the final signal can allow for far better spatial resolution at depth than in purely optical techniques.

The idea of using finger vasculature as a potential biometric security tool has been broached before. But previous approaches have rested on purely ultrasound, optical or thermal techniques, and have provided only comparatively rough, 2D images. Recognizing that 3D images would provide a far higher level of security and unambiguous identification, the research team—including scientists at the State University of New York, Buffalo, USA, and NEC Laboratories America, USA—turned to PAT. In a previous study published in 2018, several members of the team prototyped a photoacoustic-imaging platform based on palm scanning.

The prototype system provided results comparable to conventional biometric approaches. However, the authors of the new study note that it was “bulky and inconvenient,” and prone to deteriorating results given small errors in the subject’s hand placement.

The use of a dichroic “cold” mirror that’s transparent to IR light but that reflects acoustic energy allowed a compact setup for the new system.

The researchers are now working on possible improvements to the system. They are even looking, eventually, at porting the system to smartphones, some of which, the authors note, already include ultrasound capabilities. In a press release accompanying the research, Giovanni Milione, a researcher at NEC Laboratories, sketched out some of the prospects the team is exploring. “We envision this technique being used in critical facilities, such as banks and military bases, that require a high level of security,” he said. “With further miniaturization, 3D vein authentication could also be used in personal electronics or be combined with 2D fingerprints for two-factor authentication.”

New Photoacoustic Airborne Sonar System Helps Image Underwater Objects

In December 2020, researchers at Stanford University reported an airborne photoacoustic imaging system that could aid in biological marine surveys and the search for sunken ships. The device is able to break through the threshold between air and water, which had previously been a barrier to aerial survey.

Techniques such as radar and lidar have proven useful for surveying land. Because water absorbs their signals, though, they do not support marine survey. Sonar has instead been the method of choice for the application, though a device reliant on that technology must be submerged, in a process that can be slow, expensive, and inefficient. Sound waves are unable to pass from air into water without losing the majority of their energy to reflection, and returning sound waves experience another energy loss by having to break through that threshold a second time.
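The size of the reflection loss at the air-water boundary can be estimated from the textbook normal-incidence formula for intensity transmission between two media with acoustic impedances Z1 and Z2: T = 4·Z1·Z2 / (Z1 + Z2)². The sketch below applies it with assumed round-number densities and sound speeds for air and water; these values are not taken from the Stanford paper.

```python
import math


def intensity_transmission(z1, z2):
    """Fraction of acoustic intensity transmitted across a boundary at normal incidence."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2


# Acoustic impedance Z = density * sound speed (assumed nominal values).
z_air = 1.2 * 343.0        # kg/m^3 * m/s
z_water = 1000.0 * 1480.0  # kg/m^3 * m/s

transmitted = intensity_transmission(z_air, z_water)
loss_db = 10.0 * math.log10(transmitted)
print(f"Transmitted fraction: {transmitted:.2e} ({loss_db:.1f} dB per crossing)")
```

With these assumptions only about a tenth of a percent of the acoustic intensity crosses the interface, roughly a 30 dB loss per crossing, which is why avoiding one of the two crossings matters.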

To overcome these obstacles, the Stanford researchers played to the strengths of each medium: sound and light. “If we can use light in the air, where light travels well, and sound in the water, where sound travels well, we can get the best of both worlds,” said first author Aidan Fitzpatrick, a Stanford graduate student in electrical engineering.

The researchers turned toward photoacoustics, creating the Photoacoustic Airborne Sonar System (PASS). In the system, a laser fires at water, where its beam is absorbed, creating ultrasound waves that propagate down through the water column and reflect off submerged objects in a direction toward the surface.

Because the ultrasound waves are generated in the water itself by the laser striking the surface, they lose energy to reflection at the air-water boundary only once, on the return trip.

“We have developed a system that is sensitive enough to compensate for a loss of this magnitude and still allow for signal detection and imaging,” said study leader and associate professor of electrical engineering Amin Arbabian. Transducers record the returning waves, which are then interpreted by software that reconstructs the signals into a three-dimensional image.

“Similar to how light refracts, or ‘bends’ when it passes through water or any medium denser than air, ultrasound also refracts,” Arbabian said. “Our image reconstruction algorithms correct for this bending that occurs when the ultrasound waves pass from the water into the air.”
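The bending described in the quote follows Snell's law for sound: sin(θ_air) / c_air = sin(θ_water) / c_water. The sketch below computes the refracted angle for a ray leaving the water. It is only an illustration of the geometry, not the team's reconstruction algorithm, and the sound speeds are assumed nominal values.

```python
import math

C_WATER_M_PER_S = 1480.0  # assumed nominal sound speed in water
C_AIR_M_PER_S = 343.0     # assumed nominal sound speed in air


def refracted_angle_in_air_deg(angle_in_water_deg):
    """Angle from the surface normal after an ultrasound ray passes from water into air."""
    sin_air = (C_AIR_M_PER_S / C_WATER_M_PER_S) * math.sin(math.radians(angle_in_water_deg))
    # Sound is slower in air, so sin_air < 1 for every incidence angle below 90 degrees.
    return math.degrees(math.asin(sin_air))


for angle in (0.0, 15.0, 30.0, 60.0):
    print(f"{angle:5.1f} deg in water -> {refracted_angle_in_air_deg(angle):5.2f} deg in air")
```

Because sound travels more slowly in air than in water, rays bend strongly toward the surface normal as they exit, so an airborne receiver sees a compressed range of arrival angles that the reconstruction has to undo.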

To date, the system has only been used in a container the size of a large fish tank. “Current experiments use static water, but we are currently working toward dealing with water waves,” Fitzpatrick said. “This is a challenging but, we think, feasible problem.”

The researchers believe that the technology will eventually be able to compete with sonar systems that can reach depths of hundreds to thousands of meters. They next plan to test the device in larger settings and, eventually, in an open-water environment.

“Our vision for this technology is on board a helicopter or drone,” Fitzpatrick said. “We expect the system to be able to fly at tens of meters above the water.” The research was published in IEEE Access (www.doi.org/10.1109/ACCESS.2020.3031808).

References and Resources also include:

https://www.photonics.com/Articles/Photoacoustic_Tech_Allows_Airborne_Underwater/a66506

International Defense Security & Technology Your trusted Source for News, Research and Analysis
