Introduction

In the dynamic landscape of operating systems, Linux stands as a resilient and influential force. Born from the passion of Linus Torvalds in 1991, Linux has since grown into a diverse and robust ecosystem of operating systems that continue to shape the technology world. In this article, we will delve into the world of Linux operating systems, celebrating their versatility, open-source nature, and security benefits. We will also highlight a compelling example of how Linux has been embraced by the Indian Defense Ministry for enhanced security.

An operating system serves as a crucial bridge between a computer’s hardware and its users. It’s a collection of software components that not only manage the computer’s hardware resources but also provide essential services for software programs to run smoothly. Essentially, an operating system acts as a foundation for a user to execute programs conveniently and efficiently.

In addition to facilitating program execution, the operating system plays a pivotal role in maintaining the health and stability of a computer system. It allocates resources among users and programs, detects errors, and takes measures to prevent system failures. It also handles file management, keeping data organized and accessible.

Initially designed for personal computers using the Intel x86 architecture, Linux has since been adapted to a multitude of platforms, making it one of the most versatile operating systems. Its dominance is further highlighted by its presence on smartphones, primarily through Android, and its widespread use on servers, where it powers about 96.4% of the top 1 million web servers. Linux also runs on every one of the TOP500 supercomputers.

Understanding the Basics

Before we explore the rich diversity of Linux distributions, let’s clarify what Linux actually is. Linux is an open-source, Unix-like operating system kernel. It serves as the core component of an operating system, managing hardware resources and acting as an interface between software applications and the hardware. It’s important to note that a complete operating system typically combines the Linux kernel with a collection of software utilities, libraries, and user interfaces to create a functional computing environment.

The Open Source Philosophy

One of the fundamental principles of Linux is its open-source nature. Unlike proprietary operating systems such as Microsoft Windows or macOS, most Linux distributions are freely available, and their source code is open for anyone to inspect, modify, and distribute.

This openness has fostered a vast, vibrant community of developers, contributors, and enthusiasts who collaborate to create, maintain, and improve Linux distributions. Their continuous work encourages innovation and results in secure, cost-effective, and reliable operating systems. Linux’s modular and easily configurable design lets it perform well on a wide range of hardware platforms, and its security features make it less exposed to common malware, reducing the need for antivirus software.

Linux Architecture

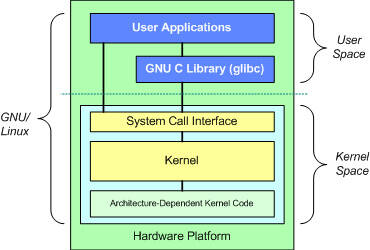

Linux architecture revolves around two primary spaces: User Space and Kernel Space. These components interact through a System Call Interface, a well-established interface enabling userspace applications to communicate with the kernel.

Key elements include:

- Hardware Layer: This is the lowest level, comprising the physical electronic devices within a computer, such as the CPU, RAM, storage, and peripherals.

- Kernel: Linux’s core, responsible for critical operations like memory management, process management, device driver management, system calls, and security. It acts as the intermediary between software applications and hardware.

- System Library: This layer interfaces with the kernel, abstracting its complexities from users. It includes shared libraries like libc and system services running in the background, often referred to as daemons.

- Applications: The software that allows users to perform tasks within the Linux system, such as compilers, programming languages, shells, and user apps.

- Shell: The Linux shell interprets commands, enabling users to access files, utilities, applications, and perform automation tasks. It comes in two main types: command-line and graphical.

- System Utility: These provide essential functionalities for system administrators, facilitating file management, network configuration, and more.

- Text Editors: Tools for writing and editing code, available as command-line (e.g., vi, nano) or GUI-based (e.g., gedit, emacs) options.

Installing Software and Updates: Both software updates and installation files for Linux operating systems are distributed as packages. These packages are archive files containing the components required either to install new software or to update existing software. You use package managers to manage the download and installation of packages. Different Linux distros provide different package managers; some are GUI-based and some are command-line tools.

The two most common package formats, .deb and .rpm, are distinct file types intended for different families of Linux distributions. .deb files are used by Debian-based distributions such as Debian, Ubuntu, and Mint; "deb" is short for Debian. .rpm files are used by Red Hat-based distributions such as CentOS/RHEL and Fedora, as well as by openSUSE. RPM originally stood for Red Hat Package Manager and now stands for the recursive "RPM Package Manager".
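As a rough illustration of how distinct the two formats are, the minimal Python sketch below guesses a package’s format from its first bytes. It assumes the well-known signatures: a .deb file is an ar archive that begins with "!<arch>\n", and an RPM file begins with the lead magic bytes ED AB EE DB. The function name package_format is hypothetical, and a magic-byte check cannot tell a .deb apart from other ar archives (such as static libraries), so treat it as a heuristic rather than a validator.

```python
import tempfile

DEB_MAGIC = b"!<arch>\n"          # .deb packages are ar archives
RPM_MAGIC = b"\xed\xab\xee\xdb"   # magic number at the start of an RPM package's lead

def package_format(path):
    """Guess a package's format from its first bytes: 'deb', 'rpm', or 'unknown'."""
    with open(path, "rb") as f:
        head = f.read(8)
    if head.startswith(DEB_MAGIC):
        return "deb"
    if head.startswith(RPM_MAGIC):
        return "rpm"
    return "unknown"

if __name__ == "__main__":
    # Build small fake files so the sketch runs without real packages.
    for magic in (DEB_MAGIC, RPM_MAGIC, b"plain text"):
        with tempfile.NamedTemporaryFile() as f:
            f.write(magic)
            f.flush()
            print(package_format(f.name))
```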

On Debian-based distributions, you use the “sudo apt update” command to refresh the local index of packages available from your configured repositories. Its output shows each repository index it fetches, reports that it is building the dependency tree, and tells you how many installed packages can be upgraded. To install the available upgrades, use the “sudo apt upgrade” command.

Because deb and RPM packages carry broadly similar contents (program files plus metadata and installation scripts), a package built for one family can often be converted for use on the other, although converted packages do not always install or run cleanly. If you find that a package you want to use is only available in the other format, you can convert it using the alien tool. By default, alien converts packages to the deb format: simply run the alien command and specify the package file that you want to convert. To convert to RPM format instead, use the -r switch with the alien command.

GUI-based Linux package managers include PackageKit and Update Manager. Update Manager is a GUI tool for updating deb-based Linux systems. PackageKit, which provides GUI front ends, is commonly used for updating RPM-based Linux systems, although it supports other package formats as well.

Linux Design Principles

GNU/Linux is a multi-user OS. This means not merely that several users can use a single machine, each having their own customized environments, but that several users may use a single machine at the same time, either by logging on from a terminal or another computer, or by leaving a running process after logging out.

In a multiuser system, each user has a private space on the machine: typically a quota of disk space for storing files, private mail messages, and so on. Every user is identified by a unique number called the User ID, or UID. To share material selectively with other users, each user is a member of one or more user groups, each identified by a unique number called a group ID (GID). Each file is associated with exactly one group.
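User and group IDs can be inspected directly from a running program. The minimal Python sketch below uses the standard os and stat modules to report the current process’s UID, primary GID, and supplementary groups, and then shows that a file carries exactly one owning UID and one GID. The helper names describe_identity and file_owner are illustrative, not part of any standard tool.

```python
import os
import stat
import tempfile

def describe_identity():
    """Return the calling process's user ID, primary group ID, and supplementary group IDs."""
    return {
        "uid": os.getuid(),        # real user ID of this process
        "gid": os.getgid(),        # real primary group ID
        "groups": os.getgroups(),  # supplementary group IDs
    }

def file_owner(path):
    """Return (owner UID, group GID, ls-style permission string) for a file."""
    st = os.stat(path)
    return st.st_uid, st.st_gid, stat.filemode(st.st_mode)

if __name__ == "__main__":
    print(describe_identity())
    # A new file is owned by the creating process's effective user and has exactly one group.
    with tempfile.NamedTemporaryFile() as f:
        print(file_owner(f.name))
```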

Linux is a multiprocessing operating system. Processes carry out tasks within the operating system. A program is a set of machine code instructions and data stored in an executable image on disk and is, as such, a passive entity; a process can be thought of as a computer program in action.

The Linux kernel exists in kernel space, below user space, which is where users’ applications are executed. Kernel code runs in a special privileged mode known as kernel mode, with complete access to all of the computer’s resources. Because the kernel runs as a single program in one address space, its components can call each other directly without context switches, which makes it fast and efficient. The kernel runs all processes, provides system services to them, and gives them secure access to the hardware.

Architecturally, the Linux kernel consists of the system call interface, process management, the virtual file system, memory management, the network stack, architecture-dependent code, and the device drivers.

For user space to communicate with kernel space, most Linux systems use the GNU C Library (glibc), which provides the functions that invoke the system call interface, transition into kernel space, and return to user space. A library contains functions that applications work with; through the system call interface, those library functions instruct the kernel to perform the tasks the application requests.

System calls: Applications pass information to the kernel through system calls. The system call is the means by which a process requests a specific kernel service. There are several hundred system calls, which can be roughly grouped into six categories: filesystem, process, scheduling, interprocess communication, socket (networking), and miscellaneous.
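Python’s os module exposes thin wrappers around several filesystem-category system calls, which makes the request-a-kernel-service pattern easy to see. This minimal sketch, with the hypothetical helper name syscall_roundtrip, writes bytes to a new file and reads them back using os.open, os.write, os.lseek, os.read, and os.close. Running such a script under strace should show the corresponding calls (glibc typically issues openat(2) for an open).

```python
import os
import tempfile

def syscall_roundtrip(data):
    """Write data to a new file and read it back using thin system-call wrappers."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.bin")
        fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)  # open(2): returns a file descriptor
        try:
            os.write(fd, data)               # write(2)
            os.lseek(fd, 0, os.SEEK_SET)     # lseek(2): rewind to the start of the file
            return os.read(fd, len(data))    # read(2)
        finally:
            os.close(fd)                     # close(2)

if __name__ == "__main__":
    print("pid:", os.getpid())               # getpid(2), a process-category call
    print(syscall_roundtrip(b"hello kernel"))
```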

Process management: This subsystem executes processes. Inside the kernel, processes are referred to as threads, and each one represents an individual virtualization of the processor. Processes are separate tasks, each with its own rights and responsibilities. If one process crashes, it does not cause other processes in the system to crash. Each process runs in its own virtual address space and can interact with other processes only through secure, kernel-managed mechanisms.

During the lifetime of a process, it will use many system resources. It will use the CPUs in the system to run its instructions and the system’s physical memory to hold it and its data. It will open and use files within the filesystems and may directly or indirectly use the physical devices in the system. Linux must keep track of the process itself and of the system resources that it has so that it can manage it and the other processes in the system fairly. It would not be fair to the other processes in the system if one process monopolized most of the system’s physical memory or its CPUs.

The most precious resource in the system is the CPU. Linux is a multiprocessing operating system; its objective is to keep a process running on each CPU in the system at all times to maximize CPU utilization. If there are more processes than CPUs (and there usually are), the remaining processes must wait until a CPU becomes free before they can run.
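To make the waiting concrete, here is a toy round-robin simulation in Python. It is a deliberately simplified stand-in, not Linux’s actual scheduler, which uses a far more sophisticated fair-scheduling policy; the function round_robin, its parameters, and the fixed time quantum are illustrative assumptions.

```python
from collections import deque

def round_robin(bursts, num_cpus, quantum):
    """Toy round-robin scheduler (not Linux's real scheduler).

    bursts: dict mapping process name -> CPU time units it needs.
    Each tick, up to num_cpus processes from the front of the ready queue run
    for one quantum; unfinished ones rejoin the back of the queue, so when there
    are more processes than CPUs the rest wait their turn.
    Returns the order in which processes finish.
    """
    remaining = dict(bursts)
    ready = deque(bursts)            # ready queue in arrival order
    finished = []
    while ready:
        # Pick up to num_cpus processes to run during this tick.
        running = [ready.popleft() for _ in range(min(num_cpus, len(ready)))]
        for name in running:
            remaining[name] -= quantum
        for name in running:
            if remaining[name] <= 0:
                finished.append(name)
            else:
                ready.append(name)   # preempted: back of the queue
    return finished

if __name__ == "__main__":
    print(round_robin({"A": 3, "B": 1, "C": 2}, num_cpus=2, quantum=1))  # ['B', 'C', 'A']
```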

Signals: The kernel uses signals to call into a process. For example, signals are used to notify a process of certain faults, such as division by zero.
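A process can register a handler that the kernel invokes when a signal arrives. The minimal Python sketch below installs a handler for SIGUSR1 (a signal reserved for user-defined purposes), sends that signal to itself with os.kill, and then restores the previous handler. The helper name send_self_signal is hypothetical; note that Python reports division by zero as a ZeroDivisionError exception rather than through a signal, so the sketch uses SIGUSR1 instead.

```python
import os
import signal
import time

def send_self_signal():
    """Deliver SIGUSR1 to this process and return the names of signals the handler saw."""
    received = []

    def handler(signum, frame):
        # Called by Python's signal machinery after the kernel delivers the signal.
        received.append(signal.Signals(signum).name)

    previous = signal.signal(signal.SIGUSR1, handler)
    try:
        os.kill(os.getpid(), signal.SIGUSR1)     # kill(2): send the signal to ourselves
        # Python runs the handler shortly after delivery; wait briefly to be safe.
        deadline = time.monotonic() + 1.0
        while not received and time.monotonic() < deadline:
            time.sleep(0.01)
    finally:
        signal.signal(signal.SIGUSR1, previous)  # restore the old disposition
    return received

if __name__ == "__main__":
    print(send_self_signal())
```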

Memory management: For efficiency, memory is managed in units known as pages. Linux includes methods for managing the available memory as well as the hardware mechanisms for physical and virtual mappings. Swap space is also provided.
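Paging means every virtual address splits into a page number, which the hardware translates through page tables, and an offset within that page, which is kept as-is. The sketch below shows that arithmetic in Python, assuming a page size queried from mmap.PAGESIZE (commonly 4096 bytes on x86-64). The helpers split_address and pages_needed are illustrative; real translation on x86-64 walks multi-level page tables.

```python
import mmap

PAGE_SIZE = mmap.PAGESIZE  # page size reported by the OS, commonly 4096 bytes

def split_address(addr, page_size=PAGE_SIZE):
    """Split a virtual address into (page number, offset within the page)."""
    return addr // page_size, addr % page_size

def pages_needed(num_bytes, page_size=PAGE_SIZE):
    """Number of whole pages required to hold num_bytes (rounding up)."""
    return -(-num_bytes // page_size)

if __name__ == "__main__":
    print("page size:", PAGE_SIZE)
    print(split_address(0x12345, 4096))   # (18, 837), i.e. page 0x12, offset 0x345
    print(pages_needed(10_000, 4096))     # 3
```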

File systems: The file system layer provides a global, hierarchical namespace for files, directories, and other file-related objects, along with file system functions. The Linux filesystem is the collection of files on your machine. It includes the files needed to run the machine and its applications as well as your own files containing your work. The top level of the filesystem is the root directory, symbolized by a forward slash (/). Below it is a tree-like structure of the system’s directories and files, and the filesystem assigns appropriate access rights to each directory and file.

The root directory at the very top of the Linux filesystem contains many other directories and files. One of the key directories is /bin, which contains user binary files. Binary files contain the code your machine reads to run programs and execute commands. It’s called “slash bin” to signify that it exists directly below the root directory. Other key directories include /usr, which contains user programs; /home, which holds each user’s personal home directory, where you should store all your personal files; /boot, which contains your system boot files, the instructions vital for system startup; and /media, which holds the mount points for removable media, such as CDs or USB drives, connected to the system.
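The tree structure and per-entry access rights can be explored with Python’s standard library. In this minimal sketch, path_components shows the chain of directories walked from the root, and list_directory_modes prints the ls-style permission string of each entry; both helper names are illustrative. It uses lstat so that symbolic links are reported as links, which matters on merged-/usr distributions where /bin is a link to usr/bin.

```python
import os
import stat
from pathlib import PurePosixPath

def path_components(path):
    """Split an absolute path into the chain of names walked from the root (/)."""
    return PurePosixPath(path).parts

def list_directory_modes(directory="/"):
    """Map each entry in a directory to its ls-style permission string."""
    modes = {}
    for name in sorted(os.listdir(directory)):
        try:
            # lstat does not follow symlinks, so a /bin -> usr/bin link shows up as 'l...'.
            st = os.lstat(os.path.join(directory, name))
        except OSError:
            continue   # entry vanished or is unreadable; skip it
        modes[name] = stat.filemode(st.st_mode)
    return modes

if __name__ == "__main__":
    print(path_components("/usr/bin/ls"))   # ('/', 'usr', 'bin', 'ls')
    for name, mode in list_directory_modes("/").items():
        print(mode, "/" + name)
```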

Virtual file system: The VFS provides a standard interface abstraction for file systems. It acts as a switching layer between the system call interface and the file systems supported by the kernel.
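The switching-layer idea can be modeled as a common interface plus a mount table that routes each path to the filesystem mounted at its longest matching prefix. The Python sketch below is a toy model under that assumption, not the kernel’s VFS: the classes FileSystem, DictFS, GeneratedFS, and VFS are hypothetical names, with DictFS standing in for a disk filesystem and GeneratedFS for a /proc-style filesystem whose contents are produced on each read.

```python
from abc import ABC, abstractmethod

class FileSystem(ABC):
    """The common interface every (toy) filesystem driver implements."""

    @abstractmethod
    def read(self, relpath):
        """Return the contents of relpath, relative to this filesystem's mount point."""

class DictFS(FileSystem):
    """Hypothetical disk-like filesystem: contents stored in a dict."""

    def __init__(self, files):
        self.files = files

    def read(self, relpath):
        return self.files[relpath]

class GeneratedFS(FileSystem):
    """Hypothetical /proc-like filesystem: contents are generated on each read."""

    def read(self, relpath):
        return f"generated:{relpath}"

class VFS:
    """Switching layer: routes a path to the filesystem at the longest matching mount point."""

    def __init__(self):
        self.mounts = {}   # mount point (no trailing slash, except "/") -> FileSystem

    def mount(self, point, fs):
        self.mounts[point] = fs

    def read(self, path):
        candidates = [p for p in self.mounts
                      if p == "/" or path == p or path.startswith(p + "/")]
        point = max(candidates, key=len)   # most specific mount wins; ValueError if none
        relpath = path[len(point):].lstrip("/")
        return self.mounts[point].read(relpath)

if __name__ == "__main__":
    vfs = VFS()
    vfs.mount("/", DictFS({"etc/hostname": "myhost"}))
    vfs.mount("/proc", GeneratedFS())
    print(vfs.read("/etc/hostname"))   # myhost
    print(vfs.read("/proc/uptime"))    # generated:uptime
```

Callers only ever use VFS.read; which driver answers is decided by the mount table, which is the essence of the switching layer described above.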

Network stack: The network stack uses a layered architecture modeled on the protocols themselves. Network protocols: Supports the Sockets interface to users for the TCP/IP protocol suite. Network device drivers: Manages network interface cards and communications ports that connect to network devices, such as bridges and routers.
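The Sockets interface can be exercised from Python’s socket module, which wraps the kernel’s socket system calls. This minimal sketch, assuming only the loopback address 127.0.0.1 and a port chosen by the kernel, sends a message over TCP to a one-shot echo server running in a background thread. The helper name echo_once is hypothetical.

```python
import socket
import threading

def echo_once(message):
    """Send message over TCP on the loopback interface and return what the server echoes."""
    with socket.create_server(("127.0.0.1", 0)) as server:   # port 0: the kernel picks a free port
        port = server.getsockname()[1]

        def serve():
            conn, _ = server.accept()          # accept(2): wait for the client
            with conn:
                conn.sendall(conn.recv(1024))  # echo the bytes straight back

        worker = threading.Thread(target=serve)
        worker.start()
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(message)
            # One recv is enough for a short message on loopback; real code should loop.
            reply = client.recv(1024)
        worker.join()
    return reply

if __name__ == "__main__":
    print(echo_once(b"ping"))
```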

Processes and scheduler: Creates, manages, and schedules processes.

Virtual memory: Allocates and manages virtual memory for processes.

Traps and faults: Handles traps and faults generated by the processor, such as a memory fault.

Physical memory: Manages the pool of page frames in real memory and allocates pages for virtual memory.

Interrupts: Handles interrupts from peripheral devices. As the name suggests, interrupt signals provide a way to divert the processor to code outside the normal flow of control. When an interrupt signal arrives, the CPU must stop what it’s currently doing and switch to a new activity; it does this by saving the current value of the program counter (i.e., the content of the eip and cs registers) in the Kernel Mode stack and by placing an address related to the interrupt type into the program counter.

Device drivers: A significant part of the Linux kernel’s source code is in the device drivers that make particular hardware devices usable. In Linux environments, programmers can build device drivers as part of the kernel, separately as loadable modules, or as user-mode drivers (for certain types of devices where kernel interfaces exist, such as USB devices).

Architecture-dependent code: The elements that depend on the architecture on which they run, and hence must take the architectural design into account for normal operation and efficiency.

Linux kernels can have much of their functionality, such as device drivers and filesystems, packaged as modules that are inserted into the kernel dynamically. Although Linux remains a monolithic kernel, this loadable-module support gives it much of the flexibility of a modular kernel: modules can be loaded and unloaded automatically on demand.

In essence, a module is an object file whose code can be linked to and unlinked from the kernel at runtime. Typically, a module implements a specific function, such as a filesystem, a device driver, or some other feature of the kernel’s upper layer. This setup allows a user to load or replace modules in a running kernel without rebooting. A module does not execute as its own process or thread, although it can create kernel threads.
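On a running system, the kernel lists loaded modules in /proc/modules, which is the file lsmod formats into a table (modules themselves are loaded and removed with tools such as modprobe). The Python sketch below parses that text format, assuming its usual whitespace-separated columns: name, size in bytes, use count, the modules using this one ("-" if none), and state. The Module type and parse_proc_modules are illustrative names, and the test input is a made-up sample rather than output from a real machine.

```python
from typing import List, NamedTuple

class Module(NamedTuple):
    name: str
    size: int           # memory used by the module, in bytes
    use_count: int      # number of current users (lsmod's "Used by" count)
    used_by: List[str]  # modules that depend on this one
    state: str          # Live, Loading, or Unloading

def parse_proc_modules(text):
    """Parse the text format of /proc/modules (the file lsmod reads)."""
    modules = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue                       # skip blank or malformed lines
        name, size, use_count, used_by, state = fields[:5]
        deps = [] if used_by == "-" else [d for d in used_by.split(",") if d]
        modules.append(Module(name, int(size), int(use_count), deps, state))
    return modules

if __name__ == "__main__":
    try:
        with open("/proc/modules") as f:   # present on Linux hosts
            for m in parse_proc_modules(f.read())[:5]:
                print(m.name, m.size, m.state)
    except OSError as exc:
        print("could not read /proc/modules:", exc)
```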

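The Linux kernel recognizes three classes of devices: block, character, and network devices. Block and character devices are exposed to user space through special files (device nodes), typically under /dev; network devices are the exception, since they are accessed through named network interfaces rather than device files.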
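A device file records whether it is a block or character device, plus a major number (identifying the driver) and a minor number (identifying the specific device). This minimal Python sketch reads those fields with os.stat, stat.S_ISCHR/S_ISBLK, os.major, and os.minor; describe_device is an illustrative helper name. On Linux, /dev/null is a character device with major 1 and minor 3.

```python
import os
import stat

def describe_device(path):
    """Return (kind, major, minor) for a device file, or None if path is not a device."""
    st = os.stat(path)
    if stat.S_ISCHR(st.st_mode):
        kind = "character"
    elif stat.S_ISBLK(st.st_mode):
        kind = "block"
    else:
        return None
    # st_rdev encodes the device number; major selects the driver, minor the device.
    return kind, os.major(st.st_rdev), os.minor(st.st_rdev)

if __name__ == "__main__":
    print(describe_device("/dev/null"))   # ('character', 1, 3) on Linux
    print(describe_device("/"))           # None: a directory is not a device file
```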

Diversity in Linux Distributions

Linux’s success is closely tied to the Free Software Foundation’s commitment to creating stable, platform-independent software that users embrace. The GNU project, for instance, supplies developers with essential tools, and the GNU General Public License (GPL) keeps the software’s source code open, which supports its quality and community acceptance.

Linux offers a remarkable variety of distributions, often referred to as “distros,” each tailored to different needs and preferences. Here are a few noteworthy examples:

- Ubuntu: Known for its user-friendliness, Ubuntu is an excellent choice for beginners. It offers a clean and intuitive desktop environment, extensive software repositories, and long-term support (LTS) releases for stability.

- Fedora: Embracing cutting-edge technologies, Fedora is favored by developers. It serves as a testing ground for new features that often find their way into other distributions.

- Debian: Renowned for its stability and commitment to free software, Debian is the foundation for several other Linux distributions, including Ubuntu.

- Arch Linux: Designed for users who want complete control over their system, Arch Linux provides a minimalistic base for users to build upon according to their preferences.

- Linux Mint: Focused on providing a familiar desktop experience, Linux Mint is known for its ease of use and aesthetics. It’s an excellent choice for those transitioning from Windows.

Linux also takes a consistent approach to software installation and updates, using package managers to handle the distribution of software packages. Popular package formats include .deb (Debian-based) and .rpm (Red Hat-based). Package managers such as apt and yum (succeeded by dnf on recent Fedora and RHEL releases) manage these packages, keeping software up to date and resolving dependencies.

Linux boot and startup processes

There are two sequences of events that are required to boot a Linux computer and make it usable: boot and startup. The boot sequence starts when the computer is turned on, and is completed when the kernel is initialized and systemd is launched. The startup process then takes over and finishes the task of getting the Linux computer into an operational state.

The first step of the Linux boot process really has nothing whatever to do with Linux. This is the hardware portion of the boot process and is the same for any operating system. When power is first applied to the computer it runs the POST (Power On Self Test) which is part of the BIOS (Basic I/O System).

BIOS POST checks the basic operability of the hardware and then issues a BIOS interrupt, INT 19h (the bootstrap loader service, which reads disks through the INT 13h disk services), to locate the boot sectors on any attached bootable devices. The first boot sector it finds that contains a valid boot record is loaded into RAM, and control is then transferred to the code that was loaded from the boot sector.

The boot sector is really the first stage of the boot loader. Most Linux distributions use one of three boot loaders: GRUB, GRUB2, or LILO. GRUB2 is the newest and is now used far more often than the older options.

The primary function of either version of GRUB is to get the Linux kernel loaded into memory and running. The bootstrap code, i.e., GRUB2 stage 1, is very small because it must fit into the first 512-byte sector of the hard drive along with the partition table. Because the boot record is so small, it is not very smart and does not understand filesystem structures. Therefore, the sole purpose of stage 1 is to locate and load stage 1.5.
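The layout of that first 512-byte sector (the classic MBR) is fixed: bytes 0 to 445 hold the stage 1 boot code, bytes 446 to 509 hold four 16-byte partition entries, and bytes 510 and 511 must contain the signature 0x55 0xAA for the boot record to be considered valid. The Python sketch below parses a sector under those assumptions; parse_mbr is an illustrative name, and the demo builds a synthetic sector instead of reading a real disk, which would require root access.

```python
import struct

SECTOR_SIZE = 512
PARTITION_TABLE_OFFSET = 446   # bytes 0-445 hold the stage 1 boot code
PARTITION_ENTRY_SIZE = 16      # four 16-byte primary partition entries
SIGNATURE = b"\x55\xaa"        # bytes 510-511 of a valid boot record

def parse_mbr(sector):
    """Return (has_valid_signature, list of non-empty partition entries)."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("an MBR is exactly one 512-byte sector")
    valid = sector[510:512] == SIGNATURE
    partitions = []
    for i in range(4):
        start = PARTITION_TABLE_OFFSET + i * PARTITION_ENTRY_SIZE
        entry = sector[start:start + PARTITION_ENTRY_SIZE]
        status, ptype = entry[0], entry[4]   # 0x80 = bootable; type 0 = unused slot
        first_lba, num_sectors = struct.unpack_from("<II", entry, 8)  # little-endian 32-bit
        if ptype != 0:
            partitions.append({"bootable": status == 0x80, "type": ptype,
                               "first_lba": first_lba, "sectors": num_sectors})
    return valid, partitions

if __name__ == "__main__":
    # Build a synthetic sector rather than reading a real disk.
    demo = bytearray(SECTOR_SIZE)
    demo[446] = 0x80                         # mark entry 0 bootable
    demo[446 + 4] = 0x83                     # partition type 0x83 = Linux
    struct.pack_into("<II", demo, 446 + 8, 2048, 1_000_000)
    demo[510:512] = SIGNATURE
    print(parse_mbr(bytes(demo)))
```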

Stage 1.5 of GRUB must be located in the space between the boot record itself and the first partition on the disk drive. This space was left unused historically for technical reasons. Because of the larger amount of code that can be accommodated for stage 1.5, it can have enough code to contain a few common filesystem drivers, such as the standard EXT and other Linux filesystems, FAT, and NTFS. The function of stage 1.5 is to begin execution with the filesystem drivers necessary to locate the stage 2 files in the /boot filesystem and load the needed drivers.

The function of GRUB2 stage 2 is to locate and load a Linux kernel into RAM and turn control of the computer over to the kernel. The kernel and its associated files are located in the /boot directory. The kernel files are identifiable as they are all named starting with vmlinuz. You can list the contents of the /boot directory to see the currently installed kernels on your system.
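Listing /boot with ls is the usual way to see installed kernels; the same check can be scripted. This minimal Python sketch, with the hypothetical helper name installed_kernels, returns the files in a boot directory whose names start with vmlinuz. It uses a plain string sort, which is not a true version sort.

```python
from pathlib import Path

def installed_kernels(boot_dir="/boot"):
    """Return names of kernel images (files starting with 'vmlinuz') in boot_dir."""
    boot = Path(boot_dir)
    if not boot.is_dir():
        return []
    # Plain string sort, not a true version sort (e.g. 6.10 sorts before 6.9).
    return sorted(p.name for p in boot.glob("vmlinuz*") if p.is_file())

if __name__ == "__main__":
    for name in installed_kernels():
        print(name)
```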

All of the kernels are stored in a self-extracting, compressed format to save space. Alongside them, the /boot directory holds an initial RAM disk image and device maps of the hard drives.

After the selected kernel is loaded into memory and begins executing, it must first extract itself from the compressed version of the file before it can perform any useful work. Once the kernel has extracted itself, it loads systemd, which is the replacement for the old SysV init program, and turns control over to it.

This is the end of the boot process. At this point, the Linux kernel and systemd are running but unable to perform any productive tasks for the end user because nothing else is running.

The startup process

The startup process follows the boot process and brings the Linux computer up to an operational state in which it is usable for productive work. systemd is the mother of all processes and it is responsible for bringing the Linux host up to a state in which productive work can be done.

First, systemd mounts the filesystems defined in /etc/fstab and activates any swap files or partitions listed there. At this point, it can access the configuration files located in /etc, including its own. It uses its configuration file, /etc/systemd/system/default.target, to determine the state, or target, into which it should boot the host.
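Each non-comment line of /etc/fstab describes one filesystem with six whitespace-separated fields: device, mount point, filesystem type, mount options, dump flag, and fsck pass number (the last two are optional and default to 0). The Python sketch below parses that format so you can see what systemd will mount; FstabEntry and parse_fstab are illustrative names, and the sketch ignores details such as octal escapes (\040) for spaces in paths.

```python
from typing import List, NamedTuple

class FstabEntry(NamedTuple):
    device: str         # what to mount: UUID=..., LABEL=..., a device path, or a pseudo-fs name
    mount_point: str    # where to attach it ("none" or "swap" for swap entries)
    fs_type: str        # ext4, xfs, swap, tmpfs, ...
    options: List[str]  # comma-separated mount options
    dump: int           # used by the dump backup utility; usually 0
    passno: int         # fsck order at boot: 0 = skip, 1 = root filesystem, 2 = others

def parse_fstab(text):
    """Parse /etc/fstab-style text into entries, skipping comments and blank lines."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        fields += ["0"] * (6 - len(fields))   # dump and pass fields are optional (default 0)
        device, mount_point, fs_type, options, dump, passno = fields[:6]
        entries.append(FstabEntry(device, mount_point, fs_type,
                                  options.split(","), int(dump), int(passno)))
    return entries

if __name__ == "__main__":
    try:
        with open("/etc/fstab") as f:   # may be minimal or absent inside containers
            for entry in parse_fstab(f.read()):
                print(entry.mount_point, entry.fs_type, entry.options)
    except FileNotFoundError:
        print("no /etc/fstab on this system")
```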

The sysinit.target starts up all of the low-level services and units required for the system to be marginally functional and that are required to enable moving on to the basic.target.

After the sysinit.target is fulfilled, systemd next starts the basic.target, starting all of the units required to fulfill it. The basic target provides some additional functionality by starting units that are required for the next target. These include setting up things like paths to various executable directories, communication sockets, and timers.
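The ordering of targets can be pictured as a dependency graph that is resolved before anything starts. The Python sketch below is a simplified model, not how systemd actually schedules units (systemd starts independent units in parallel and distinguishes requirement from ordering): it assumes a hypothetical TARGET_REQUIRES map in which sysinit.target comes before basic.target, followed by the standard multi-user.target and graphical.target, and uses graphlib.TopologicalSorter (Python 3.9+) to produce an activation order for a given default target.

```python
from graphlib import TopologicalSorter

# Simplified, hypothetical dependency map: each target lists what must be reached first.
TARGET_REQUIRES = {
    "sysinit.target": set(),
    "basic.target": {"sysinit.target"},
    "multi-user.target": {"basic.target"},
    "graphical.target": {"multi-user.target"},
}

def activation_order(default_target, requires=TARGET_REQUIRES):
    """Return the targets needed for default_target, dependencies first (toy model)."""
    # Collect every target reachable from the default target.
    needed, stack = set(), [default_target]
    while stack:
        target = stack.pop()
        if target not in needed:
            needed.add(target)
            stack.extend(requires.get(target, ()))
    # TopologicalSorter takes node -> predecessors and yields predecessors first.
    graph = {t: requires.get(t, set()) & needed for t in needed}
    return list(TopologicalSorter(graph).static_order())

if __name__ == "__main__":
    print(activation_order("multi-user.target"))
    # ['sysinit.target', 'basic.target', 'multi-user.target']
```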

Overall, Linux embodies core principles of multitasking, multiprocessing, and providing a robust environment for users, developers, and administrators. Its boot and startup processes, managed by systemd, ensure that the system is initialized correctly, enabling productive work to commence. Linux’s architectural design, modular components, and vibrant community continue to drive its widespread adoption and success in the technology world.

Linux in Indian Defense: The Maya Example

One compelling example of Linux’s adoption for enhanced security comes from the Indian Defense Ministry. They have made a significant shift by transitioning from Microsoft Windows to a Linux-based operating system called Maya. Developed in-house by Indian government agencies in just six months, Maya is based on Ubuntu and designed with a strong emphasis on security. It includes features specific to the needs of the Indian military, such as support for Indian languages, keyboards, and compatibility with a wide range of Indian military software and hardware.

Benefits of the Indian Defense Ministry’s Transition to Maya:

- Improved Security: Linux, known for its robust security features, combined with Maya’s tailored security measures, bolsters the defense against malware attacks and cyber threats.

- Cost-Efficiency: Linux is cost-effective as it is free and open source, eliminating the need for expensive licensing fees associated with proprietary software.

- Independence: Relying on domestically developed software reduces dependence on foreign technology providers, ensuring greater control over critical infrastructure.

- Fostering Innovation: Maya’s open-source nature empowers Indian developers to contribute to its development actively and create new applications and services.

Conclusion

Linux operating systems have come a long way since their inception, and they continue to shape the tech landscape with their versatility, open-source ethos, and robust performance. Whether you’re a seasoned Linux user or considering making the switch, exploring the diverse world of Linux distributions is a rewarding journey. With options tailored to every need and a vibrant community backing them, Linux offers a compelling alternative to proprietary operating systems, paving the way for a future where technology remains accessible, secure, and customizable for all. The Indian Defense Ministry’s adoption of Linux, exemplified by Maya, demonstrates how Linux’s inherent strengths in security, cost-efficiency, independence, and innovation can benefit organizations worldwide.

International Defense Security & Technology Your trusted Source for News, Research and Analysis
