Introduction:

Artificial Intelligence (AI) has evolved rapidly, enabling machines to match or surpass human performance in various tasks. Within the expansive domain of AI, machine learning (ML) plays a crucial role, allowing computers to learn from data and make predictions without being explicitly programmed. While the last decade witnessed remarkable advances in data-driven ML applications, the Defense Advanced Research Projects Agency (DARPA) recognizes their limitations, especially for military applications.

In the ever-evolving landscape of military intelligence, ensuring the assurability and trustworthiness of Intelligence, Surveillance, and Reconnaissance (ISR) missions is paramount. DARPA has responded with the Assured Neuro Symbolic Learning and Reasoning (ANSR) program, which employs innovative hybrid AI methods. ANSR represents a paradigm shift in military AI, integrating neural networks and symbolic reasoning to enhance the reliability and security of ISR mission outcomes.

Artificial intelligence technologies aim to develop computers or robots that match or exceed the abilities of human intelligence in tasks such as learning and adaptation, reasoning and planning, decision-making and autonomy, creativity, and extracting knowledge and making predictions from data. Within AI is a large subfield called machine learning (ML). Machine learning enables computers to learn from data, so that new programs need not be written for each task. Machine learning algorithms extract information from training data to discover patterns, which are then used to make predictions on new data.
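To make "learning patterns from data" concrete, here is a minimal sketch using a nearest-centroid classifier, chosen purely for illustration (it is not an ANSR technique). The training points, class labels, and function names are hypothetical. The algorithm extracts a pattern (one mean point per class) from labeled examples and uses it to classify a new input, without anyone hand-writing class-specific rules.

```python
from collections import defaultdict
from math import dist


def fit_centroids(points, labels):
    """Learn one centroid (mean feature vector) per class from labeled training data."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (x, y), label in zip(points, labels):
        sums[label][0] += x
        sums[label][1] += y
        counts[label] += 1
    return {label: (s[0] / counts[label], s[1] / counts[label]) for label, s in sums.items()}


def predict(centroids, point):
    """Predict the class whose learned centroid is nearest to a new, unseen point."""
    return min(centroids, key=lambda label: dist(centroids[label], point))


# Hypothetical training data: 2-D feature vectors with class labels.
train_points = [(0.1, 0.2), (0.2, 0.1), (0.9, 0.8), (0.8, 0.9)]
train_labels = ["vehicle", "vehicle", "aircraft", "aircraft"]

model = fit_centroids(train_points, train_labels)
print(predict(model, (0.15, 0.15)))  # vehicle
print(predict(model, (0.85, 0.95)))  # aircraft
```

The "program" here is the same for any labels; only the data changes what it predicts, which is the sense in which new programs need not be written.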

The last decade witnessed tremendous progress in applications of data-driven ML, fueled by growth in compute power and data, in areas that span a wide spectrum ranging from board games to protein folding, language translation to medical image analysis. In several of these applications, ML and related techniques have demonstrated performance that rivals, and occasionally surpasses, human capability with respect to a set of narrowly curated metrics.

The Evolution of AI in Military Operations:

AI has become integral to many military capabilities and operations, including intelligence, surveillance, and reconnaissance; target identification; rapid weapon development and optimization; command and control; logistics; and war gaming. The speed and accuracy with which AI systems analyze vast amounts of data have transformed decision-making, enabling faster responses to potential threats. Adversaries, in turn, could use AI to carry out information operations or psychological warfare.

AI systems can accurately analyze the huge amounts of data generated in peacetime and in conflict. They can quickly interpret information, which can lead to better decision-making, and they can fuse data from different sensors into a coherent common operating picture of the battlefield. AI systems can react significantly faster than systems that rely on human input; therefore, AI is accelerating the complete “kill chain” from detection to destruction. This allows militaries to better defend against high-speed threats such as hypersonic weapons, which travel at 5 to 10 times the speed of sound.
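As a simplified illustration of sensor fusion, the sketch below combines independent position estimates of one target by inverse-variance weighting, a standard technique for independent Gaussian measurements. The sensor names, readings, and variances are hypothetical, and a fielded system would also need data association and track management, which this omits.

```python
def fuse(estimates):
    """Fuse independent 1-D estimates given as (value, variance) pairs.

    Each estimate is weighted by 1/variance, so more precise sensors count more.
    Returns the fused value and its variance, which is smaller than any input's.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical east-coordinate estimates (meters) of one target from three sensors.
radar = (102.0, 4.0)       # variance 4 m^2
eo_camera = (100.0, 1.0)   # variance 1 m^2
acoustic = (110.0, 25.0)   # variance 25 m^2

position, variance = fuse([radar, eo_camera, acoustic])
print(round(position, 2), round(variance, 3))  # 100.7 0.775
```

The fused estimate leans toward the most precise sensor, and its variance is lower than that of any single sensor, which is why fusion yields a more reliable picture than any one feed.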

AI enhances the autonomy of unmanned air, ground, and underwater vehicles. It is enabling concepts like vehicle swarms, in which multiple unmanned vehicles autonomously collaborate to achieve a task. For example, drone swarms could overwhelm or saturate adversary air defense systems.

Autonomy and highly autonomous systems are a desired capability for many Department of Defense (DoD) missions, including ISR, logistics, planning, and command and control. The purported benefits are many: (1) improved operational tempo and mission speed; (2) reduced cognitive demands on warfighters who operate and supervise autonomous systems; and (3) increased standoff distance for improved warfighter safety.

However, the black-box nature of deep learning algorithms poses challenges in terms of transparency and trust, particularly in critical military scenarios. A crucial desideratum associated with autonomy is the need for trustworthiness and trust, as emphasized by the 2016 Defense Science Board (DSB) Report on Autonomy. Informally, trust is an expression of confidence in an autonomous system’s ability to perform an underspecified task. Assuring that autonomous systems will operate safely and perform as intended is integral to trust, which is key to DoD’s success in the adoption of autonomy.

Since the publication of the DSB report on autonomy, significant improvements have been made in the machine learning (ML) algorithms that are central to achieving autonomy. Simultaneously, innovations in assurance technologies have delivered mechanisms to assess the correctness, safety, and trustworthiness of systems at design time and to make them resilient at operation time.

The ISR Challenge:

Military ISR missions demand a level of assurability and trustworthiness that goes beyond the capabilities of traditional artificial intelligence (AI) systems. The dynamic and often unpredictable nature of warfare requires intelligent systems that can adapt, reason, and make decisions with a high degree of confidence. ANSR addresses the challenge of ensuring the trustworthiness of AI systems in complex military environments where decisions can have critical consequences.

Challenges and Limitations of Current ML Algorithms:

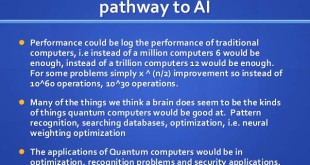

DARPA describes AI as developing in waves. The first wave consisted of rule-based systems in which machines followed rules defined by humans. The second, ongoing wave comprises machine learning techniques in which machines derive their own models from data, through clustering and classification, and use those models to make predictions and decisions.

The problem with deep learning, however, is that it is a black box: we do not know the reasoning behind the decisions it makes. This makes it hard for people to trust such systems and makes close human-robot teaming risky. DARPA is now developing “third wave” AI theory and applications that enable machines to explain their decisions and adapt to changing situations. Rather than learning from data alone, intelligent machines will perceive the world on their own, and learn and understand it by reasoning. AI systems will then become trustworthy, collaborative partners to soldiers on the battlefield.

Despite the success of ML algorithms, there are inherent challenges, such as a lack of transparency, interpretability, and robustness. State-of-the-art ML struggles with generalization, especially in unfamiliar situations, and is susceptible to adversarial attacks.

Rule-based, first-wave approaches also have limitations when used in real-world autonomy applications. They fare poorly when dealing with real-world uncertainty and high-dimensional sensory data, which are integral to perception and situation-understanding applications. The rulesets and stateful logic in these decision-making applications are often incomplete and insufficient when exposed to unanticipated situations. Further, it is well understood that common-sense knowledge is intractable to codify. For example, the Cyc knowledge base includes millions of concepts and tens of millions of rules, yet it is inadequate for many real-world tasks.

The prevailing trend in industrial ML research is toward scaling up to giga- and tera-scale models (hundreds of billions of parameters) as a means of improving accuracy and performance. These trends are not sustainable because of the extremely high computational and data requirements for training such models, and because scaling laws imply diminishing returns from ever-larger models. These trends are also not responsive to the needs of DoD applications, which are typically data- and compute-starved, with limited access to cloud-scale compute resources. Furthermore, DoD applications are safety- and mission-critical, need to operate in unseen environments, need to be auditable, and need to be trusted by human operators. In sum, the prevailing trends in ML research are not conducive to the assurability and trustworthiness needs of DoD applications.

DARPA acknowledges these limitations and aims to go beyond the prevailing trends in ML research, which often involve scaling models to unsustainable sizes for improved accuracy. The ANSR program seeks to bridge the gap by developing hybrid AI algorithms that incorporate symbolic reasoning to enhance robustness and trustworthiness.

The Hybrid AI Approach:

The challenge of assuring cyber-physical systems (CPS) with ML components has been an active area of research, supported by DARPA’s ongoing Assured Autonomy program as well as other research initiatives. Specifically, the assurance approach developed by Assured Autonomy has resulted in: (1) formal and simulation-based verification tools that can comprehensively explore the behavior of a CPS; (2) monitoring tools that can detect deviations of ML components from expected inputs and behavior, together with resilience and recovery strategies to avoid worst-case safety consequences; and (3) an assurance case framework that enables structured argumentation, backed by evidence, in support of the claim that major safety hazards have been identified and their root causes adequately mitigated.

Advances in assurance technologies, including formal and simulation-based approaches, have helped accelerate the identification of failure modes and defects in ML algorithms. Unfortunately, the ability to repair defects in state-of-the-art (SOTA) ML remains limited to retraining, which is not guaranteed to eliminate defects or to improve the generalizability of ML algorithms. Further, while the runtime assurance architecture, including monitoring and recovery, ensures operational safety, frequent invocations of fallback recovery, triggered by the brittleness and poor generalizability of ML, compromise the ability to accomplish the mission.
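The runtime assurance architecture mentioned above, in which an ML component is guarded by a monitor that can hand control to a simpler recovery policy, is often called a Simplex architecture. The sketch below is a minimal illustration under hypothetical assumptions: a stand-in "learned" controller, a safety envelope defined as a minimum distance to an obstacle over a short horizon, and a braking fallback. It also counts fallback invocations, the quantity that, per the text, erodes mission performance when the ML component is brittle.

```python
from dataclasses import dataclass


@dataclass
class State:
    distance_to_obstacle: float  # meters
    speed: float                 # meters per second


def learned_controller(state: State) -> float:
    """Hypothetical stand-in for an ML policy: returns a commanded speed."""
    return state.speed + 2.0  # tends to accelerate aggressively


def safe_fallback(state: State) -> float:
    """Hypothetical simple recovery policy that is easy to analyze: slow down."""
    return max(0.0, state.speed - 5.0)


def is_safe(state: State, command: float, horizon_s: float = 1.0, margin_m: float = 5.0) -> bool:
    """Monitor: accept a command only if the obstacle stays beyond the margin over the horizon."""
    return state.distance_to_obstacle - command * horizon_s >= margin_m


def runtime_assured_step(state: State, fallback_log: list) -> float:
    """Use the learned command when the monitor accepts it; otherwise fall back and log it."""
    command = learned_controller(state)
    if is_safe(state, command):
        return command
    fallback_log.append(state)  # each entry is one fallback invocation
    return safe_fallback(state)


fallbacks = []
for s in [State(50.0, 10.0), State(12.0, 10.0)]:
    print(runtime_assured_step(s, fallbacks))  # 12.0, then 5.0
print(len(fallbacks))  # 1
```

Safety here depends entirely on the monitor and fallback being correct; the learned controller is never trusted on its own, which is why frequent fallbacks keep the system safe but slow the mission.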

DARPA launched its newest artificial intelligence (AI) program, Assured Neuro Symbolic Learning and Reasoning (ANSR) in June 2022, which seeks to motivate new thinking and approaches that will take ML beyond data-driven pattern recognition and augment it with knowledge-driven reasoning that includes context, physics, and other background information.

We define a system as trustworthy if it is: (a) robust to domain-informed and adversarial perturbations; (b) supported by an assurance framework that creates and analyzes heterogeneous evidence toward safety and risk assessments; and (c) predictable with respect to some specification and models of “fitness.”
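Criterion (a) can be made precise for simple models. The sketch below assumes a hypothetical linear classifier and bounded perturbations measured in the max (L-infinity) norm. For a linear score w·x + b, the worst-case change under such a perturbation is exactly epsilon times the sum of |w_i|, so robustness at a given input can be certified exactly, and the worst-case adversarial input can be constructed directly. For deep networks, exact certification is far harder and remains an active research area.

```python
def _sign(v):
    """Return 1, -1, or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def linear_score(weights, bias, x):
    """Score of a linear classifier; its sign gives the predicted class."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def certified_robust(weights, bias, x, epsilon):
    """True if no perturbation with L-infinity norm <= epsilon can flip the decision at x.

    For a linear score the worst-case change is exactly epsilon * sum(|w_i|).
    """
    worst_case_shift = epsilon * sum(abs(w) for w in weights)
    return abs(linear_score(weights, bias, x)) > worst_case_shift


def worst_case_input(weights, bias, x, epsilon):
    """Build the perturbed input that pushes the score hardest toward the other class."""
    toward = -1.0 if linear_score(weights, bias, x) > 0 else 1.0
    return [xi + toward * epsilon * _sign(w) for w, xi in zip(weights, x)]


weights, bias, x = [2.0, -1.0], 0.5, [1.0, 1.0]  # hypothetical model and input
print(certified_robust(weights, bias, x, 0.4))  # True
print(certified_robust(weights, bias, x, 0.6))  # False
print(linear_score(weights, bias, worst_case_input(weights, bias, x, 0.6)) < 0)  # True: flipped
```

A certificate like this is one kind of heterogeneous evidence that an assurance framework, criterion (b), could collect alongside test and simulation results.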

At the core of ANSR’s innovation is its hybrid AI approach, combining neural networks and symbolic reasoning. Neural networks excel in pattern recognition and learning from vast amounts of data, while symbolic reasoning allows for logical decision-making based on rules and explicit knowledge representation. By integrating these two approaches, ANSR creates a symbiotic relationship that leverages the strengths of each, resulting in a more robust and trustworthy AI system.

We hypothesize that several of the limitations in ML today are a consequence of (1) the inability to incorporate contextual and background knowledge; and (2) treating each data set as an independent, uncorrelated input. In the real world, observations are often correlated and a product of an underlying causal mechanism, which can be modeled and understood. We posit that hybrid AI algorithms capable of acquiring and integrating symbolic knowledge and performing symbolic reasoning at scale will deliver robust inference, generalize to new situations, and provide evidence for assurance and trust.

ANSR will explore diverse, hybrid architectures that can be seeded with prior knowledge, acquire both statistical and symbolic knowledge through learning, and adapt learned representations. The program includes demonstrations to evaluate hybrid AI techniques through relevant military use cases where assurance and autonomy are mission-critical. Specifically, selected teams will develop a common operating picture of a dynamic, dense urban environment using a fully autonomous system equipped with ANSR technologies. The AI would deliver insights to the warfighter that could help characterize friendly, adversarial and neutral entities, the operating environment, and threat and safety corridors.

Symbolic Reasoning for Explainability:

Symbolic reasoning provides transparency and interpretability, allowing AI systems to explain their decisions and adapt to changing circumstances. The program envisions a modification of both training and inference procedures, interleaving symbolic and neural representations for iterative adaptation.

The traditional approaches to building intelligent applications and autonomous systems rely heavily on knowledge representations and symbolic reasoning. For example, complex decision-making in these approaches is often implemented with programmed condition-based rules, stateful logic encoded in finite state machines, and physics-based dynamics of environments and objects represented using ordinary differential equations. There are numerous advantages of these classical techniques:

- they use rich abstractions that are grounded in domain theories and associated formalisms and that are supported by advanced tools and methods (Statecharts, Stateflow, Simulink, etc.);

- they can be modular and composable in ways supported by software engineering practices that promote reuse, precision, and automated analyses; and

- they can be analyzable and assurable in ways supported by formal specification and verification technologies that have been demonstrated in hardening mission- and safety-critical systems against cyberattacks.
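For readers less familiar with this classical style, here is a minimal sketch of stateful decision logic encoded as a finite state machine with condition-based transition rules. The states, events, and helper names are hypothetical (a simplified vehicle mode logic). Because the logic is an explicit table, simple automated analyses become possible: the sketch checks which (state, event) pairs have no rule, which is the incompleteness problem rule-based systems face in unanticipated situations, and whether a safe state is reachable from every state.

```python
from collections import deque

STATES = {"PATROL", "TRACK", "RETURN", "LANDED"}
EVENTS = {"target_seen", "target_lost", "low_battery", "landed"}

# Hypothetical rule table: (state, event) -> next state. LANDED has no rules yet.
TRANSITIONS = {
    ("PATROL", "target_seen"): "TRACK",
    ("PATROL", "target_lost"): "PATROL",
    ("PATROL", "low_battery"): "RETURN",
    ("PATROL", "landed"): "LANDED",
    ("TRACK", "target_seen"): "TRACK",
    ("TRACK", "target_lost"): "PATROL",
    ("TRACK", "low_battery"): "RETURN",
    ("TRACK", "landed"): "LANDED",
    ("RETURN", "target_seen"): "RETURN",
    ("RETURN", "target_lost"): "RETURN",
    ("RETURN", "low_battery"): "RETURN",
    ("RETURN", "landed"): "LANDED",
}


def missing_rules(states, events, transitions):
    """Completeness check: list (state, event) pairs the rule table does not handle."""
    return sorted((s, e) for s in states for e in events if (s, e) not in transitions)


def can_reach(start, goal, transitions):
    """Reachability check: breadth-first search over the transition graph."""
    seen, frontier = {start}, deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            return True
        for (src, _event), dst in transitions.items():
            if src == state and dst not in seen:
                seen.add(dst)
                frontier.append(dst)
    return False


print(missing_rules(STATES, EVENTS, TRANSITIONS))  # four gaps, all in LANDED
print(all(can_reach(s, "LANDED", TRANSITIONS) for s in STATES))  # True
```

The completeness check flags every event that LANDED ignores; a designer then decides whether that is intended. Such analyses are cheap for explicit symbolic logic and have no direct counterpart for the weights of a neural network.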

One of the key benefits of symbolic reasoning in the ANSR program is the ability to provide explainability in AI decision-making. In military operations, understanding the rationale behind AI-driven decisions is crucial for commanders and operators.

Some recent results for specific applications provide a basis for confidence. For example, a recent study prototyped a hybrid reinforcement learning (RL) architecture that acquires a set of symbolic policies through data-driven learning. The symbolic policies take the form of a small program that is interpretable and verifiable. The approach demonstrably inherits the best of both worlds: it learns policies that are highly performant in a known environment, and it generalizes well by remaining safe (crash-free) in an unknown environment. Another recent approach uses symbolic reasoning to fix a neural network’s (NN’s) errors in estimating object poses in a scene, and it achieves substantially higher accuracy (30-40% above baseline) in several cases.
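That study’s method is not described here. As a toy illustration of the general idea only, the sketch below takes hypothetical neural estimates of where objects sit and corrects their vertical positions using symbolic on(upper, lower) facts and two physical rules: an unsupported object rests on the floor, and a supported object begins where its support ends. The names Box and apply_support_rules, and all numbers, are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Box:
    name: str
    x: float       # horizontal position (m), kept from the neural estimate
    z: float       # height of the bottom face (m)
    height: float  # object height (m), assumed known


def apply_support_rules(boxes, on_facts, floor_z=0.0):
    """Correct vertical positions using symbolic on(upper, lower) facts.

    Rule 1: an object with no known support rests on the floor.
    Rule 2: an object on another object starts where its support ends.
    Assumes the facts contain no cycles.
    """
    by_name = {b.name: b for b in boxes}
    support = dict(on_facts)  # upper -> lower

    def corrected_z(name):
        if name not in support:
            return floor_z
        lower = by_name[support[name]]
        return corrected_z(lower.name) + lower.height

    return [Box(b.name, b.x, corrected_z(b.name), b.height) for b in boxes]


# Hypothetical noisy neural estimates: the crate sinks into the table, the radio floats.
neural = [
    Box("table", 0.0, 0.07, 0.75),
    Box("crate", 0.1, 0.62, 0.30),
    Box("radio", 0.1, 1.20, 0.10),
]
facts = [("crate", "table"), ("radio", "crate")]

fixed = apply_support_rules(neural, facts)
print([(b.name, round(b.z, 2)) for b in fixed])
# [('table', 0.0), ('crate', 0.75), ('radio', 1.05)]
```

The neural estimates still supply what the rules say nothing about (here, horizontal position), while background knowledge overrides physically impossible outputs.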

Symbolic reasoning enables ANSR to generate explanations for its decisions, allowing humans to comprehend and trust the output of the system. The ultimate goal is to create AI systems that are trustworthy, robust to perturbations, and predictable, aligning with the Department of Defense’s (DoD) mission-critical requirements.

Neural Networks for Adaptive Learning:

Neural networks bring adaptability and learning capabilities to ANSR. In dynamic military scenarios, the ability to learn from new data and adapt strategies is essential. Neural networks enable ANSR to continuously improve its decision-making processes by learning from real-time data, making it a highly responsive and adaptive system.

Assurability through Formal Verification:

To ensure the reliability of ANSR in critical military applications, DARPA has incorporated formal verification techniques. These methods involve mathematical proofs and analysis to verify the correctness of the system’s algorithms and decision-making processes. By subjecting ANSR to rigorous formal verification, DARPA aims to provide assurance of its trustworthiness in the field.
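Formal verification of real systems relies on dedicated tools such as model checkers, SMT solvers, and theorem provers. As a much simpler stand-in that conveys the core idea, checking a property for every input rather than a sample, the sketch below exhaustively enumerates a finite, discretized state space for a small, interpretable policy (hypothetical, in the spirit of the symbolic policies described earlier) and checks a no-collision property in each state. It also shows the check finding counterexamples in a flawed variant. All names and ranges are assumptions made for illustration.

```python
from itertools import product

MAX_SPEED = 5  # discrete speed levels 0..5 (hypothetical units: gap cells per tick)
MAX_GAP = 20   # discrete distance-to-obstacle levels 0..20


def policy(gap, speed):
    """Small, interpretable symbolic policy: speed up by one level, but never
    command a speed that would cover more than the remaining gap."""
    return min(MAX_SPEED, speed + 1, gap)


def unsafe_policy(gap, speed):
    """Variant without the gap clamp, used to show a counterexample being found."""
    return min(MAX_SPEED, speed + 1)


def check_safety(policy_fn):
    """Enumerate every discrete (gap, speed) state and return the states where one
    tick of the model (gap shrinks by the new speed) leaves the gap negative or the
    speed outside its range."""
    violations = []
    for gap, speed in product(range(MAX_GAP + 1), range(MAX_SPEED + 1)):
        new_speed = policy_fn(gap, speed)
        if gap - new_speed < 0 or not 0 <= new_speed <= MAX_SPEED:
            violations.append((gap, speed))
    return violations


print(check_safety(policy))              # []
print(len(check_safety(unsafe_policy)))  # 20
print(check_safety(unsafe_policy)[0])    # (0, 0)
```

When the check passes, every successor state stays inside the enumerated ranges, so the one-step check covers every state the model can reach; for this discrete model, the empty result is a complete argument that the gap never goes negative. It says nothing about sensing errors or continuous dynamics, which the model omits.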

Technical Areas of Development:

The ANSR program is organized into four technical areas (TAs) to address different facets of hybrid AI development. TA1 focuses on algorithms and architecture, exploring various patterns suitable for different tasks. TA2 is dedicated to specification and assurance, developing frameworks to derive evidence of correctness and quantify mission-specific risks. TA3 involves the development of platforms and capability demonstrations, with an emphasis on mission-relevant applications in dynamic urban environments. TA4, the assurance assays and evaluation, acts as a red team, rigorously testing the robustness, generalizability, and assurance claims through adversarial evaluations.

DARPA Awards:

DARPA selected the following teams to explore diverse, hybrid architectures that integrate data-driven machine learning with symbolic reasoning, a problem-solving method that uses symbols or abstract representations to understand information or follow rules to reach conclusions.

- The University of California, Los Angeles, University of Central Florida, SRI International, Monash University, Carnegie Mellon University, Vanderbilt University, University of California, Berkeley, and Collins Aerospace will develop and model new neuro-symbolic AI algorithms and architectures for different tasks.

- SRI International, Vanderbilt University, University of California, Berkeley, and Collins Aerospace will develop an assurance framework and methods for deriving and integrating correctness evidence and quantifying mission-specific risks.

- Systems & Technology Research (STR) LLC will develop demonstrable use cases and architectures for engineering mission-relevant applications of hybrid AI algorithms where robustness and assurance are critical for mission success.

- Johns Hopkins University Applied Physics Laboratory will test and evaluate the technologies created by other performers and demonstrate value to DoD missions through those use case demonstrations.

The program will run over three phases, beginning with development in gaming environments and culminating in a demonstration of a fully autonomous intelligence, surveillance, and reconnaissance mission via a live exercise at DoD facilities.

The autonomous system provisioned with ANSR technologies must develop a comprehensive situation understanding and make maneuver decisions while maintaining safety.

Real-world Applications:

The ANSR program holds promise for a variety of real-world military applications. From autonomous drones making split-second decisions during ISR missions to strategic decision support systems aiding military commanders, the hybrid AI methods employed by ANSR are set to redefine the capabilities of military intelligence.

The hybrid AI techniques developed by the program will enable new mission capabilities. The program intends to demonstrate assured execution of an unaided ISR mission to develop a Common Operating Picture (COP) of a highly dynamic, dense urban environment. The autonomous system performing the ISR mission will carry an effects payload to reduce sensor-to-effects delivery time. While the delivery of effects is gated by a human on the loop, an effects-carrying system is quintessentially a safety- and mission-critical system and therefore requires strong guarantees of collision avoidance and mission performance. The capabilities required of the autonomous system in terms of deep situational understanding and decision-making are not achievable by SOTA machine learning or by standalone symbolic reasoning systems. Training data is also sparse, further motivating the use of hybrid AI methods.

Conclusion:

By harnessing the complementary strengths of neural networks and symbolic reasoning, ANSR aims to deliver a new standard of assurability and trustworthiness in military AI systems. DARPA’s ANSR program marks a significant step towards building AI systems that are not only proficient in learning from data but also capable of reasoning and adapting to real-world uncertainties. By merging symbolic reasoning with data-driven learning, the program aims to create trustworthy and assured AI systems, particularly vital for military applications where precision, transparency, and adaptability are critical.

DARPA’s Assured Neuro Symbolic Learning and Reasoning (ANSR) program stands at the forefront of advancing AI technologies for military ISR missions. As the program progresses, the defense community anticipates a transformation in the capabilities and reliability of intelligent systems deployed in the service of national security. Successful development of ANSR technologies could redefine the landscape of military autonomy, setting new standards for the integration of AI in defense operations.

International Defense Security & Technology Your trusted Source for News, Research and Analysis
