The first wave of AI is Crafted Knowledge, which covers rule-based AI systems. In the first wave, humans defined the rules for intelligent machines, and the machines followed them. The second wave of AI is Statistical Learning, in which machines become intelligent by using statistical methods. This includes all machine learning techniques that rely on the machine to derive its own rules through clustering and classification, and then use the resulting models to predict and make decisions.
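The contrast between the two waves can be sketched in a few lines. This is only an illustration: the overheating scenario, the 80-degree threshold, and the function names `handcrafted_rule` and `learn_threshold` are all assumptions made for the example, not anything taken from DARPA material.

```python
# First wave: a human expert writes the rule by hand.
def handcrafted_rule(temperature_c: float) -> bool:
    """Flag overheating using a threshold picked by a person (assumed value)."""
    return temperature_c > 80.0


# Second wave: the machine derives the rule (here, one threshold) from labeled data.
def learn_threshold(samples):
    """Return the cut point that misclassifies the fewest training samples."""
    values = sorted(value for value, _ in samples)
    # Candidate cut points sit halfway between neighbouring observed values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold):
        return sum((value > threshold) != label for value, label in samples)

    return min(candidates, key=errors)


# Hypothetical labeled readings: (temperature in Celsius, overheated?)
training_data = [(60.0, False), (70.0, False), (75.0, False), (90.0, True), (95.0, True)]
learned = learn_threshold(training_data)
print(learned)  # 82.5, the only candidate that separates the two classes
```

In the first function the knowledge lives in the programmer's head; in the second it is extracted from examples, which is also why the resulting rule can be harder to justify once models grow beyond a single threshold.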

DARPA-funded projects enabled some of the first successes in AI, such as expert systems and search, and more recently the agency has advanced machine learning algorithms and hardware. Machine learning (ML) methods have demonstrated outstanding recent progress and, as a result, artificial intelligence (AI) systems can now be found in myriad applications, including autonomous vehicles, industrial applications, search engines, computer gaming, health record automation, and big data analysis.

But the problem with deep learning is that it is a black box, which means it is very difficult to investigate the reasoning behind the decisions it makes. The opacity of AI algorithms complicates their use, especially where mistakes can have severe impacts. For instance, if a doctor wants to trust a treatment recommendation made by an AI algorithm, they have to know the reasoning behind it. The same goes for a judge who wants to pass sentence based on a recidivism prediction made by a deep learning application. These are decisions that can have a deep impact on the lives of the people affected by them, and the person assuming responsibility must have full visibility into the steps that go into those decisions, says David Gunning, program manager of XAI, DARPA’s initiative to create explainable artificial intelligence models.

Current artificial intelligence (AI) systems only compute with what they have been programmed or trained for in advance; they have no ability to learn from data input during execution time, and cannot adapt on-line to changes they encounter in real environments.

DARPA is now interested in researching and developing “third wave” AI theory and applications that address the limitations of first and second wave technologies by making it possible for machines to contextually adapt to changing situations. In the third wave, instead of learning from data, intelligent machines will understand and perceive the world on their own, and learn by understanding the world and reasoning about it. The agency’s diverse portfolio of fundamental and applied AI research programs is aimed at shaping a future in which AI-enabled machines serve as trusted, collaborative partners in solving problems of importance to national security.

Experts also point to a Fourth Wave of AI that will be an advancement of the third wave: besides adapting to the world, the AI will have common sense and understand ethics. In this wave, the machine not only learns from the environment but can also make decisions based on ethics, regulations, and law to avoid misuse.

AIE Opportunities will focus on “third wave” theory and applications of AI. “We see this third wave is about contextual adaptation, and in this world we see that the systems themselves will over time build underlying explanatory models that allow them to characterize real-world phenomena,” John Launchbury, director of DARPA’s Information Innovation Office, said in a video.

Another of DARPA’s many exciting projects is Explainable Artificial Intelligence (XAI), an initiative launched in 2016 aimed at solving one of the principal challenges of deep learning and neural networks, the subset of AI that is becoming increasingly prominent in many different sectors. “XAI is trying to create a portfolio of different techniques to tackle [the black box] problem, and explore how we might make these systems more understandable to end users. Early on we decided to focus on the lay user, the person who’s not a machine learning expert,” Gunning says.

The problem will become riskier as humans start to work more closely with robots, in collaborative tasks or social or assistive contexts, as it will be hard for people to trust them if their autonomy is such that we find it difficult to understand what they’re doing. In a paper published in Science Robotics, researchers from UCLA describe a robotic system that can generate different kinds of real-time, human-readable explanations about its actions, and they then tested which of the explanations were the most effective at improving a human’s trust in the system. This work was funded by DARPA’s Explainable AI (XAI) program, which has a goal of being able to “understand the context and environment in which they operate, and over time build underlying explanatory models that allow them to characterize real world phenomena.”

DARPA to explore the “third wave” of artificial intelligence

DARPA announced in September 2018 a multi-year investment of more than $2 billion in new and existing programs called the “AI Next” campaign.

Under AI Next, DARPA will build on its past experience, and the key areas to be explored include:

- Automating critical DoD business processes, such as security clearance vetting in a week or accrediting software systems in one day for operational deployment.

- Improving the robustness and reliability of AI systems.

- Enhancing the security and resiliency of machine learning and AI technologies.

- Reducing power, data, and performance inefficiencies.

- Pioneering the next generation of AI algorithms and applications, such as “explainability” and common sense reasoning.

The AI Exploration (AIE) program is one key element of DARPA’s broader AI investment strategy that will help ensure the U.S. maintains a technological advantage in this critical area. Since the AIE launch, DARPA has been issuing “AIE Opportunities” that focus on technical domains important to DARPA’s goals in pursuing disruptive third wave AI research concepts. The ultimate goal of each AIE Opportunity is to invest in research that leads to prototype development that may result in new, game-changing AI technologies for U.S. national security.

The thrust in machine learning and artificial intelligence is part of the Third Offset strategy. The US Third Offset strategy leverages new technologies such as artificial intelligence, autonomous systems and human-machine networks to equalise advances made by the nation’s opponents in recent years.

DARPA AIE Opportunities

AIE Opportunities will focus on “third wave” theory and applications of AI. The projects pursued may include proofs of concept; pilots; novel applications of commercial technologies for defense purposes; creation, design, development, and demonstration of technical or operational utility; or combinations of the foregoing.

DARPA is currently pursuing more than 20 programs that are exploring ways to advance the state-of-the-art in AI, pushing beyond second-wave machine learning techniques towards contextual reasoning capabilities. In addition, more than 60 active programs are applying AI in some capacity, from agents collaborating to share electromagnetic spectrum bandwidth to detecting and patching cyber vulnerabilities.

DARPA taps GrammaTech for Artificial Intelligence Exploration (AIE) program to apply AI to reverse-engineer code (reported January 2021)

GrammaTech is developing ReMath, an AI tool that can automatically infer high-level mathematical representations from existing binaries in cyber-physical systems and embedded software. Currently, subject matter experts (SMEs) must manually analyze binaries through a time-consuming and expensive process, using low-level tools such as disassemblers, debuggers, and decompilers to recover the higher-level constructs encoded in software. This requires extensive reverse engineering to be able to understand and modify systems. ReMath aims to address this gap and dramatically improve productivity by recovering and converting machine language into representations that SMEs find natural to work with.

“ReMath will enable subject matter experts to rapidly understand and model hardware-interfacing computations embedded in cyber-physical system binaries,” said Alexey Loginov, Vice President of Research at GrammaTech. “This research will greatly lower the cost of analyzing, maintaining, and modernizing cyber-physical devices.” Sample applications for this research include industrial control systems used in power and chemical processing plants where domain experts without reverse-engineering or coding experience could maintain and make changes to existing software.

The goal is to free developers up to build new software, rather than continuously updating software that has already been deployed, noted Loginov. This approach should ultimately reduce the backlog of application and software development projects organizations have, added Loginov. As software development becomes more efficient, the current developer shortage should also ease, and open up resources to launch a greater number of application development projects. Rather than spending time on manual tasks that provide no differentiated value, Loginov said software engineers will be able to focus more of their time and energy on process innovation.

In the meantime, developers should expect to see a rash of development tools with AI capabilities in the months ahead. None of those tools are likely to eliminate the need for developers any time soon; however, much of the drudgery involved in writing code today is likely to be sharply reduced as machine learning algorithms and other forms of AI are applied to software development.

Dramatic success in machine learning has led to a torrent of Artificial Intelligence (AI) applications. Continued advances promise to produce autonomous systems that will perceive, learn, decide, and act on their own. However, the effectiveness of these systems is limited by machines’ current inability to explain their decisions and actions to human users.

The four-year grant from DARPA will support the development of a paradigm to look inside that black box, by getting the program to explain to humans how decisions were reached. “Ultimately, we want these explanations to be very natural – translating these deep network decisions into sentences and visualizations,” said Alan Fern, principal investigator for the grant and associate director of the College of Engineering’s recently established Collaborative Robotics and Intelligent Systems Institute.

The Department of Defense is facing challenges that demand more intelligent, autonomous, and symbiotic systems. Explainable AI—especially explainable machine learning—will be essential if future warfighters are to understand, appropriately trust, and effectively manage an emerging generation of artificially intelligent machine partners.

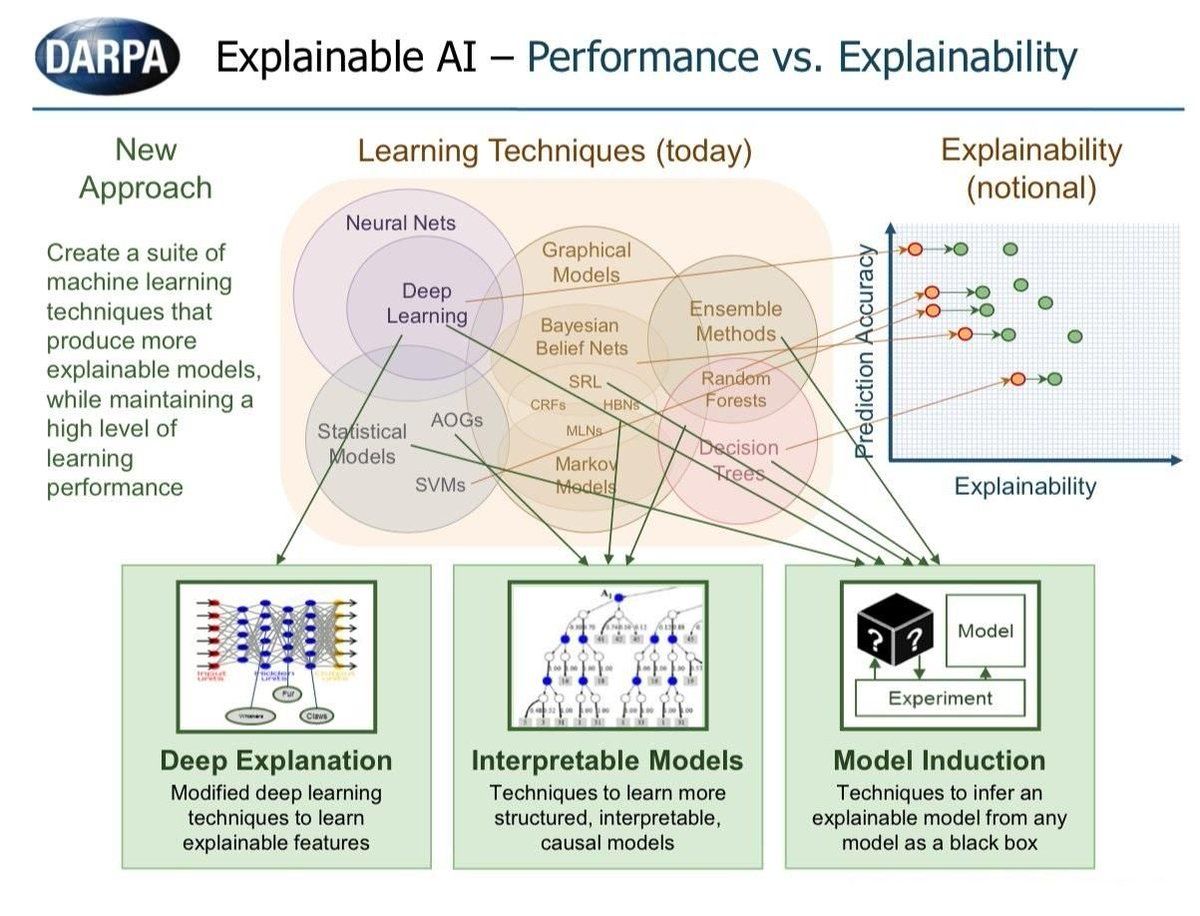

The Explainable AI (XAI) program aims to create a suite of machine learning techniques that:

- Produce more explainable models, while maintaining a high level of learning performance (prediction accuracy); and

- Enable human users to understand, appropriately trust, and effectively manage the emerging generation of artificially intelligent partners.

As the testbed for its XAI initiative, DARPA has chosen intelligence analysis, where analysts have to pore over huge volumes of data coming out of videos, cameras and other sources. This is an area where the development of machine learning and computer vision techniques has been very beneficial, helping automate many of the tasks analysts perform. “Intel analysts use AI tools to keep up with the volume of data, but they don’t get an explanation of why the system is picking a particular image or a particular situation,” Gunning says.

To begin developing the system, the researchers will use real-time strategy games, like StarCraft, to train artificial-intelligence “players” that will explain to human players the reasoning behind their in-game choices. StarCraft is a staple of competitive electronic gaming. Google’s DeepMind has also chosen StarCraft as a training environment for AI. Later stages of the project will move on to applications provided by DARPA that may include robotics and unmanned aerial vehicles.

Another area that DARPA’s XAI program is focusing on is autonomy. “We don’t quite see that problem out in the field yet because autonomous systems that are going to be trained through deep learning are not quite out on the field yet,” Gunning says. “But we’re anticipating that one day we’re going to have autonomous systems, in air vehicles, underwater vehicles, and automobiles that will be trained at least in part through these machine learning techniques. People would want to be able to understand what decisions they make.”

Fern said the research is crucial to the advancement of autonomous and semi-autonomous intelligent systems. “Nobody is going to use these emerging technologies for critical applications until we are able to build some level of trust, and having an explanation capability is one important way of building trust,” he said.

While autonomy in defense scenarios largely involves detecting objects in images and video, Gunning explains that XAI will also focus on developing broader explainability and transparency into decisions made by those autonomous systems. “In the autonomy case, you’re training an autonomous system to have a decision policy. Most of those also involve vision or perception, but it’s really trying to explain the decision points and its decision logic as it is executing a mission,” Gunning says.

Different strategies to develop explainable AI methods

“When the program started, deep learning was starting to become prominent. But today, it’s sort of the only game in town. It’s what everybody’s interested in,” Gunning says. Therefore, XAI’s main focus will be on creating interpretation tools and techniques for deep learning algorithms and neural networks. “But we are also looking at techniques besides deep learning,” Gunning adds.

The teams working under XAI are working in three strategic domains:

Deep explanation: These are efforts aimed at altering deep learning models in ways that make them explainable. Some of the known techniques for doing this involve adding elements in the different layers of neural networks that can help better understand the complicated connections they develop during their training.

Building more interpretable models: Projects in this category focus on supplementing deep learning with other AI models that are inherently explainable. These can be probabilistic relational models or advanced decision trees, structures that were used before the advent of artificial neural networks. These complementary AI models help explain the system’s decisions, Gunning explains.

Model induction: These are model-agnostic methods for explaining AI decisions. “You just treat the model as a black box and you can experiment with it to see if you can infer something that explains its behavior,” Gunning says. One example is RISE, a model-agnostic AI explanation method developed by researchers at Boston University under the XAI initiative. “[RISE] doesn’t necessarily have to dig inside the neural network and see what it’s paying attention to,” Gunning says. “They can just experiment with the input and develop these heatmaps that shows you what was the net paying attention to when it made those decisions.”
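To make the "experiment with the input" idea concrete, here is a minimal sketch of perturbation-based saliency in the spirit of RISE. It is a simplification, not the published method: the toy `black_box` model, the tiny 3x4 "image", and the function name `masking_saliency` are assumptions for illustration, and the real technique uses smooth, upsampled masks on actual images.

```python
import random


def black_box(image):
    """Stand-in for an opaque model: its score secretly depends on one pixel only."""
    return image[1][2]


def masking_saliency(model, image, n_masks=2000, keep_prob=0.5, seed=0):
    """Estimate per-pixel importance by scoring randomly masked copies of the input."""
    rng = random.Random(seed)
    rows, cols = len(image), len(image[0])
    weighted = [[0.0] * cols for _ in range(rows)]
    kept = [[0] * cols for _ in range(rows)]

    for _ in range(n_masks):
        # Keep each pixel with probability keep_prob, zero it out otherwise.
        mask = [[rng.random() < keep_prob for _ in range(cols)] for _ in range(rows)]
        masked = [[image[r][c] if mask[r][c] else 0.0 for c in range(cols)]
                  for r in range(rows)]
        score = model(masked)  # the only access to the model is input -> output
        for r in range(rows):
            for c in range(cols):
                if mask[r][c]:
                    weighted[r][c] += score
                    kept[r][c] += 1

    # Average score observed while each pixel was visible.
    return [[weighted[r][c] / max(kept[r][c], 1) for c in range(cols)]
            for r in range(rows)]


image = [[1.0] * 4 for _ in range(3)]
heatmap = masking_saliency(black_box, image)
hottest = max(((r, c) for r in range(3) for c in range(4)),
              key=lambda rc: heatmap[rc[0]][rc[1]])
print(hottest)  # the pixel the model actually relies on
```

The explainer never inspects the model's internals. Pixels that matter end up with a high average score because the output collapses whenever they are hidden, which is what the heatmap visualizes.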

The explainable AI methods should be able to help generate “local” and “global” explanations. Local explanations pertain to interpreting individual decisions made by an AI system whereas global explanations provide the end user the general logic behind the behavior of the AI model. “Part of the test here is to have the users employ any of these AI explanation systems. They should get some idea of both of local and global explanations. In some cases, the system will only give the user local explanations, but do that in a way that they build a mental model that gives them a global idea of how the system will perform. In other cases, it’s going to be an explicit global model,” Gunning says.
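The difference between the two kinds of explanation can be sketched with a deliberately simple model. Everything here is hypothetical: the linear scoring model, its feature names and weights, and the two-row dataset are invented so that the arithmetic is easy to follow. Real XAI systems target far more complex models.

```python
# Hypothetical linear scoring model: feature name -> weight.
WEIGHTS = {"age": 0.5, "income": -2.0, "visits": 1.0}


def local_explanation(example):
    """Per-feature contribution to the score of ONE input."""
    return {name: WEIGHTS[name] * example[name] for name in WEIGHTS}


def global_explanation(dataset):
    """Mean absolute contribution of each feature across MANY inputs."""
    totals = {name: 0.0 for name in WEIGHTS}
    for example in dataset:
        for name, contribution in local_explanation(example).items():
            totals[name] += abs(contribution)
    return {name: total / len(dataset) for name, total in totals.items()}


dataset = [
    {"age": 2.0, "income": 1.0, "visits": 0.0},
    {"age": 6.0, "income": 0.5, "visits": 1.0},
]
local = local_explanation(dataset[0])
overall = global_explanation(dataset)
print(local)    # {'age': 1.0, 'income': -2.0, 'visits': 0.0}
print(overall)  # {'age': 2.0, 'income': 1.5, 'visits': 0.5}
```

For the first input, income dominates the decision (a local explanation), while across the whole dataset age carries the most weight on average (a global explanation). The two views can disagree, which is why the program asks for both.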

New machine-learning systems will have the ability to explain their rationale, characterize their strengths and weaknesses, and convey an understanding of how they will behave in the future. The strategy for achieving that goal is to develop new or modified machine-learning techniques that will produce more explainable models. These models will be combined with state-of-the-art human-computer interface techniques capable of translating models into understandable and useful explanation dialogues for the end user. Our strategy is to pursue a variety of techniques in order to generate a portfolio of methods that will provide future developers with a range of design options covering the performance-versus-explainability trade space.

XAI funding

XAI consists of 12 teams, 11 of which are working on parallel projects to create explainability methods and explainable AI models.

“The projects consist of at least two main components. First, they modify the machine learning process somehow so it produces a more explainable model, meaning if they’re using deep learning, they’re pulling out some features from the system that can be used for explanation, or maybe they’re learning one of these more interpretable models that has more semantics or more information in it that can be used for explanation,” Gunning says.

The second component is making those explanation components understandable to human users. “This includes the explanation interface, the right HCI (human-computer interaction), the right use of cognitive psychology, so you take the features of the content from the explainable model and generate the most understandable explanation you can for the end user,” Gunning says.

The researchers from Oregon State were selected by DARPA for funding under the highly competitive Explainable Artificial Intelligence program. Other major universities chosen include Carnegie Mellon, Georgia Tech, Massachusetts Institute of Technology, Stanford, Texas and University of California, Berkeley.

A team at UC Berkeley is overseeing several projects being developed by XAI, including BU’s RISE. “They also have some interesting techniques on how to make the deep learning system itself more explainable,” Gunning says. One of these techniques is to train a neural network in a way that it learns a modular architecture where each module is learning to recognize a particular concept that can then be used to generate an explanation. This is an interesting concept and a break from the current way neural networks work, in which there’s no top-down, human-imposed logic embedded into their structure. The Berkeley group is also pursuing what Gunning calls “the way out of this is more deep learning.” “They will have one deep learning system trained to make the decisions. A second deep learning system will be trained to generate the explanation,” Gunning says.

Another effort is led by Charles River Analytics, which has a subcontract with researchers at the University of Massachusetts. “They’re using one of the model induction approaches, where they’re treating the machine learning system as a black box and then experiment with it. Their explanation system will run millions of simulation examples and try all sorts of inputs, see what the output is of the system and see if they can infer a model that can describe its behavior. And then they express that model as a probabilistic program, which is a more interpretable model, and use that to generate explanations,” Gunning says.
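The probe-and-infer loop Gunning describes can be sketched as follows. This is not Charles River Analytics' system: a one-feature threshold rule (a decision stump) is swapped in here as the interpretable model in place of a probabilistic program, and the `black_box` policy, the feature names, and the value ranges are all assumptions chosen for illustration.

```python
import random

FEATURES = ("speed", "distance")


def black_box(sample):
    """Opaque policy we may only query; its inner rule is hidden from the explainer."""
    return sample["distance"] < 20.0


def induce_stump(model, n_samples=2000, seed=0):
    """Probe the model with random inputs and fit the best one-feature threshold rule."""
    rng = random.Random(seed)
    probes = [{name: rng.uniform(0.0, 100.0) for name in FEATURES}
              for _ in range(n_samples)]
    outputs = [model(p) for p in probes]

    best = None  # (agreement count, feature name, threshold)
    for name in FEATURES:
        for threshold in range(1, 100):
            agree = sum((p[name] < threshold) == out
                        for p, out in zip(probes, outputs))
            if best is None or agree > best[0]:
                best = (agree, name, float(threshold))

    agreement, feature, threshold = best
    return feature, threshold, agreement / n_samples


feature, threshold, fidelity = induce_stump(black_box)
print(f"model acts when {feature} < {threshold} (fidelity {fidelity:.0%})")
```

The fidelity score reports how often the simple surrogate agrees with the black box on the probes. An explanation generated from a low-fidelity surrogate would describe the surrogate rather than the system it is meant to explain.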

While most of the efforts focus on analyzing and explaining AI decisions made on visual data, Gunning explains that some of the projects will be taking those efforts a step further. For instance, other teams that work with the UC Berkeley group are combining RISE with techniques that use a second deep neural network to generate a verbal explanation of what the net is keying on.

“In some of their examples, they’ll show an image and ask a question about the image and the explanation will be both the RISE system highlighting what features of the image the system was looking at, but there will also be a verbal explanation of what conceptual features the net had found important,” Gunning says. For instance, if an image classifier labels a photo as a particular breed of bird, it should be able to explain what physical features of the bird (beak length, feather structure…) contributed to the decision.

Another team at Texas A&M is working on misinformation. First, they try to train an AI model to identify fake news or misinformation by processing large amounts of text data, including social media content and news articles. The AI then employs explainability techniques to describe what features in a text document indicated that it was fake.

The 12th team in the XAI initiative is a group of cognitive psychologists at the Institute for Human-Machine Cognition in Florida. “They are not developing an explainable AI system. They are just there to dig through all the literature on the psychology of explanation and make the most practical knowledge from all that literature available to the developers. They also help us with figuring out how to measure the effectiveness of the explanations as we do the evaluation,” Gunning says.

A Robot That Explains Its Actions

UCLA has been working on a project that aims to disentangle explainability from task performance, measuring each separately to gauge the advantages and limitations of two major families of representations—symbolic representations and data-driven representations—in both task performance and fostering human trust. The goals are to explore (i) what constitutes a good performer for a complex robot manipulation task and (ii) how to construct an effective explainer that explains robot behavior and fosters human trust.

UCLA’s Baxter robot learned how to open a safety-cap medication bottle (tricky for robots and humans alike) by learning a manipulation model from haptic demonstrations provided by humans opening medication bottles while wearing a sensorized glove. This was combined with a symbolic action planner to allow the robot to adjust its actions to adapt to bottles with different kinds of caps, and it does a good job without the inherent mystery of a neural network.

Intuitively, such an integration of the symbolic planner and haptic model enables the robot to ask itself: “On the basis of the human demonstration, the poses and forces I perceive right now, and the action sequence I have executed thus far, which action has the highest likelihood of opening the bottle?”
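One way to read that question is as a choice of the action with the highest combined score from the two models. The sketch below assumes the two sources are combined by multiplying a planner prior with a haptic likelihood and normalizing. That product rule, the action names, and every number are assumptions made for illustration, not details confirmed by the text above.

```python
def choose_action(planner_prior, haptic_likelihood):
    """Pick the action maximizing prior(action | history) * likelihood(action | percept)."""
    scores = {action: planner_prior[action] * haptic_likelihood.get(action, 0.0)
              for action in planner_prior}
    total = sum(scores.values())
    if total == 0:
        raise ValueError("no action is supported by both models")
    posterior = {action: score / total for action, score in scores.items()}
    best = max(posterior, key=posterior.get)
    return best, posterior


# Made-up numbers for a single step of opening a bottle.
planner_prior = {"push": 0.5, "twist": 0.4, "pull": 0.1}      # from the action sequence so far
haptic_likelihood = {"push": 0.2, "twist": 0.9, "pull": 0.5}  # from current poses and forces
action, posterior = choose_action(planner_prior, haptic_likelihood)
print(action)  # twist
```

Here the planner alone would favor "push", but the sensed forces shift the decision to "twist". Keeping the two terms separate is also what lets each one be surfaced as its own explanation.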

Both the haptic model and the symbolic planner can be leveraged to provide human-compatible explanations of what the robot is doing. The haptic model can visually explain an individual action that the robot is taking, while the symbolic planner can show a sequence of actions that are (ideally) leading towards a goal. What’s key here is that these explanations are coming from the planning system itself, rather than something that’s been added later to try and translate between a planner and a human.

To figure out whether these explanations made a difference in the level of a human’s trust or confidence or belief that the robot would be successful at its task, the researchers conducted a psychological study with 150 participants. Survey results showed that the highest trust rating came from the group that had access to both the symbolic and haptic explanations, although the symbolic explanation was more impactful. In general, humans appear to need real-time, symbolic explanations of the robot’s internal decisions for performed action sequences to establish trust in machines performing multistep complex tasks… Information at the haptic level may be excessively tedious and may not yield a sense of rational agency that allows the robot to gain human trust. To establish human trust in machines and enable humans to predict robot behaviors, it appears that an effective explanation should provide a symbolic interpretation and maintain a tight temporal coupling between the explanation and the robot’s immediate behavior.

This paper focuses on a very specific interpretation of the word “explain.” The robot is able to explain what it’s doing (i.e. the steps that it’s taking) in a way that is easy for humans to interpret, and it’s effective in doing so. However, it’s really just explaining the “what” rather than the “why,” because at least in this case, the “why” (as far as the robot knows) is really just “because a human did it this way” due to the way the robot learned to do the task. While the “what” explanations did foster more trust in humans in this study, long term, XAI will need to include “why” as well, and the example of the robot unscrewing a medicine bottle illustrates a situation in which it would be useful.

Longer-term, the UCLA researchers are working on the “why” as well, but it’s going to take a major shift in the robotics community for even the “what” to become a priority. The fundamental problem is that right now, roboticists in general are relentlessly focused on optimization for performance—who cares what’s going on inside your black box system as long as it can successfully grasp random objects 99.9 percent of the time?

But people should care, says lead author of the UCLA paper Mark Edmonds. “I think that explanation should be considered along with performance,” he says. “Even if you have better performance, if you’re not able to provide an explanation, is that actually better?” He added: “The purpose of XAI in general is not to encourage people to stop going down that performance-driven path, but to instead take a step back, and ask, ‘What is this system really learning, and how can we get it to tell us?’ ”

Evaluation

XAI’s first phase spans over 18 months of work since the program’s launch. At the end of this phase, each of the teams will do an evaluation of their work. The evaluation will consist of two steps. First, each team will build an AI system, show it to the test user without the explanation, and use a variety of measures to evaluate how effective and reliable the model is. Next, the team will show the same or a different group of users the AI system with the explanation, take the same set of measures, and evaluate how effective the explanation is.

The goal is to see whether the explanation tools improve users’ mental models and whether they enable users to better predict what the system would do in a new situation. The teams will also evaluate whether the AI explanation tools assist users in their task performance and whether users develop better trust of the AI system because of the explanation.
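The two-step comparison can be sketched as a difference in one measure between the two conditions. The measure chosen here (the fraction of system decisions each user predicted correctly), the function name `explanation_gain`, and all of the scores are placeholders invented for illustration; they are not results from the XAI program.

```python
from statistics import mean


def explanation_gain(without_explanation, with_explanation):
    """Difference in mean score between the explained and unexplained conditions."""
    return mean(with_explanation) - mean(without_explanation)


# Placeholder scores: fraction of system decisions each user predicted correctly.
# Invented for illustration only; NOT data from the XAI evaluations.
baseline_group = [0.50, 0.60, 0.55, 0.55]
explained_group = [0.70, 0.80, 0.75, 0.75]

gain = explanation_gain(baseline_group, explained_group)
print(f"mean improvement with explanations: {gain:.2f}")
```

A real evaluation would also need enough participants and a significance test before attributing the difference to the explanation, and it would repeat the comparison for the other measures mentioned above, such as task performance and trust.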

“The user should have a much more refined idea of when it can and can’t trust the system. So instead of blindly trusting or mistrusting the system, they have a much more calibrated idea of how to use the AI,” Gunning says. In the first phase, the development teams design their own evaluation problems. In some cases, it’s a data analytics problem, such as a visual question-answering problem or recognizing patterns in videos and images.

In the autonomy case, it’s a variety of simulated autonomous systems. One of the groups has a simulation environment. Their problem is to explain decisions made by an autonomous system trained to find lost hikers in a national park. The UC Berkeley group is using a driving simulator where they’re having the AI system generate explanations of why it is making particular turns or other decisions. “In most of these cases, especially in this first year, the teams are finding Mechanical Turkers or college sophomores as the subjects of these experiments. Most of the problems are designed to be ones where you don’t need a lot of in-depth domain knowledge. Most of the people could be easily trained for the problem they’re trying to solve,” Gunning says.

In future phases, the projects will inch closer toward real DoD scenarios that must be tested by real military personnel. “We might actually get some test data of overhead satellite video that would be unclassified and would be closer to what a DoD intel analyst would be using,” Gunning says. Also, as the program develops, the test problems will be consolidated and generalized into four or five common problems so the teams can get a comparison of the technologies and their performance.

XAI has already wrapped up the first phase of its work and the teams are processing their tests. “We’ll have our next program meeting in February at Berkeley, where we’ll get presentations from all the groups. DARPA will put out a consolidated report with all those results,” Gunning says. “We’re getting some initial interesting results from all of these players. There are all sorts of rough edges that we’re dealing with in the bugs, the technology and the challenges, as well as how you measure the effectiveness of the explanation and what are really good test problems to tease that out. That’s not as obvious as you think it might be.”

After February, XAI will have another two and a half years of the program to iterate. The teams will do another evaluation each year and gradually improve the technology, see what works and what doesn’t. “This is very much an exploration of a lot of techniques. We’ll see which ones are ready for prime time, then spin-off some transition or engineering projects to take the most promising ideas and insert them into some defense application,” Gunning says.

On August 28, Raytheon partnered with DARPA on its Explainable Question Answering System (EQUAS), which will allow AI programs to “show their work,” increasing the human user’s confidence in the machine’s suggestions. “Our goal is to give the user enough information about how the machine’s answer was derived and show that the system considered relevant information so users feel comfortable acting on the system’s recommendation,” said Bill Ferguson, lead scientist and EQUAS principal investigator at Raytheon BBN, in a statement.

EQUAS will show users which data mattered most in the AI decision-making process. Using a graphical interface, users can explore the system’s recommendations and see why it chose one answer over another. The technology is still in its early phases of development but could potentially be used for a wide range of applications.

References and resources also include:

https://www.fbo.gov/spg/ODA/DARPA/CMO/HR001117S0016/listing.html

http://www.darpa.mil/program/explainable-artificial-intelligence

https://futurism.com/darpa-working-make-ai-more-trustworthy/

https://www.darpa.mil/news-events/2018-05-03

https://sociable.co/technology/darpa-ai-national-defense/

https://bdtechtalks.com/2019/01/10/darpa-xai-explainable-artificial-intelligence/

International Defense Security & Technology Your trusted Source for News, Research and Analysis
