Introduction:

The world is on the brink of a quantum revolution, as quantum computers inch closer to becoming a reality. With their unprecedented computational power, quantum computers pose a significant threat to the cryptographic algorithms that currently protect our digital infrastructure. To address this imminent danger, the need for quantum-proof cryptography has never been more critical. Recognizing the urgency, the National Institute of Standards and Technology (NIST) has taken a proactive role in standardizing post-quantum cryptographic algorithms to safeguard our digital future.

Cryptography, which relies on mathematical problems that are challenging for conventional computers to solve, forms the basis of our protection systems. However, the advent of quantum computers threatens the security provided by current cryptographic protocols. While today’s quantum computers are not yet powerful enough to break existing encryption, it is crucial to prepare for the future when they will be.

Researchers have made significant progress in quantum computing, with companies like Google and IBM developing quantum processors with a high number of qubits. Once large-scale quantum computers become a reality, they will have the capability to break many of the public-key cryptosystems in use today. Experts predict that within the next decade, quantum computers could potentially break widely adopted encryption schemes like RSA-2048.

The consequences of quantum computing breaking encryption systems are profound, as it would compromise the confidentiality and integrity of our digital infrastructure. Critical aspects such as internet payments, banking transactions, emails, and phone conversations would be at risk. Given the need to protect data for extended periods (10 to 50 years), organizations must start planning for a transition to quantum-resistant cryptographic solutions now.

It is worth noting that intelligence agencies are also investing in quantum computers to exploit their potential for breaking encryption used by adversaries. The ongoing debate centers around balancing the need for law enforcement to access information with the protection of individuals’ privacy.

In conclusion, although the exact timeframe for the arrival of the quantum computing era remains uncertain, the importance of preparing for it is clear. Organizations must take steps to secure their information systems against quantum threats, ensuring the confidentiality, integrity, and availability of sensitive data in the face of evolving technology. The transition to quantum-resistant cryptography is essential to safeguard our digital infrastructure and maintain trust in the digital age.

Vulnerability of Asymmetric cryptography or public-key cryptography

There are two approaches for encrypting data, private-key encryption and public-key encryption. In private-key encryption, users share a key. This approach is more secure and less vulnerable to quantum technology, but it is also less practical to use in many cases.

The public-key encryption system is based on two keys, one that is kept secret, and another that is available to all. While the public key is widely distributed, private keys are computed using mathematical algorithms. Therefore everyone can send encrypted emails to a recipient, who is the only one able to read them. Because data is encrypted with the public key but decrypted with the private key, it is a form of “asymmetric cryptography”.

Public-key cryptography, also known as asymmetric cryptography, is widely used for secure communication. It relies on the difficulty of certain mathematical problems, such as factorization and discrete logarithms, to provide encryption and key exchange capabilities. However, the emergence of quantum computers poses a significant threat to the security of public-key cryptography.
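To make the dependence on factorization concrete, here is a minimal sketch of textbook RSA with deliberately tiny primes. The primes, the exponent, and the function names are illustrative assumptions, not taken from any real deployment, and real RSA uses padding and moduli of 2048 bits or more. The sketch shows that anyone who can factor the public modulus can recompute the private key.

```python
from math import gcd


def toy_rsa_keypair(p, q, e=17):
    """Build a toy RSA key pair from two small primes (illustrative sizes only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    assert gcd(e, phi) == 1, "e must be invertible modulo phi"
    d = pow(e, -1, phi)  # modular inverse, available in Python 3.8+
    return (n, e), (n, d)


def encrypt(public_key, m):
    """Encrypt an integer message with the public key."""
    n, e = public_key
    return pow(m, e, n)


def decrypt(private_key, c):
    """Decrypt an integer ciphertext with the private key."""
    n, d = private_key
    return pow(c, d, n)


def recover_private_key(public_key):
    """Attack sketch: factor n by trial division, then recompute d."""
    n, e = public_key
    p = next(k for k in range(2, n) if n % k == 0)
    q = n // p
    return n, pow(e, -1, (p - 1) * (q - 1))


public, private = toy_rsa_keypair(61, 53)
ciphertext = encrypt(public, 65)
print(decrypt(private, ciphertext))            # original message
print(recover_private_key(public) == private)  # factoring reveals the key
```

Trial division only works here because the modulus is tiny. For real key sizes no efficient classical factoring method is known, and that gap is what a large quantum computer would close.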

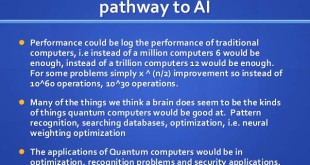

Quantum computers have the potential to break the cryptographic algorithms used in public-key systems by efficiently solving mathematical problems that are currently considered intractable for classical computers. By harnessing quantum superposition to represent multiple states simultaneously, quantum computers promise dramatic speedups over today’s traditional computers on certain problems. Algorithms like Shor’s algorithm can factor large numbers and compute discrete logarithms at a speed that would render public-key encryption vulnerable.
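As a rough illustration of how Shor’s algorithm factors a number, the sketch below runs its classical skeleton: find the multiplicative order of a base modulo N, then take two greatest common divisors. The order-finding loop here is brute force, which is exactly the step a quantum computer would speed up; the function names and the example values (N = 15, base 7) are assumptions chosen for illustration.

```python
from math import gcd


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (brute force; the quantum step in Shor)."""
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r


def factor_via_order(n, a):
    """Try to split n using the order of a modulo n; return None if a is unlucky."""
    g = gcd(a, n)
    if g != 1:
        return tuple(sorted((g, n // g)))  # the base already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: pick another base
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # trivial square root of 1: pick another base
    p = gcd(y - 1, n)
    return tuple(sorted((p, n // p)))


print(factor_via_order(15, 7))
```

Everything except `find_order` is cheap on a classical computer. The exponential cost sits entirely in the order-finding loop, which is why replacing it with a quantum subroutine changes the picture for RSA and discrete-logarithm systems.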

While today’s quantum computers are not yet powerful enough to break existing encryption, ongoing advancements in quantum technology and research suggest that larger and more complex quantum computers capable of executing Shor’s algorithm could be developed within the next few decades.

Despite the progress in quantum computing, challenges remain in building large-scale and error-tolerant quantum computers. Quantum systems are highly sensitive to noise, temperature changes, and vibrations, requiring a significant number of linked physical qubits to create reliable logical qubits for computation. “To factor [crack] a 1,024-bit number [encryption key], you need only 2,048 ideal qubits,” said Bart Preneel, professor of cryptography at KU Leuven University in Belgium. “So you would think we are getting close, but the qubits announced are physical qubits and there are errors, so they need about 1,000 physical qubits to make one logical [ideal] qubit. So to scale this up, you need 1.5 million physical qubits. This means quantum computers will not be a threat to cryptography any time soon.”
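The scaling argument in the quote can be checked with a back-of-the-envelope calculation. The sketch below simply multiplies the two rounded figures Preneel gives; the function name is an assumption, and the result is an order-of-magnitude estimate rather than a hardware forecast.

```python
def physical_qubits_needed(logical_qubits, physical_per_logical):
    """Rough physical-qubit count for a given error-correction overhead."""
    return logical_qubits * physical_per_logical


# Figures from the quote: 2,048 ideal qubits, about 1,000 physical qubits each.
estimate = physical_qubits_needed(2_048, 1_000)
print(f"{estimate:,} physical qubits")
```

With these rounded inputs the product is about two million, the same order of magnitude as the 1.5 million quoted. Either way, the requirement is far beyond the qubit counts of announced processors.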

While the exact timeframe for quantum computers to become a threat to cryptography remains uncertain, it is crucial to prepare for the future by developing quantum-resistant cryptographic algorithms. The ongoing research and development in post-quantum cryptography, including NIST’s standardization efforts, aim to create robust and secure cryptographic solutions that can withstand attacks from both classical and quantum adversaries.

In conclusion, the vulnerability of public-key cryptography to quantum computers underscores the need for a proactive approach. Organizations and researchers should continue to advance quantum-resistant cryptographic measures to ensure the long-term security and integrity of sensitive data and communication in the quantum computing era.

Some experts even predict that within the next 20 or so years, sufficiently large quantum computers will be built to break essentially all public-key schemes currently in use. Researchers working on building a quantum computer have estimated that it is likely that a quantum computer capable of breaking 2000-bit RSA in a matter of hours could be built by 2030 for a budget of about a billion dollars.

For a deeper understanding of the quantum computing threat and post-quantum cryptography, please see: Quantum Computing and Post-Quantum Cryptography: Safeguarding Digital Infrastructure in the Quantum Era

Security Risk

While quantum computing promises unprecedented speed and power in computing, it also poses new risks. As this technology advances over the next decade, it is expected to break some encryption methods that are widely used to protect customer data, complete business transactions, and secure communications. Thus a sufficiently powerful quantum computer will put many forms of modern communication—from key exchange to encryption to digital authentication—in peril.

NSA, whose mission is to protect vital US national security information and systems from theft or damage, is also advising US agencies and businesses to prepare for a time in the not-too-distant future when the cryptography protecting virtually all e-mail, medical and financial records, and online transactions is rendered obsolete by quantum computing.

Post-quantum Cryptography

Organizations are now working on post-quantum cryptography (also called quantum-resistant cryptography), whose aim is to develop cryptographic systems that are secure against both quantum and classical computers, and can interoperate with existing communications protocols and networks.

There is also a need to speed up preparations for the time when super-powerful quantum computers can crack conventional cryptographic defenses. The widespread adoption of quantum-resistant cryptography will be a long and difficult process that could take 20 years to complete. The first step is to create these new algorithms, then standardize them, integrate them into protocols, then integrate the protocols into products, said Bill Becker, vice president of product management at SafeNet AT, a provider of information assurance to government customers.

NIST launched an international competition in 2017

Historically, it has taken almost two decades to deploy our modern public key cryptography infrastructure. It’s possible that highly capable quantum machines will appear before then, and if hackers get their hands on them, the result could be a security and privacy nightmare.

“Therefore, regardless of whether we can estimate the exact time of the arrival of the quantum computing era, we must begin now to prepare our information security systems to be able to resist quantum computing,” says NIST. Consequently, the search for algorithms believed to be resistant to attacks from both classical and quantum computers has focused on public key algorithms.

The US National Institute of Standards and Technology (NIST) will play a leading role in the effort to develop a widely accepted, standardized set of quantum-resistant algorithms. The NIST competition is not limited to encryption. Other algorithms will have to handle signatures, in other words authenticate the source of a message without being susceptible to forgery. In both cases, the criteria clearly include security, but also the system’s speed and fluidity.

The NIST, which is in charge of establishing various technological and measurement standards in the United States, launched an international competition in 2017 to build scientific consensus regarding post-quantum cryptography. This process has entered its third and final phase, with both academic and industrial researchers contributing to the effort. Among the sixty-nine initial submissions, the NIST selected those that would make it to the following stage of the competition based on criteria such as security, performance, and the characteristics of the implementation. It also took into consideration studies published by the scientific community, in addition to possible attacks against each scheme.

The plan is to select the final algorithms in the next couple of years and to make them available in draft form by 2024. So, while organizations can start preparing for post-quantum cryptography now, they will have to wait at least four years to know which algorithm to adopt once NIST has chosen the best submissions for incorporation into a standard.

Symmetric cryptography and Post Quantum Cryptography

In traditional cryptography, there are two forms of encryption: symmetric and asymmetric. Most of today’s computer systems and services such as digital identities, the Internet, cellular networks, and cryptocurrencies use a mixture of symmetric algorithms like AES and SHA-2 and asymmetric algorithms like RSA (Rivest-Shamir-Adleman) and elliptic curve cryptography. The asymmetric parts of such systems would very likely be exposed to significant risk if we experience a breakthrough in quantum computing in the coming decades.

In contrast to the threat quantum computing poses to current public key algorithms, most current symmetric cryptographic algorithms (symmetric ciphers and hash functions) are considered to be relatively secure from attacks by quantum computers. While the quantum Grover’s algorithm does speed up attacks against symmetric ciphers, doubling the key size can effectively block these attacks. Thus post-quantum symmetric cryptography does not need to differ significantly from current symmetric cryptography.
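The effect of Grover’s algorithm on key sizes can be sketched numerically. Grover searches a space of 2^k keys in roughly (π/4)·2^(k/2) iterations, so a k-bit key offers about k/2 bits of security against it. The helper names below are assumptions, and the sketch counts iterations only; it does not model the cost of each iteration or the difficulty of running them on real hardware.

```python
import math


def grover_iterations(key_bits):
    """Approximate number of Grover iterations to search 2**key_bits keys."""
    return (math.pi / 4) * math.sqrt(2 ** key_bits)


def quantum_security_bits(key_bits):
    """Rule of thumb: Grover halves the effective key length."""
    return key_bits // 2


for bits in (128, 256):
    iterations = grover_iterations(bits)
    print(f"AES-{bits}: about 2^{math.log2(iterations):.1f} Grover iterations, "
          f"roughly {quantum_security_bits(bits)} bits of quantum security")
```

Moving from a 128-bit to a 256-bit key restores roughly 128 bits of security against Grover search, which is the sense in which doubling the key size blocks the attack.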

In fact, even a quantum computer capable of breaking RSA-2048 would pose no practical threat to AES-128 whatsoever. Grover’s algorithm applied to AES-128 requires a serial computation of roughly 2^65 AES evaluations that cannot be efficiently parallelized. As quantum computers are also very slow (operations per second), very expensive, and quantum states are hard to transfer from a malfunctioning quantum computer, it seems highly unlikely that even clusters of quantum computers will ever be a practical threat to symmetric algorithms. AES-128 and SHA-256 are both quantum resistant according to the evaluation criteria in the NIST PQC (post quantum cryptography) standardization project.

The good news here, said Preneel, is that the impact for symmetric cryptography is not so bad. “You just have larger encryption keys and then you are done,” he said. The goal of post-quantum cryptography (also called quantum-resistant cryptography) is to develop cryptographic systems that are secure against both quantum and classical computers, and can interoperate with existing communications protocols and networks. NIST has initiated a process to solicit, evaluate, and standardize one or more quantum-resistant public-key cryptographic algorithms.

Quantum Resistant Algorithms

Various avenues are being pursued. NIST has identified the main families for which post-quantum primitives have been proposed. They include those based on lattices, codes, and multivariate polynomials, as well as a handful of others.

One of them, cryptographic lattices, involves finding the shortest vectors between two points on a mesh, in a space with hundreds of dimensions. Each vector, therefore, has a huge number of coordinates, and the problem becomes extremely arduous to solve.

Lattice-based cryptography – Cryptosystems based on lattice problems have received renewed interest, for a few reasons. Exciting new applications (such as fully homomorphic encryption, code obfuscation, and attribute-based encryption) have been made possible using lattice-based cryptography. Most lattice-based key establishment algorithms are relatively simple, efficient, and highly parallelizable. Also, some lattice-based systems are provably secure under a worst-case hardness assumption, rather than an average-case one. On the other hand, it has proven difficult to give precise estimates of the security of lattice schemes against even known cryptanalysis techniques.
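To give a feel for how a lattice-style scheme works, here is a toy single-bit encryption scheme in the style of Regev’s learning-with-errors (LWE) construction, the problem family that schemes such as CRYSTALS-Kyber build on in a more structured form. All parameters (`Q`, `N`, `M`) and function names are illustrative assumptions, far too small to be secure, and this is not the Kyber algorithm itself.

```python
import random

Q = 97  # small modulus (toy value)
N = 8   # dimension of the secret vector
M = 20  # number of noisy equations in the public key


def keygen(rng):
    """Secret s; public key is (A, b = A*s + e mod Q) with small noise e."""
    s = [rng.randrange(Q) for _ in range(N)]
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    e = [rng.choice((-1, 0, 1)) for _ in range(M)]
    b = [(sum(a * si for a, si in zip(row, s)) + ei) % Q
         for row, ei in zip(A, e)]
    return s, (A, b)


def encrypt(public_key, bit, rng):
    """Sum a random subset of the equations and hide the bit near Q/2."""
    A, b = public_key
    subset = [i for i in range(M) if rng.random() < 0.5]
    u = [sum(A[i][j] for i in subset) % Q for j in range(N)]
    v = (sum(b[i] for i in subset) + bit * (Q // 2)) % Q
    return u, v


def decrypt(s, ciphertext):
    """Remove <u, s>; what remains is small noise plus bit * (Q // 2)."""
    u, v = ciphertext
    d = (v - sum(uj * sj for uj, sj in zip(u, s))) % Q
    return 1 if Q // 4 < d < 3 * Q // 4 else 0


rng = random.Random(2024)
secret, public = keygen(rng)
print([decrypt(secret, encrypt(public, bit, rng)) for bit in (0, 1, 1, 0)])
```

Decryption is always correct here because the accumulated noise is at most 20 in absolute value, which is below Q/4. Without the secret, recovering the bit requires solving noisy linear equations, which is the hard problem the security rests on.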

Another approach relies on error-correcting codes, which are used to improve degraded communications, for instance restoring the appearance of a video burned on a DVD that has been damaged. Some of these codes provide a very effective framework for encryption but function quite poorly when it comes to verifying a signature.

Code-based cryptography – In 1978, the McEliece cryptosystem was first proposed, and has not been broken since. Since that time, other systems based on error-correcting codes have been proposed. While quite fast, most code-based primitives suffer from having very large key sizes. Newer variants have introduced more structure into the codes in an attempt to reduce the key sizes, but the added structure has also led to successful attacks on some proposals. While there have been some proposals for code-based signatures, code-based cryptography has seen more success with encryption schemes.

Another solution, multivariate cryptography, is based on a somewhat similar principle by proposing to solve polynomials with so many variables that it is no longer possible to calculate within a reasonable time frame.

Multivariate polynomial cryptography – These schemes are based on the difficulty of solving systems of multivariate polynomials over finite fields. Several multivariate cryptosystems have been proposed over the past few decades, with many having been broken. While there have been some proposals for multivariate encryption schemes, multivariate cryptography has historically been more successful as an approach to signatures.

Lattice constructions are well represented among the finalists, as they work equally well for encryption and signatures. However, not everyone in the community agrees that they should be the focus of attention. NIST prefers exploring a broad spectrum of approaches for establishing its standards. This way, if a particular solution is attacked, the others will remain secure.

For example, the SPHINCS+ algorithm from the Eindhoven University of Technology in the Netherlands, is based on hash functions. A digital fingerprint is ascribed to data, an operation that is extremely difficult to perform in the opposite direction, even using a quantum algorithm. Still, signatures obtained in this way are resource-intensive.

Hash-based signatures – Hash-based signatures are digital signatures constructed using hash functions. Their security, even against quantum attacks, is well understood. Many of the more efficient hash-based signature schemes have the drawback that the signer must keep a record of the exact number of previously signed messages, and any error in this record will result in insecurity. Another drawback is that they can produce only a limited number of signatures. The number of signatures can be increased, even to the point of being effectively unlimited, but this also increases the signature size.
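The idea behind hash-based signatures can be shown with a Lamport one-time signature, one of the simplest constructions in this family. The sketch below is a minimal illustration using SHA-256 from Python’s standard library; the function names are assumptions, and this is not SPHINCS+, XMSS, or LMS, which build many such one-time keys into larger structures.

```python
import hashlib
import secrets


def H(data):
    """SHA-256 digest of a byte string."""
    return hashlib.sha256(data).digest()


def keygen():
    """Private key: 256 pairs of random values. Public key: their hashes."""
    sk = [[secrets.token_bytes(32) for _ in range(2)] for _ in range(256)]
    pk = [[H(value) for value in pair] for pair in sk]
    return sk, pk


def message_bits(message):
    """The 256 bits of the message digest, most significant bit first."""
    digest = H(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk, message):
    """Reveal one secret value per digest bit. The key must never be reused."""
    return [sk[i][bit] for i, bit in enumerate(message_bits(message))]


def verify(pk, message, signature):
    """Check that each revealed value hashes to the matching public value."""
    bits = message_bits(message)
    return all(H(value) == pk[i][bit]
               for i, (value, bit) in enumerate(zip(signature, bits)))


sk, pk = keygen()
signature = sign(sk, b"firmware v1.2")
print(verify(pk, b"firmware v1.2", signature))
print(verify(pk, b"firmware v6.6", signature))
```

Each signature reveals half of the private key, so a key pair can safely sign only one message. This is why practical schemes must either track which keys have been used (the stateful record-keeping drawback described above) or accept larger signatures to avoid keeping state.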

Other – A variety of systems have been proposed which do not fall into the above families. One such proposal is based on evaluating isogenies on supersingular elliptic curves. While the discrete log problem on elliptic curves can be efficiently solved by Shor’s algorithm on a quantum computer, the isogeny problem on supersingular curves has no similar quantum attack known. As with some other proposals, for example those based on the conjugacy search problem and related problems in braid groups, there has not been enough analysis to have much confidence in their security.

There are seven algorithms involving researchers based in France. With regard to encryption, Crystals-Kyber, NTRU, and Saber are based on lattices, while Classic McEliece relies on error-correcting codes. Damien Stehlé, a professor at ENS Lyon and a member of the LIP Computer Science Laboratory, and Nicolas Sendrier, from the INRIA research centre in Paris, are taking part in the competition. In the signature category, the Crystals-Dilithium and Falcon algorithms use lattices, and Rainbow opts for multivariate systems. Stehlé is once again part of the team, as are Pierre-Alain Fouque, a professor at Rennes 1 and member of the IRISA laboratory, as well as Jacques Patarin, a professor at the UVSQ (Université de Versailles-Saint-Quentin-en-Yvelines), writes researcher Adeline Roux-Langlois.

“I focus on lattice-based cryptography, in which I provide theoretical proof that the security of cryptographic constructions is based on problems that are hard enough to be reliable. Encryption and signature are the first applications that come to mind, but it could also be used to ensure the very particular confidentiality of electronic voting, for which the authenticity of votes must be verified before counting, while not revealing who voted for whom. I am also working on anonymous authentication, which for example enables individuals to prove that they belong to a group without disclosing other information, or that they are adults without giving their age or date of birth,” writes Roux-Langlois.

NIST Announces First Four Quantum-Resistant Cryptographic Algorithms

The U.S. Department of Commerce’s National Institute of Standards and Technology (NIST) has chosen the first group of encryption tools that are designed to withstand the assault of a future quantum computer, which could potentially crack the security used to protect privacy in the digital systems we rely on every day — such as online banking and email software. The four selected encryption algorithms will become part of NIST’s post-quantum cryptographic standard, expected to be finalized in about two years.

The announcement follows a six-year effort managed by NIST, which in 2016 called upon the world’s cryptographers to devise and then vet encryption methods that could resist an attack from a future quantum computer that is more powerful than the comparatively limited machines available today. The selection constitutes the beginning of the finale of the agency’s post-quantum cryptography standardization project.

The algorithms are designed for two main tasks for which encryption is typically used: general encryption, used to protect information exchanged across a public network; and digital signatures, used for identity authentication. All four of the algorithms were created by experts collaborating from multiple countries and institutions.

For general encryption, used when we access secure websites, NIST has selected the CRYSTALS-Kyber algorithm. Among its advantages are comparatively small encryption keys that two parties can exchange easily, as well as its speed of operation.

For digital signatures, often used when we need to verify identities during a digital transaction or to sign a document remotely, NIST has selected the three algorithms CRYSTALS-Dilithium, FALCON and SPHINCS+ (read as “Sphincs plus”). Reviewers noted the high efficiency of the first two, and NIST recommends CRYSTALS-Dilithium as the primary algorithm, with FALCON for applications that need smaller signatures than Dilithium can provide. The third, SPHINCS+, is somewhat larger and slower than the other two, but it is valuable as a backup for one chief reason: It is based on a different math approach than all three of NIST’s other selections.

Three of the selected algorithms are based on a family of math problems called structured lattices, while SPHINCS+ uses hash functions. The additional four algorithms still under consideration are designed for general encryption and do not use structured lattices or hash functions in their approaches.

While the standard is in development, NIST encourages security experts to explore the new algorithms and consider how their applications will use them, but not to bake them into their systems yet, as the algorithms could change slightly before the standard is finalized.

To prepare, users can inventory their systems for applications that use public-key cryptography, which will need to be replaced before cryptographically relevant quantum computers appear. They can also alert their IT departments and vendors about the upcoming change. To get involved in developing guidance for migrating to post-quantum cryptography, see NIST’s National Cybersecurity Center of Excellence project page.
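An inventory of this kind can start as a simple classification of the algorithms each system uses. The sketch below is a hypothetical example: the system names, the inventory contents, and the category labels are all assumptions, and the grouping follows the distinction drawn in this article between public-key algorithms (to be replaced) and symmetric ciphers and hashes (to be reviewed for key or output size).

```python
# Algorithm families whose security rests on factoring or discrete logarithms.
PUBLIC_KEY_FAMILIES = {"RSA", "DH", "DSA", "ECDH", "ECDSA"}
# Symmetric ciphers and hash functions, affected only by Grover-style speedups.
SYMMETRIC_FAMILIES = {"AES", "SHA"}


def classify(algorithm):
    """Map a name such as 'RSA-2048' to a migration category."""
    family = algorithm.upper().split("-")[0]
    if family in PUBLIC_KEY_FAMILIES:
        return "replace"
    if family in SYMMETRIC_FAMILIES:
        return "review size"
    return "unknown"


# Hypothetical inventory: system name -> algorithms in use.
inventory = {
    "web-frontend": ["RSA-2048", "AES-128", "SHA-256"],
    "vpn-gateway": ["ECDH-P384", "AES-256"],
    "code-signing": ["ECDSA-P256"],
}

for system, algorithms in inventory.items():
    to_replace = [a for a in algorithms if classify(a) == "replace"]
    print(f"{system}: replace {to_replace or 'nothing'}")
```

A real inventory would be gathered from certificates, protocol configurations, and vendor documentation rather than typed in by hand, but the same classification step applies once the algorithm names are known.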

All of the algorithms are available on the NIST website.

Quantum key distribution (QKD) as the alternative

In addition to post-quantum cryptography running on classical computers, researchers in quantum networking are looking at quantum key distribution (QKD), which would theoretically be a provably secure way to do unauthenticated key exchange. QKD is, however, not useful for the other use cases where cryptography is used today, such as encryption, integrity protection, or authentication. It also requires new hardware and is very expensive compared to software-based algorithms running on classical computers.

In a well-written white paper, the UK government is discouraging use of QKD stating that it seems to be introducing new potential avenues for attack, that the hardware dependency is not cost-efficient, that QKD’s limited scope makes it unsuitable for future challenges, and that post-quantum cryptography is a better alternative. QKD will likely remain a niche product until quantum networks are needed for non-security reasons.

Standardizing post-quantum cryptographic algorithms

The US National Institute of Standards and Technology (NIST) is currently standardizing stateless quantum-resistant signatures, public-key encryption, and key-establishment algorithms and is expected to release the first draft publications between 2022–2024. After this point, the new standardized algorithms will likely be added to security protocols like X.509, IKEv2, TLS and JOSE and deployed in various industries. The IETF crypto forum research group has finished standardizing two stateful hash-based signature algorithms, XMSS and LMS which are also expected to be standardized by NIST. XMSS and LMS are the only post-quantum cryptographic algorithms that could currently be considered for production systems e.g. for firmware updates.

The US government is currently using the Commercial National Security Algorithm Suite for protection of information up to ‘top secret’. They have already announced that they will begin a transition to post-quantum cryptographic algorithms following the completion of standardization in 2024. Why should the industry be taking note of this decision? ‘Top secret’ information is often protected for 50 to 75 years, so the fact that the US government is not planning to finalize the transition to post-quantum cryptography until perhaps 2030 seems to indicate that they are quite certain that quantum computers capable of breaking P-384 and RSA-3072 will not be available for many decades.

NSA Guidance

NSA guidance advises using the same regimen of algorithms and key sizes that have been recommended for years. Those include 256-bit keys with the Advanced Encryption Standard, Curve P-384 with Elliptic Curve Diffie-Hellman key exchange and Elliptic Curve Digital Signature Algorithm, and 3072-bit keys with RSA encryption. But for those who have not yet incorporated one of the NSA’s publicly recommended cryptographic algorithms—known as Suite B in NSA parlance—last week’s advisory recommends holding off while officials plot a new move to crypto algorithms that will survive a postquantum world.

“Our ultimate goal is to provide cost effective security against a potential quantum computer,” officials wrote in a statement posted online. “We are working with partners across the USG, vendors, and standards bodies to ensure there is a clear plan for getting a new suite of algorithms that are developed in an open and transparent manner that will form the foundation of our next Suite of cryptographic algorithms.”

Challenges of post-quantum transition

The replacement of current cryptographic standards with new post-quantum standards presents significant technical challenges due to worldwide interconnectedness and established protocols. Although purchasing post-quantum encryption solutions available on the market today could prove tempting as a way to reduce quantum computing risks, doing so may create a more challenging transition to the NIST-approved standard in 2024 at a significant cost.

It’s going to be a huge amount of effort to develop, standardize, and deploy entirely new classes of algorithms. Previous transitions from weaker to stronger cryptography have been based on the bits-of-security paradigm, which measures the security of an algorithm based on the time-complexity of attacking it with a classical computer (e.g. an algorithm is said to have 128 bits of security if the difficulty of attacking it with a classical computer is comparable to the time and resources required to brute-force search for a 128-bit cryptographic key).
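The bits-of-security measure can be turned into a rough time estimate. The sketch below assumes a hypothetical attacker who tests one trillion keys per second; that rate and the function name are assumptions chosen only to show the scale involved.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def brute_force_years(security_bits, guesses_per_second=1e12):
    """Years needed to try all 2**security_bits keys at the assumed rate."""
    return 2 ** security_bits / guesses_per_second / SECONDS_PER_YEAR


for bits in (64, 80, 128):
    print(f"{bits} bits: about {brute_force_years(bits):.3g} years")
```

Each additional bit doubles the work, which is why moving from 80 to 128 bits changes an attack from very expensive to out of reach for classical computers.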

“The transition to post-quantum encryption algorithms is as much dependent on the development of such algorithms as it is on their adoption. While the former is already ongoing, planning for the latter remains in its infancy. We must prepare for it now to protect the confidentiality of data that already exists today and remains sensitive in the future.” – U.S. Secretary of Homeland Security, Alejandro Mayorkas, March 31, 2021

DHS Transition to Post-Quantum Cryptography

The Department of Homeland Security (DHS), in partnership with the Department of Commerce’s National Institute of Standards and Technology (NIST), has released a roadmap to help organizations protect their data and systems and to reduce risks related to the advancement of quantum computing technology.

DHS’s Cybersecurity and Infrastructure Security Agency (CISA) is conducting a macro-level assessment of priority National Critical Functions to determine where post-quantum cryptography transition work is underway, where the greatest risk resides, and what sectors of National Critical Functions may require Federal support.

DHS’s new guidance will help organizations prepare for the transition to post-quantum cryptography by identifying, prioritizing, and protecting potentially vulnerable data, algorithms, protocols, and systems.

“Unfortunately, the bits-of-security paradigm does not take into account the security of algorithms against quantum cryptanalysis, so it is inadequate to guide our transition to quantum-resistant cryptography. There is not yet a consensus view on what key lengths will provide acceptable levels of security against quantum attacks,” says NIST. “At the same time, this recommendation does not take into account the possibility of more sophisticated quantum attacks, our understanding of quantum cryptanalysis remains rather limited, and more research in this area is urgently needed.”

Recent reports from academia and industry now say that large-scale cryptography-breaking quantum computers are highly unlikely during the next decade. There has also been general agreement that quantum computers do not pose a large threat to symmetric algorithms. Standardization organizations like IETF and 3GPP and various industries are now calmly awaiting the outcome of the NIST PQC standardization.

Quantum computers will likely be highly disruptive for certain industries, but will probably not pose a practical threat to asymmetric cryptography for many decades and will likely never be a practical threat to symmetric cryptography. Companies that need to protect information or access for a very long time should start thinking about post-quantum cryptography. But as long as the US government protects ‘top secret’ information with elliptic curve cryptography and RSA, they are very likely good enough for basically any other non-military use case.

The Importance of Standardization:

Standardization plays a crucial role in the adoption and implementation of post-quantum cryptographic algorithms. It ensures interoperability, compatibility, and widespread acceptance of quantum-resistant solutions across different systems, platforms, and industries. Standardization efforts by NIST provide organizations with a roadmap for transitioning to quantum-proof cryptography, enabling them to future-proof their digital infrastructure.

Preparing for the Quantum Era:

The transition to quantum-proof cryptography requires careful planning and implementation. Organizations must assess their digital infrastructure, identify vulnerabilities, and develop strategies to migrate from vulnerable cryptographic algorithms to quantum-resistant alternatives. By initiating the transition process early, organizations can stay ahead of potential quantum threats, maintain trust with customers and partners, and ensure the long-term security of sensitive information.

Conclusion:

The quantum computing threat poses a significant challenge to the security of our digital infrastructure. To address this challenge, the adoption of quantum-proof cryptography is crucial. The ongoing efforts by NIST in standardizing post-quantum cryptographic algorithms provide a solid foundation for the development and implementation of robust quantum-resistant solutions. By proactively preparing for the quantum era, organizations can safeguard their digital infrastructure, protect sensitive information, and uphold the trust and security that underpin our digital world. Embracing quantum-proof cryptography is not just a necessity but an opportunity to fortify the foundations of our digital future.


International Defense Security & Technology Your trusted Source for News, Research and Analysis
