Electronic computers are extremely powerful at performing large numbers of operations sequentially at very high speed. However, they struggle with combinatorial tasks that could be solved faster if many operations were performed in parallel, for example in cryptography and mathematical optimisation, which require the computer to test a large …
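As a minimal sketch of why such tasks lend themselves to parallelism, the hypothetical `parallel_subset_sum` below brute-forces a subset-sum instance (a stand-in combinatorial problem chosen for illustration, not taken from the article). It splits the 2^n candidate subsets into contiguous ranges and checks each range in a separate worker. Python threads are used only to show the partitioning; under CPython's global interpreter lock a `ProcessPoolExecutor` would be needed for a real CPU speed-up, and parallelism divides the work without reducing the total number of candidates.

```python
from concurrent.futures import ThreadPoolExecutor


def check_range(values, target, start, stop):
    """Return every bitmask in [start, stop) whose selected values sum to target."""
    hits = []
    for mask in range(start, stop):
        # Bit i of the mask decides whether values[i] is in the candidate subset.
        total = sum(v for i, v in enumerate(values) if (mask >> i) & 1)
        if total == target:
            hits.append(mask)
    return hits


def parallel_subset_sum(values, target, workers=4):
    """Hypothetical helper: brute-force subset sum with the search space split across workers."""
    n_masks = 1 << len(values)      # 2**n candidate subsets
    step = -(-n_masks // workers)   # ceiling division so every mask is covered
    ranges = [(s, min(s + step, n_masks)) for s in range(0, n_masks, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(check_range, values, target, a, b) for a, b in ranges]
        # Collect results in range order so the output is deterministic.
        masks = [m for f in futures for m in f.result()]
    return [[v for i, v in enumerate(values) if (m >> i) & 1] for m in masks]


print(parallel_subset_sum([3, 34, 4, 12, 5, 2], 9))  # [[4, 5], [3, 4, 2]]
```

Each worker tests its own slice of the search space independently, which is exactly the property that parallel hardware exploits; a sequential machine has to walk all 2^n masks one after another.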

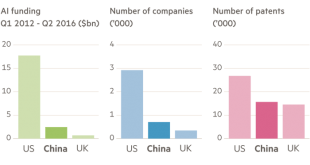

AI race among China, the United States, the European Union and other countries to boost competitiveness, increase productivity and protect national security

Many nations are racing to achieve a global innovation advantage in artificial intelligence (AI) because they understand that AI is a foundational technology that can boost competitiveness, increase productivity, protect national security, and help solve societal challenges. Nations whose firms fail to develop successful AI products or services are …

Microprocessor industry sees evolution from the ARM RISC architecture to the open standards RISC-V and MIPS

An ARM processor is one of a family of CPUs based on the RISC (reduced instruction set computer) architecture developed by Advanced RISC Machines (ARM). RISC processors are designed to execute a smaller set of instruction types so that they can operate at a higher speed, performing more …
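The toy interpreter below is a hedged illustration of the reduced-instruction-set idea, not ARM's actual instruction set: the five-opcode ISA (`LOAD`, `STORE`, `ADD`, `ADDI`, `BNEZ`), the `run` function and `SUM_PROGRAM` are all hypothetical. Like many RISC designs it is a load/store architecture, so arithmetic operates only on registers and memory is touched only by explicit loads and stores. Register r0 is hard-wired to zero, as in RISC-V and MIPS.

```python
def run(program, memory, num_regs=8):
    """Execute a program written in a hypothetical five-opcode RISC-style ISA."""
    regs = [0] * num_regs
    pc = 0
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        if op == "LOAD":      # rd <- memory[rs + imm]
            rd, rs, imm = args
            regs[rd] = memory[regs[rs] + imm]
        elif op == "STORE":   # memory[rs + imm] <- rsrc
            rsrc, rs, imm = args
            memory[regs[rs] + imm] = regs[rsrc]
        elif op == "ADD":     # rd <- ra + rb (registers only)
            rd, ra, rb = args
            regs[rd] = regs[ra] + regs[rb]
        elif op == "ADDI":    # rd <- ra + immediate
            rd, ra, imm = args
            regs[rd] = regs[ra] + imm
        elif op == "BNEZ":    # if ra != 0: jump to instruction index target
            ra, target = args
            if regs[ra] != 0:
                pc = target
        else:
            raise ValueError(f"unknown opcode {op!r}")
        regs[0] = 0  # r0 is hard-wired to zero
    return regs


# Sum memory[0..3] into r1 and store the result at memory[4].
SUM_PROGRAM = [
    ("ADDI", 3, 0, 4),    # r3 = 4 (loop counter)
    ("ADDI", 2, 0, 0),    # r2 = 0 (pointer)
    ("LOAD", 4, 2, 0),    # r4 = memory[r2]
    ("ADD", 1, 1, 4),     # r1 += r4
    ("ADDI", 2, 2, 1),    # r2 += 1
    ("ADDI", 3, 3, -1),   # r3 -= 1
    ("BNEZ", 3, 2),       # loop back to the LOAD while r3 != 0
    ("STORE", 1, 0, 4),   # memory[4] = r1
]

memory = [5, 10, 20, 7, 0]
regs = run(SUM_PROGRAM, memory)
print(memory[4])  # 42
```

Each opcode does one simple thing, which is what keeps instruction decoding simple in hardware; a CISC machine might instead offer a single instruction that adds a memory operand directly to a register.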

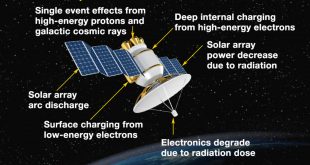

One of the biggest threats to aerospace and defense electronics on deep-space missions is radiation

One of the biggest threats to deployed aerospace and defense electronic systems is radiation. Outside the protective cover of the Earth’s atmosphere, the solar system is filled with radiation. The natural space environment consists of electrons and protons trapped by Earth’s magnetic field, protons and a small amount of heavy nuclei …

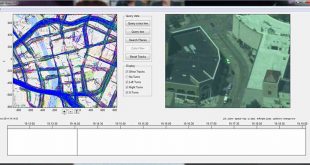

Wide-area Airborne Motion Imagery (WAMI) offers the military persistent, real-time surveillance for enhanced situational awareness through an intelligent airborne sensor system

The DoD has become increasingly reliant on intelligence, surveillance and reconnaissance (ISR) applications. With the advent of expanded ISR capabilities, there is a pressing need to dramatically expand the real-time processing of wide-area, high-resolution video imagery, especially for target recognition and tracking a large number of objects. Not only is …

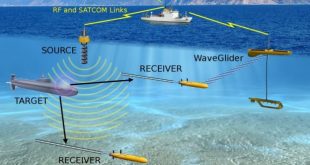

DARPA’s TIMEly program to build an undersea network architecture for situational awareness and command and control to dominate in multi-domain conflicts

The Defense Advanced Research Projects Agency (DARPA) is soliciting innovative proposals in maritime communications with an emphasis on undersea and cross-domain (above sea to below sea and vice versa) communications. DARPA wants to build an undersea network so that the military can have situational awareness …

DARPA’s SC2 challenge uses AI to optimize spectrum usage in wireless networks and adaptive radios that cooperatively share or dominate congested spectrum

The ongoing wireless revolution is fueling a voracious demand for access to the radio frequency (RF) spectrum around the world. In the civilian sector, consumer devices from smartphones to wearable fitness recorders to smart kitchen appliances are competing for bandwidth. Around 50 billion wireless devices are projected to be vying for …

DARPA BOLT developing a handheld device that can translate language in real time

Being able to converse with people who don’t speak English is essential for the Army, since every day, Soldiers are partnering with militaries in dozens of countries around the world. A number of speech-translator devices are available commercially, and Soldiers have been using them. However, speech translators are seldom completely accurate …

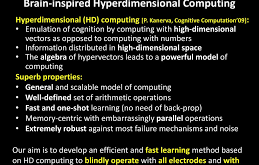

Brain-inspired hyperdimensional computing for extremely robust brain-computer interfaces, biosignal processing and robotics

From an engineering perspective, computing is the systematized and mechanized manipulation of patterns. A representation is a pattern in some physical medium, for example, the configuration of ONs and OFFs on a set of switches. The algorithm then tells us how to change these patterns—how to set the switches from …
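To make the "patterns of switches" idea concrete for hyperdimensional computing, here is a minimal sketch assuming one common HDC scheme, Kanerva-style binary spatter codes: each symbol is a random 10,000-bit vector, binding is element-wise XOR, bundling is an element-wise majority vote, and similarity is the normalized Hamming distance. The dimensionality, the helper names and the example record are illustrative assumptions, not taken from the article.

```python
import random

D = 10_000  # dimensionality; HDC typically uses thousands of dimensions


def random_hv(rng):
    """A random binary hypervector: a pattern of D ON/OFF 'switches'."""
    return [rng.getrandbits(1) for _ in range(D)]


def bind(a, b):
    """Bind via element-wise XOR; XOR is its own inverse, so bind(bind(a, b), b) == a."""
    return [x ^ y for x, y in zip(a, b)]


def bundle(*hvs):
    """Bundle via element-wise majority vote (an odd count avoids ties)."""
    half = len(hvs) / 2
    return [1 if sum(bits) > half else 0 for bits in zip(*hvs)]


def hamming(a, b):
    """Normalized Hamming distance: about 0.5 for unrelated random vectors."""
    return sum(x != y for x, y in zip(a, b)) / D


def nearest(query, memory):
    """Return the label of the stored hypervector closest to the query."""
    return min(memory, key=lambda label: hamming(query, memory[label]))


rng = random.Random(42)  # fixed seed so the run is reproducible
names = ["shape", "size", "material", "circle", "large", "metal"]
items = {name: random_hv(rng) for name in names}

# Encode the hypothetical record {shape: circle, size: large, material: metal}
# as a single hypervector.
record = bundle(bind(items["shape"], items["circle"]),
                bind(items["size"], items["large"]),
                bind(items["material"], items["metal"]))

# Unbinding with a key yields a noisy copy of its value; clean it up
# by looking for the closest entry in item memory.
values = {k: items[k] for k in ["circle", "large", "metal"]}
print(nearest(bind(record, items["shape"]), values))
```

The noisy query sits at a normalized distance of roughly 0.25 from the correct value and roughly 0.5 from every other item, which is the source of the robustness to bit errors that HDC is known for.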

Rising role of open-source software and open-source frameworks in the military

Open-source software is free to license and is the preferred choice of geeks everywhere for rapid development, for instance in impromptu mapping projects to help victims of the 2010 Haiti earthquake, where open source delivered results much faster than traditional methods. “It probably would have taken a year to come up with …

International Defense Security & Technology Your trusted Source for News, Research and Analysis