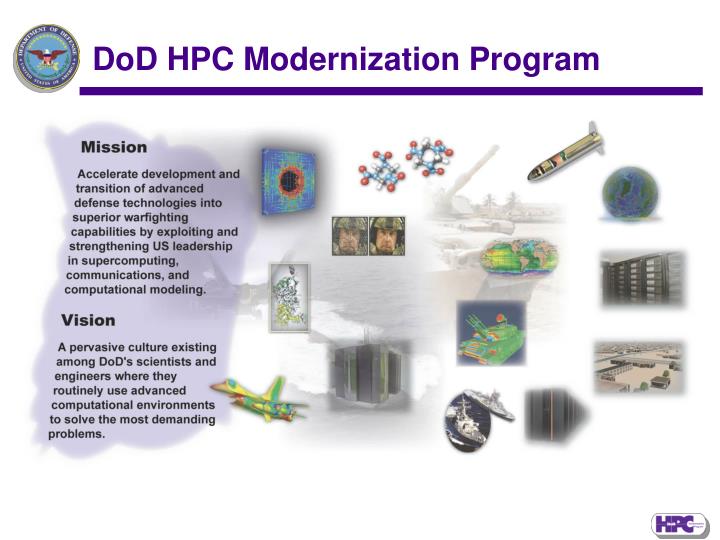

Earlier in 2018, Hewlett Packard Enterprise (HPE) announced that it had been awarded a $57m contract by the US Department of Defense (DoD) to provide supercomputers. As supercomputing has become an ever larger part of the toolset that the department’s scientists and engineers use to tackle the most complex technological challenges, the thirst…