Data-Driven Synthetic Biology: When AI Begins to Design Life Itself

AI and biology are merging to rewrite the code of life — promising breakthroughs in medicine, energy, and agriculture, but also raising profound ethical and security challenges.

Introduction

In recent years, synthetic biology has taken a quantum leap forward—thanks in large part to the integration of massive biological datasets, machine learning, and automated high-throughput experimentation. Known collectively as data-driven synthetic biology, this rapidly evolving field has the power to revolutionize drug discovery, biomanufacturing, agriculture, and environmental restoration. Yet, like all powerful technologies, it comes with a catch: its greatest strengths may also be its greatest risks.

As we stand on the brink of a synthetic biology revolution, it’s critical to understand how data is fueling this transformation and where caution must be exercised. This article explores the broader implications of AI in synthetic biology and highlights both opportunities and concerns.

Accelerating Innovation in the Lab

Data-driven synthetic biology is unlocking unprecedented capabilities in biological design and manufacturing. By combining artificial intelligence with genomic, transcriptomic, and proteomic data, scientists can now model and engineer biological systems with a level of precision once thought impossible.

Advances in deep learning and large-scale data analysis have revolutionized how scientists approach biological design. AI models trained on genomic, proteomic, and metabolic datasets can now predict how engineered organisms will behave, optimize DNA sequences for desired functions, and even propose entirely novel biological systems. For example, deep learning models like RoseTTAFold and ESMFold have dramatically improved protein structure prediction, enabling faster drug discovery and enzyme design.

High-throughput “genomic factories” powered by robotics and cloud computing can synthesize, assemble, and test thousands of genetic constructs in parallel. These systems are enabling faster development of biofuels, agricultural enzymes, and even synthetic meat, slashing R&D timelines from years to months.

AI and machine learning are becoming integral to biological design: researchers can now predict protein structures with tools like AlphaFold, optimize genetic circuits through computational modeling, and accelerate metabolic engineering with AI-powered algorithms.

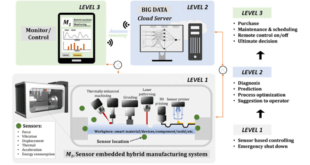

These methods are already in commercial use. In therapeutic development, companies like LabGenius are leveraging AI to analyze billions of amino acid combinations, dramatically shortening the time required to discover novel antibodies. Elsewhere, digital twin models (virtual replicas of physical or biological processes) are being deployed to simulate and optimize laser-based micromanufacturing techniques, improving outcomes while minimizing trial-and-error experimentation.

However, these powerful capabilities come with significant challenges—from biased datasets to ethical dilemmas and potential misuse.

Real-World Applications: From Medicine to Sustainability

One of the most exciting prospects of data-driven synthetic biology is its application in personalized medicine. AI-enhanced models can simulate patient-specific responses to engineered cell therapies, allowing for the design of tailored interventions for diseases like cancer, rare genetic disorders, and autoimmune conditions.

Meanwhile, sustainable biomanufacturing is undergoing a renaissance. By integrating multi-omics data into predictive metabolic models, researchers can program microbes to convert agricultural waste into biofuels, biodegradable plastics, and high-value chemicals. These innovations not only reduce dependence on fossil fuels but also support the vision of a circular bioeconomy.

In agriculture, synthetic biology is being used to design crops that can self-fertilize or resist drought, guided by AI predictions based on genetic and environmental data. In climate science, genetically modified organisms engineered through data-driven models are being explored to absorb carbon dioxide more efficiently or degrade plastics in ocean environments.

However, the increasing reliance on data-driven approaches raises critical questions about data quality, interpretability, and ethical oversight. Unlike traditional lab experiments, where biological systems can be directly observed, many AI models operate as “black boxes,” making it hard to see why they produce a given prediction and therefore hard to judge it without experimental testing. A 2022 report in Nature Biotechnology warned that over-reliance on AI without experimental verification could lead to flawed biological designs with unintended consequences.

Key Challenges in AI-Powered Synthetic Biology

The same tools that empower beneficial innovation also raise serious concerns. Dual-use risks, where technologies can be repurposed for harmful ends, are a persistent worry in synthetic biology. Sophisticated AI models trained on genomic data could, in theory, be used to design dangerous pathogens or circumvent existing biosafety controls. Defense analysts have warned that AI could lower the technical barriers to bioweapon development, emphasizing the urgent need for global governance and oversight.

Data integrity and reproducibility also pose significant challenges. Automated design platforms and synthetic datasets may inadvertently introduce errors or biases, leading to flawed biological designs. One recent review found that nearly half of synthetic biology studies using synthetic datasets could not be replicated. This highlights the pressing need for standardized data protocols, transparent reporting, and rigorous validation procedures.

Ethical dilemmas abound. Who owns genetically designed organisms? Should we engineer species capable of reproducing in the wild? How do we ensure equitable access to the benefits of synthetic biology across different regions and communities? These questions demand inclusive public engagement and policy frameworks that go beyond the lab.

1. Data Limitations and Biases

One of the biggest hurdles in applying AI to synthetic biology is the uneven quality and coverage of biological datasets. Most machine learning models are trained on data from well-studied organisms like Escherichia coli or Saccharomyces cerevisiae, which may not generalize to less-characterized species. A 2023 study in Cell Systems found that AI models for metabolic pathway prediction performed poorly when applied to non-model microbes due to gaps in training data. Additionally, experimental variability—such as differences in lab protocols or measurement techniques—can introduce noise that skews model outputs.
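One way to surface this kind of generalization gap is to hold out every sample from one organism at a time, so a model is always tested on a species it never saw in training. The sketch below is a minimal illustration of that splitting step only; the record schema (an "organism" key) and the function name are assumptions made for the example, not taken from any cited study.

```python
from collections import defaultdict


def leave_one_organism_out(records):
    """Yield (held_out, train, test) splits, one per organism.

    `records` is a list of dicts with an "organism" key
    (a hypothetical schema chosen for this sketch).
    """
    by_organism = defaultdict(list)
    for rec in records:
        by_organism[rec["organism"]].append(rec)

    # Sorted so the order of splits is deterministic.
    for held_out in sorted(by_organism):
        test = by_organism[held_out]
        train = [
            rec
            for organism, recs in by_organism.items()
            if organism != held_out
            for rec in recs
        ]
        yield held_out, train, test


# Toy records: two model organisms and one less-studied microbe.
records = [
    {"organism": "E. coli", "sample": 1},
    {"organism": "E. coli", "sample": 2},
    {"organism": "S. cerevisiae", "sample": 3},
    {"organism": "P. putida", "sample": 4},
]

splits = list(leave_one_organism_out(records))
for held_out, train, test in splits:
    print(held_out, "train:", len(train), "test:", len(test))
```

Scoring a model separately on each held-out organism, rather than on a random split that mixes species, makes it visible when performance depends on the well-studied organisms that dominate the training data.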

2. The Black Box Problem

Many AI systems, particularly deep neural networks, lack transparency in how they generate predictions. This makes it difficult for researchers to assess whether a model’s output is biologically plausible or merely an artifact of its training data. A 2021 Science article highlighted cases where AI-designed proteins failed in the lab because the models had learned superficial patterns rather than true biochemical principles. Explainable AI (XAI) methods are being developed to address this, but their adoption in synthetic biology remains limited.

3. Dual-Use and Security Risks

The democratization of synthetic biology tools, combined with AI automation, raises concerns about potential misuse. For instance, generative AI could theoretically design harmful biological agents by combining known pathogenic sequences. A 2020 report by the Nuclear Threat Initiative (NTI) warned that AI-powered bioengineering might lower the barrier to creating engineered pathogens. While DNA synthesis screening helps mitigate some risks, AI models that suggest novel, non-natural toxins or virulence factors could bypass existing safeguards.
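To see why similarity-based screening can miss novel designs, consider a deliberately simplified sketch. It flags an order when a large fraction of its short subsequences (k-mers) also appear in a list of sequences of concern. Real screening systems rely on curated databases and far more sensitive comparison methods; the function names, the threshold, and the toy sequences here are all assumptions for illustration and carry no biological meaning.

```python
def kmers(seq, k):
    """Return the set of all length-k substrings of a DNA sequence."""
    seq = seq.upper()
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def screen_order(order_seq, flagged_seqs, k=8, threshold=0.5):
    """Return (is_flagged, best_fraction) for an ordered sequence.

    best_fraction is the largest share of the order's k-mers that also
    occur in any single flagged sequence. The k and threshold values are
    arbitrary placeholders for this sketch.
    """
    order_kmers = kmers(order_seq, k)
    if not order_kmers:
        return False, 0.0
    best = 0.0
    for flagged in flagged_seqs:
        shared = order_kmers & kmers(flagged, k)
        best = max(best, len(shared) / len(order_kmers))
    return best >= threshold, best


# Toy placeholder standing in for a database entry (not a real gene).
FLAGGED = ["ATGGCTAGCTAGGATCCGATTACGATCGGCTA"]

near_copy = screen_order("ATGGCTAGCTAGGATCCGATTACGATCGGCTA", FLAGGED)
unrelated = screen_order("TTTTCCCCAAAAGGGGTTTTCCCCAAAAGGGG", FLAGGED)
print("near copy:", near_copy)
print("unrelated:", unrelated)
```

The exact copy is flagged, while the second sequence shares no k-mers with the list and passes. A check of this kind says nothing about function, which is why designs with little similarity to known sequences are the hard case for screening.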

4. Environmental and Computational Costs

Training large AI models consumes substantial energy, contributing to the carbon footprint of scientific research. A 2022 study in Patterns estimated that training a single protein-folding model like AlphaFold2 emitted as much CO₂ as five cars do over a year. Whole-cell simulations, which integrate thousands of biochemical reactions, are even more computationally intensive. Some researchers are exploring energy-efficient algorithms or cloud-based optimization to reduce these impacts, but sustainability remains a pressing issue.
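Estimates of this kind typically come from a simple calculation: energy drawn by the hardware, scaled by data-center overhead, multiplied by the carbon intensity of the local grid. The sketch below shows that arithmetic under stated assumptions. Every input value is a made-up placeholder, not a figure for AlphaFold2 or any other real training run.

```python
def training_emissions_kg(num_devices, device_power_kw, hours,
                          pue, grid_kg_co2_per_kwh):
    """Rough CO2 estimate for one training run, in kilograms.

    energy (kWh) = devices * average power per device (kW) * hours * PUE
    emissions    = energy * grid carbon intensity (kg CO2 per kWh)

    PUE (power usage effectiveness) accounts for cooling and other
    data-center overhead on top of the hardware's own draw.
    """
    energy_kwh = num_devices * device_power_kw * hours * pue
    return energy_kwh * grid_kg_co2_per_kwh


# Placeholder inputs chosen only to show the calculation.
estimate = training_emissions_kg(
    num_devices=100,          # accelerators used (hypothetical)
    device_power_kw=0.25,     # average draw per accelerator (hypothetical)
    hours=200,                # wall-clock training time (hypothetical)
    pue=1.2,                  # data-center overhead factor (hypothetical)
    grid_kg_co2_per_kwh=0.4,  # grid carbon intensity (hypothetical)
)
print(f"Estimated emissions: {estimate:.0f} kg CO2")
```

Because the result scales linearly with each input, the same training run can differ severalfold in footprint depending on where and on what hardware it runs, which is part of why published estimates vary.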

5. Ethical and Societal Implications

Beyond technical challenges, AI-driven synthetic biology poses broader ethical questions. Should AI-designed organisms be subject to stricter regulation than those developed through traditional methods? How can researchers ensure that AI tools do not reinforce biases—for example, by optimizing therapies primarily for well-studied populations while neglecting others? A 2023 Nature editorial called for interdisciplinary oversight committees to evaluate the societal impacts of AI in bioengineering, similar to frameworks used in gene editing.

Toward Responsible Innovation: The Data Hazards Framework

A study published in Synthetic Biology proposes a “Data Hazards” framework to help researchers navigate these risks.

International Defense Security & Technology Your trusted Source for News, Research and Analysis
