Lidar (Light Detection and Ranging) systems are similar to radars in that they send pulses toward a target and calculate its distance from the time the reflected pulse takes to return, except that they use light instead of radio waves. The key advantages of LIDAR are its superior accuracy and its ability to see through masking items, such as leaves, trees, and even camouflage netting.
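
The ranging principle described above reduces to one formula: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The following is a minimal sketch; the function names are illustrative, and the slightly slower propagation speed in air is ignored.

```python
# Speed of light in vacuum (m/s); the small correction for air is ignored.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance in meters for a measured round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Return the round-trip time in seconds for a target at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    # A return that arrives 20 microseconds after the pulse was emitted.
    print(f"{range_from_round_trip(20e-6):.1f} m")
```

A return arriving 20 microseconds after emission corresponds to a target about 3 km away, which shows why timing electronics with nanosecond precision are needed for centimeter-level accuracy.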

LIDAR systems owe their high accuracy to high spatial resolution, which comes from the small focus diameter of the beam, and to a high pulse repetition rate. Because the light pulses they use have wavelengths about 100,000 times shorter than the radio waves used by radar, they achieve much higher resolution. That makes LIDAR better at detecting small objects and at figuring out whether an object on the side of the road is a pedestrian, a motorcycle, or a stray pile of garbage.
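
The size of the wavelength gap depends on which laser and which radar band are compared. The sketch below computes the ratio for two cases. All of the specific values are assumptions chosen for illustration and do not come from this article: a 1064 nm laser, a 3 GHz radar, and a 77 GHz automotive radar.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Convert a frequency in hertz to a free-space wavelength in meters."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def wavelength_ratio(radar_frequency_hz: float, laser_wavelength_m: float) -> float:
    """Return how many times longer the radar wavelength is than the laser's."""
    return wavelength_m(radar_frequency_hz) / laser_wavelength_m


# Assumed example values (not taken from the article).
LASER_WAVELENGTH_M = 1064e-9
RADAR_BANDS_HZ = {
    "3 GHz radar": 3e9,
    "77 GHz automotive radar": 77e9,
}

if __name__ == "__main__":
    for label, freq_hz in RADAR_BANDS_HZ.items():
        ratio = wavelength_ratio(freq_hz, LASER_WAVELENGTH_M)
        print(f"{label}: wavelength is about {ratio:,.0f}x the laser wavelength")
```

Under these assumptions the 3 GHz case gives a ratio of roughly 94,000, in line with the "about 100,000 times" figure, while the 77 GHz case gives roughly 3,700. For a given aperture size, diffraction-limited angular resolution scales with wavelength, so the shorter wavelength translates directly into finer detail.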

LIDARs are rapidly maturing into very capable sensors for a number of applications, such as imaging through clouds, vegetation and camouflage; 3D mapping and terrain visualization; meteorology; navigation and obstacle avoidance for robotics; and weapon guidance. LIDAR data is both high-resolution and high-accuracy, enabling improved battlefield visualization, mission planning and force protection. LIDAR provides a way to see urban areas in rich 3D views that give tactical forces unprecedented awareness in urban environments.

Airborne LIDARs can calculate accurate three-dimensional coordinates of both manmade and naturally occurring targets by combining the laser range, the laser scan angle, the laser position from GPS, and the laser orientation from an inertial navigation system (INS). Additional information about the object, such as its velocity or material composition, can also be determined by measuring certain properties of the reflected signal, such as the induced Doppler shift.
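
The combination of range, scan angle, GPS position, and INS orientation can be sketched as a single vector equation: target = sensor position + range × (rotation × beam direction). The code below is a simplified illustration under stated assumptions, not a production georeferencing chain. It assumes a local flat north-east-down (NED) frame, a body frame with x forward, y right and z down, a beam that scans across-track, and no lever-arm or boresight corrections. The Doppler helper assumes a monostatic sensor, for which radial velocity is half the Doppler shift times the wavelength. All function names are hypothetical.

```python
import math


def body_to_ned_matrix(roll: float, pitch: float, yaw: float):
    """Rotation matrix from body axes to NED axes (angles in radians)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def georeference_return(sensor_ned, roll, pitch, yaw, scan_angle, range_m):
    """Return the (north, east, down) coordinates of one laser return.

    sensor_ned -- sensor position from GPS, already in local NED meters
    roll, pitch, yaw -- sensor attitude from the INS, in radians
    scan_angle -- across-track angle from nadir, positive to the right, radians
    range_m -- measured laser range in meters
    """
    # Unit vector of the beam in the body frame (x forward, y right, z down).
    beam_body = (0.0, math.sin(scan_angle), math.cos(scan_angle))
    rot = body_to_ned_matrix(roll, pitch, yaw)
    beam_ned = [sum(rot[i][j] * beam_body[j] for j in range(3)) for i in range(3)]
    return tuple(sensor_ned[i] + range_m * beam_ned[i] for i in range(3))


def radial_velocity_from_doppler(doppler_shift_hz: float, wavelength_m: float) -> float:
    """Radial target velocity (m/s) for a monostatic sensor."""
    return doppler_shift_hz * wavelength_m / 2.0


if __name__ == "__main__":
    # Aircraft 1000 m above the local origin, level, heading north,
    # beam pointed 30 degrees to the right of nadir.
    slant_range = 1000.0 / math.cos(math.radians(30.0))
    point = georeference_return(
        (0.0, 0.0, -1000.0), 0.0, 0.0, 0.0, math.radians(30.0), slant_range
    )
    print("north=%.1f m, east=%.1f m, down=%.1f m" % point)
```

In the example, a level aircraft heading north sees the return land to its right (east) at ground level. A real system would additionally convert GPS latitude, longitude and height into the local frame and apply calibration offsets between the GPS antenna, the INS, and the laser.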

The military uses 3D data capture for a number of applications. Detailed mapping of urban and non-urban terrain can greatly benefit military operations, both from the air and from semi-autonomous ground vehicles. The military depends on LIDAR technology to map the exact terrain of the battlefield and to establish the position and strength of enemy forces. The technology can also be used to locate enemy weaponry, including tanks, and so help neutralize threats on a much larger scale. Among other things, it can be used for air defence, air traffic control, ground surveillance, navigation, search and rescue, fire control, and identification of moving targets.

The Swedish Defence Research Agency (FOI) has been working on demonstrating the possibilities of airborne sensor systems, especially 3D imaging ladar on various multi-rotor UAVs, for research and development purposes. UAVs can cover larger survey areas than systems based on ground vehicles, and can detect objects or regions of interest that such systems miss (e.g., those obscured by dense vegetation).

LIDARs have also proved useful for disaster management missions; emergency relief workers can use LIDAR to gauge the damage to remote areas after a storm or other cataclysmic event. After the January 2010 Haiti earthquake, a single pass by a business jet flying at 10,000 feet over Port-au-Prince, carrying a LIDAR with 30-centimeter resolution, was able to show the precise height of rubble strewn in city streets.

Russia’s state-owned TASS media reported that Zala, a Russian company under the broader umbrella of the Kalashnikov defense complex, is putting LIDAR on its drones for the first time. These light detection and ranging systems are commonly used to create 3-D models of the world, and LIDAR is an important part of most autonomous vehicle designs. The announcement at TASS notes that LIDAR on Zala drones will allow for better inspections of infrastructure and terrain, in ways that existing ground-based or manned aircraft technologies cannot provide.

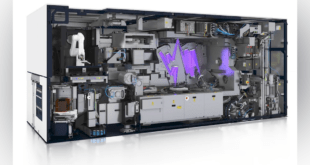

DARPA’s High-Altitude LIDAR Airborne 3D imaging

DARPA’s High-Altitude LIDAR Operations Experiment (HALOE), first deployed to Afghanistan in 2010, provided unprecedented access to high-resolution 3D geospatial data for tactical missions, such as the data helicopter crews need to find landing zones. The HALOE sensor pod can collect data more than 10 times faster than state-of-the-art systems and 100 times faster than conventional systems. “At full operational capacity, the HALOE system can map 50 percent of Afghanistan in 90 days,” DARPA director Regina Dugan said, “whereas previous systems would have required three years.” The Navy plans to test LIDAR on a Fire Scout, a robotic helicopter, to help spot pirates. DARPA is now using HALOE data to create 3D holographic images of urban environments, which soldiers can use to visualize the areas they are about to enter.
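
The quoted mapping figures can be checked with simple arithmetic. The sketch below converts them into a daily coverage rate and a speedup factor. The land area used for Afghanistan (about 652,000 square kilometers) is an approximate outside figure assumed for illustration and is not stated in the quote.

```python
# Assumed approximate area of Afghanistan in km^2 (not from the quoted source).
AFGHANISTAN_AREA_KM2 = 652_000


def coverage_rate_km2_per_day(area_km2: float, fraction: float, days: float) -> float:
    """Average area mapped per day when `fraction` of `area_km2` takes `days`."""
    return area_km2 * fraction / days


# HALOE: 50 percent of the country in 90 days.
haloe_rate = coverage_rate_km2_per_day(AFGHANISTAN_AREA_KM2, 0.5, 90)
# Previous systems: the same area in three years.
previous_rate = coverage_rate_km2_per_day(AFGHANISTAN_AREA_KM2, 0.5, 3 * 365)
# The speedup does not depend on the assumed area, only on the two durations.
speedup = haloe_rate / previous_rate

if __name__ == "__main__":
    print(f"HALOE:    {haloe_rate:,.0f} km^2 per day")
    print(f"Previous: {previous_rate:,.0f} km^2 per day")
    print(f"Speedup:  {speedup:.1f}x")
```

The speedup works out to about 12, which is consistent with the claim that HALOE collects data more than 10 times faster than state-of-the-art systems.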

Small UAVs with higher-quality propulsion systems, inertial navigation systems, electronics, and algorithms are also developing rapidly. Concurrently, ladar (i.e., laser detection and ranging) sensors are being produced that offer high reliability, accuracy, and pulse repetition frequency, along with large data capacities and advanced data analysis techniques. This drive toward miniaturization of ladar and UAV systems opens new possibilities for 3D imaging from the air.

US Army requirement for state-of-the-art imaging LIDAR for foliage penetration from UAVs

Officials of the Army Contracting Command have issued a sources-sought notice (W909MY-17-R-A006) for the LIDAR Payload for Manned and Unmanned Airborne Platforms project. This project seeks to determine the state of the art in LIDAR systems for tactical mapping, mission planning, and target detection using multi-aspect foliage penetration (FOPEN) technology. Of particular interest is LIDAR technology mature enough for an advanced technology demonstrator prototype.

The LIDAR imaging sensor should offer resolution of 30 centimeters from altitudes of at least 18,000 feet above ground level, with point rates of 500,000 to 6.5 million final-product points per second. It should have a variable swath from 200 meters or less to 1 kilometer or more, with slant ranges of 25,000 feet. Researchers want systems that weigh between 200 and 300 pounds, and that measure 18 to 21 inches in diameter.
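
To get a feel for these requirements, the sketch below converts them to metric units and derives two quantities the notice implies. It makes two assumptions that are not in the notice: that "resolution" can be read as ground point spacing (one point per 30 cm cell), and that the maximum off-nadir angle follows from flat-terrain geometry between altitude and slant range. Function names are illustrative.

```python
import math

FEET_TO_M = 0.3048
POUNDS_TO_KG = 0.45359237
INCHES_TO_CM = 2.54


def max_off_nadir_deg(altitude_ft: float, slant_range_ft: float) -> float:
    """Largest look angle from nadir that stays within the slant range."""
    return math.degrees(math.acos(altitude_ft / slant_range_ft))


def area_rate_m2_per_s(points_per_s: float, spacing_m: float) -> float:
    """Ground area covered per second at one point per spacing x spacing cell."""
    return points_per_s * spacing_m ** 2


if __name__ == "__main__":
    print(f"Altitude:    {18_000 * FEET_TO_M:,.0f} m")
    print(f"Slant range: {25_000 * FEET_TO_M:,.0f} m")
    print(f"Max off-nadir angle: {max_off_nadir_deg(18_000, 25_000):.1f} deg")
    print(f"Weight:   {200 * POUNDS_TO_KG:.0f} to {300 * POUNDS_TO_KG:.0f} kg")
    print(f"Diameter: {18 * INCHES_TO_CM:.0f} to {21 * INCHES_TO_CM:.0f} cm")
    for rate in (500_000, 6_500_000):
        area = area_rate_m2_per_s(rate, 0.30)
        # Ground speed at which a 1 km swath is filled at 30 cm spacing.
        print(f"{rate:,} pts/s -> {area:,.0f} m^2/s, "
              f"{area / 1000:.0f} m/s over a 1 km swath")
```

Under these assumptions, the lower point rate of 500,000 points per second fills a 1-kilometer swath at 30 cm spacing only at ground speeds up to about 45 meters per second, which suggests why the upper end of the requested point-rate range is more than ten times higher.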

Army researchers are looking for LIDAR systems that consist of several line-replaceable units (LRUs), including an imaging sensor, data storage, and sensor processing. The imaging sensor must have a pointing and stabilization unit, such as a stabilized turret, that houses the sensor optics, focal planes, and supporting electronics. The data storage and sensor processing unit comprises a computer, data storage, sensor-processing algorithms, and imaging-sensor command and control software.

Software should include automated feature extraction and aided target recognition algorithms for anomaly detection, segmentation, void detection, plane detection, and other features that minimize analyst workload, and should support limited-bandwidth communications of less than 2 megabits per second. Responses addressing the data storage and sensor processing unit should focus on unique processing techniques and algorithms, as well as hardware with low size, weight, and power (SWaP).
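
The bandwidth limit explains the emphasis on onboard feature extraction: the link cannot carry the raw point stream. The sketch below estimates the gap. It assumes 12 bytes per point (three 32-bit coordinates with no intensity, timestamp, or protocol overhead), which is an illustrative figure rather than a value from the notice, and it treats 2 megabits per second as an upper bound on the link rate.

```python
LINK_BITS_PER_S = 2_000_000  # upper bound from the "less than 2 Mbit/s" limit
BYTES_PER_POINT = 12         # assumed: x, y, z as 32-bit values, no overhead


def points_per_second_over_link(link_bits_per_s: float, bytes_per_point: int) -> float:
    """Raw points per second that fit through the link."""
    return link_bits_per_s / (8 * bytes_per_point)


def required_reduction(sensor_points_per_s: float, link_points_per_s: float) -> float:
    """Factor by which onboard processing must shrink the data stream."""
    return sensor_points_per_s / link_points_per_s


if __name__ == "__main__":
    link_points = points_per_second_over_link(LINK_BITS_PER_S, BYTES_PER_POINT)
    print(f"Link capacity: about {link_points:,.0f} raw points per second")
    for sensor_rate in (500_000, 6_500_000):
        factor = required_reduction(sensor_rate, link_points)
        print(f"{sensor_rate:,} pts/s sensor -> reduce by about {factor:.0f}x")
```

With these assumptions the link carries roughly 21,000 raw points per second, so the sensor output would have to be reduced by a factor of about 24 to 312. Sending extracted features, detections, or compressed products instead of raw points is one way to close that gap.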

US Army’s Autonomous Mobility Appliqué System

In June 2016, the US Army test-drove a convoy of four trucks equipped with self-driving technology on a highway in Michigan. The four trucks drove together for more than seven miles, using cameras and LIDAR to watch the road. They used dedicated short-range radio, also known as vehicle-to-vehicle communication, to continuously exchange their positions, speed, and other information with each other and with infrastructure that Michigan’s DOT installed for the purpose, which gave them advance notice of things like changing speed limits and closed lanes ahead.

Under an initial $11 million contract in 2012, Lockheed Martin developed a multi-platform kit that integrates low-cost sensors and control systems with Army and Marine tactical vehicles to enable autonomous operation in convoys. According to Lockheed, the Autonomous Mobility Appliqué System (AMAS) also gives drivers an automated option to alert, stop and adjust, or take full control under user supervision.

The AMAS hardware and software are designed to automate the driving task on current tactical vehicles. The Unmanned Mission Module part of AMAS, which includes a high-performance LIDAR sensor, a second GPS receiver and additional algorithms, is installed as a kit and can be used on virtually any military vehicle. The LIDAR system looks for curves in the road and for transitions from pavement to gravel to grass, and uses them to tell the platform where the road surface is and what its expected travel path is. The module’s GPS receiver is used to plan and track the convoy’s route.

US Navy employs the Airborne Laser Mine Detection System (ALMDS) and the Airborne Mine Neutralization System (AMNS) to find and destroy enemy mines

The Navy requires cheaper, faster, more reliable and more capable mine countermeasure and neutralization systems. Airborne platforms can provide rapid search and detection of mines. Two airborne systems, the Airborne Laser Mine Detection System (ALMDS) and the Airborne Mine Neutralization System (AMNS), operate aboard the Navy’s MH-60S helicopter to locate sea mines and take them out from the air by means of powerful armor-piercing warheads. Both AMNS and ALMDS reached initial operational capability in November 2016.

ALMDS is a laser-based, high-area-coverage system designed to provide wide-area reconnaissance and assessment of mine threats for Carrier and Expeditionary Strike Groups (CSG/ESG). It uses pulsed laser light and streak tube receivers to image the entire near-surface volume in order to detect, classify and localise near-surface, moored mines. AMNS-AF is designed to tackle the threat of modern mines. It provides reacquisition, identification and neutralisation capability against bottom and moored sea mines. After identifying the threats during mine-hunting operations, AMNS-AF deploys a warhead to explosively neutralise the target.

The Airborne Laser Mine Detection System, or ALMDS, which also works with MH-60S helicopters, uses Light Detection and Ranging (LIDAR) technologies to detect, classify and localize near-surface moored sea mines. “ALMDS is an optical system flown over the water, not towed through it. This enables the helicopter to conduct mine detection operations at greater speeds. This speed, combined with the laser’s wide swath, delivers a high area coverage rate,” explained Baribeau. Detecting mines more effectively and at greater distances with systems such as AMNS could greatly improve the US Navy’s ability to respond to a wide range of threats.

The AN/ASQ‑235 Airborne Mine Neutralization System, or AMNS, receives surveillance information from a range of different Navy systems before it reconfirms the target, deploys expendable destructor vehicles below the surface from MH-60S helicopters, and destroys the mine. The destructors are controlled from a console while they identify mines. Once they do, their warheads are detonated to destroy the mines. AMNS is designed to help Carrier Strike Groups, Expeditionary Strike Groups and amphibious attack missions improve combat access while lowering the risk to surface ships and sailors.

LIDAR on unmanned surface vessel

Leidos has completed initial performance trials of the technology demonstration vessel it is developing for the Defense Advanced Research Projects Agency (DARPA)’s Anti-Submarine Warfare Continuous Trail Unmanned Vessel (ACTUV) program. The at-sea tests took place off the coast of San Diego, California.

DARPA’s Anti-Submarine Warfare (ASW) Continuous Trail Unmanned Vessel (ACTUV) program seeks to develop a new type of unmanned surface vessel that could independently track adversaries’ ultra-quiet diesel-electric submarines over thousands of miles. The technologies sought by the U.S. Defense Advanced Research Projects Agency are sensor systems and image-processing hardware and software that use electro-optical/infrared or light detection and ranging, or LIDAR, approaches for onboard systems to detect and track nearby surface vessels and potential navigation hazards, and classify those objects’ characteristics.

The DARPA RFI invited responses that explore some or all of the following technical areas:

Maritime Perception Sensors: Any combination of non-radar-based imaging and tracking methods, including, but not limited to, passive and active imagers in the visible and infrared wavelengths and Class 1 Laser Rangefinder (LRF) and Flash LIDAR to image ships during day or night in the widest variety of environmental conditions, including haze, fog and rain, over ranges from 4 km to 15 km.

Maritime Perception Software: Algorithms and software for detection, tracking and classification of ships by passive optical or non-radar active imagers.

Classification Software for Day Shapes/Navigation Lights: Algorithms and software to support detection, tracking and classification of day shapes and navigation lights (standard tools that vessels use to communicate a ship’s position and status) by using passive optical or non-radar active imagers.

References and Resources also include:

https://www.militaryaerospace.com/articles/2017/01/lidar-tactical-mapping-uavs.html

International Defense Security & Technology Your trusted Source for News, Research and Analysis
