The Future of Head-Up Displays: AR-Powered Vision Across Industries

From cars to cockpits and operating rooms, HUDs are redefining how we see and interact with the world.

The name “head-up display” comes from a pilot being able to view information with the head positioned “up” and looking forward, instead of angled down toward lower instruments. All the information the pilot needs to complete a mission is displayed in that forward view and tailored to what they need to know at a given moment.

Head-Up Displays (HUDs) are no longer limited to fighter jets and high-performance aircraft. With rapid advancements in optics, augmented reality (AR), artificial intelligence (AI), and display technology, HUDs are now transforming industries such as automotive, healthcare, industrial manufacturing, and gaming. As the demand for seamless, real-time information grows, HUDs are evolving into sophisticated tools that enhance safety, productivity, and user experience across multiple sectors.

Understanding HUD Technology: How It Works and Key Components

Head-Up Display (HUD) technology is designed to project critical information directly into the user’s line of sight, reducing the need to look away from their primary focus area. Originally developed for fighter jets, HUDs have evolved significantly, incorporating cutting-edge advancements in optics, projection systems, and augmented reality. Modern HUDs are now used across various industries, from automotive and aviation to healthcare and industrial applications.

At its core, a HUD consists of several key components that work together to create a seamless visual experience. The projection unit generates the visual information, typically using LED, LCD, MicroLED, or OLED display technology. More advanced systems incorporate laser-based projectors, which offer higher brightness and contrast for better visibility across lighting conditions. The combiner, a transparent, specially coated glass or plastic surface, reflects the projected image toward the user while keeping the real-world environment visible. In automotive HUDs, the windshield itself often serves as the combiner, whereas aviation and other applications typically use a dedicated transparent screen.

The optical system, consisting of lenses, waveguides, and mirrors, directs and focuses the image onto the combiner, ensuring clarity and proper alignment with the user’s line of sight. Advanced HUDs employ holographic waveguide technology, which enhances depth perception and reduces distortion.

The processing unit interprets data from sensors and external inputs to generate real-time visuals. With advances in AI, some modern HUDs use predictive algorithms to display relevant information dynamically, adjusting to the user’s context and surroundings. Sensors and connectivity further extend HUD functionality: by linking with cameras, radar, LiDAR, and external data sources such as GPS, vehicle control systems, and medical imaging devices, HUDs improve situational awareness and provide accurate, real-time information. 5G and IoT connectivity allow HUDs to receive live updates and interact with other systems, making them smarter and more responsive.
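The optical system’s job of “focusing” the image can be made concrete with the mirror equation. A common arrangement places the display inside the focal length of a concave mirror, which produces a magnified, upright virtual image that appears to float some distance beyond the mirror. The sketch below is a simplified single-mirror model, assuming an ideal thin concave mirror and ignoring the windshield’s own curvature; the function name `virtual_image` and the example distances are illustrative, not taken from any real HUD design.

```python
def virtual_image(focal_length_m: float, object_distance_m: float) -> tuple[float, float]:
    """Image distance and magnification for an ideal concave mirror.

    Solves the mirror equation 1/d_o + 1/d_i = 1/f for d_i. A negative d_i
    means a virtual, upright image behind the mirror, which is what a HUD
    needs; it occurs when the display sits inside the focal length (d_o < f).
    """
    if focal_length_m <= 0 or object_distance_m <= 0:
        raise ValueError("distances must be positive")
    if object_distance_m == focal_length_m:
        raise ValueError("display at the focal point: image at infinity")
    image_distance = focal_length_m * object_distance_m / (object_distance_m - focal_length_m)
    magnification = -image_distance / object_distance_m
    return image_distance, magnification


# Hypothetical numbers: 250 mm focal length, display 200 mm from the mirror.
d_i, m = virtual_image(focal_length_m=0.25, object_distance_m=0.20)
print(f"virtual image {abs(d_i):.2f} m behind the mirror, magnified {m:.1f}x")
```

With these numbers the image appears about 1 m behind the mirror at roughly 5x magnification. Moving the display closer to the focal point pushes the virtual image farther away, which is one way a designer can tune the apparent image distance.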

HUDs come in various types, each catering to specific needs and applications. Conventional HUDs use simple LED or LCD projectors to display essential data such as speed, navigation, or system status on a transparent screen. More advanced augmented reality (AR) HUDs overlay digital information onto the real-world environment. In automotive applications, for instance, AR HUDs can highlight pedestrians, lane markers, and potential hazards, providing drivers with enhanced situational awareness. Holographic HUDs utilize waveguide technology to generate sharper, multi-depth images with improved brightness and contrast, making them particularly effective in challenging lighting conditions. Meanwhile, wearable HUDs, such as smart glasses and head-mounted displays (HMDs), bring the technology into industrial, medical, and gaming applications. Devices like Microsoft HoloLens and Magic Leap allow for hands-free interaction, enabling real-time collaboration and visualization in fields such as surgery, engineering, and remote maintenance.

Industry-Wide Applications of Head-Up Displays (HUDs)

HUDs are rapidly expanding beyond their traditional roles, integrating augmented reality (AR) and real-time data visualization to enhance safety, efficiency, and user experience across multiple industries.

Automotive Industry

HUDs in modern vehicles have evolved from simple speedometers and navigation aids into sophisticated AR-based systems that improve driver awareness and safety. Luxury brands such as Mercedes-Benz and BMW have introduced advanced HUDs; Mercedes-Benz’s MBUX augmented reality HUD, for example, overlays lane guidance, adaptive cruise control data, and hazard warnings onto the driver’s view through the windshield. As vehicles become increasingly automated, HUDs are expected to play a crucial role in human-machine interaction, providing real-time updates on road conditions, navigation, and system status.

Aviation and Aerospace

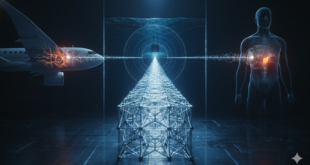

The aviation industry has long been a pioneer in HUD technology, first deploying it in military aircraft before extending its use to commercial aviation. Modern systems, such as Enhanced Flight Vision Systems (EFVS) and the HUD-equipped cockpits offered by Boeing and Airbus, significantly improve pilot visibility, especially in low-visibility conditions. With AR integration, next-generation HUDs are expected to give pilots 360-degree situational awareness, synthetic vision overlays, and even automated landing guidance, reducing reliance on external navigation aids.
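Synthetic vision overlays and landing guidance depend on conformal symbology: a symbol is drawn where the corresponding direction actually appears through the glass. A standard example is the flight path marker, which shows where the aircraft is actually going rather than where its nose points. The sketch below assumes still air, wings-level flight, ground speed and vertical speed supplied by navigation sensors, and a hypothetical `px_per_deg` display scale; the function names are illustrative and not taken from any avionics product.

```python
import math


def flight_path_angle_deg(ground_speed_mps: float, vertical_speed_mps: float) -> float:
    """Flight path angle (gamma) in degrees; negative while descending."""
    return math.degrees(math.atan2(vertical_speed_mps, ground_speed_mps))


def fpm_offset_px(pitch_deg: float, ground_speed_mps: float,
                  vertical_speed_mps: float, px_per_deg: float) -> float:
    """Vertical offset of the flight path marker from the boresight symbol.

    A conformal HUD maps each degree of real-world angle to a fixed number of
    pixels (px_per_deg, an assumed calibration constant), so the marker sits
    (gamma - pitch) degrees from the aircraft's nose reference. Positive = up.
    """
    gamma = flight_path_angle_deg(ground_speed_mps, vertical_speed_mps)
    return (gamma - pitch_deg) * px_per_deg


# Hypothetical approach: 70 m/s ground speed on a 3-degree descent, nose 2 degrees up.
sink_rate = -70.0 * math.tan(math.radians(3.0))
print(round(fpm_offset_px(2.0, 70.0, sink_rate, px_per_deg=20.0)))
```

Here the marker is drawn 5 degrees (100 px at the assumed scale) below the boresight; in still air with wings level, that gap equals the aircraft’s angle of attack.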

Healthcare and Medical Applications

HUDs are revolutionizing the medical field, particularly in surgery and diagnostics. AR-based HUDs enable surgeons to overlay medical imaging, such as CT and MRI scans, directly into their field of view in real time, allowing for greater precision during procedures. Companies such as Magic Leap and Microsoft, with its HoloLens headset, are at the forefront of developing AR HUD solutions for medical training, remote surgical assistance, and even AR-based patient monitoring.
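Overlaying a CT or MRI scan in the surgeon’s view first requires registration: finding the transform that maps scan coordinates onto the patient. The text does not describe how any particular product does this; a common textbook approach is point-based rigid registration on paired landmarks. The sketch below is the closed-form 2D case of that problem, assuming landmarks have already been paired; real surgical systems register in 3D and may also need to handle tissue deformation. `rigid_register_2d` is a hypothetical name.

```python
import math

Point = tuple[float, float]


def rigid_register_2d(src: list[Point], dst: list[Point]) -> tuple[float, float, float]:
    """Least-squares rotation and translation that map src landmarks onto dst.

    Returns (theta_rad, tx, ty) such that dst is approximately
    R(theta) * src + (tx, ty). This is the closed-form 2D case of the
    orthogonal Procrustes problem.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two paired landmarks")
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    # Sum cross- and dot-products of the centred pairs; together they fix theta.
    cross = dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


# Hypothetical landmarks: scan coordinates and the same points on the patient (mm).
scan = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
patient = [(20.0, 30.0), (20.0, 40.0), (10.0, 30.0)]
theta, tx, ty = rigid_register_2d(scan, patient)
print(f"rotation {math.degrees(theta):.1f} deg, translation ({tx:.1f}, {ty:.1f}) mm")
```

For these landmarks the recovered transform is a 90-degree rotation plus a (20, 30) mm shift; once known, the same transform places every scan pixel in the surgeon’s view.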

Industrial and Manufacturing Sectors

In industrial settings, HUDs are enhancing efficiency by providing workers with hands-free, real-time data access. Smart glasses and head-mounted displays (HMDs) integrated with AR enable technicians to follow step-by-step assembly instructions, diagnose machinery faults, and collaborate with remote experts in real time. This technology reduces human error, improves productivity, and supports complex manufacturing processes in industries such as aerospace, automotive, and electronics.

Gaming and Augmented Reality Experiences

HUDs are redefining gaming by delivering immersive AR and mixed reality experiences. Devices like the Apple Vision Pro and Microsoft HoloLens allow gamers to interact with virtual environments seamlessly, blending digital objects with real-world surroundings. As AR technology advances, HUDs will play a crucial role in enhancing gaming realism, creating interactive virtual worlds, and expanding possibilities for entertainment, training simulations, and social experiences.

From enhancing road safety and aviation navigation to transforming healthcare, manufacturing, and entertainment, HUDs are becoming an indispensable tool across multiple industries. As AR technology continues to evolve, the future of HUDs will be defined by greater interactivity, improved visual clarity, and seamless integration with smart systems.

Latest Technologies Shaping HUDs

Recent technological advancements are continuously reshaping HUDs, making them more powerful and versatile. AI integration allows for adaptive displays that change based on user behavior and environmental factors. Holographic waveguides enhance depth perception and reduce eye strain, and MicroLED and OLED displays improve brightness and resolution while consuming less power. Additionally, the integration of 5G and cloud computing is transforming HUDs into dynamic, real-time data interfaces, expanding their potential across multiple industries. As these innovations progress, HUDs will continue to revolutionize human-machine interaction, enhancing safety, efficiency, and user experience in aviation, automotive, healthcare, industrial applications, and beyond.

1. Augmented Reality (AR) Integration

Modern HUDs are moving beyond simple data overlays and incorporating augmented reality (AR) elements. AR-powered HUDs project contextual information onto the real-world environment, improving navigation, targeting, and interaction. For instance, automotive AR HUDs highlight lane markers, upcoming turns, and pedestrian crossings in real time, while AR-based HUDs in aviation enhance situational awareness for pilots.
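Highlighting a lane marker or pedestrian “on” the road means drawing the symbol where the driver’s line of sight to that object crosses the HUD’s virtual image. The sketch below uses similar triangles (a pinhole-style model), assuming a fixed eye position, a flat virtual image plane at a known distance, and a vehicle frame with x forward, y left, z up. `HudPlane` and `project_to_hud` are hypothetical names; production AR HUDs would also need to correct for windshield distortion and track the driver’s head.

```python
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass
class HudPlane:
    distance_m: float  # eye to virtual image plane, measured straight ahead
    width_m: float     # physical size of the virtual image at that distance
    height_m: float
    width_px: int
    height_px: int


def project_to_hud(point: Vec3, eye: Vec3, plane: HudPlane) -> Optional[tuple[float, float]]:
    """Return (column, row) in HUD pixels, or None if the point is not visible."""
    x, y, z = (point[i] - eye[i] for i in range(3))  # x forward, y left, z up
    if x <= 0:
        return None  # behind the driver's eye
    # Similar triangles: where the eye-to-point ray crosses the image plane.
    u = plane.distance_m * y / x  # metres left of centre
    v = plane.distance_m * z / x  # metres above centre
    if abs(u) > plane.width_m / 2 or abs(v) > plane.height_m / 2:
        return None  # outside the HUD's field of view
    col = (0.5 - u / plane.width_m) * plane.width_px    # leftmost edge -> column 0
    row = (0.5 - v / plane.height_m) * plane.height_px  # top edge -> row 0
    return col, row


# Hypothetical AR HUD: 4 m x 1.5 m virtual image at 10 m, 1600 x 600 px.
hud = HudPlane(10.0, 4.0, 1.5, 1600, 600)
# Lane marker on the road 20 m ahead and 1.5 m left; eye 1.2 m above the road.
print(project_to_hud((20.0, 1.5, 0.0), (0.0, 0.0, 1.2), hud))
```

In this example the lane marker lands at roughly column 500, row 540: left of centre and low in the image, as expected for a point on the road ahead and to the left.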

2. Artificial Intelligence and Predictive Analytics

AI is playing a crucial role in the evolution of HUDs by enabling predictive analytics and intelligent assistance. AI-powered HUDs in vehicles can analyze road conditions, detect driver fatigue, and provide proactive safety alerts. Similarly, in military and industrial applications, AI-enhanced HUDs offer real-time threat assessment, object recognition, and operational insights.
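The text does not say how fatigue detection works. One widely used measure in driver-monitoring research is PERCLOS, the fraction of time the eyes are at least 80% closed over a rolling window. The sketch below assumes a camera pipeline (not shown) already supplies a per-frame eye-openness value between 0 and 1; the class name and any alert threshold paired with it are hypothetical.

```python
from collections import deque


class PerclosMonitor:
    """Rolling PERCLOS (P80) estimate from per-frame eye-openness values.

    openness: 1.0 = fully open, 0.0 = fully closed. A frame counts as closed
    when the eye is at least 80% closed, i.e. openness <= closed_threshold (0.2).
    """

    def __init__(self, window_frames: int, closed_threshold: float = 0.2):
        if window_frames < 1:
            raise ValueError("window must hold at least one frame")
        self.window: deque[bool] = deque(maxlen=window_frames)
        self.closed_threshold = closed_threshold
        self.closed_count = 0

    def update(self, openness: float) -> float:
        """Add one frame; return the fraction of closed frames in the window."""
        if len(self.window) == self.window.maxlen and self.window[0]:
            self.closed_count -= 1  # the oldest (closed) frame is about to drop out
        closed = openness <= self.closed_threshold
        self.window.append(closed)
        self.closed_count += closed
        return self.closed_count / len(self.window)


monitor = PerclosMonitor(window_frames=4)
for openness in [1.0, 0.1, 0.1, 1.0]:
    score = monitor.update(openness)
print(score)  # 0.5: two of the last four frames were closed
```

Keeping a running count makes each update constant-time, which matters when the window spans a minute or more of camera frames.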

3. Holographic and 3D Display Technologies

Traditional HUDs rely on flat projections, but the future lies in holographic and 3D display systems. Companies like WayRay and Envisics are pioneering holographic HUDs that create a more immersive experience by displaying information at multiple depth levels. This enhances depth perception and reduces distractions, making HUDs more effective in high-speed environments such as aviation and driving.

4. MicroLED and OLED for Enhanced Visibility

The latest advancements in display technologies, such as MicroLED and OLED, are making HUDs brighter, more energy-efficient, and better able to keep high-resolution visuals legible even in direct sunlight. These improvements are crucial for automotive HUDs, where display clarity under varying lighting conditions is a major challenge.
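Why brightness matters so much follows from how see-through contrast is commonly defined: the display adds light on top of the scene, so contrast ratio = (L_symbol + L_background) / L_background. The sketch below assumes luminances in cd/m² as seen at the viewer’s eye and a combiner (windshield) that reflects a fixed fraction of the source light; the example luminance, target ratio, and reflectance are illustrative assumptions, not specifications.

```python
def contrast_ratio(symbol_cd_m2: float, background_cd_m2: float) -> float:
    """See-through contrast ratio: (L_symbol + L_background) / L_background."""
    if background_cd_m2 <= 0:
        raise ValueError("background luminance must be positive")
    return (symbol_cd_m2 + background_cd_m2) / background_cd_m2


def required_source_luminance(target_ratio: float, background_cd_m2: float,
                              combiner_reflectance: float) -> float:
    """Source luminance needed so the reflected symbol reaches target_ratio.

    The combiner returns only a fraction (combiner_reflectance) of the source
    light to the eye, so the source must be brighter by 1 / reflectance.
    """
    if target_ratio < 1:
        raise ValueError("a see-through display cannot push contrast below 1")
    if not 0 < combiner_reflectance <= 1:
        raise ValueError("reflectance must be in (0, 1]")
    symbol_needed = (target_ratio - 1) * background_cd_m2
    return symbol_needed / combiner_reflectance


# Illustrative sunlit scene of 10,000 cd/m^2, target ratio 1.3, 20% reflective combiner.
print(round(required_source_luminance(1.3, 10_000, 0.2)))
```

Under these assumptions the source must emit about 15,000 cd/m², far beyond a typical desktop monitor, which is why source brightness and efficiency are central to automotive HUD design.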

5. 5G Connectivity and IoT Integration

The integration of HUDs with 5G and the Internet of Things (IoT) is enabling real-time data streaming and cloud-based processing. Connected HUDs can provide live traffic updates, vehicle-to-everything (V2X) communication, and remote diagnostics in cars, while in aviation and healthcare, they facilitate seamless data sharing and remote assistance.

Pico-Projectors and Augmented Reality Displays

Pico-projectors with MEMS-based laser scanners are emerging as a key technology for next-generation stereoscopic display systems, particularly in augmented reality (AR). These compact projectors combine high-performance MEMS scanners with red, green, and blue (RGB) lasers to create vivid, full-color images. By individually adjusting the laser intensity for each pixel and scanning the beam across a field, the system generates high-resolution images with smooth motion, relying on the persistence of vision at refresh rates above 60 Hz.

A major advantage of MEMS-based pico-projectors is that they are effectively focus-free: the narrow laser beam stays sharp over a wide range of distances without a projection lens, so images can be projected onto various surfaces without manual adjustment. Additionally, the use of RGB lasers provides a wide color gamut, enabling vibrant and accurate image reproduction. Due to their small size and low power consumption, these projectors can be integrated into compact devices like smartphones and wearable AR systems. However, their brightness is limited to comply with laser safety regulations, restricting their effectiveness in bright environments.

The continuous advancement of MEMS laser scanners has significantly improved display performance, with pixel rates of up to 120 million pixels per second approaching the requirements of full HD projection. Furthermore, optical waveguide technology is replacing conventional bulky optics in AR systems by embedding microscopic light-guiding structures within thin glass layers. This enables ultra-compact designs that still deliver sharp augmented visuals, making pico-projectors a key enabler for future AR displays.
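The pixel-rate figure can be sanity-checked with simple arithmetic: a raster-scanned display must modulate every pixel once per frame, so the required rate is width × height × refresh rate. This sketch ignores blanking and retrace time (real MEMS scanners lose part of each sweep, so their actual pixel clock must be higher); the function names are illustrative.

```python
def pixel_rate(width_px: int, height_px: int, refresh_hz: float) -> float:
    """Pixels per second a scanned display must modulate, ignoring blanking."""
    return width_px * height_px * refresh_hz


def max_refresh_hz(pixels_per_second: float, width_px: int, height_px: int) -> float:
    """Highest full-frame refresh rate a given pixel rate can sustain."""
    return pixels_per_second / (width_px * height_px)


full_hd = pixel_rate(1920, 1080, 60)           # 124,416,000 pixels per second
dwell_ns = 1e9 / full_hd                       # time the laser spends on each pixel
supported = max_refresh_hz(120e6, 1920, 1080)  # refresh rate at 120 Mpixel/s
print(f"{full_hd / 1e6:.1f} Mpx/s, {dwell_ns:.2f} ns per pixel, {supported:.1f} Hz at 120 Mpx/s")
```

At 120 million pixels per second a full HD frame can be refreshed roughly 58 times per second, just short of 60 Hz, which is consistent with “approaching” full HD. Each pixel gets only about 8 ns of laser time, which is why fast laser modulation matters as much as the scanner itself.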

Holography Enhancing Head-Up Displays for Aircraft Pilots

Holography is revolutionizing HUDs for aircraft pilots by enabling larger, more advanced visual interfaces without the need for bulky optical components. Researchers have demonstrated that holographic optical elements can significantly expand the eye box, the region within which the pilot can see the display, compared to conventional HUDs. This allows flight data to be presented more clearly and accessibly while keeping the design compact.

Unlike traditional HUDs that require larger projection optics to enhance the display size, holographic technology utilizes thin optical elements that can be directly applied to the windshield. These elements guide and manipulate light to produce a wider viewing area, limited only by the size of the display glass. By leveraging laser-based fabrication techniques similar to those used in anti-counterfeiting holograms, these optical elements can be produced efficiently at scale, making them an attractive alternative to conventional optics.
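Why conventional HUDs need larger optics for a larger eye box can be seen in a simplified one-axis model of a collimated (infinity-focused) HUD, as used in aircraft: to see the whole image, rays to both edges of the field of view must pass through the final optic. That leaves an eye box of roughly aperture − 2 × eye_distance × tan(FOV / 2). This sketch assumes a single limiting aperture and ignores pupil size and aberrations; the names and numbers are illustrative.

```python
import math


def eyebox_width_m(aperture_m: float, eye_distance_m: float, fov_deg: float) -> float:
    """Lateral range of eye positions that see the full field of view.

    From an eye at lateral offset e, the rays to the two edges of a collimated
    image cross the aperture plane at e +/- d*tan(fov/2); both must stay within
    +/- aperture/2, so |e| <= aperture/2 - d*tan(fov/2).
    """
    width = aperture_m - 2 * eye_distance_m * math.tan(math.radians(fov_deg / 2))
    return max(0.0, width)  # zero: no eye position sees the whole image


# Illustrative: 10-degree field of view viewed from 0.5 m.
for aperture in (0.15, 0.30):
    print(f"aperture {aperture:.2f} m -> eye box {eyebox_width_m(aperture, 0.5, 10.0) * 1000:.0f} mm")
```

Doubling the aperture from 0.15 m to 0.30 m more than triples the eye box in this example (from about 63 mm to about 213 mm), because the field of view consumes a fixed slice of the aperture. This is the constraint that thin holographic elements spread across the windshield aim to relax.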

Future advancements in this technology aim to integrate full-color displays and expand the field of view, further improving situational awareness for pilots. Ongoing collaborations with industry leaders, such as Honeywell, are accelerating the development of these holographic HUDs, bringing them closer to commercial deployment in modern aviation.

Quantum Technology for Integrated Optics Solutions

Quantum technology is playing a transformative role in the development of integrated optics solutions, enabling advanced light manipulation for next-generation display systems. By leveraging precise control of light within specialized optical structures, this approach enhances energy efficiency, color accuracy, and overall display performance. One key innovation involves the use of femtosecond-laser micromachining to create intricate waveguide networks within transparent materials, allowing for the efficient guiding, splitting, and steering of laser light.

This technique offers significant advantages over traditional optical components, including greater design flexibility, the ability to work with various transparent materials, and low-loss transmission of high-power laser light across different wavelengths. Such innovations open the door to high-performance holographic displays and substantial improvements in conventional LCD technology, particularly for mobile devices, where enhanced efficiency can significantly extend battery life.
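“Low-loss” is usually quantified in decibels, which makes losses along a waveguide network simply add. The sketch below is a minimal power budget for a written waveguide feeding an ideal, equal 1×N splitter; the propagation loss, length, and input power are hypothetical values, not figures from the text, and real devices add coupling and excess losses (modelled here only as the optional `excess_db` parameter).

```python
import math


def splitter_loss_db(n_outputs: int) -> float:
    """Intrinsic per-port loss of an ideal, equal 1xN splitter."""
    if n_outputs < 1:
        raise ValueError("need at least one output")
    return 10 * math.log10(n_outputs)


def power_per_output_mw(input_mw: float, length_cm: float, loss_db_per_cm: float,
                        n_outputs: int = 1, excess_db: float = 0.0) -> float:
    """Optical power reaching each output port after propagation and splitting."""
    total_db = length_cm * loss_db_per_cm + splitter_loss_db(n_outputs) + excess_db
    return input_mw * 10 ** (-total_db / 10)


# Hypothetical: 100 mW in, 2 cm of waveguide at 0.3 dB/cm, split four ways.
print(f"{power_per_output_mw(100.0, 2.0, 0.3, n_outputs=4):.1f} mW per output")
```

In this example the unavoidable splitting loss (about 6 dB for four ports) dominates the 0.6 dB of propagation loss, so each port receives about 21.8 mW; lowering propagation loss pays off most in long or heavily branched networks.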

A crucial challenge in advancing quantum-integrated optics solutions lies in ensuring compatibility with scalable, cost-effective manufacturing processes. As the cost of femtosecond lasers continues to decline, mass production of these advanced optical chips is becoming more feasible, paving the way for widespread adoption in consumer electronics and other high-tech applications.

Challenges and Future Prospects of Head-Up Displays

Despite the rapid advancements in HUD technology, several challenges continue to hinder widespread adoption and seamless integration across industries. One of the most significant barriers is cost and complexity. High-end HUDs, especially those incorporating augmented reality (AR) and artificial intelligence (AI), require sophisticated optical systems, advanced projection units, and high-resolution display technologies. These factors contribute to increased manufacturing costs, limiting HUD availability primarily to premium automotive models, military aviation, and specialized industrial applications. Reducing production costs while maintaining quality and performance remains a key challenge for manufacturers looking to expand HUD adoption into mid-range and budget-friendly markets.

Another major challenge is display limitations. Achieving optimal brightness, contrast, and clarity across different lighting conditions is crucial, particularly for automotive and outdoor applications. While modern HUDs leverage MicroLED, OLED, and laser-based projection technologies to improve visibility, maintaining image fidelity in bright sunlight or adverse weather conditions remains difficult. Additionally, AR HUDs require precise depth perception and alignment with real-world objects, which demands high processing power and seamless calibration—areas that still require improvement.
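Why alignment is so demanding can be estimated with first-order arithmetic: any delay between sensing the scene and drawing the overlay lets the world rotate relative to the graphics. This sketch assumes a constant yaw rate and no motion prediction; the rate, latency, and distance in the example are illustrative, not measured values.

```python
import math


def registration_error(yaw_rate_deg_s: float, latency_s: float,
                       target_distance_m: float) -> tuple[float, float]:
    """Angular misalignment and lateral offset caused by end-to-end latency.

    During the latency the vehicle (or head) turns by rate * latency; an
    overlay drawn for the old direction then misses a target at distance d
    by d * tan(angle).
    """
    angle_deg = yaw_rate_deg_s * latency_s
    offset_m = target_distance_m * math.tan(math.radians(angle_deg))
    return angle_deg, offset_m


# Illustrative: 30 deg/s turn, 50 ms from sensor to display, pedestrian 20 m ahead.
angle, offset = registration_error(30.0, 0.050, 20.0)
print(f"{angle:.1f} deg misalignment, about {offset:.2f} m at the target")
```

A misalignment of about half a metre is enough to draw a pedestrian highlight beside the pedestrian rather than on them, which is why AR HUDs depend on low latency, motion prediction, or both.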

User adaptation presents another hurdle in HUD adoption. While HUDs aim to enhance situational awareness by keeping critical information within the user’s field of vision, some individuals may find the projections distracting or difficult to interpret, especially in high-speed or high-stress environments. Ensuring an intuitive and non-intrusive user experience requires further advancements in human-machine interaction research, including optimizing information presentation, reducing cognitive load, and refining eye-tracking mechanisms to personalize HUD displays based on user focus.

Looking ahead, the future of HUDs is promising, with continuous innovation addressing existing limitations and expanding the technology’s capabilities. Quantum-dot displays and flexible OLED screens are expected to revolutionize HUD design, offering higher color accuracy, improved brightness, and adaptable form factors for curved and unconventional surfaces. Meanwhile, AI-driven automation will enhance HUD functionality by dynamically adjusting displayed information based on real-time user behavior, environmental conditions, and predictive analytics. For example, AI-integrated HUDs in vehicles will provide proactive safety alerts, adaptive navigation guidance, and personalized infotainment experiences.
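The text does not specify how HUDs would decide what to show. As a deliberately simple, rule-based stand-in for the AI-driven adjustment it describes (not an AI method itself), the sketch below ranks candidate items by a static priority plus a boost when an item matches the current driving context, then shows only the top few to limit clutter. `HudItem`, the priorities, the context names, and the boost value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HudItem:
    name: str
    base_priority: int        # hypothetical static importance; higher wins
    contexts: frozenset[str]  # situations in which the item is especially relevant


def select_items(items: list[HudItem], active_contexts: set[str],
                 max_items: int, context_boost: int = 10) -> list[str]:
    """Return the names of at most max_items items, most important first."""
    def score(item: HudItem) -> int:
        return item.base_priority + (context_boost if item.contexts & active_contexts else 0)
    # sorted() is stable, so items with equal scores keep their original order.
    return [item.name for item in sorted(items, key=score, reverse=True)[:max_items]]


items = [
    HudItem("speed", 5, frozenset()),
    HudItem("navigation_arrow", 3, frozenset({"approaching_turn"})),
    HudItem("collision_warning", 1, frozenset({"hazard_detected"})),
    HudItem("media_title", 2, frozenset({"cruising"})),
]
print(select_items(items, {"hazard_detected"}, max_items=2))
```

With a hazard detected, the collision warning jumps ahead of everything else and only speed remains alongside it; a learned model could replace `score` without changing the selection step.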

As industries increasingly embrace digital transformation, HUDs will become an integral part of daily life, extending beyond automotive and aviation applications into healthcare, smart wearables, and industrial workspaces. The integration of 5G, cloud computing, and edge AI will enable real-time data synchronization across connected systems, making HUDs more intelligent and responsive. With advancements in holographic waveguides, spatial computing, and AR-enhanced interfaces, HUDs will not only improve efficiency and safety but also redefine the way users interact with digital information in real-world environments. As research and development continue to push the boundaries of what is possible, HUDs will evolve from being supplementary displays to essential components of next-generation human-machine interaction.

Conclusion

The future of HUDs is bright, with advancements in AR, AI, holography, and connectivity pushing the boundaries of what these systems can achieve. Whether in cars, aircraft, hospitals, factories, or gaming, HUDs are revolutionizing how we interact with information and our surroundings. As the technology matures, HUDs will play a crucial role in shaping the next generation of smart, intuitive, and immersive digital experiences.

International Defense Security & Technology Your trusted Source for News, Research and Analysis
