In recent years, the automotive industry has been experiencing a revolutionary shift towards driverless cars. Powered by cutting-edge technologies, these vehicles are reshaping transportation as we know it. Among these technologies, fully autonomous systems have emerged as the driving force behind the global driverless car revolution. In this article, we will explore how fully autonomous technologies are propelling us towards a future where self-driving cars are the norm.

Autonomous vehicles will ease congestion, shorten commutes, reduce fuel consumption, slow global warming, enhance accessibility, liberate parking spaces for better uses, and improve public health and social equity. Analysts predict that by 2050 self-driving cars will save 59,000 lives and 250 million commuting hours annually and support a new “passenger economy” worth $7 trillion USD.

Most global automakers and several technology companies are actively developing autonomous-vehicle technology, including Google, General Motors, Ford, Volkswagen, Toyota, Honda, Tesla, Volvo, and BMW. They are trying to make drivers obsolete, handing control of the wheel to a computer that can make intelligent decisions about when to turn and how to brake.

Autonomous Technologies

Autonomous Technologies are revolutionizing transportation with self-driving cars, also known as autonomous or driverless cars. Several critical technologies underpin the safe and efficient operation of autonomous vehicles. These include smart sensors, cameras, radar and LIDAR systems, AI and machine vision, high-performance computing, network infrastructure, automotive-grade safety solutions, security, and privacy. The seamless integration of these technologies is crucial to ensure the safety and success of autonomous vehicle operations.

The foundation of autonomous systems lies in constructing a world model, which must be continually updated. To achieve this, the vehicle perceives its environment through cameras, microphones, and tactile sensors, reconstructing an effective and updated model for decision-making.

Among these technologies, an omnidirectional array of cameras covers different visual depths and angles, enabling simultaneous detection of nearby lane markers, construction signs, and distant streetlights. Radar sensors, which are largely unaffected by weather conditions, track the distance, size, speed, and trajectory of objects that may intersect the vehicle’s path. Ultrasonic sensors offer close-range detection, particularly useful for parking maneuvers.

In the case of driverless cars and some drones, this process involves a combination of sensors such as LIDAR, traditional radars, stereoscopic computer vision, and GPS technology. These sensors work together to generate a high-definition 3D precision map of the vehicle’s surroundings. By combining this map with high-resolution maps of the world, the autonomous vehicle can safely navigate to its destination while avoiding obstacles such as pedestrians, bicycles, other cars, medians, children playing, fallen rocks, and trees. Additionally, these technologies allow the vehicle to negotiate its spatial relationship with other cars on the road.
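One simple way to picture how readings from several sensors can be combined is inverse-variance weighting, where the less noisy sensor gets more say in the fused estimate. The sketch below is only an illustration: the function name `fuse_ranges` and the noise figures for the lidar and radar readings are assumptions, not values from any production stack.

```python
def fuse_ranges(measurements):
    """Fuse independent range estimates given as (distance_m, variance_m2) pairs.

    Each reading is weighted by the inverse of its variance, so the
    less noisy sensor dominates the fused estimate.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, measurements)) / total
    fused_variance = 1.0 / total
    return fused, fused_variance


# Hypothetical readings of the same obstacle (assumed noise levels).
lidar = (20.0, 0.01)   # low-noise reading
radar = (20.6, 0.09)   # noisier reading
distance, variance = fuse_ranges([lidar, radar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f} m^2")
```

The fused estimate stays close to the lidar reading because its assumed variance is nine times smaller, and the fused variance is lower than that of either sensor alone.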

LIDAR, by manipulating transmitted light, generates a millimeter-accurate 3D representation of the vehicle’s surroundings known as a point cloud. These point clouds are compared with 3D maps of the roads, called prior maps, stored in memory. Using well-known alignment algorithms, the system matches the point cloud against the prior maps to identify the vehicle’s position on the road with sub-centimeter precision. LIDAR-based localization also requires 3D SLAM (simultaneous localization and mapping) software, which constructs or updates a map of an unknown environment while simultaneously tracking the vehicle’s position within it.
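To make the idea of aligning a scan with a prior map concrete, here is a deliberately simplified sketch. It works in 2D and assumes each scanned point is already matched to its counterpart in the prior map. Real systems work in 3D and must also discover those correspondences, for example with iterative scan-matching methods. The function names and landmark coordinates are illustrative assumptions.

```python
import math


def align_2d(scan, prior):
    """Return (theta, tx, ty) that best maps scan points onto prior-map points.

    Assumes scan[i] corresponds to prior[i]; minimizes the summed squared error.
    """
    n = len(scan)
    if n == 0 or n != len(prior):
        raise ValueError("scan and prior must be non-empty and equally long")
    sx = sum(p[0] for p in scan) / n
    sy = sum(p[1] for p in scan) / n
    px = sum(q[0] for q in prior) / n
    py = sum(q[1] for q in prior) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(scan, prior):
        # Work with coordinates relative to each set's centroid.
        ax, ay, bx, by = ax - sx, ay - sy, bx - px, by - py
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = px - (c * sx - s * sy)
    ty = py - (s * sx + c * sy)
    return theta, tx, ty


def to_vehicle_frame(point, theta, tx, ty):
    """Express a map point in the frame of a vehicle at pose (theta, tx, ty)."""
    x, y = point[0] - tx, point[1] - ty
    c, s = math.cos(theta), math.sin(theta)
    return (c * x + s * y, -s * x + c * y)


# Hypothetical landmarks in the prior map, then the same landmarks as a
# vehicle at heading 0.1 rad and position (2.0, -1.0) would observe them.
prior_map = [(5.0, 0.0), (0.0, 4.0), (-3.0, -2.0), (6.0, 6.0)]
scan = [to_vehicle_frame(p, 0.1, 2.0, -1.0) for p in prior_map]
theta, tx, ty = align_2d(scan, prior_map)
print(f"heading {theta:.3f} rad, position ({tx:.3f}, {ty:.3f})")
```

The recovered rotation and translation are the vehicle's pose in map coordinates, which is the quantity localization is after.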

While LIDAR technology has shown great potential for improvement, such as cost reduction through solid-state sensors, increased sensor range up to 200m, and 4-dimensional capabilities that sense both position and velocity of objects, its significant cost remains a hindrance to wider adoption.

Driverless cars tap into external sources of geospatial data, similar to how we rely on Siri, Google, or mental maps. While standard GPS provides accuracy within several feet, it falls short for autonomous navigation. To address this limitation, the industry is developing dynamic HD maps accurate within inches. These maps offer geographic foresight to the car’s sensors, allowing the vehicle to calculate its precise position relative to fixed landmarks.

A driverless car computer is required to track all the dynamics of all nearby vehicles and obstacles, constantly compute all possible points of intersection, and then estimate how it thinks traffic is going to behave in order to make a decision to act.
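A small piece of this prediction task can be sketched as a closest-approach calculation. Assuming another road user keeps a constant velocity relative to the car (a strong simplification), the time and distance of closest approach follow directly from the relative position and velocity. The function name and the numbers are illustrative assumptions.

```python
def closest_approach(rel_pos, rel_vel):
    """Time (s) and distance (m) of closest approach for constant relative velocity.

    rel_pos and rel_vel are (x, y) tuples of the other object relative to the car.
    """
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        t = 0.0  # no relative motion: the gap never changes
    else:
        # Minimizer of |rel_pos + rel_vel * t|, clamped so we never look into the past.
        t = max(0.0, -(px * vx + py * vy) / speed_sq)
    dx, dy = px + vx * t, py + vy * t
    return t, (dx * dx + dy * dy) ** 0.5


# Hypothetical case: an object 20 m ahead and 3 m to the side, closing at 10 m/s.
t_min, d_min = closest_approach((20.0, 3.0), (-10.0, 0.0))
print(f"closest approach in {t_min:.1f} s at {d_min:.1f} m")
```

A planner would repeat such a check for every tracked object and for many candidate trajectories, which is part of why the computing load is so high.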

Despite advancements, fully autonomous driving still faces challenges in localization, mapping, scene perception, vehicle control, trajectory optimization, and higher-level planning decisions. These challenges have yet to be fully addressed by systems incorporated into production platforms, even within restricted operational spaces.

The world model of a driverless car is significantly more advanced than that of a typical unmanned aerial vehicle (UAV), reflecting the complexity of the operating environment. Navigation for driverless cars is more difficult as they require maps indicating preferred routes, obstacles, and no-go zones. Moreover, they must understand the positions and movements of nearby vehicles, pedestrians, and cyclists in the next few seconds. The fidelity of the world model and the timeliness of its updates are essential for an effective autonomous system.

For a deeper understanding of autonomous vehicle technologies, see Autonomous Vehicles: A Guide to the Technology, Benefits, and Challenges.

Challenges of Fully Autonomous Driverless Cars

While fully autonomous driverless cars hold great promise for revolutionizing transportation, there are several significant challenges that need to be addressed before they can become a widespread reality. These challenges include technological limitations, regulatory frameworks, public acceptance, and ethical considerations. Let’s explore these challenges in more detail:

- Technological Limitations:

Achieving full autonomy in driving is a complex task that requires advanced technologies to work seamlessly together. Current autonomous systems still face limitations in handling certain driving scenarios, such as inclement weather conditions, complex urban environments with heavy traffic, and unexpected road situations. Enhancing the reliability and robustness of autonomous technologies to handle these challenging conditions remains a significant technological challenge.

- Regulatory Frameworks:

Integrating fully autonomous driverless cars into existing regulatory frameworks presents a considerable challenge. Establishing comprehensive and standardized regulations that ensure the safety, security, and accountability of autonomous vehicles is crucial. Developing consistent international standards is also necessary to facilitate the deployment and operation of driverless cars across different regions and countries.

- Public Acceptance and Trust:

Public acceptance and trust in autonomous vehicles are essential for their successful adoption. Convincing the general public that driverless cars are safe, reliable, and capable of making better decisions than human drivers requires effective communication and education. Addressing concerns about privacy, data security, and the potential impact on jobs in the transportation industry is crucial for gaining public acceptance.

- Ethical Considerations:

Fully autonomous driverless cars raise ethical dilemmas that need to be carefully addressed. These vehicles must be programmed to make split-second decisions in life-threatening situations, such as choosing between potential collisions involving pedestrians or passengers. Determining the ethical guidelines and priorities for these decisions raises complex moral and legal questions that require careful consideration and public debate.

- Infrastructure Readiness:

The deployment of fully autonomous driverless cars requires an infrastructure that supports their operation. This includes advanced communication networks, smart traffic management systems, and vehicle-to-infrastructure (V2I) connectivity. Updating existing infrastructure and ensuring interoperability between different systems and manufacturers is a significant challenge that needs to be overcome.

- Liability and Insurance:

Determining liability in accidents involving autonomous vehicles is a complex issue. When accidents occur, it becomes crucial to establish whether the responsibility lies with the vehicle manufacturer, the software developers, or the human occupants. Developing appropriate insurance models that consider the unique risks and liabilities associated with autonomous driving is necessary to provide adequate coverage and protect all stakeholders involved.

Overcoming Challenges with Technologies in Fully Autonomous Driverless Cars

- Technological Advancements:

Continued research and development in technologies such as artificial intelligence (AI), machine learning, and sensor technologies can help overcome the current limitations of autonomous systems. Advancements in sensor technology, including improved lidar, radar, and camera systems, can enhance the perception capabilities of autonomous vehicles, enabling them to navigate complex scenarios and adverse weather conditions more effectively. Additionally, the integration of advanced algorithms and predictive models can enhance decision-making capabilities, ensuring safer and more efficient driving.

- Connectivity and V2X Communication:

Leveraging advancements in communication technologies, such as 5G networks, can enable seamless communication between autonomous vehicles and their surrounding infrastructure. Vehicle-to-everything (V2X) communication can provide real-time data exchange, allowing vehicles to receive information about road conditions, traffic congestion, and other relevant factors. This connectivity enhances the overall situational awareness of autonomous cars, enabling them to make better-informed decisions and navigate challenging scenarios with greater efficiency.

- Simulation and Virtual Testing:

Simulation and virtual testing environments can play a crucial role in overcoming the challenges of real-world testing and validation. By creating highly realistic virtual environments, developers can simulate a wide range of driving scenarios, including rare or dangerous situations that are difficult to reproduce in real life. Virtual testing can accelerate the development process, ensure system robustness, and allow for more comprehensive training of autonomous systems, leading to safer and more reliable autonomous vehicles.

- Data and Machine Learning:

The collection and analysis of vast amounts of data from real-world driving scenarios are instrumental in improving the performance and capabilities of autonomous systems. Machine learning algorithms can process this data to enhance the decision-making abilities of self-driving cars. By continuously learning from real-time data, autonomous vehicles can adapt to evolving road conditions, unexpected situations, and human behavior, thereby improving their overall performance and safety.

- Collaboration and Industry Standards:

Addressing regulatory challenges and establishing industry standards requires collaboration among automakers, technology companies, policymakers, and regulatory bodies. Collaborative efforts can ensure the development of consistent safety protocols, ethical guidelines, and regulatory frameworks that support the deployment and operation of fully autonomous driverless cars. Industry-wide standards for safety, cybersecurity, and data privacy can create a level playing field and foster public trust in autonomous technologies.

- Continuous Testing and Iterative Development:

Continuous testing and iterative development are vital for refining and improving autonomous systems. Real-world testing, combined with virtual simulation, can help identify edge cases, uncover potential vulnerabilities, and validate the performance of autonomous vehicles in various scenarios. By continuously collecting feedback and iteratively enhancing the technology, developers can address challenges and make necessary improvements, ultimately advancing the capabilities and reliability of fully autonomous driverless cars.

Conclusion:

Through technological advancements and collaborative efforts, the challenges associated with fully autonomous driverless cars can be overcome. Continued research, development, and innovation in areas such as AI, connectivity, simulation, data analysis, and industry standards are key to addressing the limitations and concerns surrounding autonomous driving. By leveraging these technologies and fostering collaboration, we can pave the way for a future where fully autonomous driverless cars are safe, efficient, and widely accepted, transforming transportation and enhancing our daily lives.

Road to full autonomy

Driverless cars are moving incrementally towards full autonomy. Many of the basic ADAS building blocks, such as adaptive cruise control, automatic emergency braking, and lane-departure warning, are already in place and under the control of a central computer that assumes responsibility for driving the vehicle. Moving from driver assistance to fully autonomous capabilities requires the technologies to mature and prices to drop.

Tony Tung, Sales Manager for Mobileye Automotive Products and Services (Shanghai), explained during the Symposium on Innovation & Technology how the road from Advanced Driver Assistance Systems (ADAS) to full autonomy depends on real-time ‘sensing’ of the vehicle’s environment; ‘mapping’ awareness and foresight; and decision-making ‘driving’ through assessing threats, planning maneuvers and negotiating traffic.

Fred Bower, distinguished engineer at the Lenovo Data Center Group, is also optimistic. “Advances in image recognition from deep-learning techniques have made it possible to create a high-fidelity model of the world around the vehicle,” he says. “I expect to see continued development of driver-assist technologies as the on-ramp to fully autonomous vehicles.”

Continued advancements in the technology and widespread acceptance of their use will require significant collaboration throughout the automotive and technology industry. Sciarappo sees three landmarks required to ensure the widespread acceptance of autonomous vehicles. “Number one: ADAS features need to become standard. Number two: there needs to be an industrywide effort to figure out how to measure and test the technology and its ability to avoid accidents and put us on that path to that autonomous future,” she says. “Number three: policymakers need to get on board and help figure out how to push this technology forward.”

In autonomous driving, key technologies are also approaching the tipping point: the object tracking algorithm, the algorithm used to identify objects near vehicles, has reached a 90% accuracy rate. Solid-state LiDAR (similar to radar but based on light from lasers) was introduced for high-frequency data collection of vehicle surroundings.

VoxelFlow™ is a lightning-fast 3D technology that scans the area around autonomous vehicles (AV) and Advanced Driver Assistance Systems (ADAS) vehicles at a radius of 40 meters with a response time of three milliseconds, much faster than today’s ADAS systems, which take 300 milliseconds. STARTUP AUTOBAHN is powered by Plug and Play and sponsored by Daimler and the University of Stuttgart.
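To see why the difference between 3 and 300 milliseconds matters, it helps to convert response time into distance travelled before the system reacts. The sketch below does that arithmetic. The 100 km/h speed is an assumed example value, not a figure from the source.

```python
def distance_during_latency(speed_kmh, latency_ms):
    """Metres travelled while the sensing system is still responding."""
    speed_ms = speed_kmh / 3.6          # km/h -> m/s
    return speed_ms * (latency_ms / 1000.0)


SPEED_KMH = 100.0  # assumed highway speed for illustration
slow = distance_during_latency(SPEED_KMH, 300.0)
fast = distance_during_latency(SPEED_KMH, 3.0)
print(f"300 ms: {slow:.2f} m travelled, 3 ms: {fast:.3f} m travelled")
```

At the assumed speed, a 300 ms response corresponds to roughly eight metres of travel, while a 3 ms response corresponds to less than ten centimetres.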

Lots of companies are building maps like this, including Alphabet’s Waymo, German automakers’ HERE, Intel’s Mobileye, and the Ford-funded startup Civil Maps. They send their own Lidar-topped cars out into the streets, harvest “probe data” from partner trucking companies, and solicit crowdsourced information from specially-equipped private vehicles; and they use artificial intelligence, human engineers, and consumer “ground-truthing” services to annotate and refine meaningful information within the captured images. Even Sanborn, the company whose incredibly detailed fire insurance maps anchor many cities’ historic map collections, now offers geospatial datasets that promise “true-ground-absolute accuracy.” Uber’s corporate-facing master map, which tracks drivers and customers, is called “Heaven” or “God View”; the parallel software which reportedly tracked Lyft competitors was called “Hell.”

Because these technologies have quickly become viable, major companies like Google, Nvidia, Intel, and BMW are accelerating efforts to develop self-driving vehicles.

Baidu has opened up its driverless car technology for auto makers to use as it aims to be the default platform for autonomous driving in a bid to challenge the likes of Google and Tesla. The Chinese internet giant said that the new project named Apollo, will provide the tools carmakers would need to make autonomous vehicles. There would be reference designs and a complete software solution that includes cloud data services. Essentially, Baidu is trying to become to cars what Google’s Android has become to smartphones – an operating system that will power a number of driverless vehicles.

Safety and Security

Autonomous vehicles won’t gain widespread acceptance until the riding public feels assured of their safety and security, not only of passengers but also other vehicles and pedestrians. A self-driving Uber test vehicle made the headlines when it killed a pedestrian in Tempe, Arizona, in 2018, even though it had a back-up driver inside, and sparked renewed debate on the safety of self-driving cars, especially in dense, big cities. A 2021 survey by the American Automobile Association showed that only 14 per cent of respondents trusted a vehicle to drive itself safely.

Achieving zero roadway deaths is necessary for universal adoption of autonomous driving and is the objective of the recently released U.S. National Roadway Safety Strategy.

Sciarappo points to the Responsibility-Sensitive Safety (RSS) framework, a safety standard Intel has developed, to help drive this level of acceptance. “The RSS framework is a way for us to start talking about the best practices for keeping cars in safe mode.” While safe on-road operations are the primary aspect of autonomous-vehicle safety and security, the potential for hacking a self-driving vehicle is another key concern.
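One of the rules in the published RSS work is a minimum safe following distance: the rear car is assumed to accelerate during its response time and then brake gently, while the front car brakes as hard as it can. The sketch below follows that commonly cited formula as I understand it. The function name and all parameter values are illustrative assumptions, not figures from the source or from any regulation.

```python
def rss_min_gap(v_rear, v_front, response_time, a_max_accel, b_min_brake, b_max_brake):
    """Minimum safe longitudinal gap in metres (speeds in m/s, accelerations in m/s^2).

    v_rear / v_front: speeds of the following and leading car
    response_time:    delay before the rear car starts braking
    a_max_accel:      worst-case acceleration of the rear car during that delay
    b_min_brake:      braking the rear car is guaranteed to apply afterwards
    b_max_brake:      hardest braking the front car might apply
    """
    v_after_delay = v_rear + response_time * a_max_accel
    gap = (
        v_rear * response_time
        + 0.5 * a_max_accel * response_time ** 2
        + v_after_delay ** 2 / (2.0 * b_min_brake)
        - v_front ** 2 / (2.0 * b_max_brake)
    )
    return max(0.0, gap)  # a negative value means any gap is safe


# Assumed example: both cars at 20 m/s (72 km/h), 1 s response time.
gap_m = rss_min_gap(20.0, 20.0, 1.0, a_max_accel=2.0, b_min_brake=4.0, b_max_brake=8.0)
print(f"minimum safe gap: {gap_m:.1f} m")
```

Because the rule is a closed-form expression, it can be checked independently of whatever planner produced the driving decision, which is the kind of measurable best practice the quote describes.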

Autonomous Driving With Deep Learning

Autonomous cars have to match humans in decision-making while multitasking between different ways of reading and understanding the world, while also distributing the sensors among different widths and depths of field and lines of sight (not to mention dashboards and text messages and unruly passengers). All this sensory processing, ontological translation, and methodological triangulation can be quite taxing.

Artificial intelligence powers self-driving vehicle frameworks. Within AI is a large subfield called machine learning (ML). Machine learning gives computers the capability to learn from data, so that a new program need not be written for each task. Deep neural networks (DNNs) are a type of neural network with a large number of layers, typically ranging from five to more than a thousand. Earlier machine learning methods could only learn classifications from hand-crafted features; DNNs addressed that limitation by also learning the relevant features. This capability allows deep learning systems to be trained on raw image, video, or audio data rather than on feature-based training sets.

Engineers of self-driving vehicles use vast amounts of data from image recognition systems, alongside AI and neural networks, to build systems that can drive autonomously. The neural networks identify patterns in the data, which is fed to the AI algorithms. That data includes images from the cameras on self-driving vehicles. The neural networks learn to recognize traffic signals, trees, curbs, pedestrians, road signs, and other parts of any given driving environment.

Tesla (which, for now, insists that its cars can function without Lidar) has built a “deep” neural network to process visual, sonar, and radar data, which, together, “provide a view of the world that a driver alone cannot access, seeing in every direction simultaneously, and on wavelengths that go far beyond the human senses.”

Coming straight out of Stanford’s AI Lab, Mountain View, Calif.-based Drive.ai has adopted a scalable deep-learning approach for working toward Level 4 autonomy (a self-driving system that doesn’t require human intervention in most scenarios). “We’re solving the problem of a self-driving car by using deep learning for the full autonomous integrated driving stack (from perception, to motion planning, to controls) as opposed to just bits and pieces like other companies have been using for autonomy,” Reiley says. “We’re using an integrated architecture to create a more seamless approach.”

Each of Drive.ai’s vehicles carries a suite of nine HD cameras, two radars, and six Velodyne Puck lidar sensors that are continuously capturing data for map generation, for feeding into deep-learning algorithms, and of course for the driving task itself.

When you’re developing a self-driving car, the hard part is handling the edge cases. These include weather conditions like rain or snow, for example. Right now, people program in specific rules to get this to work. The deep learning approach instead learns what to do by fundamentally understanding the data. Says Tandon: The first step for Drive.ai is to get a vehicle out on the road and start collecting data that can be used to build up the experience of their algorithms. Deep-learning systems thrive on data. The more data an algorithm sees, the better it’ll be able to recognize, and generalize about, the patterns it needs to understand in order to drive safely.

“It’s not about the number of hours or miles of data collected,” says Tandon. “It comes down to having the right type of experiences and data augmentation to train the system—which means having a team that knows what to go after to make the system work in a car. This move, from simulation environments and closed courses onto public roads, is a big step for our company and we take that responsibility very seriously.”

Wayve, a London-based startup working on autonomous vehicles, said it has developed AI technology that can enable any electric vehicle to drive autonomously in any city. Wayve has built a machine-learning model that can drive two different types of vehicle: a passenger car and a delivery van. It is the first time the same AI driver has learned to drive multiple vehicles.

Wayve’s AI model is trained using a combination of reinforcement learning, where it learns by trial and error, and imitation learning, where it copies the actions of human drivers. Wayve’s technology promises to do for autonomous cars what Windows did for personal computers: provide an operating system that functions the same, irrespective of the hardware, eliminating the need to develop custom software every time.

Ghost, which is based in Mountain View, California, takes a different approach. Instead of a single large model, it trains many hundreds of smaller models, each with a specialism. It then hand-codes simple rules that tell the self-driving system which models to use in which situations. According to Volkmar Uhlig, Ghost’s co-founder and CTO, splitting the AI into many smaller pieces, each with specific functions, makes it easier to establish that an autonomous vehicle is safe. “At some point, something will happen,” he says. “And a judge will ask you to point to the code that says: ‘If there’s a person in front of you, you have to brake.’ That piece of code needs to exist.” The code can still be learned, but in a large model like Wayve’s it would be hard to find, says Uhlig.
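The general pattern described here, small specialist models selected by explicit hand-written rules, can be sketched in a few lines. This is not Ghost's code: the scene keys, the model functions, and the returned commands are all hypothetical stand-ins chosen only to show where an auditable rule would live.

```python
def lane_keeping_model(scene):
    """Stand-in for a small learned model that handles ordinary lane driving."""
    return "steer_to_lane_center"


def intersection_model(scene):
    """Stand-in for a small learned model specialised in intersections."""
    return "yield" if scene.get("cross_traffic") else "proceed"


def drive_step(scene):
    """Pick an action using hand-coded rules that select a specialist model."""
    # Explicit, auditable rule: a person ahead always means braking.
    if scene.get("person_ahead"):
        return "brake"
    if scene.get("at_intersection"):
        return intersection_model(scene)
    return lane_keeping_model(scene)


print(drive_step({"person_ahead": True, "at_intersection": True}))
print(drive_step({"at_intersection": True, "cross_traffic": True}))
print(drive_step({}))
```

The braking rule sits in one readable line that can be pointed to directly, which is the property Uhlig argues is hard to obtain from a single large model.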

(Ghost’s approach is similar to that taken by another AV2.0 firm, Autobrains, based in Israel. But Autobrains uses yet another layer of neural networks to learn the rules.) Wayve, Waabi, and Ghost are part of a new generation of startups, sometimes known as AV2.0, that is ditching the robotics mindset embraced by the first wave of driverless car firms, in which driverless cars rely on super-detailed 3D maps and separate modules for sensing and planning. Instead, these startups rely entirely on AI to drive the vehicles.

Computing power that could accelerate the arrival of driverless cars

Neural Propulsion Systems (NPS), a pioneer in autonomous sensing platforms, issued a paper in March 2022. The paper finds that zero deaths require sensing and processing a peak data rate on the order of 100 × 10^12 bits per second (100 terabits per second) for vehicles to safely operate under the worst roadway conditions. This immense requirement is 10 million times greater than the sensory data rate from our eyes to our brains.
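The two figures quoted above can be cross-checked with simple arithmetic: dividing the 100 Tb/s peak rate by the stated factor of 10 million gives the eye-to-brain data rate that the comparison implies. The variable names below are illustrative.

```python
PEAK_SENSOR_RATE_BPS = 100 * 10**12   # 100 Tb/s, as quoted from the NPS paper
RATIO_TO_HUMAN_EYE = 10_000_000       # "10 million times greater"

# Rate implied for the human visual pathway by the two quoted numbers.
implied_eye_rate_bps = PEAK_SENSOR_RATE_BPS // RATIO_TO_HUMAN_EYE
print(f"implied eye-to-brain rate: {implied_eye_rate_bps / 1e6:.0f} Mb/s")
```

The implied figure is about 10 megabits per second.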

The paper also shows that sensing and processing 100 Tb/s can be accomplished by combining breakthrough analytics, advanced multi-band radar, solid state LiDAR, and advanced system on a chip (SoC) technology. Such an approach will allow companies developing advanced driver assistance systems (ADAS) and fully autonomous driving systems to accelerate progress.

NPS achieved pilot scale proof-of-concept of the core sensor element required for zero roadway deaths at a Northern California airfield in December 2021. One reason for this successful historic event is the Atomic Norm, a recently discovered mathematical framework that radically changes how sensor data is processed and understood. Atomic Norm was developed at Caltech and MIT and further developed specifically for autonomous driving by NPS.

Hod Hasharon-based Valens showcased a new chipset that can transmit up to 2 Gbps of data over a single 50-foot cable. Autonomous vehicles require a lot of data for all those cameras, sensors and radar units (not to mention the in-car entertainment system) to work – and the data has to move fast. Current in-car cabling tops out at only 100 Mbps, so the Valens solution is significantly faster. Valens is also using standard unshielded cables, which are cheaper and lighter.
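A rough calculation shows what the jump from 100 Mbps to 2 Gbps means for camera data. The sketch assumes a single uncompressed 1080p frame at 24 bits per pixel. That frame format is my assumption for illustration and is not stated in the source.

```python
def transfer_time_ms(payload_bits, link_bps):
    """Milliseconds needed to push a payload over a link, ignoring protocol overhead."""
    return payload_bits / link_bps * 1000.0


FRAME_BITS = 1920 * 1080 * 24           # assumed uncompressed 1080p RGB frame
legacy_ms = transfer_time_ms(FRAME_BITS, 100e6)   # 100 Mbps in-car cabling
valens_ms = transfer_time_ms(FRAME_BITS, 2e9)     # 2 Gbps chipset
print(f"100 Mbps: {legacy_ms:.1f} ms per frame, 2 Gbps: {valens_ms:.1f} ms per frame")
```

Under these assumptions the slower link needs about half a second per frame, far too slow for live video, while the faster link moves the same frame in about 25 ms.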

Tech giant Nvidia has unveiled a “plug and play” operating system that would allow car companies to buy the computer power needed to process the complex task of real world driving — without the need for a driver to touch the steering wheel or the pedals. Nvidia says its invention of “the world’s first autonomous machine processor” is in the final stages of development and will be production ready by the end of the year.

The boss of Nvidia, Jensen Huang, says the most important aspect of autonomous car computer power is not the ability to operate the vehicle but the processing speed needed to double check the millions of lines of data — while detecting every obstacle — and then enabling one computer to make the right decision when the other misdiagnoses danger.

Nvidia’s solution is a super-fast computer chip that duplicates every piece of data (gathered from cameras, GPS, lidar and radar sensors) required to make a decision in an autonomous car. It has “dual execution, runs everything twice without consuming twice the horsepower,” he said. “If a fault is discovered inside your car it will continue to operate incredibly well.”

Unveiling the “server on a chip” after pulling it out of his back pocket, Mr Huang said autonomous car technology “can never fail because lives are at stake”. However, he said the road to driverless cars was “incredibly complex” because the car must “make the right decision running software the world has never known how to write”.
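The idea of running a computation twice and cross-checking the results can be illustrated in software. The sketch below is a generic redundancy pattern, not Nvidia's design: the function names, the frame format, and the choice of braking as the fallback action are all assumptions.

```python
import copy


def redundant_decide(primary, checker, sensor_frame, safe_action="brake"):
    """Run two decision channels on separate copies of the data and compare.

    If the channels disagree, fall back to a conservative action instead of
    trusting either result.
    """
    first = primary(copy.deepcopy(sensor_frame))
    second = checker(copy.deepcopy(sensor_frame))
    return first if first == second else safe_action


def plan(frame):
    """Toy planner: brake when an obstacle is closer than 30 m."""
    return "brake" if frame["obstacle_m"] < 30.0 else "cruise"


def faulty_plan(frame):
    """Simulates a channel that wrongly ignores the obstacle."""
    return "cruise"


print(redundant_decide(plan, plan, {"obstacle_m": 50.0}))         # channels agree
print(redundant_decide(plan, faulty_plan, {"obstacle_m": 12.0}))  # mismatch detected
```

In the second call the faulty channel would have kept cruising, but the disagreement triggers the conservative fallback.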

Nvidia is working on two types of autonomous tech: “level four” for cars with drivers who may need to take control, and “level five” cars dubbed “robot taxis” that don’t require a driver. The tech company says it is also working on systems that will give drivers voice control to open and close windows or change radio stations, but also track eye movement to monitor fatigue.

Nvidia has also developed virtual reality technology to create a simulator to initially test its autonomous car software off the streets. It is able to replicate or create dangerous scenarios to “teach” the car new evasive manoeuvres or to detect danger earlier.

Network infrastructure

Industry players are developing dynamic HD maps, accurate within inches, that would afford the car’s sensors some geographic foresight, allowing it to calculate its precise position relative to fixed landmarks. Yet achieving real-time “truth” throughout the network requires overcoming limitations in data infrastructure. The rate of data collection, processing, transmission, and actuation is limited by cellular bandwidth as well as on-board computing power. Mobileye is attempting to speed things up by compressing new map information into a “Road Segment Data” capsule, which can be pushed between the master map in the Cloud and cars in the field. If nothing else, the system has given us a memorable new term, “Time to Reflect Reality,” which is the metric of lag time between the world as it is and the world as it is known to machines.

Rapid and consistent connectivity between autonomous vehicles and outside sources such as cloud infrastructure ensures signals get to and from the vehicles more quickly. The emergence of 5G wireless technology, which promises high-speed connections and data downloads, is expected to improve connectivity to these vehicles, enabling a wide range of services, from videoconferencing and real-time participation in gaming to health care capabilities such as health monitoring.

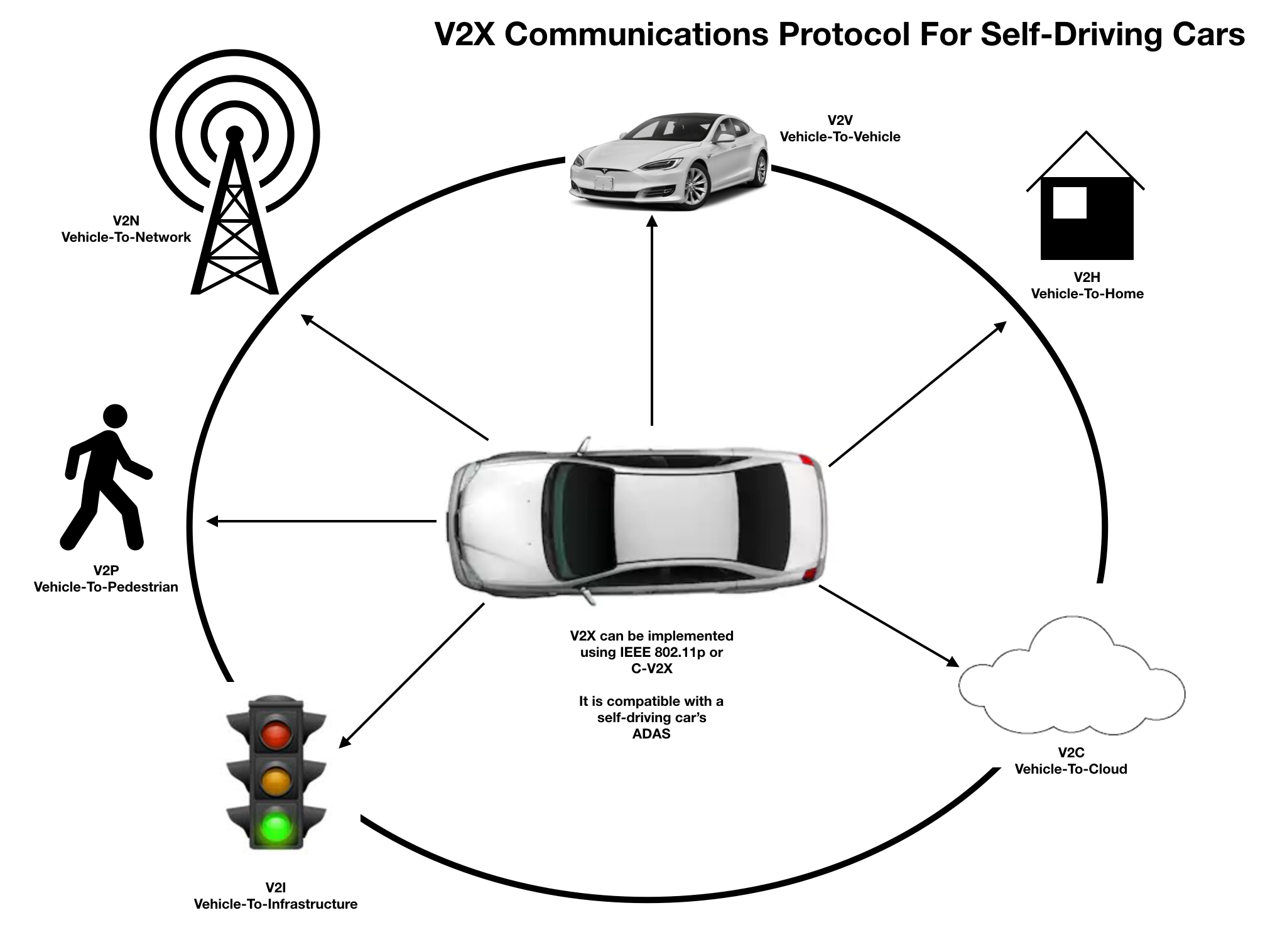

There are several protocols under which autonomous vehicles communicate with their surroundings. The inclusive term is V2X, or vehicle to everything, which includes:

- Vehicle-to-infrastructure communication, which allows for data exchange with the surrounding infrastructure to operate within the bounds of speed limits, traffic lights, and signage. It can also manage fuel economy and prevent collisions.

- Vehicle-to-vehicle communication, which permits safe operations within traffic situations, also working to prevent collisions or even near misses.

Autonomous-vehicle technology resides largely onboard the vehicle itself but requires sufficient network infrastructure, according to Genevieve Bell, distinguished professor of engineering and computer science at the Australian National University and a senior fellow at Intel’s New Technology Group. Also necessary are a road structure and an agreed-on set of rules of the road to guide self-driving vehicles. “The challenge here is the vehicles can agree to the rules, but human beings are really terrible at this,” Bell said during a presentation in San Francisco in October 2018.

Vehicle Communications

As automotive technologies continue to advance, with vehicles becoming increasingly connected and autonomous, cellular vehicle-to-everything (C-V2X) technology is a key enabler for connected cars and the transportation industry of the future. Alex Wong, Hong Kong Director of Solution Sales for leading global information and communications technology (ICT) solutions provider Huawei, explained the latest C-V2X advancements, with enhanced wireless capabilities including extended communication range, improved reliability and transmission performance “enabling vehicles to talk with each other and make our transportation systems safer, faster and more environmental friendly”.

Israeli vehicle chip-maker Autotalks developed “vehicle to everything” communication technology, which can help self-driving and conventional cars avoid collisions and drive through hazardous roads. V2X communication alerts the autonomous vehicle about objects it cannot directly see (non-line-of-sight), which is vital for safety and facilitates better decisions by the robot car.
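The non-line-of-sight benefit can be pictured as a comparison between what the car's own sensors detect and what other road users report. The sketch below flags reported hazards that no onboard detection accounts for. The `HazardReport` fields and the matching radius are hypothetical and do not follow any V2X message standard.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardReport:
    """Hypothetical hazard message received over V2X (position relative to the car)."""
    x_m: float
    y_m: float
    kind: str


def hidden_hazards(local_detections, reports, match_radius_m=2.0):
    """Return reported hazards that none of the onboard detections explains."""
    hidden = []
    for report in reports:
        seen = any(
            math.hypot(report.x_m - dx, report.y_m - dy) <= match_radius_m
            for dx, dy in local_detections
        )
        if not seen:
            hidden.append(report)
    return hidden


# Onboard sensors see one object; V2X reports two.
local = [(12.0, 0.5)]
reports = [
    HazardReport(12.5, 0.0, "stopped_car"),   # matches the onboard detection
    HazardReport(40.0, -15.0, "pedestrian"),  # hidden, e.g. behind a building
]
alerts = hidden_hazards(local, reports)
print([alert.kind for alert in alerts])
```

Only the pedestrian is flagged, because the stopped car is already covered by an onboard detection within the matching radius.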

Human Machine Interaction

CAVs need to be able to understand the limitations of their human driver, and vice versa, says Marieke Martens, a professor in automated vehicles and human interaction at Eindhoven University of Technology in the Netherlands. In other words, the human driver needs to be ready to take control of the car in certain situations, such as dealing with roadworks, while the car also needs to be able to monitor the capacity of the human in the car.

‘We (need) systems that can predict and understand what people can do,’ she said, adding that under certain conditions these systems could decide when it’s better to take control or alert the driver. For example, if the driver is fatigued or not paying attention, she says, then the car ‘should notice and take proper actions’, such as telling the driver to pay attention or explaining that action needs to be taken.

Prof. Martens added that rather than just a screen telling the driver that automated features have been activated, better human-machine interfaces (HMIs) will need to be developed for communication between the driver and the car ‘so that the person really understands what the car can and cannot do, and the car really understands what the person can and cannot do.’
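The monitoring behaviour described above can be sketched as a small decision rule. It assumes a hypothetical driver-monitoring system that produces an attention score between 0 and 1; the score, the 0.6 threshold, and the action names are all illustrative choices rather than anything specified by Prof. Martens.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    ALERT_DRIVER = "alert_driver"
    REQUEST_TAKEOVER = "request_takeover"
    KEEP_CONTROL_AND_ALERT = "keep_control_and_alert"


def decide_action(attention: float, takeover_needed: bool,
                  attention_threshold: float = 0.6) -> Action:
    """Choose how the car should respond to the driver's current state.

    attention: assumed score in [0, 1] from a driver-monitoring system.
    takeover_needed: True when the car is approaching a situation it
    cannot handle alone, such as roadworks.
    """
    driver_ready = attention >= attention_threshold
    if takeover_needed:
        # Hand over only if the driver appears able to take control.
        if driver_ready:
            return Action.REQUEST_TAKEOVER
        return Action.KEEP_CONTROL_AND_ALERT
    # No handover pending: nudge an inattentive or fatigued driver.
    return Action.NONE if driver_ready else Action.ALERT_DRIVER
```

A real system would also need to explain its decision to the driver, which is the interface problem the HMI work is meant to address.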

Protecting Privacy

When we talk about CAVs, we often discuss how they share information with other road users. But, notes Sandra Wachter, an Associate Professor in the law and ethics of AI, data, and robotics at the University of Oxford, UK, that raises the significant issue of data protection. ‘That’s not really the fault of anybody, it’s just the technology needs that type of data,’ she said, adding that we need to take the privacy risks seriously.

That includes sharing location data and other information that could reveal a lot about a person when one car talks to another. ‘It could be things like sexual orientation, ethnicity, health status,’ said Prof. Wachter, noting that ethnicity, for example, can be gleaned from a postcode. ‘Basically anything about your life can be inferred from those types of data.’

Solutions include making sure CAVs comply with existing legal frameworks in other areas, such as the General Data Protection Regulation (GDPR) in Europe, and deleting data when it is no longer needed. But further safeguards might be needed to deal with privacy concerns caused by CAVs. ‘Those things are very important,’ said Prof. Wachter.
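Deleting data when it is no longer needed can be sketched as a simple retention rule. The `LocationRecord` type and the 24-hour retention window below are assumptions chosen for illustration; the GDPR does not prescribe a specific period, and a real system would set one based on its stated purpose.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class LocationRecord:
    """Hypothetical location sample stored by a connected vehicle."""
    recorded_at: datetime
    lat: float
    lon: float


def purge_expired(records, now, retention=timedelta(hours=24)):
    """Keep only records still inside the (assumed) retention window."""
    return [r for r in records if now - r.recorded_at <= retention]


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
records = [
    LocationRecord(now - timedelta(hours=1), 51.752, -1.256),
    LocationRecord(now - timedelta(hours=30), 51.760, -1.260),
]
kept = purge_expired(records, now)  # only the one-hour-old record remains
```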

Rigorous Testing

Much of the self-driving car testing that has happened so far has been in relatively easy environments, says Dr John Danaher from the National University of Ireland, Galway, a lecturer in law who focuses on the implications of new technologies. To prove that these cars can be safer than human-driven ones, we will need to show they can handle more taxing situations.

‘There are some questions about whether they are genuinely safe,’ he said. ‘You need to do more testing to actually ascertain their true risk potential, and you also need to test them in more diverse environments, which is something that hasn’t really been done (to a sufficient degree).

‘They tend to be tested in relatively controlled environments like motorways or highways, which are relatively more predictable and less accident-prone than driving on wet and windy country roads. The jury is still out on whether they are going to be less harmful, but that is certainly the marketing pitch.’

Waymo catalogs the mistakes its cars make on public roads, then recreates the trickiest situations at Castle, its secret “structured testing” facility in California’s Central Valley. The company also has a virtual driving environment, Carcraft, in which engineers can run through thousands of scenarios to generate improvements in their driving software.
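Running many scenario variations in simulation can be illustrated with a toy parameter sweep. This is not how Carcraft works internally, which the article does not describe; it is a minimal sketch that assumes a basic kinematic braking model, a 0.5-second reaction delay, and a 6 m/s² deceleration.

```python
from itertools import product


def stopping_distance_m(speed_mps, reaction_s, decel_mps2):
    """Distance covered during the reaction delay plus braking distance."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


def run_scenarios(speeds_mps, obstacle_distances_m,
                  reaction_s=0.5, decel_mps2=6.0):
    """Try every speed/obstacle-distance pair and collect the failures,
    i.e. the cases where the car cannot stop before the obstacle."""
    failures = []
    for speed, distance in product(speeds_mps, obstacle_distances_m):
        if stopping_distance_m(speed, reaction_s, decel_mps2) > distance:
            failures.append((speed, distance))
    return failures
```

The failing combinations are the ones worth examining more closely, in the same spirit as recreating the trickiest real-world situations at a test facility.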

International Defense Security & Technology Your trusted Source for News, Research and Analysis
