DevOps is a software engineering culture and practice that aims to unify software development (Dev) and software operations (Ops) and to remove the traditional barriers between the two. It rests on three pillars (organizational culture, process, and technology and tools) that help development and IT operations teams work collaboratively to build, test, and release software in a faster, more agile, and more iterative manner than traditional software development processes.

The main characteristic of the DevOps movement is its strong advocacy of automation and monitoring at every step of software construction, from integration, testing, and release through deployment and infrastructure management. DevOps aims at shorter development cycles, increased deployment frequency, and more dependable releases, in close alignment with business objectives.

CI/CD

Continuous Integration (CI) and Continuous Delivery (CD) are key parts of the DevOps pipeline. Together they aim at building, testing, and releasing software with complete automation. The approach helps reduce the cost, time, and risk of delivering changes by allowing for more incremental updates to applications in production.

Continuous integration (CI) is the practice of consolidating all new source code into a shared version control repository, for example one hosted on GitHub, several times a day. Successful CI means new code changes to an app are regularly built, tested, and merged to a shared repository.

It’s a solution to the problem of having too many branches of an app in development at once that might conflict with each other. In modern application development, the goal is to have multiple developers working simultaneously on different features of the same app. However, if an organization is set up to merge all branching source code together on one day (known as “merge day”), the resulting work can be tedious, manual, and time-intensive. Continuous integration (CI) helps developers merge their code changes back to a shared branch, or “trunk,” more frequently—sometimes even daily.

Once a developer’s changes to an application are merged, those changes are validated by automatically building the application and running different levels of automated testing, typically unit and integration tests, to ensure the changes haven’t broken the app. The team writes automated tests for each new feature, improvement, or bug fix. The continuous integration server monitors the main repository and runs the tests automatically for every new commit pushed.
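The validation step described above can be pictured as a small fail-fast pipeline. The following is only a minimal sketch, not how any particular CI server is implemented: the names `run_pipeline` and `on_commit` are hypothetical, and each stage is reduced to a function that returns True when it passes.

```python
from typing import Callable, List, Tuple

# A stage is a (name, check) pair; `check` returns True when the stage passes.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run the stages in order and stop at the first failure (fail fast)."""
    log: List[str] = []
    for name, check in stages:
        passed = check()
        log.append(f"{name}: {'ok' if passed else 'FAILED'}")
        if not passed:
            return False, log
    return True, log


def on_commit(commit_id: str, stages: List[Stage]) -> str:
    """Hypothetical hook that a CI server would call for every pushed commit."""
    ok, _ = run_pipeline(stages)
    return f"{commit_id}: {'merge allowed' if ok else 'merge blocked'}"
```

In a real setup each stage would invoke the project's build tool or test runner; here a stage is any zero-argument callable, so `on_commit("abc123", [("build", lambda: True)])` reports that the merge is allowed.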

Following the automation of builds and unit and integration testing in CI, continuous delivery automates the release of that validated code to a repository. Continuous delivery (CD) is used to deliver (release) software in short cycles, ensuring that the software can be reliably released at any time. Continuous deployment (also abbreviated “CD”) can refer to automatically releasing a developer’s changes from the repository to production, where they are usable by customers. A straightforward and repeatable deployment process is important for continuous delivery.
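The difference between the two meanings of “CD” comes down to who triggers the final release. The sketch below is purely illustrative; `Mode` and `next_step` are hypothetical names, and real pipelines usually express this decision in their pipeline configuration rather than in application code.

```python
from enum import Enum


class Mode(Enum):
    DELIVERY = "continuous delivery"      # release is prepared; a person triggers it
    DEPLOYMENT = "continuous deployment"  # release goes to production automatically


def next_step(tests_passed: bool, mode: Mode, approved: bool = False) -> str:
    """Decide what happens to a change after the CI stages have run."""
    if not tests_passed:
        return "stop: fix the build"
    if mode is Mode.DEPLOYMENT or approved:
        return "deploy to production"
    return "artifact published; awaiting manual approval"
```

With `Mode.DELIVERY` a validated change waits for approval, while with `Mode.DEPLOYMENT` the same change goes straight to production.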

Leveraging DevOps and implementing continuous integration and continuous delivery (CI/CD) allows organizations to see a tremendous improvement in deployment frequency and lead time, all while driving innovation and increasing employee engagement and communication. Applications also become more secure and stable through faster detection of cybersecurity vulnerabilities and flaws and through shorter mean time to repair and mean time to recovery.


Microservices

Microservices architecture is a variant of the service-oriented architecture (SOA) style in which the application is developed as a collection of services. In a microservices architecture, services are fine-grained and the protocols are lightweight. At a very high level, microservices can be conceptualized as a new way to create corporate applications, where applications are broken down into smaller, independent services that are not tied to a specific programming language.

The benefit of decomposing an application into smaller services is that it improves modularity and makes the application easier to understand, develop, and test, as well as more resilient to architecture erosion. It parallelizes development by enabling small autonomous teams to develop, deploy, and scale their respective services independently.

Adopting microservices allows organizations to achieve greater agility and realize lower costs, thanks to the inherent granularity and reusability of what constitutes a microservice. Microservices-based architectures enable continuous delivery and deployment.

Microservices build on the principles of SOA, and they have become a practical reality with the introduction of new technologies, which constitute the three main building blocks of a microservices architecture:

APIs: The adoption and increased capabilities of APIs have created a robust and standardized format for communication between applications, services, and servers. REST (Representational State Transfer) APIs in particular are key to microservices architecture: a RESTful API breaks down a transaction into a series of small modules, each of which addresses a particular underlying part of the transaction. This modularity gives developers a lot of flexibility for developing lightweight APIs, which are more suitable for browser-powered applications.

Scalable Cloud Infrastructures: Public, private and hybrid Cloud infrastructures are all now capable of delivering resources on demand and can effectively scale to deliver services, regardless of loads or associated traffic. That brings elasticity to microservices and in turn makes them more adaptable and efficient.

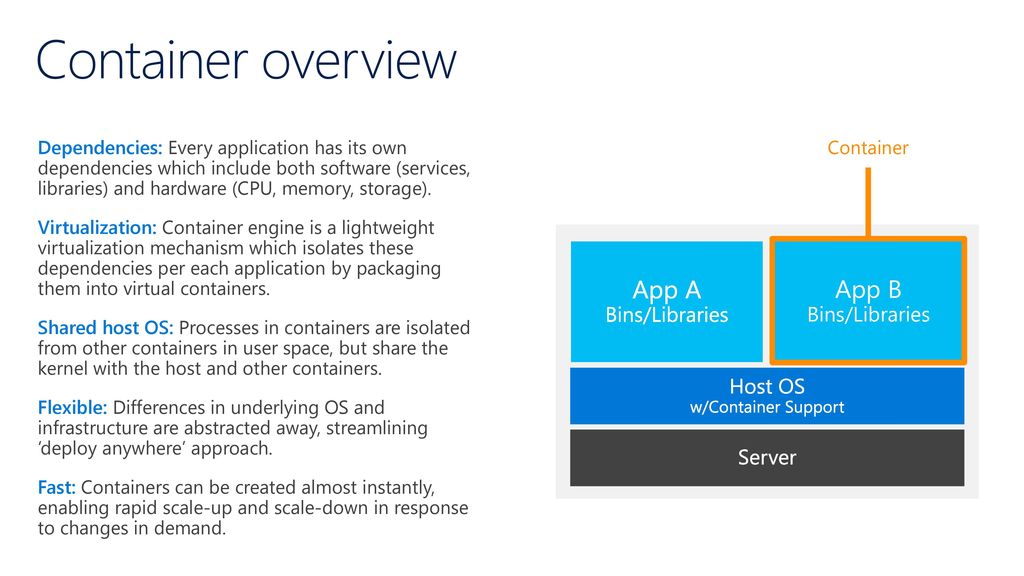

Containers: Software containers have created a standardized frame for all services by abstracting the core OS code from the underlying hardware. The standardization offered by containers eliminates what was once a painful integration process in a heterogeneous infrastructure world.
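To make the API building block concrete, here is a minimal sketch of a single-purpose service exposing a small REST interface, using only the Python standard library. The “orders” resource, its data, and the names `route`, `OrderHandler`, and `serve` are assumptions made for illustration; a production service would normally use a web framework and a real data store.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple

# Hypothetical in-memory data for an illustrative "orders" service.
ORDERS = {"1": {"item": "keyboard", "status": "shipped"}}


def route(method: str, path: str) -> Tuple[int, dict]:
    """Map an HTTP method and path to a status code and a JSON-serializable body."""
    parts = [p for p in path.split("/") if p]
    if method == "GET" and parts == ["orders"]:
        return 200, {"orders": sorted(ORDERS)}
    if method == "GET" and len(parts) == 2 and parts[0] == "orders":
        order = ORDERS.get(parts[1])
        return (200, order) if order else (404, {"error": "not found"})
    # Anything else is treated as unknown in this sketch.
    return 404, {"error": "not found"}


class OrderHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = route("GET", self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Start the service; this call blocks until the process is interrupted."""
    HTTPServer(("0.0.0.0", port), OrderHandler).serve_forever()
```

Calling `serve()` starts the service, after which `GET /orders/1` returns the order as JSON. Keeping the routing logic in a plain function separate from the HTTP handler is a design choice that makes the service easy to test without opening a socket.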

Containers

In traditional infrastructure, applications were designed to use all the physical resources of a machine, which required one server per application. That wasted resources and did not scale, and when multiple applications did run on a single server, they created resource conflicts and bottlenecks for one another.

Then came virtual machines (VMs), which are servers abstracted from the actual computer hardware, enabling you to run multiple VMs on one physical server or a single VM that spans more than one physical server. Each VM runs its own OS instance, and you can isolate each application in its own VM, reducing the chance that applications running on the same underlying physical hardware will impact each other.

Containers take this abstraction to a higher level—specifically, in addition to sharing the underlying virtualized hardware, they share an underlying, virtualized OS kernel as well. A container is an executable unit of software in which application code is packaged—together with libraries and dependencies—in common ways so that it can be run anywhere on the desktop, traditional IT, or the cloud. Containers take advantage of a form of operating system (OS) virtualization that lets multiple applications share the OS by isolating processes and controlling the amount of CPU, memory, and disk those processes can access.

Containers offer the same isolation, scalability, and disposability of VMs, but because they don’t carry the payload of their own OS instance, they’re lighter weight (that is, they take up less space) than VMs. They’re more resource-efficient—they let you run more applications on fewer machines (virtual and physical), with fewer OS instances. Containers are more easily portable across desktop, data center, and cloud environments. And they’re an excellent fit for Agile and DevOps development practices.

As containers proliferated—today, an organization might have hundreds or thousands of them—operations teams needed to schedule and automate container deployment, networking, scalability, and availability. And so, the container orchestration market was born. Kubernetes quickly became the most widely adopted container orchestration option (in fact, at one point, it was the fastest-growing project in the history of open source software).

Kubernetes

Kubernetes, also known as ‘k8s’ or ‘kube’, is an open source container orchestration platform that automates deployment, management and scaling of applications. Kubernetes was first developed by engineers at Google before being open sourced in 2014. It is a descendant of ‘Borg,’ a container orchestration platform used internally at Google.

Kubernetes schedules and automates a number of container-related tasks, such as:

Deployment: Deploy a specified number of containers to a specified host and keep them running in the desired state.

Rollouts: A rollout is a change to a deployment. Kubernetes lets you initiate, pause, resume, or roll back rollouts.

Service discovery: Kubernetes can automatically expose a container to the internet or to other containers using a DNS name or IP address.

Storage provisioning: Set Kubernetes to mount persistent local or cloud storage for your containers as needed.

Load balancing and scaling: When traffic to a container spikes, Kubernetes can employ load balancing and scaling to distribute it across the network to maintain stability.

Self-healing for high availability: When a container fails, Kubernetes can restart or replace it automatically; it can also take down containers that don’t meet your health-check requirements.
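Most of the tasks listed above are requested declaratively: you describe the desired state and Kubernetes works toward it. As a rough sketch, the following builds a Deployment manifest as a Python dictionary and prints it as JSON. The field names follow the Kubernetes `apps/v1` Deployment schema; the helper `deployment_manifest`, the application name, and the image reference are placeholders chosen for illustration.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Describe the desired state: `replicas` copies of one container image."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # how many pods should be running
            "selector": {"matchLabels": labels},  # which pods this Deployment owns
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


# Placeholder name and image; substitute your own.
manifest = deployment_manifest("web", "example.com/web:1.0", replicas=3, port=8080)
print(json.dumps(manifest, indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied to a cluster, assuming the image actually exists in a registry the cluster can reach.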

Kubernetes also distills the most successful architectural and API patterns of prior systems and couples them with load balancing, authorization policies, and other features needed to run and manage applications at scale. This in turn provides the groundwork for cluster-wide abstractions that allow true portability across clouds.

Today, Kubernetes and the broader container ecosystem are maturing into a general-purpose computing platform and ecosystem that rivals—if not surpasses—virtual machines (VMs) as the basic building blocks of modern cloud infrastructure and applications. This ecosystem enables organizations to deliver a high-productivity Platform-as-a-Service (PaaS) that addresses multiple infrastructure- and operations-related tasks and issues surrounding cloud native development so that development teams can focus solely on coding and innovation.

Every enterprise, regardless of its core business, is embracing more digital technology. The ability to rapidly adapt is fundamental to continued growth and competitiveness. Cloud-native technologies, and especially Kubernetes, arose to meet this need, providing the automation and observability necessary to manage applications at scale and with high velocity. Organizations previously constrained to quarterly deployments of critical applications can now deploy safely multiple times a day.

Kubernetes’s declarative, API-driven infrastructure empowers teams to operate independently, and enables them to focus on their business objectives. An inevitable cultural shift in the workplace has come from enabling greater autonomy and productivity and reducing the toil of development teams. New applications such as machine learning, edge computing, and the Internet of Things are finding their way into the cloud native ecosystem via projects like Kubeflow. Kubernetes is almost certain to be at the heart of their success.

Kubernetes is ideal for running a CI/CD pipeline in a DevOps environment

Kubernetes fits well into a CI/CD workflow for handling DevOps in an organisation. It allows the entire process, from prototyping to the final release, to be completed in rapid succession, while maintaining the scalability and reliability of the production environment. In this way, Kubernetes can effectively increase the agility of the DevOps process.

Developers chose (and continue to choose) Kubernetes for its breadth of functionality, its vast and growing ecosystem of open source supporting tools, and its support and portability across the leading cloud providers (some of whom now offer fully managed Kubernetes services).

Kubernetes was inspired by Borg, Google’s internal platform for scheduling and managing the hundreds of millions, and eventually billions, of containers that implement Google’s services. Kubernetes was built mainly to address the issues that developers face in an Agile environment and to automate those processes into a seamless workflow.

Flexibility of pods

In a Kubernetes environment, the pod is the smallest deployable unit. A pod is often assumed to run a single container, but multiple containers can run within one pod, which can result in better utilization of resources. This flexibility means you can run containers that provide additional features or services alongside the main app. Because such helper containers share the pod’s resources, features such as load balancing and routing can be separated completely from the microservices and the app functionality.
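A pod that runs a helper container next to the main app (often called a sidecar) can be sketched as follows. The field names follow the Kubernetes `v1` Pod schema; the helper `pod_with_sidecar`, the container names, and the image references are placeholders for illustration.

```python
def pod_with_sidecar(name: str, app_image: str, sidecar_image: str) -> dict:
    """Build a Pod manifest with a main container and one helper container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                # Main application container.
                {"name": "app", "image": app_image},
                # Helper container, e.g. a proxy that handles routing for the app.
                {"name": "proxy", "image": sidecar_image},
            ]
        },
    }


# Placeholder images; substitute your own.
pod = pod_with_sidecar("web", "example.com/web:1.0", "example.com/proxy:1.0")
```

Both containers are scheduled together on the same node and share the pod's network namespace, which is what lets the helper take over concerns such as routing without changes to the app container.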

Reliability

Another core characteristic that makes Kubernetes suited to CI/CD processes is its reliability. The Kubernetes platform has a series of health-check features that eliminate many headaches associated with deploying a new iteration. In the past, a newly deployed iteration was often faulty and crashed frequently; with Kubernetes, the built-in self-healing feature keeps the overall system running.
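The idea behind self-healing can be illustrated with a toy reconciliation pass: compare what is running with what is desired, discard pods that fail their health check, and start replacements. This is a simplified model for intuition only, not Kubernetes's actual controller code; `Pod`, `reconcile`, and the `web-N` naming scheme are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pod:
    name: str
    healthy: bool = True


def reconcile(pods: List[Pod], desired: int, next_id: int) -> List[Pod]:
    """Return the pod list after one reconciliation pass."""
    # Drop pods that fail their health check.
    survivors = [p for p in pods if p.healthy]
    # Scale down if more healthy pods exist than desired.
    survivors = survivors[:desired]
    # Start replacements until the desired count is reached.
    while len(survivors) < desired:
        survivors.append(Pod(name=f"web-{next_id}"))
        next_id += 1
    return survivors
```

For example, if `web-2` out of three pods is unhealthy, `reconcile(pods, desired=3, next_id=4)` keeps `web-1` and `web-3` and adds a fresh `web-4`.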

Updates & Rollbacks

The addition of newer pods in a Kubernetes environment suits the CI/CD workflow well. When a new version is rolled out, the existing pods are not all replaced at once; instead, they are updated incrementally through the rolling update feature of the Kubernetes Deployment object, so that end users are not affected. A Service then directs traffic to the newly added pods. If the update fails to work properly, rolling back to a previous revision is straightforward: the Deployment keeps a revision history, and the previous version of the configuration is also stored in the version control system.
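The update-then-rollback behavior can be modeled in a few lines. The sketch below is a toy simulation, not the Deployment controller's real algorithm: each pod is represented only by its version string, and `rolling_update` and `is_healthy` are hypothetical names.

```python
from typing import Callable, List


def rolling_update(
    pods: List[str], new_version: str, is_healthy: Callable[[str], bool]
) -> List[str]:
    """Replace pods one at a time; restore the old set if a new pod is unhealthy."""
    previous = list(pods)  # kept so the update can be rolled back
    current = list(pods)
    for i in range(len(current)):
        current[i] = new_version  # the remaining old pods keep serving traffic
        if not is_healthy(new_version):
            return previous       # roll back to the previous revision
    return current
```

On a real cluster the equivalent rollback is typically triggered with `kubectl rollout undo deployment/<name>`, which returns the Deployment to its previous revision.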


International Defense Security & Technology Your trusted Source for News, Research and Analysis
