Introduction:

Artificial intelligence (AI) continues to evolve at a breathtaking pace, reshaping industries and redefining what’s possible in technology. However, as AI models grow in complexity, the demand for faster and more energy-efficient computing systems has reached unprecedented levels. Enter photonic chips, a revolutionary approach to computing that uses light instead of electricity to process information. These chips promise to deliver AI at lightspeed, dramatically enhancing both efficiency and computational power.

In this article, we explore how photonic chips are improving efficiency, accelerating computation, and opening new frontiers in AI applications.

The Need for a New Era in AI Computing

The rapid evolution of AI applications, from natural language processing to image recognition and autonomous systems, has placed unprecedented demands on computational power. Traditional electronic chips, such as graphics processing units (GPUs) and central processing units (CPUs), have been the backbone of AI’s growth, enabling breakthroughs in machine learning and neural networks. However, these components face inherent limitations that hinder their ability to sustain the exponential growth of AI.

Energy consumption is a major bottleneck. Training state-of-the-art AI models like GPT-4 requires immense power, with costs escalating as models grow larger and more complex. This not only raises operational expenses but also poses significant sustainability challenges. Furthermore, the energy-intensive nature of traditional hardware leads to excessive heat generation, necessitating sophisticated and costly cooling systems that reduce overall system efficiency. In addition, the architecture of electronic chips struggles to meet the demands of parallel computations required for real-time AI applications, creating performance bottlenecks that impede progress in advanced tasks.

These challenges have sparked a search for alternative computing paradigms, paving the way for innovations like photonic chips. By leveraging the unique properties of light for data processing, photonic technologies promise to revolutionize AI by delivering faster, more energy-efficient solutions. This shift toward photonic-based hardware marks a pivotal step in overcoming the limitations of traditional electronics, setting the stage for a new era in AI computing that can support the development of even more ambitious models and applications.

Photonic chips, or optical chips, represent a paradigm shift in computing, utilizing photons—particles of light—to process and transmit information. Unlike traditional electronic chips that rely on the movement of electrons, photonic chips exploit the unique properties of light to achieve extraordinary speed, efficiency, and parallel processing capabilities. This breakthrough is especially relevant for addressing the limitations of conventional chips, including energy inefficiency, latency, and the inability to scale effectively for increasingly complex AI models.

Silicon Photonics: Merging Optics with Electronics

Parallel Processing and Neural Networks:

One of the key advantages of photonic chips in AI lies in their ability to facilitate parallel processing. Neural networks, the backbone of many AI algorithms, often involve complex calculations and data manipulations. Photonic chips can perform these operations simultaneously, enabling a dramatic acceleration in processing speed.

Photons love working together, traveling in multiple paths simultaneously. This opens the door to massive parallel processing, letting AI algorithms tackle complex problems in a fraction of the time, like a team of synchronized swimmers gliding through the data pool.

Photonic chips can perform multiple computations simultaneously by manipulating light waves through optical components such as waveguides and modulators. Light waves obey the superposition principle, which allows for optical multiplexing: a waveguide can carry many signals on different wavelengths or in different time slots simultaneously without taking up additional space. This combination enables an enormous amount of information, easily more than one terabyte per second, to flow through a waveguide only half a micron wide.

This parallelism is a natural fit for the parallel nature of neural network computations.
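As a rough illustration of the multiplexing arithmetic, the sketch below multiplies a number of wavelength channels by a per-channel data rate to get the aggregate throughput of a single waveguide. The channel count and line rate are illustrative assumptions, not figures from any specific device.

```python
def aggregate_throughput_bytes_per_s(num_wavelengths: int,
                                     gbit_per_s_per_channel: float) -> float:
    """Total throughput of one waveguide carrying independent wavelength channels."""
    bits_per_s = num_wavelengths * gbit_per_s_per_channel * 1e9
    return bits_per_s / 8  # convert bits to bytes


# Hypothetical example: 80 wavelength channels at 100 Gbit/s each.
total = aggregate_throughput_bytes_per_s(80, 100.0)
print(f"{total / 1e12:.1f} TB/s")  # prints "1.0 TB/s"
```

Under these assumed values, a single waveguide reaches the terabyte-per-second scale mentioned above without any extra physical width, because the channels share the same path.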

Reducing Latency:

In AI applications, especially those requiring real-time responses, latency is a critical factor. Photonic chips excel in reducing latency due to their ability to transmit information at the speed of light. Tasks that traditionally took considerable time for computation can now be executed in fractions of a second, opening new possibilities for applications such as autonomous vehicles, healthcare diagnostics, and more.
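To put the latency claim in numbers, the sketch below estimates how long light takes to cross a chip-scale path. It assumes a group index of about 4, a typical ballpark for silicon waveguides, and a 1 cm path. Both values are illustrative assumptions rather than measurements from any specific device, and the estimate ignores the time spent converting signals between electrical and optical form.

```python
C_VACUUM_M_PER_S = 299_792_458  # speed of light in vacuum, metres per second


def propagation_delay_s(path_length_m: float, group_index: float) -> float:
    """Time for an optical signal to travel a path inside a waveguide.

    Light in a waveguide travels at c / group_index, so it is slower
    than light in vacuum by that factor.
    """
    return path_length_m * group_index / C_VACUUM_M_PER_S


# Assumed values: 1 cm on-chip path, group index of 4.
delay = propagation_delay_s(0.01, 4.0)
print(f"{delay * 1e12:.0f} ps")  # prints "133 ps"
```

Even with the slowdown inside the waveguide, the propagation delay is on the order of a hundred picoseconds, which is why the optical path itself is rarely the limiting factor for end-to-end latency.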

Energy Efficiency and Sustainability:

As the demand for AI continues to grow, so does the need for sustainable and energy-efficient computing solutions. Photonic chips offer a compelling advantage in this regard. The use of light-based signals reduces energy consumption compared to traditional electronic chips.

They also boast unmatched energy efficiency, thanks to the inherently low heat generation of optical signals. This translates to greener AI solutions, consuming a fraction of the power traditional systems guzzle. This not only makes AI systems more sustainable but also aligns with global efforts to develop green technologies.

AI at Lightspeed: Transforming Efficiency and Speed

The integration of photonic chips into AI systems is unlocking a host of exciting opportunities across a variety of industries. One of the most significant advantages of photonic chips is their ability to accelerate deep learning algorithms, making them ideal for applications that require real-time analysis and decision-making. In fields like image recognition, natural language processing, and autonomous vehicles, photonic chips can process vast amounts of data with remarkable speed and efficiency. This capability allows AI systems to deliver faster, more accurate responses, enhancing performance in high-demand environments.

AI training involves processing immense datasets and performing complex calculations, tasks that strain traditional computing systems. With their capacity for parallel computation, photonic chips could shorten training times for large-scale models like GPT and DALL·E. In deep learning inference, photonic chips enable real-time processing for tasks like image recognition and speech analysis, enhancing user experiences and operational capabilities.

Additionally, their energy efficiency, reported at up to roughly 160 tera-operations per second per watt (TOPS/W), far surpasses that of traditional chips. This leap in efficiency can significantly lower the energy costs of training and deploying AI systems, making large-scale AI projects more sustainable and environmentally friendly.

In real-time scenarios, such as autonomous vehicles, robotics, and natural language processing, the speed of optical processing offered by photonic chips supports near-instantaneous decision-making, enhancing the responsiveness and intelligence of AI systems operating in dynamic and complex settings.

Financial markets also benefit significantly from this technology, as photonic chips empower high-frequency trading systems to execute decisions in milliseconds, offering a decisive competitive advantage. Similarly, data centers leverage photonic chips to meet the escalating demands of cloud computing, ensuring faster data processing while dramatically reducing energy consumption.

The integration of photonic chips extends to edge AI, where on-device processing facilitates real-time decision-making without relying on cloud infrastructure. This innovation is critical for applications in smart cities, IoT systems, and autonomous devices, where immediate responses are paramount. As photonic chips gain traction, their ability to revolutionize industries by combining efficiency, speed, and adaptability underscores their potential to reshape the technological landscape and drive the next wave of AI advancements.

This combination of speed, efficiency, and scalability positions photonic chips as a cornerstone for advancing AI applications, including edge computing, high-frequency trading, and cloud data centers. With their transformative impact, photonic chips are paving the way for smarter, faster, and more energy-conscious AI systems.

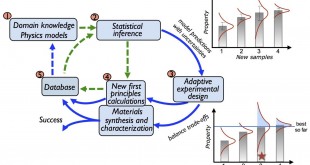

Evolution towards Photonic Neural Networks

In the landscape of AI development hardware, including GPUs, TPUs, FPGAs, and neuromorphic computers, photonic chips emerge as a transformative force. Neuromorphic computing, inspired by the human brain’s structure and function, seeks to create efficient and low-power AI systems.

Neuromorphic photonic processors are photonic chips specifically designed to mimic the way the human brain works, which allows for more efficient and effective AI processing. A photonics-based neuromorphic computer would encode information in spikes of light intensity, transmitted between so-called optical neurons.
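The idea of encoding information in spikes can be illustrated with a leaky integrate-and-fire neuron, a standard software abstraction of spiking behaviour. This is a minimal sketch and not a model of any optical device: the function name, leak factor, and threshold are hypothetical choices made for illustration.

```python
def simulate_lif(inputs, leak=0.9, threshold=1.0):
    """Return a list of 0/1 spikes for a sequence of input intensities.

    The neuron accumulates input, loses a fraction of its state each
    step (the leak), and emits a spike when the state crosses the
    threshold, after which the state resets to zero.
    """
    state = 0.0
    spikes = []
    for x in inputs:
        state = leak * state + x  # integrate the input with leak
        if state >= threshold:
            spikes.append(1)
            state = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


spikes = simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0, 1.2])
print(spikes)  # prints [0, 0, 1, 0, 0, 1]
```

The output is a train of discrete events rather than a continuous value, which is the kind of signal an optical neuron would transmit as pulses of light intensity.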

For a deeper understanding of photonic AI technology and its applications, see: Photonic Computing and AI: Accelerating Intelligence through Light

Princeton University researchers are engineering dynamical lasers, operating at speeds approximately 100 million times faster than biological neurons, with excitability akin to neural spikes. Companies like Optalysys, Lightmatter, and Lightelligence are leveraging optical computing for AI co-processors, photonic chip-based technologies, and matrix multiplication using light, attracting significant investments. Integrated silicon photonics, exemplified by Queen’s University’s research, showcases a neuromorphic computing chip with optical neurons, promising advancements in high-performance computing, image processing, and complex problem-solving.

In 2021, Swinburne University achieved a milestone in neuromorphic processing with the world’s fastest optical neuromorphic processor. Operating at speeds exceeding 10 trillion operations per second, it utilized optical micro-combs for ultra-large scale data processing, showcasing capabilities in facial recognition. MIT’s nanophotonic processor enhances artificial neural network performance by using light beams for deep learning tasks, demonstrating applications in vowel recognition and high-speed analog signal processing. Lightspeed Venture Partners invests in AI startups with a focus on photonic chips, supporting companies like Lightelligence and Snorkel AI. Boston-based startup Lightmatter develops photonic chips, such as Envise, a dedicated photonic AI accelerator for matrix multiplications, demonstrating applications in various industries.

Envise’s potential applications range from autonomous driving and predictive maintenance in manufacturing to robotics, e-commerce, healthcare, signal processing, language translation, and text-to-speech development. Despite its innovation, photonic computing faces challenges, including the analog nature of calculations leading to potential inaccuracies and system noise. A breakthrough from Princeton University involves an integrated silicon photonic neuromorphic chip utilizing electro-optic modulators as photonic neurons. The “broadcast-and-weight” architecture proposes a silicon-compatible photonic neural networking approach with potential hardware acceleration factors.
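The effect of analog noise on accuracy can be sketched with a weighted sum, the basic operation of a neural network layer. The example below perturbs each product with a small random relative error and compares the result with the exact value. The 2% noise level, the weights, and the inputs are illustrative assumptions, not measurements from any photonic system.

```python
import random


def weighted_sum(weights, inputs):
    """Exact weighted sum, as a digital processor would compute it."""
    return sum(w * x for w, x in zip(weights, inputs))


def noisy_weighted_sum(weights, inputs, relative_noise, rng):
    """Weighted sum where each product carries Gaussian relative error."""
    return sum(
        w * x * (1.0 + rng.gauss(0.0, relative_noise))
        for w, x in zip(weights, inputs)
    )


rng = random.Random(0)  # fixed seed so runs are repeatable
weights = [0.2, -0.5, 0.8, 0.1]
inputs = [1.0, 0.5, 0.25, 0.75]

exact = weighted_sum(weights, inputs)
trials = [noisy_weighted_sum(weights, inputs, 0.02, rng) for _ in range(1000)]
mean_abs_error = sum(abs(v - exact) for v in trials) / len(trials)

print(f"exact={exact:.3f}, mean |error|={mean_abs_error:.4f}")
```

Each individual result differs slightly from the exact answer, which is why analog photonic designs need to budget for noise in a way that digital electronics does not.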

Researchers from Oxford, Münster, and Exeter universities advance photonic computing by combining phase-change materials (PCMs) with integrated photonic circuits, creating synapses operating a thousand times faster than the human brain. Lumai, an Oxford University spin-out, receives £1.1 million from Innovate UK to develop all-optical neural networks, offering speed, efficiency, and scalability advantages over traditional transistor-based digital electronics. Taiwan Semiconductor Manufacturing Co. (TSMC) invests in silicon photonics to enhance AI performance, focusing on energy efficiency and computing power for applications like ChatGPT. This reflects a broader industry trend, with major players like Intel, Cisco, IBM, Nvidia, and Huawei exploring silicon photonics for diverse applications, from data centers to autonomous vehicles. TSMC’s efforts include developing an integrated silicon photonics system to address the computational demands of large language models, exemplified by ChatGPT and Bard.

Photonic Accelerators: Beyond Blazing Speed – Latest Breakthroughs Fueling the AI Engine

Revolutionizing AI Computing with Photonic Chips

Researchers at the University of Pennsylvania have made a transformative leap in optical computing by developing a silicon photonic chip capable of performing matrix computations at the speed of light. This innovation has the potential to dramatically accelerate AI training processes while significantly enhancing security and energy efficiency, making it a compelling alternative to traditional electronic processors, which are often power-intensive and vulnerable to hacking.

The new photonic chip leverages the unique properties of light to execute complex calculations. By precisely manipulating the height variations on a silicon wafer, the researchers engineered a chip that scatters light in specific patterns to encode and process data. Unlike conventional electronics, where matrix multiplication operations are performed sequentially, the photonic approach enables parallel processing, allowing multiple operations to be executed simultaneously. This parallelism not only speeds up computations but also eliminates the need for intermediate data storage, thereby reducing potential security risks.

The development process was further streamlined by utilizing 2D simulations, which simplified the design and enabled the creation of larger-scale chips. While the current prototype handles matrices up to 3×3, the team is actively working on scaling the technology to support larger matrices and incorporating reconfigurability for enhanced flexibility. This breakthrough positions optical computing as a viable path forward in the quest for faster, more secure, and energy-efficient AI systems. The potential applications of this technology are vast, spanning areas such as image recognition, natural language processing, and autonomous vehicles, where rapid and secure data processing is paramount.
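For reference, the operation at stake is a matrix-vector product. The sketch below computes a 3×3 example in plain Python, with arbitrary illustrative values. It shows the mathematics only and does not model how the chip scatters light.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector.

    Each output element is an independent dot product, so all rows
    could in principle be evaluated at the same time.
    """
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# Arbitrary 3x3 example values.
matrix = [
    [1, 0, 2],
    [0, 3, 1],
    [4, 1, 0],
]
vector = [1, 2, 3]

result = matvec(matrix, vector)
print(result)  # prints [7, 9, 6]
```

Because no output element depends on another, the rows can be computed concurrently, which is the property a parallel optical implementation exploits.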

Taichi: A Modular Photonic Chiplet

One of the most groundbreaking advancements in photonic computing is the development of Taichi, a modular photonic chiplet created by a research team in China. This chip has made significant strides in the field, achieving a network scale of 13.96 million artificial neurons—setting a new record for photonic computing. What truly sets Taichi apart is its exceptional energy efficiency, delivering 160.82 TOPS/W, far exceeding the capabilities of traditional electronic chips, which typically provide less than 10 TOPS/W. This remarkable energy efficiency, combined with its ability to perform complex AI tasks such as image classification and AI-generated content creation, highlights Taichi’s potential for real-world applications.
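The efficiency figures above can be converted into energy per operation, since 1 TOPS/W equals 10^12 operations per joule. The sketch below uses the 160.82 TOPS/W figure quoted for Taichi and the 10 TOPS/W upper bound quoted for electronic chips. The function name is a hypothetical helper for illustration.

```python
def energy_per_op_femtojoules(tops_per_watt: float) -> float:
    """Convert an efficiency in TOPS/W into energy per operation in fJ."""
    # 1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule
    joules_per_op = 1.0 / (tops_per_watt * 1e12)
    return joules_per_op * 1e15  # joules to femtojoules


taichi = energy_per_op_femtojoules(160.82)
electronic = energy_per_op_femtojoules(10.0)

print(f"Taichi: {taichi:.2f} fJ/op")
print(f"Electronic (at 10 TOPS/W): {electronic:.0f} fJ/op")
print(f"Ratio: {electronic / taichi:.1f}x")
```

On these figures, each operation costs about 6 femtojoules on Taichi versus about 100 femtojoules at 10 TOPS/W, a gap of roughly 16 times.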

Taichi’s modular design offers another key advantage: scalability. This feature makes the chip a prime candidate for future AI systems, including the ambitious goal of achieving artificial general intelligence (AGI). By leveraging its modular structure, Taichi can expand to meet the growing computational demands of next-generation AI, making it a strong contender in the race to develop more powerful, energy-efficient AI systems.

Challenges and Future Outlook:

While the promise of AI at lightspeed powered by photonic chips is vast, significant challenges remain in fully realizing this potential. One of the main obstacles is the integration of photonics with existing electronic systems. To harness the power of photonic chips, there must be seamless synchronization between hardware and software components, a challenge that requires novel design paradigms. Ensuring compatibility between photonics and electronics, while minimizing latency and maintaining signal integrity, adds another layer of complexity. Additionally, transitioning to optical accelerators must be done without compromising the accessibility and affordability of current integrated electronic systems, which are crucial for mass adoption.

The manufacturing of photonic chips presents its own set of difficulties, as it requires specialized materials and advanced fabrication techniques that are both costly and time-intensive. Scaling these chips for widespread use in data centers and consumer devices is also an ongoing challenge, as it demands significant advancements in both scalability and cost-efficiency. Despite these hurdles, continued research and investment in photonics technologies are paving the way for solutions to these problems. As the field evolves, the integration of photonic chips into AI systems is expected to accelerate, opening the door to a transformative future in AI computing. With the development of new materials, manufacturing processes, and innovative design strategies, the dream of lightspeed AI may soon become a reality, revolutionizing industries and pushing the boundaries of what AI can achieve.

Conclusion:

As we stand on the brink of a new era in AI, the marriage of artificial intelligence with photonic chips holds the promise of unprecedented efficiency and speed. By leveraging the speed and efficiency of light, these chips have the potential to supercharge AI applications, enabling breakthroughs in performance, energy efficiency, and scalability.

The ability to process information at lightspeed not only enhances the performance of AI applications but also opens doors to innovations that were once deemed impractical. With ongoing research and advancements, we can anticipate a future where AI at lightspeed becomes the norm, transforming industries and reshaping the possibilities of what AI can achieve. As the world embraces AI at lightspeed, we can look forward to a future where machines truly match and even surpass the capabilities of the human brain, driving innovation and transforming industries in ways we have yet to imagine.

References and Resources also include:

https://www.sciencedaily.com/releases/2021/01/210107112418.htm

International Defense Security & Technology Your trusted Source for News, Research and Analysis
