In the realm of electronic embedded systems, where performance, efficiency, and reliability are paramount, the concept of Hardware-Software Co-Design (HSCD) has emerged as a powerful methodology for achieving optimal system-level performance. By seamlessly integrating hardware and software components at the design stage, HSCD enables developers to harness the full potential of both domains, resulting in highly efficient and versatile embedded systems.

HSCD is driving innovation across a multitude of industries, from automotive and aerospace to telecommunications and consumer electronics. In this article, we explore the principles, methodologies, and applications of HSCD and its impact on electronic system design.

Embedded Design

Embedded system design is a structured process that begins with a careful definition of product requirements and ends with the delivery of a fully functional solution that meets them. At the outset, the requirements and product specifications are documented, outlining the essential features and functionality the product must provide. This phase often involves input from various stakeholders, including experts from marketing, sales, and engineering, who have insight into customer needs and market dynamics. Capturing these requirements accurately sets the project up for success, minimizing the likelihood of later modifications and helping ensure a viable market for the product. Successful products, after all, are those that address genuine needs, deliver tangible benefits, and are easy to use.

Design Goals

When crafting embedded systems, designers contend with a myriad of constraints and design objectives. These encompass performance metrics such as speed and adherence to deadlines, as well as considerations regarding functionality and user interface. Timing intricacies, size, weight, power consumption, manufacturing costs, reliability, and overall cost also factor prominently into the design equation. Navigating these parameters requires a delicate balancing act to optimize system performance within the constraints imposed by the final product’s size, weight, and power (SWaP) requirements.

System Architecture

System architecture serves as the blueprint delineating the fundamental blocks and operations within a system, encompassing interfaces, bus structures, hardware functionality, and software operations.

System designers leverage simulation tools, software models, and spreadsheets to craft an architecture aligned with the system’s requisites. Addressing queries like packet processing capacity or memory bandwidth demands, system architects sculpt an architecture tailored to specific performance criteria.
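
A question such as "how much memory bandwidth does this packet rate demand?" can often be answered with a spreadsheet-style estimate before any hardware exists. The following is a minimal sketch of such an estimate; the function names, the factor of two accesses per byte (one buffer write plus one read), the 70% usable-bandwidth headroom, and all numbers are assumptions chosen for illustration.

```python
def required_memory_bandwidth(packets_per_second, bytes_per_packet,
                              accesses_per_byte=2.0):
    """Return the sustained memory bandwidth (bytes/s) a packet stream needs.

    accesses_per_byte is an assumed factor: each byte is written into a
    buffer once and read back once, giving 2.0 by default.
    """
    return packets_per_second * bytes_per_packet * accesses_per_byte


def fits(required, available_peak, headroom=0.7):
    """True if the requirement stays within an assumed usable share of peak."""
    return required <= available_peak * headroom


# Hypothetical workload: 1 million packets per second, 512 bytes each.
need = required_memory_bandwidth(1_000_000, 512)
print(need / 1e9, "GB/s needed")
print("fits a memory with 3.2 GB/s peak:", fits(need, 3.2e9))
```

An architect would repeat this kind of calculation for each candidate memory or bus before committing to an architecture.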

Hardware design may rely on microprocessors, field-programmable gate arrays (FPGAs), or custom logic. Microprocessor-based design emphasizes software-centric embedded systems, offering versatility across diverse functions with streamlined product development. Microcontrollers, with fixed instruction sets, facilitate task execution through assembly language or embedded C.

Alternatively, hardware-based embedded design harnesses FPGAs: integrated circuits containing up to millions of logic gates that can be electrically configured in the field (hence "field programmable") to perform designated tasks.

Although FPGAs enable custom computing device creation at an architectural level, they differ from microcontrollers in how they compute: like Application Specific Integrated Circuits (ASICs), they implement functions directly in logic rather than executing a fixed instruction set.

FPGAs are programmed in a hardware description language such as Verilog or VHDL. Just as C or assembly code is converted to machine code for execution on a CPU, HDL code is synthesized into a configuration of digital logic blocks that is loaded onto the FPGA. This lets designers build hardware from the ground up, creating specialized computing systems tailored to a specific application. Even a microprocessor or microcontroller can be implemented on an FPGA, provided it has enough logic blocks to support the design.

Architecting Embedded Systems

An embedded system comprises three essential components: embedded hardware, embedded software programs, and in many cases, a real-time operating system (RTOS) that oversees the utility software. The RTOS ensures precise scheduling and latency management, governing the execution of application software according to predetermined plans. While not always present in smaller embedded devices, an RTOS plays a pivotal role in larger systems, enforcing operational rules and enhancing system functionality.

Leveraging powerful on-chip features such as data and instruction caches, programmable bus interfaces, and higher clock frequencies significantly boosts performance and streamlines system design. These hardware advancements enable the integration of RTOS, further enhancing system performance and complexity. Embedded hardware typically centers around microprocessors and microcontrollers, encompassing memory, bus interfaces, input/output mechanisms, and controllers.

On the software side, embedded systems host embedded operating systems, various applications, and device drivers. The architecture of an embedded system involves key components such as sensors, analog-to-digital converters, memory modules, processors, digital-to-analog converters, and actuators. These components operate within the framework of either Harvard or Von Neumann architectures, serving as the foundational structure for embedded system designs.

The design process for embedded systems typically begins with defining product requirements and specifications. This phase, crucial for setting the foundation of the design, involves input from various stakeholders and experts to ensure that the resulting product meets market demands and user expectations.

Hardware Software Tradeoff

When navigating the hardware-software tradeoff in embedded system design, it’s crucial to consider the implications of implementing certain subsystems in hardware versus software. While hardware implementations typically offer enhanced operational speed, they may come at the expense of increased power requirements.

For instance, certain subsystems typically provided in microcontroller hardware, such as the real-time clock, system clock, pulse width modulation, timers, and serial communication, can also be implemented in software. Software implementations bring several advantages, including flexibility for accommodating new hardware iterations, programmability for intricate operations, and expedited development cycles. They also afford modularity and portability, and they leverage standard software engineering tools. Moreover, high-speed microprocessors enable swift execution of complex functions.

On the other hand, hardware implementations boast reduced program memory requirements and can minimize the number of chips required, albeit potentially at a higher cost. Additionally, internally embedded codes enhance security compared to external ROM storage solutions. Thus, the optimal choice hinges on balancing performance, cost considerations, and security requirements specific to the embedded system’s context.
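
To make the trade-off concrete, consider pulse width modulation implemented in software. The sketch below emulates the counter-and-compare logic that a software PWM would run on every timer tick; the function name, the tick counts, and the duty cycle are hypothetical. A hardware PWM peripheral performs the same comparison in dedicated logic, so the CPU is free for other work.

```python
def software_pwm(duty_percent, period_ticks=10, periods=2):
    """Emulate a software PWM: compare a counter with a threshold every tick.

    On a real microcontroller this loop body would run in a timer interrupt
    and cost CPU cycles on every tick. Returns the output level per tick.
    """
    threshold = period_ticks * duty_percent // 100
    samples = []
    for tick in range(period_ticks * periods):
        counter = tick % period_ticks          # free-running period counter
        samples.append(1 if counter < threshold else 0)
    return samples


# A 30% duty cycle over two periods of ten ticks each.
print(software_pwm(30))
```

The software version is trivial to change (a new duty cycle is just a new argument), which illustrates the flexibility argument, while its per-tick CPU cost illustrates the performance argument for hardware.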

Traditional design

Traditional embedded system design follows a structured, sequential approach. The first step (milestone 1), architecture design, is the specification of the embedded system with regard to functionality, power consumption, costs, and so on.

The second step, partitioning, separates the design into two parts:

• A hardware part, that deals with the functionality implemented in hardware add-on components like ASICs or IP cores.

• A software part, that deals with code running on a microcontroller, running alone or together with a real-time-operating system (RTOS). Microprocessor selection, a pivotal challenge, involves assessing various factors like performance, cost, power efficiency, software tools, legacy compatibility, RTOS support, and simulation models.

The second step is mostly based on the experience and intuition of the system designer. After completing this step, the complete hardware architecture will be designed and implemented (milestones 3 and 4). After the target hardware is available, the software partitioning can be implemented.

The last step of this sequential methodology is the testing of the complete system, which means the evaluation of the behavior of all the hardware and software components. Unfortunately developers can only verify the correctness of their hardware/software partitioning in this late development phase. If there are any uncorrectable errors, the design flow must restart from the beginning, which can result in enormous costs.

In the rapidly evolving landscape of electronics, driven not only by innovation but also by evolving consumer demands, the imperative for smarter devices becomes increasingly evident. With technology becoming ubiquitous in both personal and professional spheres, the expectation for enhanced functionality continues to rise. However, conventional design practices often suffer from early and rigid hardware-software splits, leading to suboptimal designs and compatibility challenges during integration.

Most of all, this design process restricts the ability to explore hardware-software trade-offs, such as moving functionality from hardware to software or vice versa. This lack of flexibility ultimately lengthens time-to-market and hinders efficient product deployment.

This linear methodology therefore faces limitations in an increasingly complex technological landscape, which motivates hardware-software co-design. This collaborative approach, which can also draw on machine learning, optimizes hardware and software configurations together, ensuring scalability and alignment with evolving demands and advancements across diverse applications.

Understanding Hardware-Software Co-Design (HSCD)

Hardware-Software Co-Design (HSCD) is a design methodology that involves the simultaneous development of hardware and software components for embedded systems. Unlike traditional approaches where hardware and software are developed in isolation and then integrated later in the design process, HSCD emphasizes the close collaboration between hardware and software engineers from the outset. By jointly optimizing hardware and software architectures, HSCD aims to achieve higher performance, lower power consumption, and faster time-to-market for embedded systems.

The Synergy of Hardware and Software

At the heart of HSCD lies the synergy between hardware and software components. HSCD recognizes the interdependence between the two domains and seeks to leverage their combined strengths for optimal system performance.

The landscape of electronic system design is evolving, driven by factors like portability, escalating software and hardware complexity, and the demand for low-power, high-speed applications. This evolution gravitates towards System on Chip (SoC) architectures that integrate heterogeneous components such as DSPs and FPGAs, epitomizing the shift towards Hardware/Software Co-Design (HSCD). Hardware/software co-design aims at the cooperative, unified design of hardware and software components: it means meeting system-level objectives by exploiting the synergism of hardware and software through their concurrent design.

In contemporary systems, whether electronic or those housing electronic subsystems for monitoring and control, a fundamental partitioning often unfolds between data units and control units. While the data unit executes operations like addition and subtraction on data elements, the control unit governs these operations via control signals. The design of these units can adopt diverse methodologies: software-only, hardware-only, or a harmonious amalgamation of both, contingent on non-functional constraints such as area, speed, power, and cost.

A software design methodology can be selected for systems whose specifications have few timing-related issues and few area constraints. Software designs occupy less area but run at lower speed. Designing a high-speed system requires timing to be considered, and a hardware design methodology is one way to achieve high speed, at the cost of more area than a software design.

The demands of present-day SoC designs for high speed, small area, portability, and low power have created the need to combine the hardware and software design methodologies, an approach called Hardware/Software Co-Design. Co-design can be defined as the process of designing and integrating different components onto a single IC or system. The components can be hardware components such as ASICs, software components running on a microprocessor or microcontroller, or electrical or mechanical components.

By co-designing hardware and software in tandem, developers can exploit the strengths of each domain to overcome the limitations of the other. For example, hardware acceleration can offload compute-intensive tasks from software, improving performance and energy efficiency. Conversely, software optimizations can leverage hardware features to maximize throughput and minimize latency. By leveraging this synergistic relationship, HSCD enables developers to create embedded systems that are greater than the sum of their parts. The dividends of HSCD extend across the PCB industry, fostering manufacturing efficiency, innovative design paradigms, cost reduction, and expedited prototype-to-market cycles.
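
How much an accelerator helps depends on how much of the workload it can take over. One common way to estimate this is Amdahl's law, which the article does not name but which applies directly to offloading. The sketch below assumes the offloaded and remaining parts run one after the other; the function name and the numbers are illustrative.

```python
def overall_speedup(offloaded_fraction, accelerator_speedup):
    """Amdahl's law: system speedup when part of the work is accelerated.

    offloaded_fraction: share of original run time moved to hardware (0..1).
    accelerator_speedup: how many times faster the hardware runs that share.
    """
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if accelerator_speedup <= 0:
        raise ValueError("accelerator_speedup must be positive")
    remaining = 1.0 - offloaded_fraction
    return 1.0 / (remaining + offloaded_fraction / accelerator_speedup)


# Hypothetical case: 80% of the run time is offloaded to a 10x accelerator.
print(round(overall_speedup(0.8, 10), 2))
```

The result is well below 10x because the 20% left in software now dominates, which is one reason co-design looks at both domains together rather than optimizing hardware alone.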

Benefits of HSCD

The benefits of HSCD are manifold. Firstly, by co-designing hardware and software components in parallel, developers can identify and address system-level bottlenecks early in the design process, reducing the risk of costly redesigns later on. Secondly, HSCD enables developers to achieve higher levels of performance, efficiency, and scalability by optimizing hardware and software architectures holistically. Thirdly, HSCD facilitates rapid prototyping and iteration, allowing developers to quickly evaluate different design choices and iterate on their designs in real-time.

Applications of HSCD

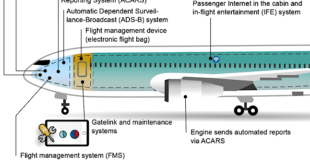

HSCD finds applications in a wide range of domains, including automotive, aerospace, telecommunications, consumer electronics, and industrial automation. In automotive systems, for example, HSCD enables the development of advanced driver assistance systems (ADAS) that combine hardware accelerators for image processing with software algorithms for object detection and classification. In aerospace applications, HSCD is used to design avionics systems that integrate hardware-based flight controllers with software-based navigation algorithms.

Hardware-software co-design process: Co-specification, Co-synthesis, and Co-simulation/Co-verification

Hardware-software co-design is a multifaceted process that encompasses various stages to ensure seamless integration and optimization of both hardware and software components. The process typically involves co-specification, co-synthesis, and co-simulation/co-verification, each playing a crucial role in achieving the desired system functionality and performance.

Co-specification is the initial phase where engineers develop a comprehensive system specification outlining the hardware and software modules required for the system, as well as the relationships and interactions between them. This specification serves as a blueprint for the subsequent design stages, providing clarity on the system’s requirements and constraints.

Co-synthesis involves the automatic or semi-automatic design of hardware and software modules to fulfill the specified requirements. During this phase, engineers utilize design tools and methodologies to generate hardware and software implementations that are optimized for performance, power consumption, and other relevant metrics. The goal is to iteratively refine the design to meet the specified objectives while balancing trade-offs between hardware and software implementations.

Co-simulation and co-verification are integral aspects of the co-design process, enabling engineers to assess the system’s behavior and functionality through simultaneous simulation of both hardware and software components. By running coordinated simulations, engineers can validate the design’s correctness, performance, and interoperability, identifying and addressing potential issues early in the development cycle. This iterative process of simulation and verification helps ensure that the final integrated system meets the specified requirements and functions as intended.

Ultimately, hardware-software co-design is a collaborative endeavor that requires close coordination between hardware and software engineers throughout the design process. By integrating co-specification, co-synthesis, and co-simulation/co-verification into the development workflow, teams can streamline the design process, improve efficiency, and deliver high-quality, optimized systems that meet the demands of modern applications.

HW/SW Co-Specification

HW/SW Co-Specification is the foundational step in the collaborative design of hardware and software systems, prioritizing a formal specification of the system’s design rather than focusing on specific hardware or software architectures, such as particular microcontrollers or IP-cores. By leveraging various methods from mathematics and computer science, including petri-nets, data flow graphs, state machines, and parallel programming languages, this methodology aims to construct a comprehensive description of the system’s behavior.

This specification effort yields a decomposition of the system’s functional behavior, resulting in a set of components that each implement distinct parts of the overall functionality. By employing formal description methods, designers can explore different alternatives for implementing these components, fostering flexibility and adaptability in the design process.

The co-design of HW/SW systems typically unfolds across four primary phases, as depicted in the diagram: Modeling, Partitioning, Co-Synthesis, and Co-Simulation. In the Modeling phase, designers develop abstract representations of the system’s behavior and structure, laying the groundwork for subsequent design decisions. The Partitioning phase involves dividing the system into hardware and software components, balancing performance, power consumption, and other design considerations. Co-Synthesis entails the automated or semi-automated generation of hardware and software implementations based on the specified requirements and constraints. Finally, Co-Simulation facilitates the simultaneous simulation of both hardware and software components, enabling designers to validate the system’s behavior and performance before committing to a final design.

Modeling:

Modeling constitutes a crucial phase in the design process, involving the precise delineation of system concepts and constraints to refine the system’s specification. At this stage, designers not only specify the system’s functionality but also develop software and hardware models to represent its behavior and structure. One primary challenge is selecting an appropriate specification methodology tailored to the target system. Some researchers advocate for formal languages capable of producing code with provable correctness, ensuring robustness and reliability in the final design.

The modeling process can embark on three distinct paths, contingent upon its initial conditions:

- Starting with an Existing Software Implementation: In scenarios where an operational software solution exists for the problem at hand, designers may leverage this implementation as a starting point for modeling. This approach allows for the translation of software functionality into a formal specification, guiding subsequent design decisions.

- Leveraging Existing Hardware: Alternatively, if tangible hardware components, such as chips, are available, designers can utilize these hardware implementations as the foundation for modeling. This route facilitates the translation of hardware functionalities into an abstract representation, informing the subsequent design process.

- Specification-Driven Modeling: In cases where neither an existing software implementation nor tangible hardware components are accessible, designers rely solely on provided specifications. This scenario necessitates an open-ended approach to modeling, affording designers the flexibility to devise a suitable model that aligns with the given requirements and constraints.

Regardless of the starting point, the modeling phase serves as a pivotal precursor to subsequent design activities, setting the stage for informed decision-making and ensuring the fidelity of the final system design.

Hierarchical Modelling methodology

Hierarchical modeling methodology constitutes a systematic approach to designing complex systems, involving the precise delineation of system functionality and the exploration of various system-level implementations. The following steps outline the process of creating a system-level design:

- Specification Capture: The process begins with decomposing the system’s functionality into manageable pieces, creating a conceptual model of the system. This initial step yields a functional specification, which serves as a high-level description of the system’s behavior and capabilities, devoid of any implementation details.

- Exploration: Subsequently, designers embark on an exploration phase, wherein they evaluate a range of design alternatives to identify the most optimal solution. This involves assessing various architectural choices, algorithms, and design parameters to gauge their respective merits and drawbacks. Through rigorous analysis and experimentation, designers aim to uncover the design configuration that best aligns with the project requirements and objectives.

- Specification Refinement: Building upon the insights gained from the exploration phase, the initial functional specification undergoes refinement to incorporate the decisions and trade-offs identified during the exploration process. This refined specification serves as a revised blueprint, capturing the refined system requirements and design constraints, thereby guiding the subsequent implementation steps.

- Software and Hardware Implementation: With the refined specification in hand, designers proceed to implement each component of the system using a combination of software and hardware design techniques. This entails translating the abstract system design into concrete software algorithms and hardware architectures, ensuring that each component functions seamlessly within the overall system framework.

- Physical Design: Finally, the design process culminates in the generation of manufacturing data for each component, facilitating the fabrication and assembly of the physical system. This phase involves translating the software and hardware implementations into tangible hardware components, such as integrated circuits or printed circuit boards, ready for deployment in real-world applications.

By adhering to the hierarchical modeling methodology, designers can systematically navigate the complexities of system design, from conceptualization to physical realization, ensuring the development of robust and efficient systems that meet the desired specifications and performance criteria.
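
The exploration step above compares design alternatives across several metrics at once. One simple way to narrow the field, sketched here under assumed metrics and made-up candidate figures, is to keep only the Pareto-optimal candidates: those for which no other candidate is at least as good on every metric and strictly better on one.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    latency_ms: float   # lower is better
    power_mw: float     # lower is better
    cost_usd: float     # lower is better


def dominates(a, b):
    """a dominates b if it is no worse on every metric and better on one."""
    pairs = list(zip(a[1:], b[1:]))          # skip the name field
    return all(x <= y for x, y in pairs) and any(x < y for x, y in pairs)


def pareto_front(candidates):
    """Return the candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]


# Hypothetical design alternatives for illustration only.
designs = [
    Candidate("sw_only", 40, 120, 3),
    Candidate("hw_accel", 5, 200, 9),
    Candidate("mixed", 12, 150, 5),
    Candidate("mixed_slow", 15, 160, 6),
]
print([c.name for c in pareto_front(designs)])
```

Choosing among the surviving candidates still requires judgment about which metric matters most for the product.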

There are many models for describing a system’s functionality, each with advantages and limitations that suit it to specific classes of systems:

- Dataflow Graph: This model breaks down functionality into discrete activities that transform data, illustrating the flow of data between these activities. It provides a visual representation of data dependencies and processing stages within the system.

- Finite-State Machine (FSM): FSM represents the system as a collection of states interconnected by transitions triggered by specific events. It is particularly suitable for modeling systems with discrete operational modes or sequences of events.

- Communicating Sequential Processes (CSP): CSP decomposes the system into concurrently executing processes, which communicate through message passing. It is adept at capturing parallelism and synchronization in systems where multiple activities occur simultaneously.

- Program-State Machine (PSM): PSM integrates the features of FSM and CSP, allowing each state in a concurrent FSM to incorporate actions described by program instructions. This model facilitates the representation of complex systems with both state-based behavior and concurrent processing.

While each model offers unique benefits, none is universally applicable to all types of systems. The selection of the most suitable model depends on the specific characteristics and requirements of the system under consideration.
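
As an illustration of the finite-state machine model from the list above, the following sketch describes a controller as a transition table. The states, events, and the motor-controller scenario are hypothetical; the point is that such a table is implementation-neutral and could later be realized either as software or as HDL.

```python
# Transition table: (current state, event) -> next state.
TRANSITIONS = {
    ("IDLE", "start"): "RUNNING",
    ("RUNNING", "stop"): "IDLE",
    ("RUNNING", "overcurrent"): "FAULT",
    ("FAULT", "reset"): "IDLE",
}


def step(state, event):
    """Return the next state; unmatched events leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events, state="IDLE"):
    """Feed a sequence of events to the FSM and return the visited states."""
    trace = [state]
    for event in events:
        state = step(state, event)
        trace.append(state)
    return trace


print(run(["start", "overcurrent", "start", "reset"]))
```

Note that the second "start" is ignored while the machine is in FAULT, a behavior that is easy to read off the table and easy to check against the specification.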

In terms of specifying functionality, designers commonly utilize a range of languages tailored to their preferences and the nature of the system:

- Hardware Description Languages (HDLs) such as VHDL and Verilog: These languages excel in describing hardware behavior, offering constructs for specifying digital circuitry and concurrent processes. They are favored for modeling systems with intricate hardware components and interactions.

- Software Programming Languages (e.g., C, C++): Software-type languages are preferred for describing system behavior at a higher level of abstraction, focusing on algorithms, data structures, and sequential execution. They are well-suited for modeling software-centric systems and algorithms.

- Domain-Specific Languages (e.g., Handel-C, SystemC): These languages are tailored to specific application domains, providing constructs optimized for modeling particular types of systems or behaviors. They offer a balance between hardware and software abstraction levels, catering to diverse design requirements.

Ultimately, the choice of modeling language depends on factors such as design complexity, performance constraints, existing expertise, and design objectives, with designers selecting the language that best aligns with their specific design needs and preferences.

Partitioning: how to divide specified functions between hardware, software and Interface

Partitioning, the process of dividing specified functions between hardware and software, is a critical step in system design that hinges on evaluating various alternatives to optimize performance, cost, and other constraints. The functional components identified in the initial specification phase can be implemented either in hardware using FPGA or ASIC-based systems, or in software.

The goal of the partitioning process is to evaluate these hardware/software alternatives under constraints such as time, size, cost, and power. Depending on the properties of the functional parts, such as the time complexity of their algorithms, the partitioning process tries to find the best alternative. The evaluation relies on metric functions such as complexity or implementation cost, and on tools for rapid evaluation and user-directed exploration of the design space.
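
A toy version of this evaluation can be written as an exhaustive search. The sketch below is not a production partitioning algorithm: it assumes the functions run one after another (so total time is a simple sum), uses hardware area as the only constraint, and relies on invented per-function estimates. Real tools use richer cost models and heuristics because the search space grows exponentially.

```python
from itertools import product

# (name, software time, hardware time, hardware area): hypothetical estimates
FUNCTIONS = [
    ("fir_filter", 40, 4, 30),
    ("fft", 60, 6, 50),
    ("protocol", 10, 8, 40),
    ("ui", 5, 5, 20),
]


def partition(functions, area_budget):
    """Try every hw/sw assignment; return (time, area, mapping) of the fastest
    one whose hardware area fits the budget."""
    best = None
    for choice in product(("sw", "hw"), repeat=len(functions)):
        area = sum(f[3] for f, c in zip(functions, choice) if c == "hw")
        if area > area_budget:
            continue                        # violates the area constraint
        total_time = sum(f[2] if c == "hw" else f[1]
                         for f, c in zip(functions, choice))
        if best is None or total_time < best[0]:
            mapping = {f[0]: c for f, c in zip(functions, choice)}
            best = (total_time, area, mapping)
    return best


print(partition(FUNCTIONS, area_budget=80))
```

With these figures the search moves the two compute-heavy functions to hardware and leaves the rest in software, since accelerating them buys the most time per unit of area.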

While automatic partitioning remains challenging, designers increasingly rely on semi-automatic approaches, such as design space exploration, to navigate the complex trade-offs involved. FPGA or ASIC-based systems typically incorporate proprietary HDL code, IP blocks from manufacturers, and purchased IP blocks, alongside software components like low-level device drivers, operating systems, and high-level APIs. However, the significance of an effective interface submodule cannot be overstated, as its proper development is crucial for seamless integration and prevents disruptions during design reconfigurations.

In System-on-Chip (SoC) design, defining the hardware-software interface is of paramount importance, particularly for larger teams handling complex SoCs. The central “Interface” submodule is frequently overlooked in system design, leading to integration challenges later on. In embedded systems employing co-design methodologies, which are often programmed at a low level such as assembly code, meticulous development of interfaces is crucial, especially because design reconfigurations can significantly impact these critical modules.

Address allocation must be meticulously managed to avoid conflicts, ensuring alignment between hardware and software implementations. Effective interface design not only facilitates smoother integration but also enhances scalability and flexibility, laying a robust foundation for cohesive hardware-software co-design efforts.
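
Address conflicts of this kind are easy to check mechanically if the register map is kept in one shared description. The following sketch assumes a map given as name, base address, and size; the peripheral names and addresses are made up, and the check itself is only an illustration of the idea.

```python
def find_overlaps(regions):
    """Return pairs of region names whose address ranges overlap.

    regions maps a name to (base_address, size_in_bytes).
    """
    items = sorted(regions.items(), key=lambda kv: kv[1][0])
    overlaps = []
    for i, (name_a, (base_a, size_a)) in enumerate(items):
        for name_b, (base_b, _size_b) in items[i + 1:]:
            if base_b >= base_a + size_a:
                break   # sorted by base, so later regions cannot overlap
            overlaps.append((name_a, name_b))
    return overlaps


# Hypothetical register map shared by the hardware and software teams.
register_map = {
    "UART0": (0x4000_0000, 0x100),
    "TIMER0": (0x4000_0100, 0x100),
    "DMA": (0x4000_01F0, 0x40),     # deliberately collides with TIMER0
}
print(find_overlaps(register_map))
```

Running such a check whenever the map changes helps keep the HDL address decoder and the driver headers in agreement.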

Cosynthesis: generating the hardware and software components

After a set of best alternatives is found, the next step is the implementation of the components. This includes hardware synthesis, software synthesis, and interface synthesis.

This involves concurrent synthesis of hardware, software, and interface, leveraging available tools for implementation. All potentially available components can be analyzed using criteria like functionality, technological complexity, or testability. The source of the criteria used can be data sheets, manuals, etc. The result of this stage is a set of components for potential use, together with a ranking of them.

Codesign tools should automatically generate hardware/software interprocess communication and schedule software processes to meet timing constraints.
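
As one example of what checking a schedule against timing constraints can look like, the sketch below applies the classic Liu and Layland utilization bound for rate-monotonic scheduling of periodic tasks. This is a swapped-in textbook technique, not something the tools discussed here are known to use, and the task set is invented. The test is sufficient but not necessary: a task set that fails it may still be schedulable.

```python
def utilization(tasks):
    """Total CPU utilization of periodic tasks given as (exec_time, period)."""
    return sum(exec_time / period for exec_time, period in tasks)


def rm_bound(n):
    """Liu and Layland utilization bound for n tasks: n * (2^(1/n) - 1)."""
    return n * (2 ** (1.0 / n) - 1)


def rm_schedulable(tasks):
    """Sufficient test: True means all deadlines are met under
    rate-monotonic priorities; False is inconclusive unless utilization > 1."""
    if not tasks:
        raise ValueError("at least one task is required")
    return utilization(tasks) <= rm_bound(len(tasks))


# Hypothetical software processes: (worst-case execution time, period) in ms.
tasks = [(1, 4), (2, 8), (1, 10)]
print(round(utilization(tasks), 3), round(rm_bound(len(tasks)), 3))
print("guaranteed schedulable:", rm_schedulable(tasks))
```

If the test fails, moving a heavy task into hardware lowers the software utilization, which ties scheduling back to the partitioning decision.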

An essential goal of today’s research is to find and optimize algorithms for the evaluation of partitioning. Using these algorithms, it is theoretically possible to implement hardware/software co-design as an automated process. In current practice, hardware is typically synthesized from VHDL or Verilog, and software is coded in languages like C or C++.

DSP software poses a unique challenge because compiler support for specialized architectures is limited. High-level synthesis (HLS) has emerged as a solution, addressing the long-standing goal of automatic hardware generation from software.

System Integration

System integration represents the culmination of the hardware/software co-design process: all hardware and software components are put together and evaluated against the system specification produced in the first step. If any inconsistencies arise, the partitioning process may need to be revisited.

The algorithmic foundation of hardware/software co-design offers significant advantages, enabling early-stage verification and modification of system designs. However, certain limitations must be considered:

Insufficient knowledge: Effective implementation relies on comprehensive descriptions of system behavior and component attributes. IP cores are commonly used, but their black-box nature can hinder complete understanding and integration.

Degrees of freedom: Hardware components on their own offer limited flexibility; most of the freedom lies in substituting hardware elements for software elements and vice versa. This flexibility is particularly pronounced with ASICs and IP cores, which provide greater adaptability for specialized applications.

Co-simulation: evaluating the synthesized design

Co-simulation plays a crucial role in enhancing design integrity and safety, particularly in light of recent aircraft incidents, which underline how much depends on robust testing and fault diagnosis. Through simulation, designers can refine their designs, mitigate risks, and check performance before committing to an implementation. Co-simulation exercises hardware, software, and interfaces together, and verifies design specifications and constraints by checking that outputs are consistent with inputs. This iterative process saves time and cost and improves overall design quality and safety.
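A minimal sketch of lock-step co-simulation is shown below. It pairs a cycle-based model of a hypothetical adder peripheral with a software driver written as a Python generator, and compares the result with a plain software reference. The peripheral, its three-cycle latency, and its 16-bit result register are assumptions for illustration; real co-simulation environments couple an HDL simulator with an instruction-set simulator or compiled code.

```python
class AdderPeripheral:
    """Hypothetical hardware model: sums a block, busy for LATENCY cycles."""
    LATENCY = 3

    def __init__(self):
        self.data = []
        self.busy_cycles = 0
        self.result = 0

    def write(self, values):
        """Software-visible write: load operands and start the computation."""
        self.data = list(values)
        self.busy_cycles = self.LATENCY

    def tick(self):
        """Advance the hardware model by one clock cycle."""
        if self.busy_cycles > 0:
            self.busy_cycles -= 1
            if self.busy_cycles == 0:
                self.result = sum(self.data) & 0xFFFF  # 16-bit result register

    @property
    def ready(self):
        return self.busy_cycles == 0


def driver(hw, values):
    """Software model: each yield hands control back for one clock cycle."""
    hw.write(values)
    while not hw.ready:
        yield  # poll the status flag once per cycle
    return hw.result


def cosimulate(values, max_cycles=100):
    """Run software and hardware in lock step; return (result, cycles)."""
    hw = AdderPeripheral()
    sw = driver(hw, values)
    for cycle in range(max_cycles):
        try:
            next(sw)  # run software until it next waits on hardware
        except StopIteration as done:
            return done.value, cycle
        hw.tick()
    raise TimeoutError("software did not finish within max_cycles")


values = [1, 2, 3]
result, cycles = cosimulate(values)
golden = sum(values) & 0xFFFF  # software reference for the same inputs
print(result == golden, cycles)
```

Comparing against a software reference is the input-output consistency check in miniature, and the returned cycle count gives a first timing estimate for the interface.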

Verification: Does It Work?

Verification in embedded systems aims to find and eliminate hardware and software bugs through rigorous testing and analysis. Software verification entails executing code and monitoring its behavior, while hardware verification confirms that the hardware responds correctly to external inputs and to the software running on it. Together, these processes build confidence in the reliability and performance of the system and reduce the risk of malfunctions across the environments and conditions in which it will operate.

Validation: Did We Build the Right Thing?

Validation in embedded systems checks that the developed system aligns with its intended requirements and objectives, meeting or exceeding expectations in functionality, performance, and power efficiency. By addressing the question, “Did we build the right thing?” validation confirms that the system’s architecture is appropriate and that it performs as intended. Through testing and analysis, validation establishes that the system fulfills its purpose and is suitable for deployment in real-world scenarios.

Challenges and Considerations

While HSCD offers numerous benefits, it also presents unique challenges and considerations. Firstly, HSCD requires close collaboration between hardware and software engineers, necessitating effective communication and coordination between interdisciplinary teams. Secondly, HSCD requires specialized tools and methodologies for co-design, simulation, and verification, which may require additional training and investment. Lastly, HSCD introduces complexity and uncertainty into the design process, requiring careful planning and management to ensure successful outcomes.

Leveraging AI and Machine Learning

The advent of Artificial Intelligence (AI) and Machine Learning (ML) technologies is reshaping the landscape of hardware-software co-design. AI-driven workloads demand specialized hardware architectures optimized for performance, efficiency, and scalability.

In the context of hardware/software co-design, machine learning plays a pivotal role in streamlining input variation analysis. This process involves identifying and analyzing the potential variations or uncertainties in the input parameters that could impact the performance or behavior of the system under design. Machine learning algorithms can be trained to recognize patterns and correlations in large datasets of historical input variations and their corresponding outcomes.

By leveraging machine learning, engineers can identify which variables are most likely to lead to failure or undesirable outcomes based on past data. These identified variables can then be prioritized for further analysis or mitigation strategies. Moreover, machine learning algorithms can also help in predicting the behavior of the system under different input scenarios, enabling proactive measures to be taken to address potential issues before they manifest.
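As a rough illustration of prioritizing input variables from past data, the sketch below ranks variables by the absolute correlation between each variable and a recorded failure flag. A plain correlation ranking stands in here for a trained machine learning model, and the variable names and run records are invented for the example rather than taken from real measurements.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either side is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def rank_variables(runs, outcome="failed"):
    """Rank input variables by |correlation| with the outcome, strongest first."""
    names = [key for key in runs[0] if key != outcome]
    ys = [float(run[outcome]) for run in runs]
    scores = {name: abs(pearson([run[name] for run in runs], ys))
              for name in names}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Invented historical runs: input conditions and whether the system failed.
runs = [
    {"supply_v": 3.3, "temp_c": 25, "jitter_ps": 10, "failed": 0},
    {"supply_v": 3.2, "temp_c": 85, "jitter_ps": 12, "failed": 1},
    {"supply_v": 3.4, "temp_c": 30, "jitter_ps": 11, "failed": 0},
    {"supply_v": 3.3, "temp_c": 90, "jitter_ps": 9,  "failed": 1},
    {"supply_v": 3.2, "temp_c": 40, "jitter_ps": 10, "failed": 0},
    {"supply_v": 3.4, "temp_c": 95, "jitter_ps": 11, "failed": 1},
]

for name, strength in rank_variables(runs):
    print(f"{name}: {strength:.2f}")
```

Correlation only captures linear, single-variable effects. Interactions between variables would call for a proper model, such as a decision tree or gradient-boosted ensemble, together with a feature-importance measure.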

Overall, by harnessing the power of machine learning, input variation analysis becomes more efficient and effective. The algorithms can sift through vast amounts of data to identify critical variables and patterns, thus reducing the time and effort required for manual analysis. Additionally, machine learning enables engineers to make more informed decisions and implement targeted interventions to enhance the robustness and reliability of the system design.

AI and ML technologies have reshaped the approach to technology, shifting from hardware-first to software-first paradigms. Understanding AI workloads is pivotal for devising hardware architectures, as diverse models necessitate different hardware configurations. Specialized hardware is essential for meeting latency requirements, particularly as data processing moves to edge devices. The trend of software/hardware co-design drives hardware development to accommodate software needs, marking a departure from the past. Optimization of hardware, AI algorithms, and compilers is crucial for AI applications, requiring a phase-coupled approach. Beyond AI, this trend extends to various domains, driving the emergence of specialized processing units tailored for specific tasks, alongside efforts to streamline software-to-hardware transitions. As processing platforms become more heterogeneous, challenges arise in directing software algorithms towards hardware endpoints seamlessly, necessitating closer collaboration between software developers and hardware designers.

As AI applications proliferate across diverse domains, the need for adaptable and versatile hardware-software solutions becomes increasingly apparent.

Future Perspectives

Looking ahead, hardware-software co-design is poised to play a pivotal role in driving innovation and addressing the evolving demands of electronic systems. From edge computing and IoT devices to data centers and autonomous vehicles, HSCD offers a pathway to enhanced performance, efficiency, and reliability.

Conclusion

Hardware-Software Co-Design (HSCD) represents a paradigm shift in the design and development of electronic embedded systems. By seamlessly integrating hardware and software components at the design stage, HSCD enables developers to achieve higher levels of performance, efficiency, and scalability than ever before. From automotive and aerospace systems to telecommunications and consumer electronics, HSCD is driving innovation and unlocking new possibilities across a wide range of industries. As the demand for intelligent, connected, and energy-efficient embedded systems continues to grow, HSCD is poised to play an increasingly vital role in shaping the future of technology.


International Defense Security & Technology Your trusted Source for News, Research and Analysis
