Weaponized AI: How Generative Models Are Fueling Extremism

Terrorists are turning generative AI into a digital weapon—spreading propaganda, deepfakes, and cyber threats with alarming speed.

Artificial Intelligence (AI) has rapidly evolved, becoming a central topic of discussion in public, academic, and political spheres. Among its many branches, generative AI has emerged as a powerful tool capable of creating text, images, audio, and video, offering transformative potential across industries such as education, entertainment, healthcare, and scientific research.

However, while AI’s advancements present numerous benefits, they also introduce significant risks. The ability of generative AI to fabricate highly realistic content has raised concerns about its misuse, particularly by malicious actors—including terrorist organizations—who seek to exploit this technology for propaganda, recruitment, and misinformation. This article explores how terrorist groups are leveraging generative AI to enhance their operations, manipulate public perception, and advance their extremist agendas.

Understanding Generative AI and Its Capabilities

Generative AI refers to a subset of artificial intelligence that creates new content rather than just analyzing or classifying existing data. Unlike traditional AI models that predict or categorize information, generative AI can produce human-like text, realistic images, deepfake videos, and even synthetic voices.

Some of the most well-known generative AI models include:

- Text-based AI: ChatGPT, Claude, Google Gemini, Llama 2

- Image & Video Generators: DALL-E 3, Midjourney, Stable Diffusion

- Voice Generators: Microsoft VALL-E

These AI systems, originally designed for positive applications, have also drawn significant interest from terrorist groups seeking to exploit them for illicit purposes.

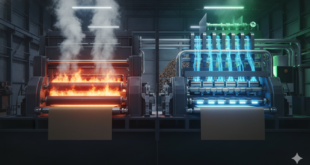

A Game-Changer in Efficiency and Accessibility

As of February 2025, ChatGPT has over 400 million weekly active users, reflecting its rapid global adoption. In the U.S., around 67.7 million people use it monthly, with the platform processing over 1 billion queries daily. This revolutionary technological advancement has transformed the way individuals and companies manage their everyday tasks, thanks to its ability to communicate in natural language and provide quick, accurate information on a wide range of topics. However, alongside its incredible benefits, ChatGPT also brings potential risks, particularly the misuse of such advanced technology by malicious actors.

ChatGPT’s user-friendly design makes it accessible to a broad audience, including those with little to no technical knowledge. Users can interact with ChatGPT as they would with another person, making it an invaluable tool for various applications. In customer service, ChatGPT can handle customer inquiries efficiently, reducing wait times and improving user satisfaction. In content creation, it assists in drafting emails, generating reports, and producing high-quality content quickly. For research assistance, ChatGPT provides immediate answers, supporting academic and professional research with relevant information. As a personal assistant, it simplifies scheduling, reminders, and task management, enhancing organizational capabilities.

The Dark Side: Potential Misuse by Malicious Actors

Despite its many advantages, ChatGPT’s capabilities also present significant risks. The use of sophisticated deep-learning models like ChatGPT by terrorists and violent extremists is a growing concern, because these models could be exploited to enhance malicious operations both online and in the real world.

Potential Threats and Misuses

Extremists could exploit ChatGPT for operational planning, enhancing the efficiency and secrecy of their strategies. The AI’s capability to generate persuasive and targeted content could significantly boost the creation and dissemination of extremist propaganda. Additionally, ChatGPT’s natural language processing proficiency poses risks for social engineering and impersonation attacks, leading to fraud or unauthorized access. Furthermore, the AI could be misused to generate malicious code, facilitating various forms of cybercrime. These potential misuses highlight the need for robust safeguards and monitoring to prevent such exploitation.

Polarizing or Emotional Content

Polarizing or emotional content is strategically employed to create division and elicit strong emotional responses among target audiences. This type of content can exploit societal fractures by emphasizing contentious issues, often leading to increased tensions and conflicts. The goal is to manipulate individuals’ emotions, such as anger, fear, or hatred, to deepen divides and disrupt social cohesion. By doing so, malicious actors can destabilize communities, polarize opinions, and amplify ideological differences, making it easier to sway public sentiment and achieve their objectives.

AI-Generated Propaganda and Disinformation

Disinformation and misinformation both spread false information and distort public perception. Disinformation is false information deliberately created and disseminated to deceive people, while misinformation is incorrect information spread without necessarily intending to mislead. Both can undermine trust in institutions, confuse the public, and create widespread uncertainty. By distorting reality and promoting false narratives, malicious actors can influence opinions, shape public discourse, and achieve strategic goals that align with their interests.

Propaganda has always been a critical tool for terrorist organizations, and generative AI has significantly enhanced its reach and effectiveness. By leveraging AI-generated fake news articles, deepfake videos, and synthetic images, extremist groups can manipulate public perception on an unprecedented scale. AI-generated content can fabricate distressing images of war victims or violent attacks, amplifying public outrage and inciting unrest. Additionally, AI can craft misleading narratives that align with extremist ideologies, making false reports appear legitimate and persuasive. The rapid dissemination of such content across social media platforms allows extremist propaganda to spread faster than ever before.

Case Study: The Use of AI in the Israel-Gaza Conflict

During the recent Israel-Gaza conflict, various AI-generated images and videos surfaced online, specifically designed to manipulate public sentiment. Reports indicated that terrorist groups exploited AI to fabricate graphic images of war casualties, inciting violence and fueling tensions. Deepfake videos emerged portraying IDF soldiers in compromising situations, aiming to damage morale and weaken public trust. AI-written articles promoting false narratives were widely shared on extremist forums, further exacerbating misinformation. These tactics illustrate how generative AI has become a powerful weapon in modern disinformation campaigns.

AI-Generated Deepfakes and Psychological Warfare

The emergence of deepfake technology has introduced a new dimension to psychological warfare, enabling terrorists to manipulate reality with alarming precision. AI-generated deepfakes can create fake videos of political leaders making inflammatory statements, leading to widespread misinformation and political instability. Terrorists can also impersonate security officials to spread false orders or misinformation, causing confusion and panic. Additionally, AI-generated hostage situations or fabricated threats can instill fear and destabilize entire communities.

The inability to distinguish between real and AI-generated content presents a serious national security risk. Deepfakes can be weaponized to undermine trust in legitimate media, destabilize governments, and influence public opinion based on fabricated events. As this technology becomes more sophisticated, the potential for large-scale manipulation and deception grows, posing a significant challenge to global security.

AI-Powered Recruitment Strategies

Recruitment efforts are focused on expanding membership, gaining followers, and gathering support for extremist causes. By appealing to individuals’ sense of belonging, purpose, and identity, recruiters can attract new members to their organizations. Recruitment strategies often involve personalized messaging, propaganda, and targeted outreach that resonates with potential recruits’ beliefs and grievances. Successful recruitment not only increases the size and strength of extremist groups but also enhances their ability to influence and mobilize supporters.

Terrorist organizations are increasingly adopting AI-driven chatbots and large language models (LLMs) to refine their recruitment strategies. AI-powered systems can engage potential recruits in highly personalized conversations, analyzing their social media activity to identify vulnerabilities and tailor messages accordingly. By leveraging AI’s ability to simulate human interaction, extremist groups can create an illusion of personal connection, gradually leading individuals toward radicalization.

Example: AI-Driven Chatbots for Extremist Recruitment

Recent reports suggest that terrorist networks have experimented with LLMs trained on extremist literature to recruit and indoctrinate individuals. These AI-powered recruiters can adapt their messaging to appeal to different demographics, making radicalization efforts more efficient and difficult to detect. By continuously interacting with potential recruits, AI-driven systems can reinforce extremist ideologies over time, drawing individuals deeper into violent beliefs.

Tactical Learning

Tactical learning involves the acquisition of specific knowledge or skills necessary for carrying out operations. Extremist groups seek out information on various topics, such as combat techniques, surveillance methods, or bomb-making instructions. Access to this knowledge enhances their operational capabilities and effectiveness. By continually updating their tactical knowledge, these groups can adapt to new challenges, improve their methodologies, and maintain a strategic advantage over their adversaries.

Attack Planning

Attack planning is the process of strategizing and preparing for specific attacks. This involves detailed planning, coordination, and execution of operations intended to achieve maximum impact. Planning activities can include selecting targets, gathering intelligence, acquiring necessary resources, and rehearsing the attack. By meticulously planning their actions, extremist groups aim to maximize the effectiveness and success of their operations, often seeking to cause significant harm, disrupt societal functions, and draw attention to their cause.

Security Concerns: Jailbreaking the Safeguards

The potential for misuse extends beyond a model’s intended functions. Through prompt manipulation (“jailbreaking”), extremists might circumvent the safeguards designed to prevent the generation of harmful content. Operating multiple accounts could also let them use different large language models for different purposes, maximizing their reach and impact.

AI-Enhanced Cyberterrorism

Generative AI is also being exploited in cyberterrorism, significantly increasing the effectiveness of cyberattacks. AI-powered systems enable the automation of phishing attacks, generating emails and messages that mimic legitimate communications with near-perfect accuracy. Terrorists can leverage AI to develop sophisticated malware capable of bypassing conventional cybersecurity defenses, making hacking attempts more effective and difficult to trace.

Beyond cyberattacks, AI-generated deepfake identities facilitate financial fraud and illicit transactions. By synthesizing realistic voices and images, terrorists can impersonate individuals in banking, defense, and intelligence sectors, increasing the risk of security breaches. This technological advancement in cyberterrorism threatens not only financial institutions but also national security frameworks, highlighting the urgent need for AI-driven countermeasures.

Research and Reports on Security Implications

Several studies and reports have highlighted the risks associated with the misuse of generative AI models like ChatGPT. The 2020 McGuffie & Newhouse study on GPT-3 abuse serves as a cautionary tale: the researchers explored the vulnerabilities of GPT-3 and found that bad actors could exploit such models to radicalize and recruit individuals online at scale.

The April 2023 EUROPOL Innovation Lab Report identifies concerning applications, including impersonation, social engineering attacks, and cybercrime tools. The August 2023 ActiveFence Study on Safeguard Gaps exposes a critical vulnerability: the potential inadequacy of existing safeguards in large language models. The August 2023 Australian eSafety Commissioner Report raises concerns about terrorists using AI for financing terrorism, cybercrime, and propaganda.

Implications and Recommendations

The potential for AI to serve as both a tool and a threat in the context of extremist activity highlights the need for vigilant monitoring and proactive measures by governments and developers. Developers have already begun addressing these issues. For instance, an OpenAI spokesperson said the company is “always working to make our models safer and more robust against adversarial attacks.” However, it remains unclear whether this proactive stance is industry-wide or limited to specific companies. Moreover, because the studies cited above suggest that existing safeguards can fail even without jailbreaks, focusing on jailbreak prevention alone is insufficient.

A comprehensive response requires a collaborative effort across the industry. Governments are beginning to recognize the need for regulation, as evidenced by the European Union’s agreement on an AI Act in December 2023 and President Biden’s October 2023 executive order imposing new rules on AI companies and directing federal agencies to establish guardrails around the technology.

The Need for Vigilant Safeguards and Research

As the adoption of ChatGPT and similar technologies continues to grow, so does the urgency to address these security challenges. It is crucial for researchers, policymakers, and tech companies to collaborate on developing robust safeguards and countermeasures to prevent misuse. Key areas of focus include security vulnerability assessments, countermeasure development, user education, and regulatory oversight.

AI and Counterterrorism: Leveraging Technology for Security

While the risks of AI in the hands of terrorists are significant, AI also presents powerful opportunities to enhance counterterrorism efforts. Governments and security agencies are increasingly utilizing AI-driven technologies to prevent attacks, disrupt extremist networks, and strengthen national security.

Surveillance and Monitoring

One of AI’s most critical applications in counterterrorism is its ability to analyze live video feeds and identify suspicious behavior or objects in real time. AI-powered surveillance systems can detect anomalies in crowded public spaces, alerting authorities to potential threats before an incident occurs. Facial recognition and pattern analysis enable law enforcement to track known extremists and identify individuals exhibiting concerning behavior. These technologies allow for a proactive approach to security, reducing response time and increasing the likelihood of preventing attacks.

Counter-Propaganda and De-Radicalization

AI can play a crucial role in curbing the spread of terrorist propaganda and assisting in de-radicalization efforts. Advanced AI algorithms can automatically detect and remove extremist content from social media and online platforms, limiting the reach of terrorist organizations. By analyzing language patterns, AI can identify emerging extremist narratives and counter them with accurate, fact-based information. Additionally, AI-driven psychological analysis can help de-radicalization programs by identifying at-risk individuals based on their online behavior and engagement with extremist content. This enables early intervention strategies, potentially steering vulnerable individuals away from radicalization.
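To make the detection step more concrete, here is a deliberately simplified sketch of how a platform might triage posts for human review. It is an illustration only, not how any real moderation system works: the `FLAGGED_TERMS` lexicon, its weights, the `REVIEW_THRESHOLD` cutoff, and the `triage_post` function are all hypothetical, and production systems would more plausibly rely on trained classifiers, expert-curated signals, and human oversight.

```python
from dataclasses import dataclass

# Hypothetical placeholder lexicon (term -> weight). These entries are
# assumptions for illustration and carry no real-world meaning.
FLAGGED_TERMS = {
    "placeholder_term_a": 2.0,
    "placeholder_term_b": 1.0,
}

# Assumed cutoff, chosen only for this example.
REVIEW_THRESHOLD = 2.0


@dataclass
class Triage:
    score: float
    needs_review: bool


def triage_post(text: str) -> Triage:
    """Sum the weights of flagged terms found in a post and compare to the cutoff."""
    tokens = text.lower().split()
    score = sum(FLAGGED_TERMS.get(token, 0.0) for token in tokens)
    return Triage(score=score, needs_review=score >= REVIEW_THRESHOLD)


if __name__ == "__main__":
    for post in ["an ordinary post", "placeholder_term_a appears here"]:
        print(post, "->", triage_post(post))
```

The sketch routes posts to a human reviewer rather than removing them automatically, which reflects the article's later point that such tools need to be balanced against civil liberties and the risk of false positives.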

Predictive Analytics and Threat Detection

AI’s ability to process vast amounts of data makes it a powerful tool for predictive analytics in counterterrorism. By analyzing historical attack patterns, social media activity, and other intelligence sources, AI can identify indicators of radicalization and predict potential threats. Machine learning models can assess individual and group behavior, detecting signs of extremism and alerting authorities to emerging risks. This predictive capability enhances intelligence efforts, allowing counterterrorism units to act before an attack is executed.

Data Analysis and Intelligence Gathering

AI significantly improves intelligence operations by integrating data from multiple sources, including surveillance footage, digital communications, financial transactions, and social media. Natural Language Processing (NLP) enables AI to analyze vast amounts of text, audio, and video content, identifying extremist rhetoric, coded language, or suspicious conversations. By automating intelligence gathering and analysis, AI enhances the ability of security agencies to uncover terrorist networks, track illicit financing, and disrupt coordinated attacks.

Operational Efficiency in Counterterrorism

AI enhances the efficiency of counterterrorism operations by optimizing resource allocation and decision-making processes. AI-driven tools can analyze real-time data to assist law enforcement and military personnel in strategic planning, ensuring that resources are deployed where they are most needed. AI-powered simulations can help security agencies anticipate different attack scenarios, improving readiness and response strategies. Additionally, AI-assisted decision-making tools provide commanders with real-time recommendations based on intelligence data, enabling faster and more effective responses to potential threats.

While AI offers significant advantages in counterterrorism, its deployment must balance security measures with ethical considerations. Safeguarding civil liberties, ensuring data privacy, and preventing misuse of AI technologies are crucial in maintaining the democratic principles that counterterrorism efforts aim to protect. Striking the right balance between security and personal freedoms will determine how effectively AI can be leveraged to combat terrorism while preserving the values of an open society.

Future Directions and Collaboration

Governments, technology companies, and security researchers need to collaborate on establishing regulations and best practices to minimize the risk of misuse. Increased cooperation between the private and public sectors, academia, the tech industry, and the security community is essential. Such collaboration would enhance awareness of AI misuse by violent extremists and foster the development of more sophisticated protections and countermeasures. Without these efforts, the dire predictions of industry leaders, such as OpenAI’s chief executive Samuel Altman—who warned, “if this technology goes wrong, it can go quite wrong”—may come true.

Conclusion

References and Resources also include:

https://ict.org.il/generating-terror-the-risks-of-generative-ai-exploitation/

https://icct.nl/publication/exploitation-generative-ai-terrorist-groups

International Defense Security & Technology Your trusted Source for News, Research and Analysis
