The growing demand for high-efficiency fighter aircraft and commercial airliners, together with ever-evolving aerospace and defense requirements, is driving demand for next-generation airborne electronics systems. Air transportation agencies and aviation OEMs across the globe are striving to build next-generation airborne electronics systems that make flying more reliable, predictable, and safe.

DO-254

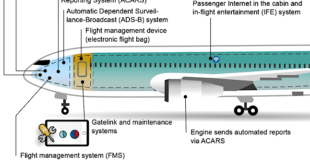

Airborne electronics such as communication systems, transceivers, guidance and navigation systems, flight control computers, and fire-control systems are among the critical components of an aircraft. The design of airborne electronics systems and subsystems demands high safety and reliability to ensure their airworthiness. All major avionics OEMs, R&D companies, and systems engineering companies that design airborne electronics hardware and software must ensure compliance with regulatory standards such as DO-254, DO-178B/C, ARINC, MIL-STDs, and DO-160 to develop high-performance, reliable products.

DO-254 (also written DO254; its EUROCAE equivalent is ED-80) is a formal avionics standard that provides guidance for design assurance of airborne electronic hardware. It covers a project from conception through planning, design, implementation, testing, and validation, including DO-254 tool qualification considerations.

DO-254 and DO-178 are quite similar; both received major contributions from people with formal software process expertise. Today, avionics systems consist of both hardware and software, each with a near-equal effect on airworthiness. As a result, most avionics projects now pursue DO-254 certification or compliance.

DO-178 Standard

DO-178, developed by the Radio Technical Commission for Aeronautics (RTCA), is the international de facto standard for certifying safety-critical aviation software. The first version, DO-178 (1982), covered the basic avionics software life cycle. The second version, DO-178A (1985), added details on software criticality levels and emphasized software component testing to obtain quality. DO-178B followed in 1992.

Aviation OEMs have introduced more advanced and efficient methodologies, such as model-based software development and verification and object-oriented programming, into airborne software development. The current version, DO-178C, has evolved to contain objectives and guidance for these newer technologies, including object-oriented analysis and design (OOA/OOD), model-based development (MBD), and formal methods. It also strengthens software configuration and quality through added planning, continuous quality monitoring, and verification and testing under real-world conditions.

DO-178C is the primary document by which certification authorities such as the FAA, EASA, and Transport Canada approve all commercial software-based aerospace systems. Published by RTCA, Inc. jointly with EUROCAE, it replaces DO-178B. The document, designated DO-178C/ED-12C, was completed in November 2011, approved by RTCA in December 2011, and became available for sale and use in January 2012.

A DER (Designated Engineering Representative) is an engineer appointed by the FAA who has the authority to pass judgment on aviation-related design and development. An avionics software DER may be appointed as a Company DER and/or a Consultant DER. A Company DER acts as a DER for his or her employer and may approve, or recommend approval of, technical data to the FAA only for that company.

DO-178 gap analysis evaluates your current avionics software engineering processes and artifacts against those required by DO-178. Although DO-178 was written principally for original, custom-developed avionics software, it recognizes that previously developed software can also be DO-178 certified.

In many cases, particularly for military avionics software, DO-178 compliance is pursued instead of DO-178 certification. Compliance is close to certification but does not require FAA involvement, and several of the formal DO-178 requirements are relaxed. DO-178 gap analysis is typically performed by trained DO-178 consultants or DERs. The resulting gap analysis roadmap assesses all of the software processes and artifacts and details how to close the gaps to meet DO-178 compliance or certification requirements.

Design Assurance Level (DAL)

The purpose of DO-178C is to provide guidance for developing airborne software so that it performs its intended function with a level of confidence commensurate with its airworthiness requirements.

Design assurance is the process of establishing that hardware (DO-254) and software (DO-178B) will operate in a precise and predictable manner, and the DAL sets how much rigor that process demands. Flight-safety certification is complex, detailed work that requires every software and hardware processing permutation to be evaluated for determinism, removing opportunities for unplanned outcomes.

The DO-178C standard defines Design Assurance Levels (DAL) for airborne software. The software level, also known as the DAL or, in ARP4754, the Item Development Assurance Level (IDAL), is determined from the safety assessment process and hazard analysis by examining the effects of a failure condition in the system. Failure conditions are categorized by their effects on the aircraft, crew, and passengers.

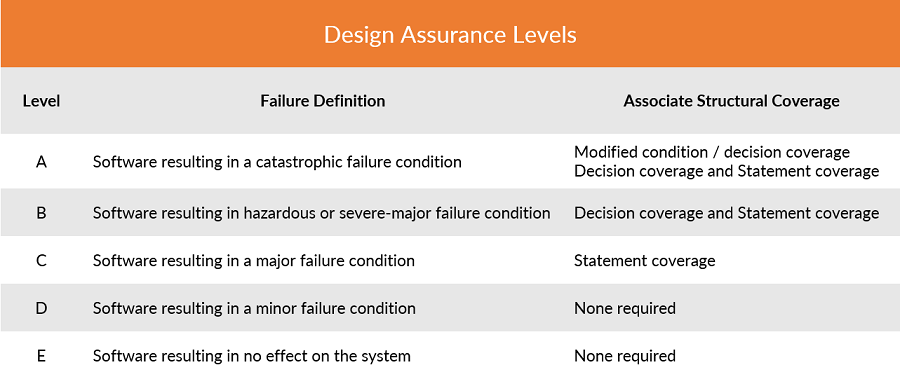

DO-254 and DO-178 both define five assurance levels (A, B, C, D, and E), which describe the impact of a potential failure on the system as a whole.

Each software component has a corresponding software level (also referred to as a Development Assurance Level, or DAL) based on the impact of that component’s anomalous behavior on the continued safe operation of the aircraft.

The five DO-178C levels correspond directly to the consequences of a potential software failure: catastrophic, hazardous/severe-major, major, minor, or no effect, respectively.

- Level A Catastrophic – Failure may cause deaths, usually with loss of the airplane.

- Level B Hazardous – Failure has a large negative impact on safety or performance or reduces the ability of the crew to operate the aircraft due to physical distress or a higher workload, or causes serious or fatal injuries among the passengers.

- Level C Major – Failure significantly reduces the safety margin or significantly increases crew workload. May result in passenger discomfort (or even minor injuries).

- Level D Minor – Failure slightly reduces the safety margin or slightly increases crew workload. Examples might include causing passengers inconvenience or a routine flight plan change.

- Level E No Effect – Failure has no impact on safety, aircraft operation, or crew workload.

As the criticality level increases, so does the degree of rigor associated with documentation, design, reviews, implementation, and verification. Accordingly, cost and schedule increase as well. DO-178 can add 30-150% to avionics software development costs.

Software objectives

DO-178C is objective-driven: companies may use a variety of means to achieve compliance, as long as they meet the objectives in question. To comply with DO-178C, companies must provide multiple supporting documents and records covering their development processes.

Safety-Critical

Safety typically refers to being free from danger, injury, or loss. In the commercial and military industries, this applies most directly to human life. Critical refers to a task that must be successfully completed to ensure that a larger, more complex operation succeeds.

Safety-critical systems demand software that has been developed using a well-defined, mature software development process focused on producing quality software. Safety-critical systems go through a rigorous development, testing, and verification process before getting certified for use. Achieving certification for safety-critical airborne software is costly and time-consuming.

Safety-critical software is typically DO-178B Level A or B. Typical safety-critical applications include military and commercial flight controls and engine controls. At these higher levels of criticality, more of the DO-178B objectives must be satisfied with independence, and testing is more rigorous. Objectives requiring independence need documentary evidence that the person verifying the item is not the person who developed the item.

Once certification is achieved, the deployed software cannot be modified without recertification. Unauthorized modification of certified software presents a significant risk to safe operation, and today’s safety-critical systems face a variety of threats, both unintentional and malicious. If the software is changed from its certified configuration, whether maliciously or unintentionally, it is no longer safe. Bottom line: a system that is not secure puts safety at risk.

Mission Critical

A mission is an operation or task assigned by a higher authority. For an RTOS, therefore, a mission-critical application implies that a failure of the operating system will prevent a task or operation from being performed, possibly preventing successful completion of the operation as a whole.

Mission-critical systems must also be developed using well-defined, mature software development processes, so they too are subject to the rigors of DO-178B. Unlike safety-critical software, however, mission-critical software is typically DO-178B Level C or D and only needs to meet these lower criticality levels. Mission-critical applications generally include navigation systems, avionics display systems, and mission command and control.

The following shows the relationship between the system life cycle processes and the software and hardware life cycle processes. The key takeaway is that safety (and, implicitly, security) requirements and responsibilities must be determined at the system level but with feedback from the other life cycle processes.

These different software level certifications also determine the rigor required in testing and other aspects of development—the most challenging of which is software verification.

DO-178 and DO-254 organize their processes into three groups: planning, development, and the integral (“correctness”) processes.

In total, DO-178C compliance involves six key processes: planning, development, verification, configuration management, quality assurance (QA), and certification liaison.

DO-178C calls for five plans and three standards, and projects typically use 20 or more checklists. Plan and checklist templates developed with DO-178C in mind cover all phases of the software project life cycle.

Software life cycle processes

DO-178C objective Table A-2 calls for the project to establish key process elements to be followed. These elements are common to any development: understanding and gaining agreement on what is to be done (requirements), deriving requirements and communicating them to stakeholders, defining the software product architecture, delineating low-level requirements, translating requirements into source code, and ensuring that the resulting executable works in the target environment.

DO-178B is divided into five major processes: software planning, software development, software verification, software configuration management, and software quality assurance.

Fig. shows the main elements of DO-178C; namely, the various software life cycle processes comprising planning, development, and several associated “integral” processes.

Plans: DO-178 planning requires five plans at every DAL from A through D. These plans must comply with DO-178 and contain specific information, and developing plans at the right level of detail is key to the success of any DO-178 project. The five DO-178 plans are:

- Plan for Software Aspects of Certification (PSAC): an overall synopsis of how your software engineering will comply with DO-178, and the roles for FAA certification and EASA certification.

- Software Quality Assurance Plan (SQAP): details how DO-178’s quality assurance objectives will be met for this project.

- Software Configuration Management Plan (SCMP): details how DO-178’s change management and baseline/storage objectives will be performed on this project. Configuration management (CM) covers the ability to control and recreate any snapshot of the project, and it also ensures security, backups, completed reviews, problem reporting, and version control.

- Software Development Plan (SDP): summarizes how software requirements, design, code, and integration will be performed in conjunction with the usage of associated tools to satisfy DO-178’s development objectives.

- Software Verification Plan (SVP): summarizes the review, test, and analysis activities, along with associated verification tools, used to satisfy DO-178’s verification objectives. DO-178 mandates that software components that could be affected by hardware be tested on the hardware, e.g. hardware/software interfaces, interrupts, timing, board-level components, and BSP/RTOS. Because all software whose operation is potentially affected by hardware must be tested on the actual target hardware, integration begins earlier and software is verified earlier. In addition, the system on which the software resides must comply with ARP4754A, which mandates similarly strong system processes, and custom hardware logic components will likely need to meet DO-254 (the hardware counterpart of DO-178C), so the hardware will be of similarly high quality at integration.

When your plans are complete and have been reviewed and signed off internally, it is time for the first step in the compliance process: the Stage of Involvement #1 (SOI #1) review with your certification authority. SOI #1 is typically a “desk” review in which the authority thoroughly reviews all plans and standards to ensure that all applicable objectives of DO-178C and any relevant supplements will be satisfied if development proceeds as documented in your plans. The review results either in a report documenting observations or findings that must be resolved, or in acceptance that your plans and standards are compliant. Once your plans are accepted, the last step should be the easiest in the project’s life cycle: follow the plan!

Standards: If the Design Assurance Level is above DAL D (that is, Level C, B, or A), the applicant must also develop three DO-178 standards:

- Software Requirements Standard: provides criteria for decomposing and assessing system requirements into software high-level requirements, and high-level into low-level requirements, including derived and safety-related requirements. DO-178C mandates thorough and detailed software requirements: assumptions are drastically minimized, the consistency and testability of requirements are greatly enhanced, and iterations and rework due to faulty or missing requirements are greatly reduced.

- Software Design Standard: provides criteria for defining and assessing the software architecture and design.

- Software Coding Standard: provides criteria for implementing and assessing the software source-code.

As with the other processes involved in proving compliance with DO-178C, planning requires associated output documentation, including the following:

● Plan for Software Aspects of Certification (PSAC)

● Software Development Plan (SDP)

● Software Verification Plan (SVP)

● Software Configuration Management Plan (SCMP)

● Software Quality Assurance Plan (SQAP)

● System requirements

● Software Requirements Standard (SRS)

● Software Design Standard (SDS)

● Software Code Standard (SCS)

Objectives

After the software criticality level has been determined, you examine DO-178 to determine exactly which objectives must be satisfied for the software. Each software level has a defined number of objectives that need to be satisfied (some with independence).

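DO-178C, released in 2012, defines objectives for ten software life cycle process areas, activities that can satisfy those objectives, and descriptions of the evidence required to show that the objectives have been satisfied. Meeting different objectives requires varying levels of effort, as some are harder than others. The objectives are listed in ten Annex A tables, one per process area:

- A-1: Software planning process

- A-2: Software development processes

- A-3: Verification of outputs of the software requirements process

- A-4: Verification of outputs of the software design process

- A-5: Verification of outputs of the software coding and integration processes

- A-6: Testing of outputs of the integration process

- A-7: Verification of verification process results

- A-8: Software configuration management process

- A-9: Software quality assurance process

- A-10: Certification liaison process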

The number of objectives to be satisfied (some with independence) is determined by the software level A-E. The phrase “with independence” refers to a separation of responsibilities where the objectivity of the verification and validation processes is ensured by virtue of their “independence” from the software development team.

For objectives that must be satisfied with independence, the person verifying the item (such as a requirement or source code) may not be the person who authored the item and this separation must be clearly documented.

| Level | Failure condition | Objectives | With independence |
|---|---|---|---|
| A | Catastrophic | 71 | 30 |
| B | Hazardous | 69 | 18 |
| C | Major | 62 | 5 |
| D | Minor | 26 | 2 |
| E | No Safety Effect | 0 | 0 |
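The independence rule described above lends itself to an automated check over verification records. The sketch below is a minimal illustration that assumes a hypothetical record schema (`VerificationRecord` with `author`, `verifier`, and `needs_independence` fields); real projects keep this evidence in their review and test records, and nothing here reflects a specific tool's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationRecord:
    """One review, test, or analysis of a life-cycle item (hypothetical schema)."""
    item_id: str              # e.g. a requirement ID or source file name
    author: str               # person who produced the item
    verifier: str             # person who verified the item
    needs_independence: bool  # does the governing objective require independence?


def independence_violations(records):
    """Return records whose objective requires independence but whose
    verifier is the same person as the author."""
    return [r for r in records if r.needs_independence and r.verifier == r.author]


# Hypothetical evidence for three items.
records = [
    VerificationRecord("HLR-001", author="alice", verifier="bob", needs_independence=True),
    VerificationRecord("LLR-017", author="carol", verifier="carol", needs_independence=True),
    VerificationRecord("LLR-018", author="carol", verifier="carol", needs_independence=False),
]

for r in independence_violations(records):
    print(f"{r.item_id}: verified by its own author ({r.author})")
```

Only `LLR-017` is flagged: `LLR-018` has the same author and verifier, but its (hypothetical) objective does not require independence.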

Development

Output documents associated with meeting DO-178C objectives in the development process include software requirements data, software design descriptions, source code, and executable object code.

Any piece of software is designed and implemented against a set of requirements, so development consists of elaborating the software requirements (both high-level and low-level) from the system requirements and perhaps teasing out additional (“derived”) requirements, specifying the software architecture, and implementing the design.

Verification (one of the integral processes) is needed at each step. For example, are the software requirements complete and consistent? Does the implementation satisfy the requirements? Per DO-178C, if requirements are not verifiable, unambiguous, consistent, and well defined, you must create a problem report and submit the issue back to the input source to be clarified and corrected.

Complementing the actual development processes are various peripheral activities. The software is not simply a static collection of code, data, and documentation; it evolves in response to detected defects, customer change requests, etc. Thus, the integral processes include Configuration Management and Quality Assurance, and well-defined procedures are critical.

Software verification

The majority of the guidance in DO-178C deals with verification: a combination of reviews, analysis, and testing to demonstrate that the output of each software life cycle process is correct with respect to its input. Of the 71 total objectives for Level A software, 43 apply to verification, and more than half of these concern the source and object code.

For this reason, DO-178C is sometimes called a “correctness” standard rather than a safety standard; the principal assurance achieved through certification is confidence that the software meets its requirements. And the emphasis of the code verification objectives is on testing.

To help ensure that your software fulfills DO-178C requirements, you must submit a verification report that demonstrates the absence of errors, not just that you have tested for and detected errors. You need to prove that all lower-level artifacts satisfy higher-level artifacts, that you have established traceability between requirements and test cases through requirements-based coverage analysis, and that you can show traceability between code structure and test cases through structural coverage analysis.

Each requirement in your software development process must be traceable not only to the code that implements it but also to the review, test or analysis through which it has been verified. You must also ensure that you can trace implemented functionality back to requirements and that testing can prove this—you need to eliminate any dead code or code that is not traceable to requirements.

Plus, you need to provide all of your derived requirements to the system safety assessment process. In a nutshell, this means that all of the source code you develop needs to be traceable, verifiable, and consistent, and it needs to correctly fulfill the low-level software requirements.

Output documentation associated with DO-178C verification includes the following:

● Software verification cases and procedures (SVCP)

● Software verification results (SVR)

● Review of all requirements, design and code

● Testing of executable object code

● Code coverage analysis

Traceability

Verification involves traceability: DO-178 requires a documented connection (called a trace) between certification artifacts. You must be able to trace the system requirements allocated to software to one or more high-level software requirements, each high-level requirement to one or more low-level requirements, and each low-level requirement back to one or more high-level requirements.

For example, each high-level requirement (HLR) must be traceable to one or more low-level requirements (LLRs) and ultimately to the source code that correctly implements it, and each piece of source code must be traceable back up to the low- and high-level requirements.

Traceability analysis is then used to ensure that each requirement is fulfilled by the source code, that each requirement is tested, that each line of source code has a purpose (is connected to a requirement), and so forth. Traceability ensures the system is complete. The rigor and detail of the certification artifacts is related to the software level.
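Much of traceability analysis is set arithmetic over trace links. The following is a minimal sketch that assumes trace data has already been exported into plain dictionaries keyed by hypothetical IDs such as `HLR-1`, `LLR-1`, and `pitch.c:limit_pitch`; it does not reflect the format of any particular requirements-management tool.

```python
def trace_gaps(hlr_to_llr, llr_to_code, tests_by_req, code_units):
    """Report gaps in bidirectional traceability.

    hlr_to_llr:   HLR id -> list of LLR ids that refine it
    llr_to_code:  LLR id -> list of code units that implement it
    tests_by_req: requirement id -> list of test case ids that verify it
    code_units:   every code unit present in the build
    """
    traced_llrs = {llr for llrs in hlr_to_llr.values() for llr in llrs}
    all_llrs = traced_llrs | set(llr_to_code)
    implemented = {unit for units in llr_to_code.values() for unit in units}
    all_reqs = set(hlr_to_llr) | all_llrs
    return {
        # Downward: each HLR must be refined by at least one LLR.
        "hlr_without_llr": sorted(h for h, llrs in hlr_to_llr.items() if not llrs),
        # Upward: each LLR must trace back to at least one HLR.
        "llr_without_hlr": sorted(all_llrs - traced_llrs),
        # Downward: each LLR must be implemented by some code.
        "llr_without_code": sorted(llr for llr in all_llrs if not llr_to_code.get(llr)),
        # Upward: code with no requirement has no purpose (a dead-code candidate).
        "code_without_llr": sorted(set(code_units) - implemented),
        # Every requirement must be verified by at least one test.
        "untested": sorted(r for r in all_reqs if not tests_by_req.get(r)),
    }


# Hypothetical trace data for a tiny project.
HLR_TO_LLR = {"HLR-1": ["LLR-1", "LLR-2"], "HLR-2": []}
LLR_TO_CODE = {"LLR-1": ["pitch.c:limit_pitch"], "LLR-2": [], "LLR-3": ["roll.c:limit_roll"]}
TESTS_BY_REQ = {"HLR-1": ["TC-10"], "LLR-1": ["TC-11"]}
CODE_UNITS = ["pitch.c:limit_pitch", "roll.c:limit_roll", "util.c:legacy_helper"]

for gap, ids in trace_gaps(HLR_TO_LLR, LLR_TO_CODE, TESTS_BY_REQ, CODE_UNITS).items():
    print(f"{gap}: {ids}")
```

With this data the check reports `HLR-2` as unrefined, `LLR-3` as orphaned, `LLR-2` as unimplemented, `util.c:legacy_helper` as untraceable code, and three requirements with no test.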

Coding Standard

DO-178C mandates a requirements-based development and verification process that incorporates bi-directional requirements traceability across the lifecycle. Source code written in accordance with coding standards will generally lend itself to such mapping.

DO-178C requires that the source code is written in accordance with a set of rules (or “coding standard”) that can be analyzed, tested, and verified for compliance. It does not specifically require a particular standard, but does require a programming language with unambiguous syntax and clear control of data with definite naming conventions and constraints on complexity. The most popular coding standards are MISRA C and MISRA C++, which now include guidelines for software security, but there are alternatives including the JSF++ AV standard, used on the F-35 Joint Strike Fighter and beyond.

The Ada language has its own coding standards such as SPARK and the Ravenscar profile, both subsets designed for safety-critical hard real-time computing. The Ada programming language is particularly applicable because it was designed to promote sound software engineering practice and it enforces checks that will prevent weaknesses such as “buffer overrun” and integer overflow. The most recent version of the language, Ada 2012, includes an especially relevant feature known as contract-based programming, which effectively embeds the low-level software requirements in the source code in which they can be verified either statically (with appropriate tool support) or at run time (with compiler-generated checks).
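Python has no equivalent of Ada 2012 `Pre` and `Post` aspects, but the idea of embedding a low-level requirement in the source as a run-time-checked contract can be sketched with a decorator. The `contract` decorator, `ContractError`, and the `saturate` requirement below are hypothetical illustrations; they approximate only the run-time-check side of Ada contracts and say nothing about static proof with SPARK.

```python
import functools


class ContractError(AssertionError):
    """Raised on a contract violation (loosely mirrors Ada's Assertion_Error)."""


def contract(pre=None, post=None):
    """Hypothetical decorator: `pre` receives the positional arguments,
    `post` receives the result followed by the same arguments."""
    def wrap(fn):
        @functools.wraps(fn)
        def checked(*args):
            if pre is not None and not pre(*args):
                raise ContractError(f"precondition of {fn.__name__} failed for {args}")
            result = fn(*args)
            if post is not None and not post(result, *args):
                raise ContractError(f"postcondition of {fn.__name__} failed for {args}")
            return result
        return checked
    return wrap


# Hypothetical LLR: given lo <= hi, return v limited to the range [lo, hi].
@contract(pre=lambda v, lo, hi: lo <= hi,
          post=lambda r, v, lo, hi: lo <= r <= hi)
def saturate(v, lo, hi):
    return min(max(v, lo), hi)


print(saturate(30, -15, 25))
```

Calling `saturate(0, 5, 1)` violates the precondition and raises `ContractError` before the body runs, which is the run-time analogue of a compiler-generated `Pre` check.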

Software testing

DO-178C software testing involves three levels, described in Section 6.4 of the standard: low-level testing, software integration testing, and hardware/software integration testing. DO-178B/C assures the robustness and reliability sought during the development and testing of airborne software.

But testing has a well-known intrinsic drawback; as quipped by the late computer scientist Edsger Dijkstra, it can show the presence of bugs but never their absence. DO-178C mitigates this issue in several ways:

• Instead of “white box” or unit testing, DO-178C mandates requirements-based testing. Each requirement must have associated tests, exercising both normal processing and error handling, to demonstrate that the requirement is met and that invalid inputs are properly handled. The testing is focused on what the system is supposed to do, not on the overall functionality of each module.

• Testing is augmented by inspections and analyses to increase the likelihood of detecting errors early.
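The following minimal sketch shows requirements-based normal-range and robustness test cases for a single low-level requirement. The requirement ID `LLR-042`, the pitch limits, and the fault-flag behavior are all hypothetical, chosen only to show each test case tracing to a requirement and covering both valid and invalid inputs.

```python
import math

PITCH_MIN_DEG = -15.0  # hypothetical limits taken from hypothetical LLR-042
PITCH_MAX_DEG = 25.0


def limit_pitch_command(cmd_deg):
    """LLR-042 (hypothetical): clamp the commanded pitch to
    [PITCH_MIN_DEG, PITCH_MAX_DEG]; a non-finite input yields (0.0, True),
    where the second element is a fault flag."""
    if not math.isfinite(cmd_deg):
        return 0.0, True
    return min(max(cmd_deg, PITCH_MIN_DEG), PITCH_MAX_DEG), False


# Each test case traces to the requirement it verifies.
TEST_CASES = [
    # (test id, requirement, input, expected output)
    ("TC-042-01", "LLR-042", 10.0, (10.0, False)),        # normal range
    ("TC-042-02", "LLR-042", 25.0, (25.0, False)),        # upper boundary
    ("TC-042-03", "LLR-042", 40.0, (25.0, False)),        # above range
    ("TC-042-04", "LLR-042", -90.0, (-15.0, False)),      # below range
    ("TC-042-05", "LLR-042", float("nan"), (0.0, True)),  # robustness: invalid input
    ("TC-042-06", "LLR-042", float("inf"), (0.0, True)),  # robustness: invalid input
]


def run_tests():
    """Return a list of failing cases; an empty list means all passed."""
    failures = []
    for test_id, req, given, expected in TEST_CASES:
        actual = limit_pitch_command(given)
        if actual != expected:
            failures.append((test_id, req, given, actual, expected))
    return failures


print(run_tests())  # prints [] when every case passes
```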

Static and Dynamic Analysis

Static analysis examines the code without executing it in order to find possible defects. Dynamic analysis involves executing the code and analyzing the output.

The static analysis of source code may be thought of as an “automated inspection”, as opposed to the dynamic test of an executable binary derived from that source code. The use of a static analysis tool will ensure adherence of source code to the specified coding standard and hence meet that DO-178 objective. It will also provide the means to show that other objectives have been met by analyzing things like the complexity of the code, and deploying data flow analysis to detect any uninitialized or unused variables and constants.

Static analysis also establishes an understanding of the structure of the code and the data, which is not only useful information in itself but also an essential foundation for the dynamic analysis of the software system, and its components.
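As a toy illustration of the data flow analysis mentioned above, Python's standard `ast` module can flag local variables that are assigned but never read. This is a deliberately simplified sketch that assumes Python source as input; qualified static analysis tools for C, C++, or Ada perform far more thorough flow analysis, including detection of uninitialized variables, which this sketch does not attempt.

```python
import ast


def unused_locals(source):
    """Report, per function, names that are assigned but never read.

    Simplifications: names in nested functions are pooled with the
    enclosing function's, `global`/`nonlocal` are ignored, and attribute
    or subscript targets are not tracked."""
    findings = {}
    for func in ast.walk(ast.parse(source)):
        if not isinstance(func, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        stored, loaded = set(), set()
        for node in ast.walk(func):
            if isinstance(node, ast.Name):
                if isinstance(node.ctx, ast.Store):
                    stored.add(node.id)
                elif isinstance(node.ctx, ast.Load):
                    loaded.add(node.id)
        unused = sorted(stored - loaded)
        if unused:
            findings[func.name] = unused
    return findings


SAMPLE = '''
def compute_gain(airspeed):
    scale = 0.5
    offset = 3.0          # assigned but never read
    return airspeed * scale
'''

print(unused_locals(SAMPLE))
```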

In accordance with DO-178C, the primary thrust of dynamic analysis is to prove the correctness of code relating to functional safety (“functional tests”) and to therefore show that the functional safety requirements have been met.

Given that compromised security can have safety implications, functional testing will also demonstrate robust security, perhaps by means of simulated attempts to access control of a device, or by feeding it with incorrect data that would change its mission. Functional testing also provides evidence of robustness in the event of the unexpected, such as illegal inputs and anomalous conditions.

DO-178 dead code

DO-178 dead code is executable (binary) code that will never be executed during runtime operations. Dead code has no requirements! DO-178B generally does not allow dead code to be present: it must be removed.

Structural coverage analysis (SCA) also helps reveal “dead” code that cannot be executed regardless of the inputs provided by test cases. Such code may exist for many reasons, such as errors in the algorithm or remnants of a change of approach by the coder, but none is justified from the perspective of DO-178C, so it must be addressed. Inadvertent execution of untested code poses significant risk.

The source code must be completely covered by the requirements-based tests. “Dead code” (code that is not executed by tests and does not correspond to a requirement) is not permitted: it does not trace to any software requirement and hence performs no required functionality. Note that unreferenced variables, and functions that are never called, are usually removed by the compiler or linker. Because they are not present in the binary executable load image, they are not dead code per DO-178.

What is DO-178 deactivated code?

DO-178 deactivated code is executable (binary) code that will not be executed during runtime operations of a particular software version within a particular avionics box; however, it may be executed during ground maintenance or special operations, or within a different or future version of the software in a different configuration or avionics box. Unlike dead code, deactivated code may be left in the source baseline, but it must be handled according to DO-178’s specific provisions for deactivated code.

Structural Coverage Analysis (SCA)

Structural Coverage Analysis (SCA) is another key DO-178C objective, and involves the collation of evidence to show which parts of the code base have been exercised during test. The verification of requirements through dynamic analysis provides a basis for the initial stages of structural coverage analysis. Once functional test cases have been executed and passed, the resulting code coverage can be reviewed, revealing which code structures and interfaces have not been exercised during requirements based testing. If coverage is incomplete, additional requirements-based tests can be added to completely exercise the software structure. Additional low-level requirements-based tests are often added to supplement functional tests, filling in gaps in structural coverage whilst verifying software component behavior.

DO-178C defines specific verification objectives, including requirements-based testing, robustness testing, and coverage testing, depending on the software level for which you are complying. At Level E, DO-178C requirements don’t apply. Level D requires 100 percent requirements coverage. Level C requires that companies meet the Level D requirements plus 100 percent statement (line) coverage. Level B requires the Level C requirements plus 100 percent decision coverage. Level A requires all Level B requirements plus 100 percent modified condition/decision coverage (MC/DC). Each type of coverage is defined in the standard. For example, statement coverage means that every statement in the program has been invoked at least once, while decision coverage means that every point of entry and exit in the program has been invoked at least once and every decision in the program has taken on all possible outcomes at least once.
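The difference between statement and decision coverage shows up clearly on a small, hand-instrumented function. In this sketch, the function `warn_gear_up`, its 160-knot threshold, and the statement and decision IDs are hypothetical; a real project would use a coverage tool rather than manual instrumentation.

```python
ALL_STATEMENTS = {"S1", "S2", "S3"}
ALL_OUTCOMES = {"D1=True", "D1=False"}


def warn_gear_up(airspeed_kt, gear_down, log):
    """Hypothetical function, hand-instrumented: `log` collects the IDs of
    executed statements (S1 marks the straight-line entry code) and the
    observed outcomes of decision D1."""
    log.add("S1")
    warning = False
    outcome = airspeed_kt < 160 and not gear_down  # decision D1
    log.add(f"D1={outcome}")
    if outcome:
        log.add("S2")
        warning = True
    log.add("S3")
    return warning


def coverage(test_inputs):
    """Run every test and return (statement coverage, decision coverage)."""
    log = set()
    for args in test_inputs:
        warn_gear_up(*args, log)
    statement = len(log & ALL_STATEMENTS) / len(ALL_STATEMENTS)
    decision = len(log & ALL_OUTCOMES) / len(ALL_OUTCOMES)
    return statement, decision


print(coverage([(140, False)]))                # (1.0, 0.5)
print(coverage([(140, False), (200, False)]))  # (1.0, 1.0)
```

A single test that takes the `if` branch executes every statement yet exercises only one of the two decision outcomes; a second test in which the decision is False is needed to reach decision coverage.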

MC/DC?

The official definition of MC/DC (modified condition/decision coverage) is: every point of entry and exit in the program has been invoked at least once, every condition in a decision in the program has taken on all possible outcomes at least once, every decision in the program has taken on all possible outcomes at least once, and each condition in a decision has been shown to independently affect that decision’s outcome. A condition is shown to independently affect a decision’s outcome by varying just that condition while holding all other possible conditions fixed. The key to successful and accurate MC/DC testing is to analyze each source code construct for potential MC/DC applicability and then develop sufficient test cases to ensure that each condition in that construct is independently verified per this definition. MC/DC analysis is primarily performed with the assistance of DO-178 qualified structural coverage analysis tools.

MC/DC testing will detect certain kinds of logic errors that would not necessarily show up in decision coverage but without the exponential explosion of tests if all possible combinations of True and False were needed in a multiple-condition decision. It also has the benefit of forcing the developer to formulate explicit low-level requirements that will exercise the various conditions.

• Code coverage objectives apply at the statement level for DAL C. At higher DALs, a finer granularity is required, with coverage also needed at the level of complete Boolean expressions (“decisions”) and their atomic constituents (“conditions”). DAL B requires decision coverage (tests need to exercise both True and False results).

DAL A requires modified condition/decision coverage. The set of tests for a given decision must satisfy the following:

o In some test, the decision comes out True, and in some other test, it comes out False;

o For each condition in the decision, the condition is True in some test and False in another; and

o Each condition needs to independently affect the decision’s outcome. That is, for each condition, there must be two tests where:

> the condition is True in one and False in the other,

> the other conditions have the same values in both tests, and

> the result of the decision in the two tests is different.
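The MC/DC rules listed above can be checked mechanically: for each condition, look for two tests that differ only in that condition and produce different decision outcomes (the "unique-cause" form of MC/DC). The sketch below assumes the decision is supplied as a Python function of Boolean conditions and each test as a tuple of Booleans; `example_decision` and the test set are hypothetical, and this is an illustration, not a qualified coverage tool.

```python
from itertools import combinations


def mcdc_pairs(decision, tests):
    """For each condition index, return one pair of tests showing that the
    condition independently affects the decision, or None if none exists."""
    if not tests:
        return {}
    n = len(tests[0])
    pairs = {}
    for i in range(n):
        pairs[i] = None
        for t1, t2 in combinations(tests, 2):
            # Condition i differs; every other condition is held fixed.
            only_i_differs = all((t1[j] == t2[j]) != (j == i) for j in range(n))
            if only_i_differs and decision(*t1) != decision(*t2):
                pairs[i] = (t1, t2)
                break
    return pairs


def satisfies_mcdc(decision, tests):
    """True if every condition has an independence pair in `tests`."""
    pairs = mcdc_pairs(decision, tests)
    return bool(pairs) and all(p is not None for p in pairs.values())


def example_decision(a, b, c):
    """Hypothetical decision with three conditions."""
    return a and (b or c)


TESTS = [
    (True, True, False),
    (False, True, False),
    (True, False, False),
    (True, False, True),
]

for cond, pair in mcdc_pairs(example_decision, TESTS).items():
    print(f"condition {'abc'[cond]}: {pair}")
print("MC/DC satisfied:", satisfies_mcdc(example_decision, TESTS))
```

For `a and (b or c)`, these four tests satisfy MC/DC, whereas exhaustive testing would need 2³ = 8; in general, unique-cause MC/DC needs at least n + 1 tests for n conditions. Dropping the last test leaves `c` without an independence pair.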

Configuration management

DO-178 requires configuration management of all software lifecycle artifacts including requirements, design, code, tests, documentation, etc. To support compliance with DO-178C elements surrounding configuration management, companies are required to do the following:

● Uniquely identify each configuration item

● Protect baselines of configuration items from change

● Trace a configuration item to the configuration item from which it was derived (lineage and history)

● Trace baselines to the baselines from which they were derived

● Reproduce builds (replicate executable object code)

● Provide evidence of change approvals

● Produce output documentation for a software configuration index (SCI) and a software life-cycle environment configuration index (SECI).
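A minimal sketch of how several of these objectives (unique identification, protected baselines, lineage, and a record of what changed between baselines) might be modeled, assuming content hashes serve as configuration item identifiers. `Baseline`, `make_baseline`, the file names and the baseline names are hypothetical; DO-178C does not prescribe this data model, and a real project would rely on its configuration management tool.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)  # frozen: a recorded baseline cannot be altered in place
class Baseline:
    name: str
    items: Tuple[Tuple[str, str], ...]  # (configuration item path, SHA-256 of content)
    parent: Optional[str] = None        # baseline this one was derived from


def item_id(content: bytes) -> str:
    """Identify a configuration item by the SHA-256 digest of its content."""
    return hashlib.sha256(content).hexdigest()


def make_baseline(name: str, files: Dict[str, bytes], parent: Optional[str] = None) -> Baseline:
    items = tuple(sorted((path, item_id(data)) for path, data in files.items()))
    return Baseline(name, items, parent)


def lineage(name: str, registry: Dict[str, Baseline]) -> List[str]:
    """Trace a baseline back through the baselines it was derived from."""
    chain: List[str] = []
    current: Optional[str] = name
    while current is not None:
        chain.append(current)
        current = registry[current].parent
    return chain


def changed_items(old: Baseline, new: Baseline) -> List[str]:
    """Items whose identity differs between two baselines (candidates for change approval)."""
    before, after = dict(old.items), dict(new.items)
    return sorted(p for p in before.keys() | after.keys() if before.get(p) != after.get(p))


# Hypothetical baselines for illustration.
bl_10 = make_baseline("BL-1.0", {"src/main.c": b"int main(void) { return 0; }",
                                 "reqs/HLR.txt": b"HLR-1: display altitude"})
bl_11 = make_baseline("BL-1.1", {"src/main.c": b"int main(void) { return 1; }",
                                 "reqs/HLR.txt": b"HLR-1: display altitude"}, parent="BL-1.0")
registry = {b.name: b for b in (bl_10, bl_11)}

print(lineage("BL-1.1", registry))  # ['BL-1.1', 'BL-1.0']
print(changed_items(bl_10, bl_11))  # ['src/main.c']
```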

DO-178C also requires that companies implement a problem reporting system to document any change to the formal design baseline.

DO-178 does not, however, require specific tools, not even for configuration management. Avionics configuration management can therefore be performed manually, even via a purely paper-based system. Nevertheless, virtually all avionics and DO-178 software projects would be better served by a configuration management tool.

DO-178’s strong CM requirements, coupled with modern tools that automate many CM tasks, help ensure higher software quality now and in the future. Any released version of DO-178C compliant software must be reproducible and retestable in its entirety for thirty years after delivery. That means not only the application source code must be controlled and captured, but also all the ancillary files, including makefiles, build scripts, third-party tools, development environments, and design and test data. This capture eliminates many software maintenance problems that are common in other industries.
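As an illustration of what recreating a release can mean in practice, the sketch below checks a rebuilt release against an archived record: it compares the captured build environment item by item, then compares the rebuilt executable with the archived one by SHA-256. It assumes the toolchain is deterministic enough to produce bit-identical output, which is not guaranteed for every compiler; `verify_rebuild`, the tool names and the version strings are hypothetical.

```python
import hashlib
from typing import Dict, List


def verify_rebuild(archived_env: Dict[str, str], current_env: Dict[str, str],
                   archived_sha256: str, rebuilt: bytes) -> List[str]:
    """Compare a recreated build with what was archived for a released version.

    Environments map each captured item (compiler, linker, build script, etc.)
    to a version string. The rebuilt executable is compared by SHA-256.
    Returns the discrepancies found; an empty list means the rebuild matched.
    """
    problems = []
    for tool in sorted(archived_env.keys() | current_env.keys()):
        want, have = archived_env.get(tool), current_env.get(tool)
        if want != have:
            problems.append(f"{tool}: archived {want!r}, found {have!r}")
    if hashlib.sha256(rebuilt).hexdigest() != archived_sha256:
        problems.append("executable object code differs from the archived build")
    return problems


# Hypothetical archive record for a released version.
archived_env = {"compiler": "9.4.0", "linker": "2.38", "build.sh": "rev 17"}
released_exe = b"placeholder executable image"
archived_sha = hashlib.sha256(released_exe).hexdigest()

print(verify_rebuild(archived_env, archived_env, archived_sha, released_exe))
print(verify_rebuild(archived_env, {**archived_env, "compiler": "10.2.1"},
                     archived_sha, released_exe))
```

The first call reports no discrepancies; the second flags the changed compiler version even though the executable itself still matches.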

Quality assurance

The QA process in DO-178C requires reviews and audits to demonstrate compliance. Key output documents in this process include software quality assurance records (SQARs), a software conformity review (SCR) and a software accomplishment summary (SAS).

Automated tools

Developing DO-178B/C compliant software requires considerable experience and expertise with a range of design, testing and verification tools and methods.

Automated measurement and reporting tools can help fulfill DO-178C requirements by enabling you to do the following:

● Gain access to data in multiple tools across the development workflow to avoid slow, costly and error-prone manual data collection

● Automatically generate reports and dashboards to help ensure that you generate consistent evidence of compliance and provide stakeholders with the correct information in a timely manner
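As an example of automated reporting, the sketch below turns per-file coverage figures into report lines, checking each file against the structural coverage objectives for the software's DAL (statement coverage at DAL C, adding decision coverage at DAL B and MC/DC at DAL A; DAL D has no structural coverage objectives). The `measured` dictionary stands in for data exported from coverage tools; its format and the file names are hypothetical.

```python
from typing import Dict, List

# Structural coverage objectives per DAL: statement at C; statement and decision
# at B; statement, decision and MC/DC at A; none at D.
REQUIRED_COVERAGE: Dict[str, List[str]] = {
    "A": ["statement", "decision", "mcdc"],
    "B": ["statement", "decision"],
    "C": ["statement"],
    "D": [],
}


def coverage_report(dal: str, measured: Dict[str, Dict[str, float]]) -> List[str]:
    """Report each required metric per file; anything below 100% is flagged for analysis."""
    lines = []
    for file in sorted(measured):
        for metric in REQUIRED_COVERAGE[dal]:
            value = measured[file].get(metric)
            if value is None:
                lines.append(f"{file}: {metric} coverage MISSING")
            elif value < 100.0:
                lines.append(f"{file}: {metric} coverage {value:.1f}% (gap to analyze)")
            else:
                lines.append(f"{file}: {metric} coverage {value:.1f}% OK")
    return lines


# Hypothetical data collected from coverage tools.
measured = {
    "nav.c": {"statement": 100.0, "decision": 96.5},
    "io.c": {"statement": 100.0, "decision": 100.0},
}
for line in coverage_report("B", measured):
    print(line)
```

Flagging a gap does not by itself mean non-compliance; it marks code whose missing coverage still has to be explained, for example by additional requirements-based tests or by justifying deactivated code.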

Tool Qualification

Software development requires many tools including design tools, code generation tools, compilers/linkers, libraries, test tools, and structural coverage tools. Tools have a range of uses during the software life cycle. Some assist verification, such as checking that the source code complies with a coding standard, computing maximum stack usage, or detecting references to uninitialized variables. Others affect the executable code, such as a code generator for a model-based design. Tools can save significant labor, but we need to have confidence that their output is correct; otherwise, we would have to verify the output manually.

With DO-178C, we gain such confidence through a process called Tool Qualification, which is essentially a demonstration that the tool meets its operational requirements. DO-178 tool qualification applies to both development and verification tools. Different qualification criteria apply to each, and most tools do NOT need to be qualified. When qualification is required, DO-178 tool qualification follows a subset of the DO-178 guidance.

The required level of effort, called the tool qualification level (TQL), depends on both the DAL of the software that the tool will be processing and which of several “what-if” scenarios could arise in the presence of a tool defect.

A major product of the DO-178C effort is a new document, Software Tool Qualification Considerations (DO-330/ED-215). This is not strictly a supplement, since it is domain-independent and can be used in conjunction with other high-integrity standards besides DO-178C. The DO-178B concepts of verification tool and development tool have been replaced by three tool qualification criteria:

| DO-178B concept | DO-178C / DO-330 criterion | Description |
|---|---|---|
| Development tool | Criterion 1 | Tool output is part of the airborne software, so the tool could insert an error |
| Verification tool | Criterion 2 | Tool could fail to detect an error and is used to reduce other development or verification activities |
| Verification tool | Criterion 3 | Tool could fail to detect an error |

Criterion 2 is new and corresponds to a situation such as using a static analysis tool for source code review (for example to demonstrate the absence of recursion) and then using those results to justify the removal of stack checks in the compiled code.
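The Criterion 2 scenario can be illustrated with a minimal sketch: given a call graph, check for direct or indirect recursion and, only if there is none, compute a worst-case stack bound as the sum of frame sizes along the deepest call path. The call graph, function names and frame sizes are hypothetical; a real analysis tool derives them from the compiled code and must also account for indirect calls (such as through function pointers) and interrupts, which this sketch ignores.

```python
from typing import Dict, List, Optional

CallGraph = Dict[str, List[str]]  # function -> functions it calls directly


def find_recursion(graph: CallGraph) -> Optional[List[str]]:
    """Return one call cycle (direct or indirect recursion) if any exists, else None."""
    state: Dict[str, str] = {}  # absent = unvisited, "active" = on current path, "done"
    path: List[str] = []

    def visit(f: str) -> Optional[List[str]]:
        state[f] = "active"
        path.append(f)
        for callee in graph.get(f, []):
            if state.get(callee) == "active":  # back edge closes a cycle
                return path[path.index(callee):] + [callee]
            if callee not in state:
                cycle = visit(callee)
                if cycle:
                    return cycle
        path.pop()
        state[f] = "done"
        return None

    for f in graph:
        if f not in state:
            cycle = visit(f)
            if cycle:
                return cycle
    return None


def max_stack(graph: CallGraph, frame: Dict[str, int], root: str) -> int:
    """Worst-case stack usage from root; only meaningful if find_recursion() is None."""
    memo: Dict[str, int] = {}

    def depth(f: str) -> int:
        if f not in memo:
            memo[f] = frame[f] + max((depth(c) for c in graph.get(f, [])), default=0)
        return memo[f]

    return depth(root)


# Hypothetical call graph and per-function stack frame sizes (bytes).
graph = {
    "main": ["read_sensors", "control_law"],
    "read_sensors": ["adc_read"],
    "control_law": ["filter", "limit"],
}
frame = {"main": 64, "read_sensors": 32, "adc_read": 24,
         "control_law": 48, "filter": 96, "limit": 16}

print(find_recursion(graph))            # None
print(max_stack(graph, frame, "main"))  # 64 + 48 + 96 = 208
print(find_recursion({**graph, "filter": ["control_law"]}))  # ['control_law', 'filter', 'control_law']
```

If a tool's "no recursion" result were then used to justify removing run-time stack checks, the tool would fall under Criterion 2 rather than Criterion 3.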

DO-178C defines five Tool Qualification Levels (TQLs) based on the software level and the tool criterion:

Tool Qualification Level Determination

| Software level | Criterion 1 | Criterion 2 | Criterion 3 |
|---|---|---|---|
| A | TQL-1 | TQL-4 | TQL-5 |
| B | TQL-2 | TQL-4 | TQL-5 |
| C | TQL-3 | TQL-5 | TQL-5 |
| D | TQL-4 | TQL-5 | TQL-5 |
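Encoding the TQL determination table as a lookup makes its two inputs explicit. In this sketch, `tool_criterion` is a simplified, hypothetical reading of the three tool qualification criteria (in practice, whether a tool needs qualification at all also depends on whether its output is independently verified), and `tool_qualification_level` returns the TQL from the table.

```python
from typing import Optional

# Software level -> TQL for criteria 1, 2 and 3.
TQL_TABLE = {
    "A": (1, 4, 5),
    "B": (2, 4, 5),
    "C": (3, 5, 5),
    "D": (4, 5, 5),
}


def tool_criterion(output_in_airborne_sw: bool, could_miss_error: bool,
                   reduces_other_activities: bool) -> Optional[int]:
    """Simplified classification; None means none of the three criteria applies."""
    if output_in_airborne_sw:
        return 1  # tool could insert an error
    if could_miss_error and reduces_other_activities:
        return 2  # could fail to detect an error and is used to reduce other activities
    if could_miss_error:
        return 3  # could fail to detect an error
    return None


def tool_qualification_level(software_level: str, criterion: int) -> str:
    level = software_level.upper()
    if level not in TQL_TABLE:
        raise ValueError(f"no TQL defined for software level {software_level!r}")
    if criterion not in (1, 2, 3):
        raise ValueError("criterion must be 1, 2 or 3")
    return f"TQL-{TQL_TABLE[level][criterion - 1]}"


# Static analyzer whose "no recursion" result justifies removing stack checks (Level A).
print(tool_qualification_level("A", tool_criterion(False, True, True)))   # TQL-4
# Code generator whose output becomes part of Level B airborne software.
print(tool_qualification_level("B", tool_criterion(True, False, False)))  # TQL-2
```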

DO-330 defines the specific guidance for each TQL, using the same structure as DO-178C. The tool user must provide qualification artifacts, including material supplied by the tool developer (in particular the Tool Operational Requirements, which documents the operational environment, expected functional behavior, and other aspects). The qualification activities for TQL-1 through TQL-4 are roughly equivalent to the certification activities for Levels A through D, respectively. TQL-5 corresponds to a verification tool under DO-178B.

Technology Supplements

Since the publication of DO-178B in 1992, a number of software engineering methodologies have matured and offer benefits (and also raise issues) for developers of airborne systems. A major part of the DO-178C effort was devoted to analyzing the issues surrounding three specific technologies and preparing supplements that adapt and extend the core DO-178C guidance as appropriate.

One of the significant changes from DO-178B to DO-178C is the addition of four documents that may be used in conjunction with DO-178C: the model-based development and verification supplement (DO-331), the object-oriented technology and related techniques supplement (DO-332), the formal methods supplement (DO-333), and software tool qualification considerations (DO-330).

A particular focus in these supplements is on the verification process.

- Model-Based Development and Verification (RTCA DO-331 / EUROCAE ED-218)

In model-based development, a system’s requirements, design architecture, and/or behavior are specified with a precise (and typically graphical) modeling language, processed by tools that automatically generate code and in some instances also test cases. Using a qualified modeling tool offers a number of advantages, including a shortened/simplified life cycle and a precise (and understandable) specification of requirements. However, model-based development also introduces a number of challenges, such as the merging of system and software life cycle processes, more complicated traceability, and the question of what coverage analysis means in the context of model-based development. The guidance in DO-331 addresses these issues.

Model-based development (MBD), supported by environments such as Sparx, works at higher levels of abstraction than source code, providing one means of coping with the enormous growth of software in airborne systems and equipment. Research indicates that early-stage prototyping of software requirements using an executable model effectively roots out defects at the requirements and design levels, a major cost-saving step. With MBD, it is also possible to automatically generate source code from the executable model. The key to verification remains traceability from high-level requirements to model-based requirements; because DO-178C treats the models themselves as requirements, that traceability becomes largely self-evident.

With MBD, however, the challenges posed by auto-generated and manually inserted code are more significant. MBD tools might insert code that can be rationalized only in the context of the model’s implementation, not the functional requirements. Still, whether code is written from textual requirements or design models, or is auto-generated by a tool, established coding-standard practices still apply, and verification of the executable object code is still performed primarily by testing.

- Object-Oriented Technology and Related Techniques (RTCA DO-332 / EUROCAE ED-217)

Object Orientation – a design approach in which a system’s architecture is based on the kinds of entities that the system deals with, and their relationships – has been a mainstay in software development for several decades since it can ease the task of extending a system when new requirements need to be met. However, the programming features that give Object Oriented Technology (OOT) its expressive power – inheritance, polymorphism, and dynamic binding – raise a number of significant technical issues. In brief, the dynamic flexibility provided by OOT is in conflict with the DO-178B and DO-178C requirements to statically demonstrate critical program properties; the guidance in DO-332 addresses this issue and others. A noteworthy feature of DO-332 is its introduction of a new verification objective for type substitutability, reflecting the advantages of using inheritance solely for type specialization.

The Object-Oriented Technology (OOT) “leg” of DO-178C focuses on languages such as C++, Java, and Ada 2005. For example, in object orientation, “subtyping” is the ability to define a new type (a subtype) whose objects can be used wherever objects of its parent type are expected. Through DO-332, DO-178C addresses subtype verification for the safe use of OOT, which DO-178B did not cover, and requires verification of local type consistency as well as verification that dynamic memory management is used correctly.

- Formal Methods (RTCA DO-333 / EUROCAE ED-216)

With the advances in proof technology and hardware speed, the use of formal (i.e., mathematically based) methods in software verification has moved from the research labs into more widespread practice, especially at the higher levels of safety and security criticality. Formal methods can be used to prove program properties ranging from a demonstration that run-time errors will not occur, to proof that a module’s implementation complies with its formally specified requirements.

Formal methods were identified in DO-178B as an alternative means of compliance, but there was no common understanding of how they would fit in with the other objectives. DO-333 addresses this issue and indicates how formal methods can complement or replace other verification activities, most notably certain types of requirements-based testing.

Consider formal methods for high-assurance applications. Static analysis tools based on formal methods can prove program properties (including safety and security properties) ranging from absence of run-time errors to compliance with formally specified requirements. Use of such tools provides mathematics-based assurance while also eliminating the need for some of the low-level requirements-based tests.

Design Verification Testing (DVT) performed at the class level demonstrates that all member functions conform to the class contract with respect to preconditions, postconditions, and invariants of the class state. Because a subclass must honor the contract of every superclass for which it can be substituted, a class and its methods need to pass all tests for any such superclass. As an alternative to DVT, developers can use formal methods in conformance with the formal methods supplement.
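One common way to apply the superclass-test rule in a test framework is to let the subclass's test case inherit the superclass's test case, so every superclass test automatically runs against the subclass. The sketch below uses Python's standard `unittest` module; the `Limiter` classes and their contract (output always within `[lo, hi]`) are hypothetical. Run it with `python -m unittest` on the file that contains it.

```python
import unittest


class Limiter:
    """Hypothetical class. Contract: apply() always returns a value in [lo, hi]."""

    def __init__(self, lo: float, hi: float):
        if lo > hi:  # precondition
            raise ValueError("lo must not exceed hi")
        self.lo, self.hi = lo, hi

    def apply(self, x: float) -> float:
        return min(max(x, self.lo), self.hi)


class RateLimitedLimiter(Limiter):
    """Subclass that also bounds the change between successive outputs."""

    def __init__(self, lo: float, hi: float, max_step: float):
        super().__init__(lo, hi)
        self.max_step = max_step
        self.last = lo

    def apply(self, x: float) -> float:
        target = super().apply(x)  # stays within [lo, hi]
        step = min(max(target - self.last, -self.max_step), self.max_step)
        self.last += step          # moves toward target, so remains within [lo, hi]
        return self.last


class LimiterContractTests(unittest.TestCase):
    """Superclass tests: the contract every substitutable Limiter must honor."""

    def make(self) -> Limiter:
        return Limiter(-10.0, 10.0)

    def test_output_within_bounds(self):
        obj = self.make()
        for x in (-100.0, -10.0, 0.0, 3.5, 10.0, 100.0):
            self.assertTrue(obj.lo <= obj.apply(x) <= obj.hi)


class RateLimitedLimiterTests(LimiterContractTests):
    """Inherits every superclass test, so the subclass must pass them as well."""

    def make(self) -> Limiter:
        return RateLimitedLimiter(-10.0, 10.0, max_step=2.0)

    def test_step_is_bounded(self):
        obj = self.make()
        previous = obj.last
        for x in (10.0, 10.0, -10.0):
            y = obj.apply(x)
            self.assertLessEqual(abs(y - previous), 2.0)
            previous = y
```

Because `RateLimitedLimiterTests` overrides only the factory method `make`, adding a new contract test to the superclass test case automatically extends the subclass's test obligations.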

Cost factors

DO-178C has become the international and de facto standard for certifying all aviation safety-critical software. The need to comply with DO-178C can add significant cost to programs under development at a time when cost is becoming an increasingly critical factor in complex product development. Projects that need to comply with DO-178C standards could see cost increases anywhere from 25 percent to 40 percent compared to projects that don’t require compliance.

The sources of additional costs may include the following:

● Reduced developer productivity due to increases in process complexity

● Manual reporting and documentation processes that are not suited to the level of detail required to comply with DO-178C

● Qualification activities involved in compliance

What are the DO-178 certification risks?

- Incomplete or overly generic content in the five key DO-178 plans before the corresponding life-cycle processes begin

- Missing design/low-level software requirements

- Insufficient checklists for reviews

- Incorrect or incomplete traceability between components

- Incomplete structural coverage (decision coverage and MC/DC)

- Missing or improper tool qualification
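Traceability gaps in particular lend themselves to automated checks. The sketch below takes hypothetical requirement and test identifiers plus a list of requirement-to-test trace links, and reports requirements with no test, tests with no requirement, and links that point to unknown items. It covers only one level of the trace chain (requirements to tests); DO-178C traceability also spans high-level requirements, low-level requirements and source code.

```python
from typing import Dict, Iterable, List, Set, Tuple


def trace_gaps(requirements: Set[str], tests: Set[str],
               links: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Bidirectional requirement/test trace check over (requirement, test) links."""
    link_list = list(links)
    # Only links whose both ends exist count toward coverage of either side.
    valid = [(r, t) for r, t in link_list if r in requirements and t in tests]
    traced_reqs = {r for r, _ in valid}
    traced_tests = {t for _, t in valid}
    return {
        "untested_requirements": sorted(requirements - traced_reqs),
        "orphan_tests": sorted(tests - traced_tests),
        "dangling_links": sorted(f"{r} -> {t}" for r, t in link_list
                                 if r not in requirements or t not in tests),
    }


# Hypothetical identifiers and trace links.
requirements = {"HLR-1", "HLR-2", "LLR-7"}
tests = {"TC-1", "TC-2", "TC-9"}
links = [("HLR-1", "TC-1"), ("LLR-7", "TC-2"), ("HLR-3", "TC-2")]

print(trace_gaps(requirements, tests, links))
```

Here `HLR-2` has no test, `TC-9` traces to no requirement, and the link from `HLR-3` is dangling because that requirement is not in the requirement set.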

Lessons learned

In summary, the guidance in DO-178C serves mainly to provide confidence that the software meets its requirements — not to validate that the requirements, in fact, reflect the system’s intended functionality. So getting the code correct is not sufficient, but it is necessary, and many of the benefits of the DO-178C approach can be realized without going through the effort of a formal certification.

The basic principle is to choose requirements development and design methods, tools, and programming languages that limit the opportunity for introducing errors and verification methods that ensure that errors introduced are detected.


International Defense Security & Technology Your trusted Source for News, Research and Analysis
