Late delivery or software defects can damage a brand’s reputation — leading to frustrated and lost customers. In extreme cases, a bug or defect can degrade interconnected systems or cause serious malfunctions. Consider Nissan having to recall over 1 million cars due to a software defect in the airbag sensor detectors. The numbers speak for themselves. Software failures in the US cost the economy USD 1.1 trillion in assets in 2016. What’s more, they impacted 4.4 billion customers.

Military and aerospace systems are even more exposed to software defects because they must also operate in hostile and adversarial environments. During a modern airplane’s flight, hundreds of millions of lines of code will run. Avionics systems often comprise many thousands of functions and millions of lines of code. To ensure the safety of the system, these must be tested to make sure that they operate as expected. Software is present almost everywhere in the aircraft, from mundane components like galley equipment to highly critical ones such as flight control systems. In one case, a software bug caused the failure of a USD 1.2 billion military satellite launch.

Per the related IEEE standard, Independent Verification and Validation (V&V) processes are used to determine whether the development products of a given activity conform to the requirements of that activity and whether the product satisfies its intended use and user needs. V&V life cycle process requirements are specified for different integrity levels. The scope of V&V processes encompasses systems, software, and hardware, and it includes their interfaces.

This standard applies to systems, software, and hardware being developed, maintained, or reused (legacy, commercial off-the-shelf [COTS], non-developmental items). The term software also includes firmware and microcode, and each of the terms system, software, and hardware includes documentation. V&V processes include the analysis, evaluation, review, inspection, assessment, and testing of products.

Software quality assurance (SQA)

One of the main reasons for such failures turned out to be poor quality assurance during the software development process. The chief purpose of executing stringent quality assurance tests is to prevent the release of poor quality products. Small mistakes that slip through may lead to large financial losses. The way to create high-quality software is to implement effective QA management that provides tools and methodologies for building bug-free products. Software quality management is an umbrella term covering three core aspects: quality assurance, quality control, and testing.

Software quality assurance (SQA) is the part of quality management that includes a planned set of organizational actions. The purpose of these actions is to improve the software development process, introducing standards of quality for preventing errors and bugs in the product.

Software quality control (SQC) is the part of quality management that includes a set of activities focused on fulfilling quality requirements. QC is about product-oriented activities that certify software products for their quality before release. The process of software quality control is governed by software quality assurance.

What is Software Verification and Validation?

Software verification is the testing done to make sure the software code performs the functions it was designed to perform. Validation is the testing performed to prove that the verified code meets all of its requirements in the target system or platform. These definitions are consistent with the RTCA DO-178C “Software Considerations in Airborne Systems and Equipment Certification” document which the Federal Aviation Administration uses to guide the development of certifiable flight software.

According to that document, software validation does not need to be considered (and is not considered by DO-178C) if the software requirements are complete and correct. If they are, then in theory the properly verified software will also validate. Unfortunately, in practice it often happens that the verified code does exactly what it was designed to do, but what it does is not the right thing.

Software verification and validation are commonly described in connection with the V-shaped software lifecycle diagram shown in the figure. The software development process begins at the top left, which indicates that the first step is a thorough development of the software requirements. The software functional requirements are decomposed into a software architecture, from which the detailed software design and the software code are ultimately produced. The sequential decomposition of requirements to code is sometimes referred to as the waterfall software development model, because each step flows down from the previous one.

Software verification is the process of proving that the progressive decomposition of requirements to the software code is correct. The blue arrows represent the verification testing done to prove the correct decomposition at each step.

The testing done to prove that the code meets the requirements is called validation and is associated with the right side of the figure. The red arrows represent the validation testing at each level. At the lowest level, each software unit is tested in a process called unit testing. If errors are found in unit testing, the code is corrected and then re-tested. When all the software units have been successfully tested, they are integrated into the full code. The integrated code is tested in computer simulation to validate that it satisfies the software architecture requirements. If errors are found at this point, then either the code or the architecture must be corrected. If faulty architecture is to blame, then the software architecture and the detailed design must be redone and new software must be coded. Then both unit testing and integration testing need to be repeated.

Once the integrated software is validated to be correct, the last step of validation is to check that the software operates correctly on the target platform. This testing is usually performed using high-fidelity computer simulations first, then using hardware-in-the-loop (HIL) simulations, and finally the actual spacecraft computer system. If the software fails to operate as intended on the target system computer, the problem is almost always that the software requirements were not correct or were not complete.

It is also sometimes discovered that assumptions made about the software interfaces are incorrect. If it is found that new requirements are needed, then the whole software development process must be repeated, which adds greatly to the software development cost. Quick fixes to the software architecture may result in a fractured architecture, which could produce complex validation problems. The validation process ends when it is proved that the code does what it has been designed to do on the target platform.

Obviously, it is very important to make sure that the software requirements are complete and correct prior to coding the software. To make sure this happens, the traditional approach to software development is to have numerous human reviews of the requirements and the software architecture. As pointed out by Ambrosi et al., these meetings include the System Requirements Review, the Preliminary Design Review, the Critical Design Review, the Detailed Design Review, the Qualification Review, and the Acceptance Review.

The problem is that although it is easy to specify software requirements for a given subsystem in isolation (e.g., communication subsystem), it is more difficult for software engineers to make sure that the software requirements meet the overall system requirements (communication with all other systems working on the satellite and on the ground, plus all fail-safe requirements, etc.). Ideally, software requirements should be distilled or flow down from the system (satellite + ground) performance and system safety requirements. If all of the requirements are complete and correct prior to code development, the software re-design and re-verification costs can be avoided.

Software testing

Software testing is the process of evaluating and verifying that a software product or application does what it is supposed to do. The benefits of testing include preventing bugs, reducing development costs, and improving performance. To ensure the safety of passengers, crew, and aircraft, aerospace software applications must be rigorously tested within strict guidelines to ensure that they operate correctly. Failure of onboard critical software (safety-critical and/or mission-critical) could have far-reaching repercussions.

Though testing itself costs money, companies can save millions per year in development and support if they have good testing techniques and QA processes in place. Early software testing uncovers problems before a product goes to market. The sooner development teams receive test feedback, the sooner they can address issues such as architectural flaws, poor design decisions, invalid or incorrect functionality, security vulnerabilities, and scalability issues.

“If software fails, you can get awful incidents like the flight 447 Air France A330 crash over the Atlantic in 2009, which killed everyone on board on the way from Brazil to Paris. It was caused by one hardware failure, but the root of the incident was the software’s inability to recognize and report the hardware fault,” says Dylan Llewellyn, international sales manager at software company QA Systems.

To avoid disasters such as Flight 447, and to reduce costs, software testing should be done as early as possible in a development program. Massimo Bombino, an expert in avionics software and regional manager of Southeast Europe for Vector Software, recommends planning software testing from “day zero” of a development program and forming small agile teams of software developers. These teams should plan all the software unit testing and integration testing from day zero to minimize the risk of nasty surprises and last-minute regression testing, which is conducted when code goes wrong.

When development leaves ample room for testing, software reliability improves and high-quality applications are delivered with few errors. A system that meets or even exceeds customer expectations can lead to more sales and greater market share.

Software Testing is the basic activity aimed at detecting and solving technical issues in the software source code and assessing the overall product usability, performance, security, and compatibility. It’s not only the main part of quality assurance; it is also an integral part of the software development process.

Test policy: A test policy is the most high-level document that is created at the organizational level. It defines the test principles adopted by the company and the company’s main test objectives. It also explains how testing will be conducted and how a company measures test effectiveness and success.

There is no standard approach to test policy creation, but it typically includes the following:

- Definition of what testing means for the company

- Test objectives of the organization

- General standards and criteria for software testing in projects

- Definition of testing terms to clarify their further usage in other documents

- Listing of tools to support the testing process

- Methods and metrics for assessing efficiency of testing

- Ways to improve the testing processes

Test strategy: A test strategy is a more specific product-level document that derives from the Business Requirements Specification document. Usually, a project manager or a business analyst creates a test strategy to define software testing approaches used to achieve testing objectives. A test strategy is driven by the project’s business requirements, which is why it meshes with a project manager’s responsibilities.

The main components of a test strategy are the scope of testing, test objectives, budget limitations, communication and status reporting, industry standards, testing measurement and metrics, defect reporting and tracking, configuration management, deadlines, the test execution schedule, and risk identification.

Pushing testing procedures off until the last week can create bottlenecks and slow down progress. So, consider planning a testing schedule from the early stages of the development process to detect and fix bugs and malfunctions as soon as possible.

Test Plan: A test plan is a document that describes what to test, when to test, how to test, and who will do the tests. It also describes the testing scope and activities. The test plan includes the objectives of the tests to be run and helps control the risks. It’s a good practice to have a test plan written by an experienced person like a QA lead or manager.

A test scenario is a description of an objective a user might face when using the program. An example might be “Test that the user can successfully log out by closing the program.” Typically, a test scenario will require testing in a few different ways to ensure the scenario has been satisfactorily covered.

Test cases describe a specific idea that is to be tested, without detailing the exact steps to be taken or data to be used. For example, a test case might say “Test that discount codes can be applied on top of a sale price.” This doesn’t mention how to apply the code or whether there are multiple ways to apply the code. The actual testing that will cover this test case may vary from time to time. Test cases give flexibility to the tester to decide exactly how they want to complete the test.

According to the definition, given by ISTQB (International Software Testing Qualifications Board, the worldwide leader in the certification of competencies in software testing), “A test case is a set of input values, execution preconditions, expected results, and execution postconditions, developed for a particular objective or test condition, such as to exercise a particular program path or to verify compliance with a specific requirement.”
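The elements named in the ISTQB definition map naturally onto a small record type. The following is a minimal sketch, not an ISTQB artifact: the `TestCaseSpec` class, its field names, the `run` helper, and the `apply_discount` function under test are all hypothetical names chosen for illustration, reusing the discount-code example from above.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class TestCaseSpec:
    """One test case; the field names are illustrative assumptions."""
    objective: str
    preconditions: dict[str, Any]          # state that must hold before execution
    inputs: dict[str, Any]                 # input values passed to the function
    expected_result: Any
    expected_postconditions: dict[str, Any] = field(default_factory=dict)


def apply_discount(cart: dict, code: str) -> float:
    """Hypothetical function under test: SAVE10 takes 10% off the sale price."""
    rate = 0.10 if code == "SAVE10" else 0.0
    cart["total"] = round(cart["sale_price"] * (1 - rate), 2)
    cart["code_used"] = rate > 0
    return cart["total"]


def run(case: TestCaseSpec, function: Callable[..., Any]) -> bool:
    """Execute one test case and report whether it passed."""
    state = dict(case.preconditions)             # establish the preconditions
    actual = function(state, **case.inputs)      # exercise the function
    postconditions_hold = all(
        state.get(key) == value
        for key, value in case.expected_postconditions.items()
    )
    return actual == case.expected_result and postconditions_hold


discount_case = TestCaseSpec(
    objective="A discount code can be applied on top of a sale price",
    preconditions={"sale_price": 80.0},
    inputs={"code": "SAVE10"},
    expected_result=72.0,
    expected_postconditions={"code_used": True},
)
```

Calling `run(discount_case, apply_discount)` returns `True` only when both the returned value and the resulting state match what the test case expects.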

A test script is the line-by-line description of all the actions and data needed to perform a test. A script typically has ‘steps’ that try to fully describe how to use the program (which buttons to press, and in which order) to carry out a particular action in the program. These scripts also include specific results that are expected for each step, such as observing a change in the UI. An example step might be “Click the ‘X’ button,” with an example result of “The window closes.”

A formal technical review (FTR) is an activity performed by software engineers to reveal functional and logical errors at the early stages. An FTR is a group meeting at which attendees with certain roles ensure that developed software meets the predefined standards and requirements.

Types of software testing

There are many different types of software tests, each with specific objectives and strategies:

Structural coverage analysis ensures that structural elements of the code (such as statements) have been tested to an acceptable degree. Each line of code has to be checked for faults. Each software unit has to be tested to see how it integrates with other units, and then tested at a higher system level. It’s a laborious process. Increasingly, cost overruns and delays in airplane development are linked to software testing. But it’s vital to get software right.

Code quality measurements

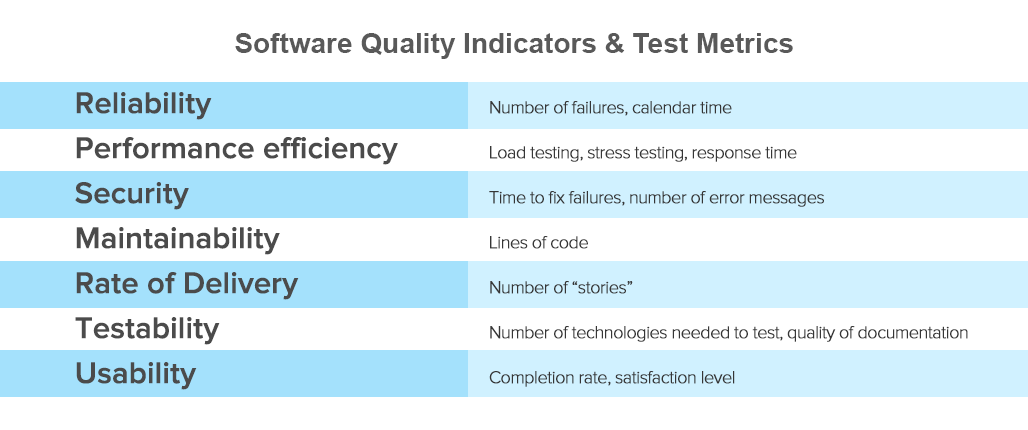

The CISQ Software Quality Model defines four important aspects of software quality: reliability, performance efficiency, security, and maintainability. Rate of delivery is sometimes listed alongside them. Additionally, the model can be expanded to include the assessment of testability and product usability.

- Unit testing: Validating that each software unit performs as expected. A unit is the smallest testable component of an application.

- Integration testing: Ensuring that software components or functions operate together.

- Functional testing: Ensuring that the software meets high- and low-level requirements by checking functions against emulated business scenarios, based on functional requirements. Black-box testing is a common way to verify functions.

- Black-box testing: Examining software functionality without seeing the internal code. Testers are only aware of what an app should do, without knowing how. This type of testing allows test teams to get the most relevant results, comparable with end-user testing.

- Performance testing: Testing how the software performs under different workloads. Load testing, for example, is used to evaluate performance under real-life load conditions.

- Regression testing: Checking whether new features break or degrade functionality. Sanity testing can be used to verify menus, functions and commands at the surface level, when there is no time for a full regression test.

- Stress testing: Testing how much strain the system can take before it fails. Considered to be a type of non-functional testing.

- Usability testing: Validating how well a customer can use a system or web application to complete a task.

- Worst-case execution time analysis: Ensuring that time-critical sections of code meet timing deadlines.

- Acceptance testing: Verifying whether the whole system works as intended.

User acceptance testing

In product development, user personas are used to identify an ideal customer or a typical user of a product. A user persona is a fictional character that has the behavior patterns and goals of the product’s target audience. QA teams use personas to identify where and how to look for bugs.

End-user testing or user acceptance testing traditionally comes at the final stages of software development. Engaging end-users to test your application can help discover bugs that might not normally be found. It also proves that your software is production-ready and supplies your developers with user feedback during/after the production stage.

Alpha and beta testing are done at the pre-release stage. Alpha testing is carried out by internal stakeholders at the early stages of development. Business and end-users are often involved in alpha testing performed in the development environment. The feedback from internal teams is used to further improve the quality of the product and fix bugs. Beta testing is performed in the customer’s environment to determine if an app is ready for users.

Static and Dynamic Analysis

Static analysis involves going through the code to find possible defects. Dynamic analysis involves executing the code and analyzing the output.

Static analysis

This may be the testing you do most often while coding. While coding, you may introduce typing errors, syntax errors, faulty loop structures, missing termination conditions, and so on. These should be fixed by inspecting (thoroughly reading) your code. Your program will run only after all such coding defects have been cleared by static analysis.

Static analysis is done in a non-runtime environment, that is, while the program is not running at all. A static analysis tool examines the code and reasons about its possible run-time behavior to find flaws, back doors, and bad code.

The strengths of static analysis are:

- It is a more thorough and more cost-efficient approach

- It can find latent errors that would not be detected in dynamic analysis

- It can point out the exact spot in the code where there is an error, so you can easily fix it
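To make these strengths concrete, here is a minimal sketch of a static check built on Python's standard `ast` module. It is not a substitute for a real static analyzer: the two rules (bare `except:` clauses and division by a literal zero), the `find_defects` function name, and the `SOURCE` sample are assumptions chosen for illustration. The analyzed code is parsed but never executed, and each finding carries the line number where the problem sits.

```python
import ast

# Sample code to analyze; it is only parsed, never run.
SOURCE = '''
def ratio(a, b):
    try:
        return a / 0
    except:
        return None
'''


def find_defects(source: str) -> list[tuple[int, str]]:
    """Return sorted (line number, message) pairs for two illustrative rules."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Rule 1: a bare 'except:' swallows every exception type.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append((node.lineno, "bare 'except:' hides unexpected errors"))
        # Rule 2: dividing by the literal constant zero always fails at run time.
        if (
            isinstance(node, ast.BinOp)
            and isinstance(node.op, (ast.Div, ast.FloorDiv, ast.Mod))
            and isinstance(node.right, ast.Constant)
            and node.right.value == 0
        ):
            findings.append((node.lineno, "division by constant zero"))
    return sorted(findings)


for line, message in find_defects(SOURCE):
    print(f"line {line}: {message}")
```

Note that the division defect would stay hidden from a dynamic test that never calls `ratio`, while the static check reports it regardless of which paths are exercised.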

Dynamic analysis

Next, you need to check whether the program produces the desired output. This is called dynamic analysis. You compile and run the program, check the output, and then make the necessary changes to the code.

In contrast to static analysis, dynamic analysis is performed while the program is running. A dynamic test monitors system memory, function behavior, response time, and overall performance of the system.
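As a small illustration of observing a program while it runs, the sketch below measures the response time and peak memory of a single call using the standard `time` and `tracemalloc` modules. The `profile_call` helper and the `build_squares` workload are hypothetical names introduced for this example. Real dynamic analysis tools collect far more than these two figures.

```python
import time
import tracemalloc


def profile_call(function, *args):
    """Run function(*args); return (result, elapsed seconds, peak bytes allocated)."""
    tracemalloc.start()
    started = time.perf_counter()
    try:
        result = function(*args)
    finally:
        # Collect the measurements even if the call raised an exception.
        elapsed = time.perf_counter() - started
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return result, elapsed, peak_bytes


def build_squares(count):
    """Hypothetical workload: allocate a list of squares."""
    return [i * i for i in range(count)]


result, elapsed, peak_bytes = profile_call(build_squares, 10_000)
print(f"{len(result)} items in {elapsed:.6f} s, peak {peak_bytes} bytes")
```

The measured values vary between runs and machines, so a test built on this helper would typically compare them against a budget rather than an exact number.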

Software testing best practices

Software testing follows a common process. Tasks or steps include defining the test environment, developing test cases, writing scripts, analyzing test results and submitting defect reports.

Automated testing

Testing can be time-consuming. Manual testing or ad-hoc testing may be enough for small builds. However, for larger systems, tools are frequently used to automate tasks. Automated testing helps teams implement different scenarios, test differentiators (such as moving components into a cloud environment), and quickly get feedback on what works and what doesn’t.

Implementing automated tests whenever possible and maximizing test coverage would also expedite and improve the testing process.

A good testing approach encompasses the application programming interface (API), user interface, and system levels. In addition, the more tests that are automated and run early, the better. Some teams build in-house test automation tools.

There is a wide variety of automation testing tools, both open-source and commercial. Selenium, Katalon Studio, Unified Functional Testing, TestComplete, and Watir are among the most popular ones.

However, vendor solutions offer features that can streamline key test management tasks such as:

Continuous testing: Software testing has traditionally been separated from the rest of development. It is often conducted later in the software development life cycle after the product build or execution stage. A tester may only have a small window to test the code – sometimes just before the application goes to market. If defects are found, there may be little time for recoding or retesting. It is not uncommon to release software on time, but with bugs and fixes needed. Or a testing team may fix errors but miss a release date. Doing test activities earlier in the cycle helps keep the testing effort at the forefront rather than as an afterthought to development. Earlier software tests also mean that defects are less expensive to resolve.

Testing often: Doing smaller tests more frequently throughout the development stages and creating a continuous feedback flow allows for immediate validation and improvement of the system.

Test-driven development

Test-driven development (TDD) is a software development process in which tests are written before any implementation of the code. TDD takes a test-first approach based on the repetition of a very short development cycle: each new feature begins with writing a test. The developer writes an automated test case, which will initially fail, and then writes just enough production code to get that test to pass. After these steps are completed, the developer refactors the code while keeping all the tests passing.
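The cycle can be sketched in a few lines using Python's standard `unittest` module. The `clamp` function, the `ClampTest` class, and the `run_tests` helper are hypothetical examples, not taken from any particular project. The comments mark which part of the file belongs to which TDD step.

```python
import unittest


# Step 1 (red): the tests are written first. Until clamp() is defined,
# running them fails with a NameError.
class ClampTest(unittest.TestCase):
    def test_value_inside_range_is_unchanged(self):
        self.assertEqual(clamp(5, 0, 10), 5)

    def test_value_is_limited_to_the_range(self):
        self.assertEqual(clamp(-3, 0, 10), 0)
        self.assertEqual(clamp(42, 0, 10), 10)


# Step 2 (green): write just enough production code to make the tests pass.
# Step 3 (refactor): tidy the implementation and re-run the tests after each
# change to confirm they still pass.
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(value, high))


def run_tests() -> bool:
    """Run the test case programmatically and report overall success."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Because the tests exist before the implementation, they double as an executable statement of the requirement, and they stay in place as regression tests for later refactoring.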

In a DevOps approach, development and operations collaborate over the entire product life cycle.

Project teams test each build as it becomes available. This type of software testing relies on test automation that is integrated with the deployment process. It enables software to be validated in realistic test environments earlier in the process – improving design and reducing risks.

The aim is to accelerate software delivery while balancing cost, quality and risk. With this testing technique, teams don’t need to wait for the software to be built before testing starts. They can run tests much earlier in the cycle to discover defects sooner, when they are easier to fix.

Configuration management: Organizations centrally maintain test assets and track what software builds to test. Teams gain access to assets such as code, requirements, design documents, models, test scripts and test results. Good systems include user authentication and audit trails to help teams meet compliance requirements with minimal administrative effort.

Service virtualization: Testing environments may not be available, especially early in code development. Service virtualization simulates the services and systems that are missing or not yet completed, enabling teams to reduce dependencies and test sooner. They can reuse, deploy and change a configuration to test different scenarios without having to modify the original environment.
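As a simplified, in-process illustration of the idea, the sketch below replaces a dependency that is not yet available with a configurable stand-in. Commercial service virtualization tools usually simulate the dependency at the network or protocol level. The `VirtualInventoryService` class, its `units_available` method, and the `can_fulfil` function under test are hypothetical names assumed for this example.

```python
class VirtualInventoryService:
    """Stand-in for a real inventory service that is missing or unfinished."""

    def __init__(self, stock):
        # The configuration is copied so each scenario is isolated.
        self._stock = dict(stock)

    def units_available(self, item):
        return self._stock.get(item, 0)


def can_fulfil(order, inventory):
    """Code under test: it only relies on the units_available() interface."""
    return all(
        inventory.units_available(item) >= quantity
        for item, quantity in order.items()
    )


# Different scenarios are obtained by changing only the configuration.
well_stocked = VirtualInventoryService({"bolt": 5, "nut": 5})
out_of_nuts = VirtualInventoryService({"bolt": 5})

print(can_fulfil({"bolt": 2, "nut": 1}, well_stocked))
print(can_fulfil({"bolt": 2, "nut": 1}, out_of_nuts))
```

The code under test never learns that it is talking to a stand-in, which is what allows testing to start before the real service exists.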

Defect or bug tracking: Monitoring defects is important to both testing and development teams for measuring and improving quality. Automated tools allow teams to track defects, measure their scope and impact, and uncover related issues.

Metrics and reporting: Reporting and analytics enable team members to share status, goals, and test results. Advanced tools integrate project metrics and present results in a dashboard. Teams quickly see the overall health of a project and can monitor relationships between test, development, and other project elements.

Software assurance

Software assurance is defined as the confidence that software functions as intended and is free of vulnerabilities throughout its lifecycle, irrespective of whether the vulnerabilities are a result of intentional or unintentional actions. In addition to traditional applications, software assurance is important for AI adoption to ensure that expected behavior is maintained as algorithms are retuned.

As application development architectures evolve in DevOps, test automation needs to evolve as well. The application of machine learning (ML) in software testing tools is focused on optimizing the software development lifecycle. The value of applying ML to software testing is achieved by creating opportunities for development teams to focus on software enhancements or other impactful quality assurance activities.

Modern software is complex. In addition to increasing software complexity, there are often many blocks of code within complex systems of software (including the layers of libraries) that are redundant or perform similar functions. Many blocks perform extraneous, seldom-used, or never-used functionality. Feature creep, device-specific optimizations, and attempts to support multiple different architectures all contribute to software bloat. Current software development practices and frameworks encourage this situation (e.g., Object-oriented programming, libraries, deprecated code, layers of abstraction).

It is highly desirable to have an enhanced software architecture and deployment strategy that improves software efficiency while preserving the productivity benefits of state-of-the-art development practices, according to CISA. Improving software efficiency by simplifying the structure/layering of the final executable late in the software development lifecycle, while maintaining the benefit of software reuse and layering at the development stage, is an important goal to be addressed in providing secure software.

Fault-Tolerant Software Design and Runtime Monitoring

An important consideration of small satellite software design is that the verification and validation effort may be unable to detect all software errors. Small satellite software malfunctions include, but are not limited to, failure of the operating system, live-lock of the operating system, failures in one or more threads, data corruption problems, and watchdog computer failures. The lack of an elaborate software V&V program may increase the frequency of these errors in small satellites.

However, small satellite software may also suffer anomalies caused by space radiation damage to various memory locations. The disastrous effects of radiation flipping a single bit in memory, referred to as Single-Event Upset (SEU) or Single-Event Latch-up (SEL) failures, are well documented in the literature. As such, it is wise to plan ahead for the detection and correction of software problems in space. This is referred to as fault-tolerant software design.

The most common approach for adding fault-tolerance is the use of redundancy for critical systems. Redundancy may include the use of two on-board computers, two inertial reference units, four gyros in each direction, etc. In order for this approach to be successful, it is necessary to have a failure detection scheme that can direct when switching to backup hardware is required. One way of implementing this approach is presented by Hunter and Rowe for the PANSAT satellite and has been used many times since.

However, as demonstrated in the case of the Ariane 5 accident, redundancy does not always protect against common mode failures where the same software error causes both primary and secondary systems to fail. In that case, a software exception fault code was erroneously sent to both flight computers as valid data, thereby causing the rocket to veer off course. Some software fault-tolerance schemes guard against this by requiring that dissimilar software algorithms be used in redundant hardware. This approach is one method suggested by the Federal Aviation Administration in DO-178C.
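The idea of dissimilar redundancy can be sketched as three independently written algorithms for the same function, combined by a majority voter. This is an illustrative toy, not the scheme used on any particular vehicle or prescribed by DO-178C: the integer square root task, the three `isqrt_*` functions, and the `vote` helper are assumptions made for this example. Because the channels share no code, a defect in one algorithm is unlikely to appear in the others.

```python
import math
from collections import Counter


def isqrt_library(n):
    """Channel A: standard library implementation."""
    return math.isqrt(n)


def isqrt_newton(n):
    """Channel B: integer Newton iteration, decreasing towards floor(sqrt(n))."""
    if n < 2:
        return n
    x = n
    while x * x > n:
        x = (x + n // x) // 2
    return x


def isqrt_bisect(n):
    """Channel C: binary search keeping low*low <= n < high*high."""
    low, high = 0, n + 1
    while high - low > 1:
        mid = (low + high) // 2
        if mid * mid <= n:
            low = mid
        else:
            high = mid
    return low


def vote(results):
    """Return the value reported by a strict majority of channels."""
    value, count = Counter(results).most_common(1)[0]
    if count * 2 <= len(results):
        raise RuntimeError("no majority among redundant channels")
    return value


channels = (isqrt_library, isqrt_newton, isqrt_bisect)
print(vote([channel(17) for channel in channels]))
```

A voter like this masks a single faulty channel, but it cannot help when all channels implement the same wrong requirement, which is why requirement correctness remains the first line of defense.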

The second most common means of adding fault tolerance, and for implementing real-time software verification, is the use of run-time monitoring. Run-time monitoring is generally used to debug code still in development on the ground, but it can be applied in the space environment. In runtime monitoring, the program is “instrumented” or programmed to provide information on the program status at any desired point. For example, the software can be programmed to dump register information every time a code segment is executed or a break point can be set in the program that allows register information to be sent to the ground station. The two most common means of run-time monitoring for small satellites are the use of a watchdog processor and the procedure of functional telemetry.

A watchdog program can compare the results of two or more redundant computers or devices to check for agreement and declare an error in the case of disagreement. A watchdog processor can also periodically request each program segment (or thread) to report its status. If no reply is received or a bad reply is received, the watchdog program can raise an error flag and notify the ground station. Threads producing exceptions like divide by zero, overflow, and underflow, may be programmed to report them to the watchdog processor. The watchdog processor can also keep track of the execution time of each thread. Provided that the worst case execution time is known for every thread or process, the watchdog processor can raise a flag to indicate a thread is taking longer than expected to complete and is perhaps live-locked.
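A minimal sketch of the status-and-deadline part of such a watchdog is shown below. It assumes a known worst-case execution time (WCET) per thread and uses explicit timestamps so that the behavior is deterministic. The `Watchdog` class, its method names, and the status strings are hypothetical, and a flight implementation would run on separate hardware rather than as a Python object.

```python
class Watchdog:
    """Tracks per-thread status reports and flags faults or overruns."""

    def __init__(self, wcet_by_thread):
        # Thread name -> worst-case execution time in seconds (assumed known).
        self._wcet = dict(wcet_by_thread)
        self._last_report = {name: 0.0 for name in self._wcet}
        self._faults = {}

    def report(self, name, now, status="OK"):
        """Called by a thread to report its status at time 'now'."""
        self._last_report[name] = now
        if status == "OK":
            self._faults.pop(name, None)
        else:
            self._faults[name] = status      # e.g. "DIVIDE_BY_ZERO"

    def check(self, now):
        """Return {thread: flag} for every thread that needs attention."""
        flags = {}
        for name, wcet in self._wcet.items():
            if name in self._faults:
                flags[name] = self._faults[name]
            elif now - self._last_report[name] > wcet:
                flags[name] = "OVERRUN"      # silent too long, possibly live-locked
        return flags


watchdog = Watchdog({"attitude": 0.5, "comms": 2.0})
watchdog.report("attitude", now=1.0)
watchdog.report("comms", now=1.0, status="DIVIDE_BY_ZERO")
print(watchdog.check(now=1.2))
print(watchdog.check(now=2.0))
```

In this run the first check flags only the reported exception, while the second also flags the `attitude` thread because it has been silent for longer than its WCET.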

Another method of run-time monitoring is implemented through functional telemetry. To use this method, each thread is programmed to compute a known result, test if the expected result is produced, and if not, set an error flag indicating a failure has occurred. In functional telemetry, each thread is required to send telemetry to the ground station as it executes to report the status of these tests. Although the ground station may receive a lot of extraneous information when everything is working right, such information is very valuable to pinpoint the cause of software anomalies when things go wrong. Of course, once the satellite has been placed into orbit, verifying correct software behavior would have little value were there not the means to do something about it.
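The self-test step can be sketched as follows. Each thread runs a computation with a known answer, sets an error flag on mismatch, and packages the outcome as a telemetry frame. The frame layout, the JSON encoding, and the names `run_self_test`, `encode_frame`, and `checksum` are assumptions made for illustration. Real downlink formats are mission-specific.

```python
import json


def checksum(data):
    """Example computation whose result for a fixed input is known in advance."""
    return sum(data) % 256


def run_self_test(thread_name, compute, known_input, expected):
    """Compute a known result and set the error flag if it does not match."""
    try:
        actual = compute(known_input)
        error = actual != expected
    except Exception:
        # An exception during the self-test is itself a reportable failure.
        actual, error = None, True
    return {"thread": thread_name, "error": error, "actual": actual}


def encode_frame(frame):
    """Serialize a frame for the downlink queue (format is an assumption)."""
    return json.dumps(frame, sort_keys=True).encode("ascii")


healthy = run_self_test("nav", checksum, bytes(range(10)), expected=45)
faulty = run_self_test("nav", lambda data: 1 // 0, bytes(range(10)), expected=45)
print(encode_frame(healthy))
print(encode_frame(faulty))
```

Sending the frame even when the test passes is what produces the "extraneous" traffic mentioned above, and it is also what lets ground staff see exactly when a thread first started failing.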

Most large satellites and some small satellites have the capability to upload corrected software. This is enabled by using software that allows the ground station to always be able to communicate with the BIOS of the satellite. From talking to the BIOS, the ground station can first download telemetry and log files after a crash from satellite flash memory to help discover the cause of the software problem. After the problem with the software is discovered and corrected on the ground, the link to the BIOS can be used to direct the satellite to upload new software from the ground station and then to restart. This method of satellite access is called the “back door” into the operating system and it provides a means to recover from software failures after launch.

Security and Safety guidelines

Parasoft takes a test-early-and-often approach to military software testing to ensure software quality, security, reliability, and on-time delivery while reducing the risks associated with complex and interconnected mission-critical systems. We offer tooling to ensure that application code bases are free of security weaknesses and vulnerabilities, and to enforce compliance with security and safety guidelines such as CWE, CERT, OWASP, MISRA, AUTOSAR, and DISA-ASD-STIG, and with process standards like DO-178B/C and UL 2900.

CWE

CWE (Common Weakness Enumeration) is a comprehensive list of over 800 programming errors, design errors, and architecture errors that may lead to exploitable vulnerabilities — more than just the Top 25. The CWE/SANS Top 25 Most Dangerous Software Errors is a shortened list of the most widespread and critical errors that may lead to serious vulnerabilities in software, that are often easy to find and exploit. These are the most dangerous weaknesses because they enable attackers to completely take over the software, steal software data and information, or prevent the software from working at all.

Top of the list with the highest score by some margin is CWE-787: Out-of-bounds Write, a vulnerability where software writes past the end, or before the beginning, of the intended buffer. Like many of the vulnerabilities in the list this can lead to corruption of data and crashing systems, as well as the ability for attackers to execute code.

Second in the list is CWE-79: Improper Neutralization of Input During Web Page Generation, a cross-site scripting vulnerability in which input is not correctly neutralized before it is placed in the output of a web page. This can allow attackers to inject malicious script, steal sensitive information, and send other malicious requests, particularly if they are able to gain administrator privileges.

Third in the list is CWE-125: Out-of-bounds Read, a vulnerability which can allow attackers to read sensitive information from other memory locations or cause a crash.
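To make the CWE-79 entry concrete, the sketch below neutralizes user input before placing it in generated HTML, using `html.escape` from the Python standard library. The function `render_comment` and its markup are hypothetical, and escaping alone is not a complete defense in every output context (attributes, URLs, and script blocks need their own handling).

```python
import html


def render_comment(author, text):
    """Build an HTML fragment with user-supplied values escaped first."""
    return "<p><b>{}</b>: {}</p>".format(html.escape(author), html.escape(text))
```

With this in place, a comment containing a script tag is rendered as inert text instead of being executed by the browser.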

CERT

The CERT secure coding standard was developed by the Software Engineering Institute (SEI), for a variety of languages, with the purpose of hardening your code by avoiding coding constructs that are more susceptible to security problems.

In the CERT coding framework, priority is computed as a product of three factors (severity, likelihood of someone exploiting the vulnerability, and remediation cost) and divided into levels: L1, L2, and L3. L1 represents high severity violations, with high likelihood and low remediation cost (i.e. most important to address, as they indicate serious problems that are less complicated to fix). Using CERT’s scoring framework provides great help in focusing efforts and enabling teams to make the best use of their time budgets.
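The priority calculation can be sketched as follows. The sketch assumes the scoring published in the SEI CERT C Coding Standard, where each factor is rated 1 to 3 (with 3 meaning high severity, likely exploitation, and low remediation cost) and the product maps to L1 for 12 to 27, L2 for 6 to 9, and L3 for 1 to 4. The function name is hypothetical, and the thresholds should be checked against the edition of the standard in use.

```python
def cert_priority(severity, likelihood, remediation_cost):
    """Return (priority, level) from three CERT-style ratings of 1 to 3."""
    for value in (severity, likelihood, remediation_cost):
        if value not in (1, 2, 3):
            raise ValueError("each factor must be 1, 2, or 3")
    priority = severity * likelihood * remediation_cost
    if priority >= 12:
        level = "L1"   # address first
    elif priority >= 6:
        level = "L2"
    else:
        level = "L3"
    return priority, level
```

A rule rated high severity, likely, and cheap to fix scores 3 x 3 x 3 = 27 and lands in L1, which matches the description of L1 above.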

OWASP Top 10

The Open Web Application Security Project (OWASP) is an open-source community of security experts from around the world, who have shared their expertise of vulnerabilities, threats, attacks, and countermeasures by developing the OWASP Top 10 – a list of the 10 most dangerous current web application security flaws, and effective methods of dealing with those flaws. Achieving OWASP compliance is an effective first step to change the software development culture within your organization into one that produces secure code. Developing solid, secure products is the best way to secure a position in today’s oversaturated market.

MISRA

MISRA refers to the widely adopted coding standards for C and C++ developed by the Motor Industry Software Reliability Association (MISRA). The MISRA coding standards (MISRA C:2012 and MISRA C++:2008) are widely used in safety-critical industries beyond automotive, such as medical, military, and aerospace engineering. They provide a set of best practices for writing embedded C and C++ code, facilitating the authorship of safe, secure, and portable code for critical systems. MISRA has Working Groups for both C and C++.

MISRA C:2012

For C development, the MISRA C standard supports the C90, C99, C11 and C18 language specifications. The current version, MISRA C:2012, has evolved over several years and includes 158 MISRA C rules and 17 directives for a total of 175 guidelines. Amendment 2 to MISRA C:2012, published in 2020, expanded the standard by 2 rules.

MISRA C++:2008

For C++ programming, the current MISRA standard is MISRA C++:2008. However, many organizations are choosing to standardize on the AUTOSAR C++14 standard, which covers more recent changes to the C++ language. Recently, the MISRA and AUTOSAR organizations announced their collaboration on the next generation of these standards.

AUTOSAR

AUTOSAR (AUTomotive Open System ARchitecture) is a worldwide development partnership of vehicle manufacturers, suppliers, service providers, and companies from the automotive electronics, semiconductor, and software industries. AUTOSAR developed a standard for modern C++ software development, AUTOSAR C++14.

DISA ASD STIG

The Defense Information Systems Agency (DISA) provides a variety of Security Technical Implementation Guides (STIG) that give guidance for securely implementing and deploying applications on Department of Defense (DoD) networks. The Application Security and Development (ASD) STIG covers in-house application development and evaluation of third-party applications.

The purpose is stated in the Executive Summary: “These requirements are intended to assist Application Development Program Managers, Application Designers/Developers, Information System Security Managers (ISSMs), Information System Security Officers (ISSOs), and System Administrators (SAs) with configuring and maintaining security controls for their applications.”

What Is UL 2900

UL 2900 is a cybersecurity standard for network-connectable products that helps secure the Internet of Things (IoT), especially as it relates to functional safety. With the growth of connected devices, it’s more important than ever to make sure these devices operate properly, even under attack. There are versions of the standard specifically tuned for medical devices and nuclear power. The medical version is recognized by the FDA as appropriate to use for security of medical devices and software. UL 2900 relies on the well-known secure coding standards of CWE and OWASP. In particular, CWE includes the well-known Top 25 and additional On the Cusp considerations.

DO-178B/C & ED 12 B/C

DO-178B and DO-178C (known in Europe as ED-12B/C and referred to on this page as DO-178B/C for simplicity) provide guidance, used by aerospace software engineers, to ensure airworthiness. The DO-178 standard is not explicitly mandated in the FAA’s airworthiness requirements, but it is a critical component of the FAA’s approval process for issuing its Technical Standard Order (TSO), so DO-178 is considered essential.

Safety Critical

Safety typically refers to being free from danger, injury, or loss. In the commercial and military industries, this applies most directly to human life. Critical refers to a task that must be successfully completed to ensure that a larger, more complex operation succeeds.

Safety-critical systems demand software that has been developed using a well-defined, mature software development process focused on producing quality software. Safety-critical systems go through a rigorous development, testing, and verification process before getting certified for use. Achieving certification for safety-critical airborne software is costly and time-consuming. Once certification is achieved, the deployed software cannot be modified without recertification.

When approving commercial software-based aerospace systems, certification authorities such as the EASA and FAA refer to the DO-178C Software Considerations in Airborne Systems and Equipment Certification guideline, which ensures that safety-critical software used in airborne systems is safe to use.

When Taft tests software for faults, he carries out static analysis, in which a tester tries to prove mathematically, without running the code, whether errors can occur. Experience has taught him that certain types of software are hard to examine using the conventional tests written by programmers, and that for these it can be more straightforward to obtain a formal proof of a software unit’s safety using static analysis. However, the exact opposite can be the case with some other types of software units.

“For part of the system, you focus on mathematical proofs and for other parts you use a more dynamic testing strategy,” Taft says. “Customers often like a combined strategy because they get the confidence of mathematical proofs.”

As part of more formal testing for certification, Taft prefers to define pre- and post-conditions for software. Pre-conditions imply that before the software sends data to a component, the code has to have certain properties. Unless the software is in an appropriate state, it will not be allowed to send the data. Testing can verify that the pre-conditions are satisfied. Later, the post-conditions state what predetermined data the tester should get back from the software.

“When you use pre- and post-conditions, you can do a lot more formal verification and determine whether the software all fits together,” Taft says. “It makes it easier to do integration testing, which has always been one of the greatest challenges, as each contractor builds and tests in isolation, but when you put it together something inevitably goes wrong.”
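The idea of pre- and post-conditions can be illustrated with a small run-time check. This is a sketch only: the `contract` decorator, the `encode_throttle` function, and the 0xA5 frame header are hypothetical, and formal verification tools of the kind Taft describes prove such conditions statically instead of checking them while the code runs.

```python
import functools


def contract(pre, post):
    """Wrap a function so its pre- and post-conditions are checked on each call."""
    def decorate(func):
        @functools.wraps(func)
        def wrapper(*args):
            if not pre(*args):
                raise ValueError("precondition of %s violated" % func.__name__)
            result = func(*args)
            if not post(result, *args):
                raise AssertionError("postcondition of %s violated" % func.__name__)
            return result
        return wrapper
    return decorate


@contract(
    pre=lambda throttle: 0.0 <= throttle <= 1.0,
    post=lambda frame, throttle: len(frame) == 2 and frame[0] == 0xA5,
)
def encode_throttle(throttle):
    """Encode a throttle setting as a two-byte frame: header, then value."""
    return bytes([0xA5, round(throttle * 255)])
```

A call with a throttle outside 0.0 to 1.0 is rejected before any data is produced, which mirrors the rule that the software is not allowed to send data unless it is in an appropriate state.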

But testing has a well-known intrinsic drawback; as quipped by the late computer scientist Edsger Dijkstra, it can show the presence of bugs but never their absence. DO-178C mitigates this issue in several ways:

• Testing is augmented by inspections and analyses to increase the likelihood of detecting errors early.

• Instead of “white box” or unit testing, DO-178C mandates requirements-based testing. Each requirement must have associated tests, exercising both normal processing and error handling, to demonstrate that the requirement is met and that invalid inputs are properly handled. The testing is focused on what the system is supposed to do, not on the overall functionality of each module.

• The source code must be completely covered by the requirements-based tests. “Dead code” (code that is not executed by tests and does not correspond to a requirement) is not permitted.

• Code coverage objectives apply at the statement level for DAL C. At higher DALs, finer granularity is required, with coverage also needed at the level of complete Boolean expressions (“decisions”) and their atomic constituents (“conditions”). DAL B requires decision coverage (tests need to exercise both True and False results). DAL A requires modified condition/decision coverage. The set of tests for a given decision must satisfy the following:

o In some test, the decision comes out True, and in some other test, it comes out False;

o For each condition in the decision, the condition is True in some test and False in another; and

o Each condition needs to independently affect the decision’s outcome. That is, for each condition, there must be two tests where:

> the condition is True in one and False in the other,

> the other conditions have the same values in both tests, and

> the result of the decision in the two tests is different.

MC/DC testing will detect certain kinds of logic errors that would not necessarily show up in decision coverage but without the exponential explosion of tests if all possible combinations of True and False were needed in a multiple-condition decision. It also has the benefit of forcing the developer to formulate explicit low-level requirements that will exercise the various conditions.
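The independence criterion listed above can be checked mechanically. The sketch below takes a decision (as a Python function of Boolean conditions) and a set of test vectors, and reports whether every condition has a pair of tests that differ only in that condition and produce different outcomes. The function name `achieves_mcdc` and the example decision are hypothetical, and the check implements the unique-cause form of MC/DC described in the list.

```python
from itertools import combinations


def achieves_mcdc(decision, tests):
    """Return True if the test vectors satisfy MC/DC for the decision."""
    n = len(tests[0])
    outcomes = [decision(*t) for t in tests]
    if len(set(outcomes)) < 2:
        return False  # the decision never took both True and False
    for i in range(n):
        shown = any(
            a[i] != b[i]                                        # condition i flips
            and all(a[j] == b[j] for j in range(n) if j != i)   # others held fixed
            and decision(*a) != decision(*b)                    # outcome changes
            for a, b in combinations(tests, 2)
        )
        if not shown:
            return False
    return True


def example_decision(a, b, c):
    return a and (b or c)


mcdc_tests = [
    (True, True, False),
    (False, True, False),
    (True, False, False),
    (True, False, True),
]
```

For this three-condition decision, four tests are enough, compared with eight for every combination of True and False. The first two tests alone give decision coverage but do not show that `b` or `c` affects the outcome.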

The static analysis of source code may be thought of as an “automated inspection”, as opposed to the dynamic test of an executable binary derived from that source code. The use of a static analysis tool will ensure adherence of source code to the specified coding standard and hence meet that DO-178 objective. It will also provide the means to show that other objectives have been met by analyzing things like the complexity of the code, and deploying data flow analysis to detect any uninitialized or unused variables and constants.
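As a rough illustration of inspecting code without running it, the sketch below uses Python's standard `ast` module to list local names that are assigned but never read inside a function. It is a much simpler relative of the data flow analysis mentioned above, not a substitute for a qualified static analysis tool, and the function name `unused_locals` is hypothetical.

```python
import ast


def unused_locals(source):
    """Return (function, variable, line) for names assigned but never read."""
    findings = []
    for func in ast.walk(ast.parse(source)):
        if not isinstance(func, ast.FunctionDef):
            continue
        stored, loaded = {}, set()
        for node in ast.walk(func):
            if isinstance(node, ast.Name):
                if isinstance(node.ctx, ast.Store):
                    stored.setdefault(node.id, node.lineno)  # first assignment
                elif isinstance(node.ctx, ast.Load):
                    loaded.add(node.id)
        findings += [
            (func.name, name, line)
            for name, line in sorted(stored.items())
            if name not in loaded
        ]
    return findings
```

Because the check only compares sets of names, it ignores control flow and would miss, for example, a variable that is read on one path before it is ever assigned.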

Static analysis also establishes an understanding of the structure of the code and the data, which is not only useful information in itself but also an essential foundation for the dynamic analysis of the software system, and its components. In accordance with DO-178C, the primary thrust of dynamic analysis is to prove the correctness of code relating to functional safety (“functional tests”) and to therefore show that the functional safety requirements have been met. Given that compromised security can have safety implications, functional testing will also demonstrate robust security, perhaps by means of simulated attempts to access control of a device, or by feeding it with incorrect data that would change its mission. Functional testing also provides evidence of robustness in the event of the unexpected, such as illegal inputs and anomalous conditions.

Structural Coverage Analysis (SCA) is another key DO-178C objective, and involves the collation of evidence to show which parts of the code base have been exercised during test. The verification of requirements through dynamic analysis provides a basis for the initial stages of structural coverage analysis. Once functional test cases have been executed and passed, the resulting code coverage can be reviewed, revealing which code structures and interfaces have not been exercised during requirements based testing. If coverage is incomplete, additional requirements-based tests can be added to completely exercise the software structure. Additional low-level requirements-based tests are often added to supplement functional tests, filling in gaps in structural coverage whilst verifying software component behavior.

SCA also helps reveal “dead” code that cannot be executed regardless of what inputs are provided for test cases. There are many reasons why such code may exist, such as errors in the algorithm or perhaps the remnants of a change of approach by the coder, but none is justified from the perspective of DO-178C, and all such code must be addressed. Inadvertent execution of untested code poses significant risk.

Software that flies SpaceX rockets and Starships

Flight software for rockets at SpaceX is structured around the concept of a control cycle. “You read all of your inputs: sensors that we read in through an ADC, packets from the network, data from an IMU, updates from a star tracker or guidance sensor, commands from the ground,” explains Gerding. “You do some processing of those to determine your state, like where you are in the world or the status of the life support system. That determines your outputs – you write those, wait until the next tick of the clock, and then do the whole thing over again.”

The control cycle highlights some of the performance requirements of the software. “On Dragon, some computers run [the control cycle] at 50 Hertz and some run at 10 Hertz. The main flight computer runs at 10 Hertz. That’s managing the overall mission and sending commands to the other computers. Some of those need to react faster to certain events, so those run at 50 Hertz.”
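The read, process, write, wait pattern Gerding describes can be sketched as a fixed-rate loop. This is an illustrative sketch and not SpaceX's implementation: the function name, its parameters, and the injectable `clock` and `sleep` arguments are assumptions made so the loop can be exercised without real hardware.

```python
import time


def run_control_cycle(read_inputs, compute_state, write_outputs, rate_hz, cycles,
                      clock=time.monotonic, sleep=time.sleep):
    """Run a fixed number of control cycles at the requested rate."""
    period = 1.0 / rate_hz
    next_tick = clock()
    for _ in range(cycles):
        inputs = read_inputs()            # sensors, network packets, commands
        state = compute_state(inputs)     # determine the current state
        write_outputs(state)              # command the actuators
        next_tick += period
        delay = next_tick - clock()
        if delay > 0:
            sleep(delay)                  # wait for the next tick of the clock
```

Scheduling against `next_tick` instead of sleeping for a full period after each cycle keeps the loop from drifting when the processing time varies. A 10 Hz computer would pass `rate_hz=10`, and a 50 Hz one `rate_hz=50`.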

Gerding points out that there’s no way to protect against any arbitrary software bug. “We try to design the software in a way that if it were to fail, the impact of that failure is minimal.” For example, if a software error were to crop up in the propulsion system, that wouldn’t affect the life support system or the guidance system’s ability to steer the spacecraft, and vice versa. “Isolating the different subsystems is key.”

The software is designed defensively, such that even within a component, SpaceX tries to isolate the effects of errors. “We’re always checking error codes and return values. We also have the ability for operators or the crew to override different aspects of the algorithm.” A big part of the total software development process is verification and validation. “Writing the software is some small percentage of what actually goes into getting it ready to fly on the space vehicle.”

“At SpaceX we really don’t want our processes to fail as a result of a software failure. We’d rather just continue with the rest of the software that actually isn’t impacted by that failure. We still need to know about that failure and that’s where the telemetry factors in, but we want things to keep going, controlling it the best that we can.”
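The principle of continuing after a failure while still reporting it can be shown with a toy example. The sketch is not SpaceX code: `step_all`, the subsystem names, and the telemetry record are hypothetical, and real flight software isolates subsystems with separate computers and processes, not a single try/except.

```python
def step_all(subsystems, telemetry):
    """Run every subsystem step; record failures and keep going."""
    for name, step in subsystems.items():
        try:
            step()
        except Exception as exc:
            # The failure is reported, but it does not stop the other subsystems.
            telemetry.append({"subsystem": name, "error": repr(exc)})
```

Here a fault raised by one subsystem's step ends up in telemetry, and the loop moves on to the next subsystem.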

