Artificial intelligence, particularly large language models (LLMs), has moved rapidly from research labs into everyday life. From writing software to generating medical summaries, AI assistants are everywhere. Yet, a critical trust barrier persists: how do we know an AI understands not just our instructions, but the rules behind them? Can it interpret the complex web of obligations, permissions, and prohibitions that guide human decision-making?

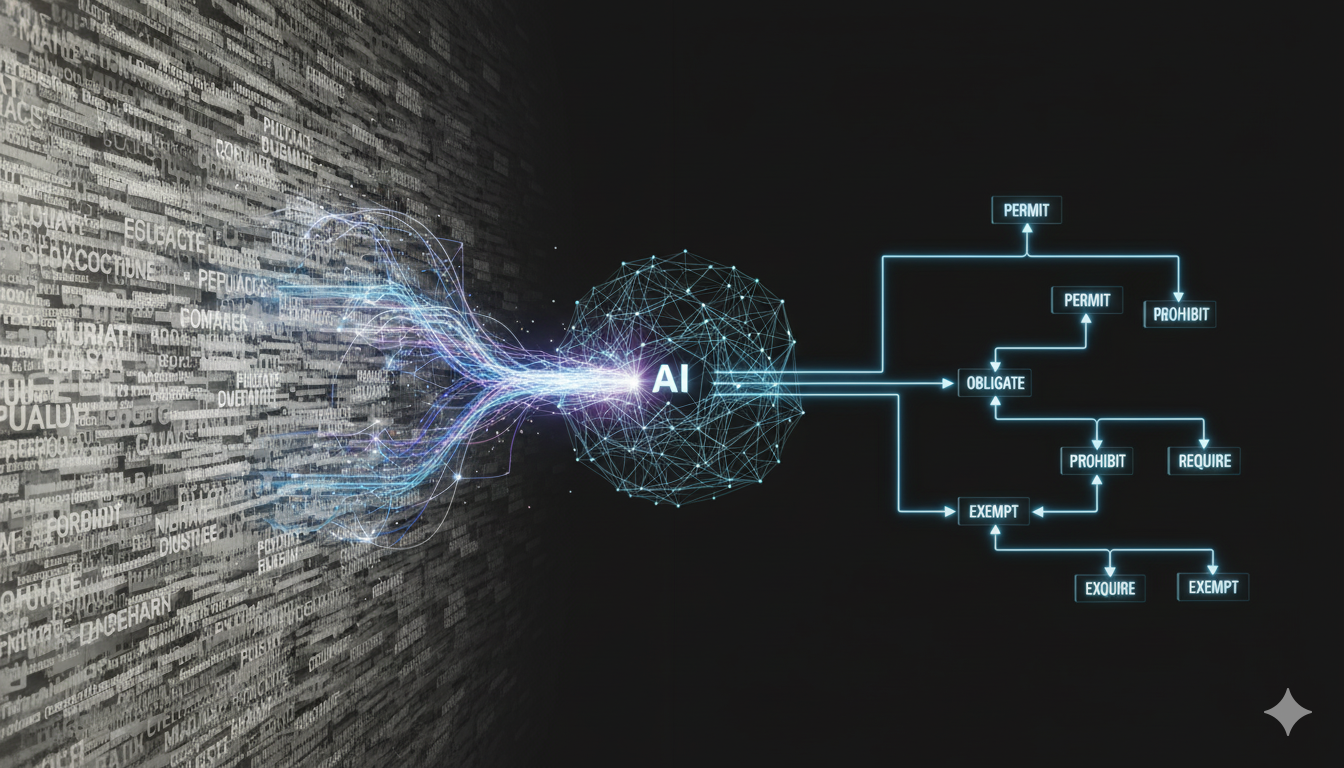

This challenge, known as the problem of “deontic reasoning” (from the Greek deon, meaning duty), is at the heart of AI trust. For AI to operate in high-stakes arenas such as military command, finance, or healthcare, it must be able to interpret and act within legal, ethical, and policy frameworks, not just surface-level text. DARPA’s new Human-AI Communications for Deontic Reasoning DevOps (CODORD) program seeks to close this gap by creating systems that can automatically translate human intent and rules into machine-readable logic.
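
The three categories of obligation, permission, and prohibition have a standard formal reading. In standard deontic logic (SDL), a common textbook system and not necessarily the formalism CODORD will adopt, permission and prohibition are defined from a single obligation operator $O$:

$$
\begin{aligned}
F(\varphi) &\equiv O(\neg\varphi) && \text{(forbidden: obliged to refrain)} \\
P(\varphi) &\equiv \neg O(\neg\varphi) && \text{(permitted: not obliged to refrain)} \\
O(\varphi) &\rightarrow P(\varphi) && \text{(axiom D: what is obligatory is permitted)}
\end{aligned}
$$

Axiom D is what turns “obliged and forbidden at once” into a detectable inconsistency: $O(\varphi) \wedge F(\varphi)$ expands to $O(\varphi) \wedge O(\neg\varphi)$, which D rules out.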

Overcoming the Knowledge Bottleneck

Currently, teaching AI to understand complex regulations or doctrines is slow and expensive. The process depends on highly trained “knowledge engineers” who manually translate dense legal, military, or technical texts into precise logical languages. This translation requires rare expertise in both formal reasoning and the subject domain, making it a significant barrier to scaling AI into areas where rules evolve constantly.

DARPA sees this as a bottleneck preventing the effective use of today’s advanced AI. CODORD aims to eliminate painstaking manual translation by enabling AI systems to read natural language directly, whether a commander’s verbal order, a financial regulation, or an uploaded policy document, and automatically encode it into a form that supports logical reasoning. If it succeeds, AI systems could evaluate rules, explain their reasoning, and check compliance without a human intermediary at every step.

How CODORD Will Work

At its core, CODORD is envisioned as a universal translator for intent and rules. Instead of requiring operators or policymakers to manually encode directives into rigid code or legalese, CODORD would let them use ordinary human language: spoken commands, written policy documents, or uploaded orders. The system would then automatically convert that input into a precise logical language that machines can process and reason over, bridging the gap between human understanding and machine execution for commanders, policymakers, and the AI systems that support them.
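
The program description does not say how this translation will be performed, and a real system would presumably rely on far more capable language models. Purely to illustrate the kind of target structure involved, here is a toy sketch that maps simple modal sentences (“must”, “may”, “must not”) onto a structured record using regular expressions. `Norm` and `translate` are hypothetical names, not part of any DARPA specification.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Norm:
    """Hypothetical machine-readable form of one rule."""
    modality: str  # "obligation", "permission", or "prohibition"
    actor: str
    action: str


# Prohibitions are tried first so "must not X" is not read as an obligation to "not X".
# The \s+ after each modal keeps "must notify" from matching "must not".
_PATTERNS = [
    (re.compile(r"^(?P<actor>.+?)\s+(?:must not|shall not|may not)\s+(?P<action>.+?)\.?$", re.IGNORECASE), "prohibition"),
    (re.compile(r"^(?P<actor>.+?)\s+(?:must|shall)\s+(?P<action>.+?)\.?$", re.IGNORECASE), "obligation"),
    (re.compile(r"^(?P<actor>.+?)\s+may\s+(?P<action>.+?)\.?$", re.IGNORECASE), "permission"),
]


def translate(sentence: str) -> Optional[Norm]:
    """Map one simple modal sentence to a Norm, or return None if no pattern matches."""
    text = sentence.strip()
    for pattern, modality in _PATTERNS:
        match = pattern.match(text)
        if match:
            return Norm(modality, match["actor"].lower(), match["action"].lower())
    return None


print(translate("Contractors must not operate drones at night."))
# Norm(modality='prohibition', actor='contractors', action='operate drones at night')
```

Any sentence outside these three shapes returns `None`, which shows how brittle hand-written patterns are compared with the open-ended language CODORD is meant to handle.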

Today, this translation of rules and directives is largely done manually by staff officers, legal advisors, and compliance teams. A single question, such as whether a certain piece of equipment can be used under a specific treaty, can take hours or even days to resolve as teams sift through regulations, contracts, and operational orders. The process is slow, prone to human error, and difficult to scale in fast-moving operational environments. CODORD aims to address these challenges by automating the reasoning, parsing and applying rules in real time.

Once rules are translated, CODORD would let the AI reason through them systematically. Given a set of policies, constraints, or permissions, for example, the AI could deduce their logical implications, identify conflicts, and even anticipate edge cases. The same logical rigor would let the system produce a transparent, auditable reasoning trail showing which specific rule or directive supported a decision. Such traceability is critical in high-stakes environments like defense and government operations, where accountability is as important as speed.
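
Conflict detection, one piece of this, can be sketched in a few lines. The sketch assumes rules have already been encoded as hypothetical `(modality, action, source)` records and treats two norms as conflicting when they make the same action both obligatory and forbidden, or both permitted and forbidden. Each flagged pair carries its source citations, the seed of an audit trail. The citations in the example are invented.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import List, Tuple


@dataclass(frozen=True)
class Norm:
    modality: str  # "O" = obligatory, "P" = permitted, "F" = forbidden
    action: str
    source: str    # citation kept for the reasoning trail


# Modality pairs that contradict each other in standard deontic logic.
INCOMPATIBLE = {frozenset({"O", "F"}), frozenset({"P", "F"})}


def find_conflicts(norms: List[Norm]) -> List[Tuple[Norm, Norm]]:
    """Return every pair of norms that assigns incompatible modalities to one action."""
    return [
        (a, b)
        for a, b in combinations(norms, 2)
        if a.action == b.action and frozenset({a.modality, b.modality}) in INCOMPATIBLE
    ]


norms = [
    Norm("O", "notify local authorities", "Order 7, para 2"),
    Norm("F", "notify local authorities", "Security directive 3"),
    Norm("P", "fly after dark", "Agreement, Art. 4"),
]
for a, b in find_conflicts(norms):
    print(f"Conflict on '{a.action}': {a.source} vs {b.source}")
```

Matching on exact action strings is the main simplification. A real reasoner would also have to compare conditions and scope, and apply precedence rules (for example, a specific rule overriding a general one), before declaring a genuine conflict.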

The system’s compliance capability is meant to keep every recommendation or action within the established boundaries of permissions and prohibitions. For instance, if an order conflicts with a treaty clause or a status of forces agreement, the AI would flag the issue before execution. This guards against errors and unintended violations while keeping operations aligned with both legal and ethical constraints.
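
A pre-execution compliance gate of this kind could look like the following minimal sketch. It assumes each prohibition has already been encoded as a predicate over a proposed order’s attributes and tagged with the clause it comes from. `Prohibition`, `check_order`, the order fields, and the sample clauses are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Prohibition:
    citation: str                        # clause the prohibition comes from
    violated_by: Callable[[Dict], bool]  # True if the order would breach this clause


def check_order(order: Dict, prohibitions: List[Prohibition]) -> List[str]:
    """Return the citations of every clause the order would breach; empty means no flags."""
    return [p.citation for p in prohibitions if p.violated_by(order)]


rules = [
    Prohibition("Treaty clause 12", lambda o: o["region"] == "border zone" and o["armed"]),
    Prohibition("SOFA Art. 3", lambda o: o["vendor"] == "VendorX"),
]
order = {"region": "border zone", "armed": True, "vendor": "VendorY"}

flags = check_order(order, rules)
if flags:
    print("Hold before execution; conflicts with:", ", ".join(flags))
```

Returning citations rather than a bare yes/no is a deliberate choice here: it tells the human reviewer exactly which clause to examine.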

For example, a logistics officer in a conflict zone might ask whether commercial drones from a particular vendor can be used for supply missions under current agreements. Instead of offering a vague or probabilistic answer, a CODORD-enabled AI could analyze the relevant agreements, cross-check them against contract terms and operational orders, and provide a precise response such as: “Yes, but only during daylight hours and with prior notification to local authorities, as required in Section 4.B of the agreement.” Crucially, it could show the reasoning path behind its conclusion, enabling human operators to validate and trust the output.
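
The drone example maps naturally onto a conditional permission: the action is allowed only when every listed condition holds. A minimal sketch, assuming a hypothetical `ConditionalPermission` record and a set of currently known facts, returns either a plain yes or the outstanding conditions together with the governing clause (Section 4.B, as in the example).

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class ConditionalPermission:
    action: str
    conditions: FrozenSet[str]  # every condition must hold for the permission to apply
    citation: str


def answer(action: str, facts: Set[str], permissions: List[ConditionalPermission]) -> str:
    """Answer 'may we do <action>?' from known facts, citing the governing clause."""
    for perm in permissions:
        if perm.action != action:
            continue
        missing = sorted(perm.conditions - facts)
        if not missing:
            return f"Yes, all conditions of {perm.citation} are met."
        return f"Yes, but only with: {'; '.join(missing)} (as required in {perm.citation})."
    # Closed-world default: no matching permission means the action is not authorized.
    return "No matching permission; refer to a human reviewer."


perms = [
    ConditionalPermission(
        "use vendor drones for supply missions",
        frozenset({"daylight hours", "prior notification to local authorities"}),
        "Section 4.B",
    )
]
print(answer("use vendor drones for supply missions", {"daylight hours"}, perms))
# Yes, but only with: prior notification to local authorities (as required in Section 4.B).
```

The sketch stops at the first matching permission and ignores any prohibition that might override it; a fuller reasoner would weigh every applicable norm before answering.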

Building Trust Through Transparency

For defense applications, the ability to ensure that AI systems follow both the spirit and the letter of a commander’s guidance is mission-critical. As U.S. Marine Corps Col. Robert Gerbracht, Special Assistant to the DARPA Director, explained, commanders must be confident that an AI system can interpret their intent within the ethical, legal, and moral guidelines they have established. Without that assurance, AI risks becoming a liability rather than a trusted partner.

What makes CODORD distinct is its emphasis on transparent reasoning. Unlike the opaque decision-making of many LLMs, CODORD systems would generate explanations rooted in logical proofs and traceable references to specific rules. This visibility is key to building trust with human operators in both military and civilian settings.
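
One simple way to get explanations rooted in proofs is to record, for every derived conclusion, which rule produced it and from which premises. The sketch below uses plain forward chaining over propositional if-then rules, a much simpler technique than anything the program has described, and unfolds the stored justifications into a citable proof tree. `Rule`, `forward_chain`, `explain`, and the clause names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Rule:
    premises: FrozenSet[str]
    conclusion: str
    citation: str


# Maps each derived fact to (citation of the rule used, premises it relied on).
Justifications = Dict[str, Tuple[str, FrozenSet[str]]]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Justifications:
    """Apply rules until nothing new can be derived, recording why each fact holds."""
    known = set(facts)
    why: Justifications = {}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in known and rule.premises <= known:
                known.add(rule.conclusion)
                why[rule.conclusion] = (rule.citation, rule.premises)
                changed = True
    return why


def explain(fact: str, why: Justifications, depth: int = 0) -> List[str]:
    """Unfold a fact's justification into indented proof lines.

    Facts with no recorded justification are reported as given inputs.
    """
    indent = "  " * depth
    if fact not in why:
        return [f"{indent}{fact} [given]"]
    citation, premises = why[fact]
    lines = [f"{indent}{fact} [by {citation}]"]
    for premise in sorted(premises):
        lines.extend(explain(premise, why, depth + 1))
    return lines


rules = [
    Rule(frozenset({"vendor approved", "supply mission"}), "drone use permitted", "Contract clause 2"),
    Rule(frozenset({"drone use permitted", "daylight"}), "mission may launch", "Section 4.B"),
]
why = forward_chain({"vendor approved", "supply mission", "daylight"}, rules)
print("\n".join(explain("mission may launch", why)))
```

Because each fact is derived at most once, and only from facts already known, the stored justifications form a tree and `explain` always terminates.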

Implications Beyond Defense

While the program’s immediate focus is defense and national security, its applications span far beyond. In finance, CODORD could help automate compliance checks across constantly changing regulations. In healthcare, it could ensure treatment plans align with the latest medical guidelines and insurance policies without requiring manual cross-checking. In global logistics, it could help manage supply chains subject to shifting trade rules, tariffs, and sanctions.

By making AI not just more powerful but more aligned with human intent, law, and ethics, CODORD could mark a turning point in human-machine collaboration. It points toward a future where AI is not a black box but a transparent partner, capable of reasoning with the rules we live by and earning the trust needed to help shape decisions in the most critical domains of society.