Computational imaging is turning cameras into intelligent systems, enabling real-time sensing and tracking beyond the pixel.

Introduction: Redefining What Cameras Can See

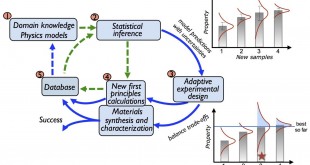

We stand on the threshold of a fundamental transformation in visual perception technology. The conventional camera paradigm, limited by rigid optical designs and passive sensor arrays, is being overturned by computational imaging systems that merge advanced optics with artificial intelligence. These systems extract information far beyond what’s contained in raw pixel data, enabling breakthroughs in real-time sensing and tracking that were unimaginable just a decade ago.

This revolution stems from a paradigm shift in how we understand images. Rather than treating them as simple 2D representations, computational imaging approaches visual data as rich information streams containing hidden details about depth, motion, material properties, and even objects outside the direct line of sight. Through sophisticated computational techniques, researchers are developing imaging systems with extraordinary capabilities—from tracking objects around corners to seeing through atmospheric obscurants and detecting microscopic movements invisible to conventional cameras.

The Evolution of Imaging: From Traditional Pixels to Computational Innovation

In today’s fast-evolving tech landscape, traditional imaging methods are rapidly giving way to innovative techniques that redefine what’s possible in real-time sensing.

For decades, digital imaging relied on capturing and processing arrays of pixels. While effective for many applications, these systems often struggle with speed and data efficiency, especially in environments where every microsecond counts. The advent of computational imaging has shifted this paradigm, allowing researchers and engineers to extract more meaningful information with far less data. This new approach leverages sophisticated algorithms and non-traditional optical methods to reconstruct images or derive actionable data without relying solely on dense pixel arrays.

Computational imaging—a marriage of advanced optics, signal processing, and artificial intelligence—is spearheading this revolution. By moving “beyond the pixel,” new methods such as single-pixel imaging and non-orthogonal light projection are enabling ultra-fast, low-latency tracking systems that have the potential to transform industries ranging from autonomous vehicles to industrial robotics.

As a result, computational imaging not only reduces the data storage burden but also minimizes the processing time—an essential advantage in high-speed applications. The integration of hardware innovations with software-driven analysis enables these systems to operate in environments where traditional cameras might otherwise falter.

Key Innovations Behind Computational Imaging

Single-Pixel Imaging (SPI)

At the heart of this new wave is single-pixel imaging (SPI). Conventional cameras depend on thousands or millions of individual pixels. SPI instead uses a single light detector with no spatial resolution of its own, often called a bucket detector. The scene is illuminated with a sequence of known patterns, and the detector records one total-intensity value per pattern, so the spatial information comes from the patterns rather than from the sensor. Because only one detector element is needed, the system can be highly data-efficient and can operate under conditions where traditional imaging may fail, such as in low-light or high-speed scenarios. Recent research has demonstrated that acquiring a 3D coordinate in a tracking application can require as little as six bytes of storage space and only 2.4 microseconds of computation time. This dramatic reduction in resource usage is paving the way for systems that are both faster and more cost-effective.
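To make the measurement principle concrete, here is a minimal Python simulation. It assumes an idealized, noise-free detector, a tiny 4×4 scene, and Hadamard patterns. Hadamard patterns are a common choice in the SPI literature but not necessarily what any particular system uses. Each pattern yields one scalar reading, and because Hadamard patterns are mutually orthogonal, the image can be recovered exactly by a transpose-and-scale step.

```python
# Minimal single-pixel imaging (SPI) simulation with Hadamard patterns.
# Assumptions: ideal noise-free bucket detector, 4x4 scene, +/-1 patterns.
# A DMD can only show on/off mirrors, so in practice a +/-1 pattern is
# usually measured as (pattern reading) - (complement reading); here the
# +/-1 reading is simulated directly.


def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of 2)."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def measure(scene, patterns):
    """One detector reading per pattern: the inner product <pattern, scene>."""
    return [sum(p * s for p, s in zip(pattern, scene)) for pattern in patterns]


def reconstruct(readings, patterns):
    """Recover the scene using H^T H = n I, which holds for any Hadamard matrix."""
    n = len(patterns)
    return [sum(patterns[k][i] * readings[k] for k in range(n)) / n
            for i in range(n)]


# 4x4 scene, flattened row by row: a bright 2x2 square in the lower-right corner.
scene = [0, 0, 0, 0,
         0, 0, 0, 0,
         0, 0, 1, 1,
         0, 0, 1, 1]
patterns = hadamard(16)
readings = measure(scene, patterns)   # 16 scalar readings, one per pattern
image = reconstruct(readings, patterns)
print(image == scene)                 # True: exact recovery when fully sampled
```

This example is fully sampled, with 16 patterns for 16 pixels. Compressed-sensing variants use fewer patterns than pixels and recover the image with a sparsity-promoting solver instead of a simple transpose. Tracking methods can skip image recovery altogether.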

Non-Orthogonal Light Projection

Another breakthrough reshaping the field is the use of non-orthogonal light projection in computational imaging systems. Traditional setups often rely on orthogonal projections. These keep the geometry simple but can make the optical layout bulky and difficult to assemble. By projecting geometric light patterns onto non-orthogonal planes, engineers can design compact, efficient systems that still generate precise 3D coordinates. This approach reduces the overall system size and simplifies the optical setup. As a result, it becomes more feasible to integrate into applications ranging from vehicle-mounted sensors to portable industrial inspection devices without compromising performance.
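The geometric idea can be illustrated with a simplified model. It is not the calibration of any published system. The model makes two assumptions. First, each plane yields an orthographic 2D position of the target. Second, the second plane is tilted by a known angle θ about an axis shared with the first. Under these assumptions, depth follows from one linear equation, and only parallel planes (θ = 0) make it unsolvable, so the planes need not be orthogonal.

```python
import math


def project(point, theta):
    """Forward model: 2D readings on plane A and on plane B (tilted by theta)."""
    x, y, z = point
    return (x, y), (x * math.cos(theta) + z * math.sin(theta), y)


def point_from_two_planes(a_xy, b_uv, theta):
    """Recover (x, y, z) from the two planar readings.

    Hypothetical geometry: plane A measures (x, y); plane B is rotated by theta
    about the shared y-axis, so it measures u = x*cos(theta) + z*sin(theta) and
    v = y. theta = 90 degrees is the orthogonal special case.
    """
    s = math.sin(theta)
    if abs(s) < 1e-9:
        raise ValueError("planes are parallel, so depth cannot be recovered")
    x, y_a = a_xy
    u, y_b = b_uv
    z = (u - x * math.cos(theta)) / s
    return x, 0.5 * (y_a + y_b), z    # both planes see y, so average the two


theta = math.radians(60)              # a non-orthogonal tilt between the planes
a, b = project((0.12, -0.05, 0.30), theta)
print(point_from_two_planes(a, b, theta))   # approximately (0.12, -0.05, 0.30)
```

In this model, any tilt with sin θ well away from zero works. A smaller tilt amplifies measurement noise in z by a factor of 1/sin θ, which is the trade-off a compact non-orthogonal layout has to manage.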

From Photons to Information

Traditional digital cameras follow a linear capture process: light passes through lenses, strikes a sensor, and creates a 2D representation. Computational imaging reinvents this pipeline as an active information processing system where every optical component—from specialized lenses to unconventional sensor designs—is optimized to capture specific types of data.

Computational imaging systems employ innovative techniques that fundamentally transform how we capture visual information. Coded apertures use precisely designed patterns to encode depth and spatial data into images, while structured light projections map 3D surfaces by analyzing how projected patterns deform on objects. These systems also utilize time-of-flight sensors that measure light’s round-trip journey to calculate distances with millimeter precision. Advanced algorithms then decode this rich optical information, enabling capabilities like seeing in near-total darkness through photon-counting sensors or reconstructing hidden objects via transient imaging that tracks light’s subtle scattering patterns.
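Direct time-of-flight ranging reduces to d = c·t/2, because the light travels out and back. The short Python sketch below uses a simplified model that ignores pulse shape, detector jitter, and multipath. It also shows why millimeter precision is demanding: it requires picosecond-scale timing.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, exact by definition of the metre


def tof_distance(round_trip_s):
    """Distance to a target from the measured round-trip time of a light pulse."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def timing_resolution_for(depth_resolution_m):
    """Round-trip timing resolution needed to resolve a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT


print(tof_distance(10e-9))          # a 10 ns round trip is about 1.5 m
print(timing_resolution_for(1e-3))  # 1 mm of depth needs about 6.7 ps of timing
```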

The true power emerges when combining these techniques with modern processing. Depth-from-defocus methods analyze minute focus variations to build 3D maps, while AI-driven reconstruction algorithms can extract hidden details from seemingly noisy data. Together, they enable systems that don’t just record light but actively interpret it – whether distinguishing materials by their spectral signatures, detecting motions too fast for conventional cameras, or even peering around corners by analyzing indirect light paths. This represents a paradigm shift from passive photography to active computational vision.
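Depth from defocus rests on a simple forward model. Under a thin-lens assumption (1/f = 1/u + 1/v), an out-of-focus point at distance u spreads into a blur circle whose diameter depends on u, so measuring the blur constrains depth. The sketch below computes that blur and inverts it; the lens values are illustrative. One blur measurement generally gives two candidate depths, one in front of the focal plane and one behind it. This is why practical depth-from-defocus methods typically compare two or more images taken with different focus settings.

```python
def image_distance(f, u):
    """Thin-lens equation 1/f = 1/u + 1/v solved for the image distance v."""
    return 1.0 / (1.0 / f - 1.0 / u)


def blur_diameter(f, aperture, sensor_dist, u):
    """Blur-circle diameter on a sensor at sensor_dist for a point at distance u."""
    v = image_distance(f, u)
    return aperture * abs(sensor_dist - v) / v   # similar triangles of the light cone


def depth_candidates(f, aperture, sensor_dist, blur):
    """Invert the model: object distances that produce the given blur diameter."""
    candidates = []
    for sign in (1.0, -1.0):          # sensor_dist / v = 1 +/- blur / aperture
        inv_v = (1.0 + sign * blur / aperture) / sensor_dist
        inv_u = 1.0 / f - inv_v
        if inv_u > 0:                 # keep only real objects in front of the lens
            candidates.append(1.0 / inv_u)
    return candidates


# Illustrative values: 50 mm lens at f/2 (25 mm aperture), focused at 2 m.
f, aperture = 0.05, 0.025
sensor = image_distance(f, 2.0)
blur = blur_diameter(f, aperture, sensor, 1.0)   # a point at 1 m is defocused
print(blur)                                      # about 6.4e-4 m (0.64 mm)
print(depth_candidates(f, aperture, sensor, blur))
```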

The Technology Stack Powering the Revolution

Next-Generation Hardware Components

Modern computational imaging systems integrate several cutting-edge hardware innovations. Unconventional sensors like single-photon avalanche diodes and quantum image sensors provide unprecedented light sensitivity. Programmable optical elements including liquid lenses and deformable mirrors enable dynamic focus and aberration correction. Specialized AI accelerators process visual data in real time, while multi-sensor fusion architectures combine visual, thermal, and RF data streams for comprehensive environmental awareness.

Cutting-Edge Algorithmic Approaches

The software side features equally impressive innovations. Neural Radiance Fields (NeRFs) use machine learning to build detailed 3D scene representations from collections of 2D images. Differentiable rendering makes a physical model of light transport differentiable. Scene parameters such as shape and material can then be optimized by gradient descent until the rendered images match the captured ones, yielding more accurate scene reconstruction. Compressed sensing algorithms enable high-quality image reconstruction from minimal data samples, while event-based vision systems process only pixel-level changes for extreme computational efficiency.
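Event-based sensing is easy to misread as "frames with fewer pixels," so a sketch of the standard idealized event-camera model may help. In this model, each pixel compares its current log intensity with the level at its last event. It emits a signed event each time the difference crosses a contrast threshold; the 0.2 value used here is an arbitrary illustrative choice. Real sensors do this asynchronously in analog circuitry, and sampling from frames is only a simulation convenience.

```python
import math


def generate_events(frames, timestamps, threshold=0.2):
    """Convert intensity frames into (t, pixel, polarity) events.

    Idealized model: a pixel fires whenever its log intensity has moved by at
    least `threshold` since its last event, and its reference level then moves
    by one threshold step per event. Intensities must be positive.
    """
    ref = [math.log(v) for v in frames[0]]
    events = []
    for t, frame in zip(timestamps[1:], frames[1:]):
        for i, v in enumerate(frame):
            delta = math.log(v) - ref[i]
            while abs(delta) >= threshold:
                polarity = 1 if delta > 0 else -1
                events.append((t, i, polarity))
                ref[i] += polarity * threshold
                delta = math.log(v) - ref[i]
    return events


frames = [[1.0, 1.0, 1.0, 1.0],   # frame at t = 0 ms
          [1.0, 1.0, 2.0, 1.0]]   # frame at t = 1 ms: pixel 2 doubles in brightness
events = generate_events(frames, [0.0, 0.001])
print(events)  # three +1 events from pixel 2; static pixels produce no data
```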

The AI Revolution in Imaging

Modern computational imaging increasingly relies on machine learning to interpret complex optical data. Neural networks demonstrate remarkable abilities to reconstruct high-quality images from severely under-sampled data, separate meaningful signals from noise in low-light conditions, predict object motion from subtle visual cues, and extract material properties from spectral signatures. This AI integration creates systems that don’t just passively capture scenes but actively interpret and predict dynamic changes in real time.

Breakthrough Applications

Autonomous Systems That See the Unseeable

The transportation sector is experiencing transformative benefits from computational imaging. Autonomous vehicles now employ systems that detect pedestrians obscured by glare or fog with remarkable reliability. These systems track multiple objects simultaneously with high precision while predicting trajectories based on micro-movements invisible to human observers. Perhaps most impressively, they generate real-time 3D environmental maps without relying on bulky LIDAR systems. Tesla’s vision-only Autopilot exemplifies this shift, using neural networks to estimate depth from conventional camera feeds.

Medical Imaging Revolution

Healthcare is undergoing its own imaging transformation through computational techniques. Virtual biopsy systems can now determine tissue properties without physical samples, while advanced blood flow tracking visualizes circulation without contrast agents. Early disease detection systems spot microscopic cellular changes long before symptoms appear, and surgical guidance platforms provide real-time tissue analysis during operations. MIT researchers recently demonstrated a breakthrough system capable of tracking individual blood cells through capillaries, offering unprecedented views of microcirculation.

Security and Surveillance Advancements

Modern security systems combine multiple computational imaging capabilities for comprehensive situational awareness. Person re-identification algorithms recognize individuals across different camera angles and lighting conditions. Behavior prediction models anticipate actions from subtle body language cues. Through-wall sensing technologies detect movement behind obstacles, while material identification systems determine object composition at a distance. These integrated capabilities are transforming applications ranging from airport security to urban surveillance and search-and-rescue operations.

Overcoming Technical Hurdles

Despite remarkable progress, computational imaging faces significant challenges. The processing demands for real-time analysis require massive computational power that can limit deployment options. Training AI systems requires vast, diverse datasets that can be difficult to acquire. Fundamental physical limits imposed by diffraction and quantum effects create theoretical boundaries for certain applications. Perhaps most importantly, the interpretability of these complex systems remains challenging—understanding why they make specific decisions is crucial for critical applications.
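To put the diffraction limit in context, the Abbe criterion gives the smallest separation d_min that a conventional optical system can resolve. It depends on the numerical aperture NA and the wavelength λ. The numbers below are illustrative: green light and a high-end oil-immersion objective.

```latex
d_{\min} = \frac{\lambda}{2\,\mathrm{NA}}
\qquad\text{e.g.}\qquad
\frac{500\ \text{nm}}{2 \times 1.4} \approx 179\ \text{nm}
```

Super-resolution microscopy methods get past this bound only under specific conditions, such as with switchable fluorescent labels. For ordinary lens-based imaging, it remains a hard constraint.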

Recent Breakthroughs Pushing the Boundaries

Recent years have brought several landmark achievements in computational imaging that are redefining what is possible:

Ultra-Fast 3D Tracking with Single-Pixel Imaging

Researchers from Tsinghua University have developed a revolutionary 3D tracking method capable of monitoring fast-moving objects at unprecedented speeds. Led by Zihan Geng, the team’s single-pixel imaging approach achieves tracking rates of 6,667 Hz – more than 200 times faster than conventional video-based methods. This breakthrough eliminates the need for full image reconstruction, requiring just six bytes of storage and 2.4 µs computation time per 3D coordinate measurement. The system uses a digital micromirror device (DMD) operating at 20 kHz modulation rates to project geometric light patterns onto non-orthogonal planes, enabling compact system design while maintaining exceptional tracking precision without prior motion information.
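The quoted figures are internally consistent. A DMD running at 20,000 patterns per second that delivers 6,667 coordinates per second has about 20,000 / 6,667 ≈ 3 pattern slots per coordinate. Likewise, 6,667 Hz is roughly 222 times a standard 30 fps video rate. The patterns the team uses are not specified here. One well-known way to localize a target with a single detector and no image reconstruction is to project moment patterns, sketched below as a 2D illustration. Several details are assumptions, not details of the Tsinghua system: the three patterns (uniform, x-ramp, y-ramp), the dark background, and the ideal grey-level ramps.

```python
def moment_patterns(width, height):
    """Three hypothetical illumination patterns: uniform, x-ramp and y-ramp.

    A real DMD shows binary mirrors, so grey-level ramps like these would have
    to be approximated (for example by dithering); here they are ideal.
    """
    uniform = [[1.0] * width for _ in range(height)]
    x_ramp = [[float(x) for x in range(width)] for _ in range(height)]
    y_ramp = [[float(y)] * width for y in range(height)]
    return uniform, x_ramp, y_ramp


def bucket(scene, pattern):
    """Single-pixel reading: total light returned under one pattern."""
    return sum(s * p for srow, prow in zip(scene, pattern)
               for s, p in zip(srow, prow))


def track_centroid(scene):
    """Target centroid from three readings (m00, m10, m01), no image needed."""
    h, w = len(scene), len(scene[0])
    m00, m10, m01 = (bucket(scene, p) for p in moment_patterns(w, h))
    if m00 == 0:
        raise ValueError("no light returned; target not in the field of view")
    return m10 / m00, m01 / m00


# 8x8 dark scene with a bright 2x2 target covering x = 5..6, y = 1..2.
scene = [[0.0] * 8 for _ in range(8)]
for y in (1, 2):
    for x in (5, 6):
        scene[y][x] = 1.0
print(track_centroid(scene))  # (5.5, 1.5)
```

Each position estimate here costs three scalar readings and two divisions instead of a full frame. That is the kind of saving that makes microsecond-scale computation per coordinate plausible. Extending it to 3D requires a second view of the target.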

The non-orthogonal projection is key to the system’s practicality. By projecting geometric light patterns onto two non-orthogonal planes, the researchers generate the precise 3D coordinates needed for real-time object tracking. This arrangement reduces the overall system size and simplifies assembly and implementation, making high-speed tracking technology more accessible.

This advancement could significantly enhance autonomous vehicle perception, industrial quality control, and security surveillance systems while reducing hardware costs.

Non-Line-of-Sight Imaging Reaches Practical Applications

Stanford researchers demonstrated a real-time system called “Confocal Non-Line-of-Sight Imaging” that can reconstruct hidden objects from indirect light reflections with unprecedented clarity. Using advanced photon timing and novel reconstruction algorithms, their system achieved 2 cm resolution at 1 meter distances, enabling practical applications in search-and-rescue and autonomous vehicle navigation.

Single-Photon 3D Imaging Shatters Distance Records

A team at Stanford developed a quantum-inspired imaging system that captured detailed 3D information of subjects over 10 km away using just a single photon per pixel. This breakthrough, published in Nature Photonics, combines single-photon avalanche diodes with novel temporal compression algorithms, enabling long-range surveillance through atmospheric obscurants like fog and smoke.
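The basic per-pixel step in single-photon depth imaging can be sketched simply: histogram photon arrival times, pick the peak, and convert it to distance. This is a simplified baseline, not the temporal compression algorithms mentioned above. The photon counts, timing jitter, bin width, and target distance are illustrative assumptions. At the extreme of roughly one photon per pixel, a per-pixel histogram is too sparse to work alone. Such systems also exploit correlations between neighboring pixels.

```python
import random

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def depth_from_photons(arrival_times, bin_width, max_time):
    """Per-pixel depth from single-photon arrival times (seconds).

    Histogram the round-trip times, take the fullest bin as the laser return
    (ambient photons spread roughly evenly over all bins), and convert the bin
    centre to distance with d = c * t / 2.
    """
    n_bins = round(max_time / bin_width)
    counts = [0] * n_bins
    for t in arrival_times:
        k = int(t / bin_width)
        if 0 <= k < n_bins:
            counts[k] += 1
    peak = max(range(n_bins), key=counts.__getitem__)
    return SPEED_OF_LIGHT * (peak + 0.5) * bin_width / 2.0


# Illustrative simulation: target at 150 m, 1 ns bins, 2 us acquisition window.
rng = random.Random(0)
true_depth = 150.0
t_return = 2.0 * true_depth / SPEED_OF_LIGHT
signal = [rng.gauss(t_return, 0.2e-9) for _ in range(50)]      # laser echoes
ambient = [rng.uniform(0.0, 2e-6) for _ in range(500)]         # background light
estimate = depth_from_photons(signal + ambient, 1e-9, 2e-6)
print(f"estimated depth: {estimate:.2f} m")
```

With 1 ns bins, the depth estimate is quantized to about 15 cm. Finer timing or fitting the peak shape would sharpen it.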

Neural Holography Enables Real-Time 3D Displays

Researchers at NVIDIA and Harvard unveiled a neural holography system that uses deep learning to calculate optimal wavefront patterns at 60 fps, enabling the first real-time 3D holographic displays with correct focus cues. This overcomes a decades-old challenge in holography by using AI to solve the complex inverse problem in milliseconds rather than hours.

Ultrafast Spectral Imaging for Material Analysis

A Caltech team created a hyperspectral imaging system that captures full spectral data (128 bands) at 1 million frames per second. Their “Compressed Ultrafast Spectral Photography” (CUSP) technique, detailed in Science Advances, allows real-time material analysis of fast-moving objects – from detecting chemical compositions in explosions to analyzing biological processes at cellular scales.

Edge-AI Cameras with On-Sensor Processing

Samsung and Sony have both announced new image sensor architectures that perform neural network processing directly on the sensor chip. Sony’s IMX7000 series can run object detection and tracking algorithms at the pixel level while consuming just 10 mW, enabling always-on smart cameras with year-long battery life.
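The power figure translates directly into an energy budget. Assume the sensor draws the quoted 10 mW continuously for a full year:

```latex
E = P\,t = 0.010\ \text{W} \times (365 \times 24)\ \text{h}
  = 0.010\ \text{W} \times 8760\ \text{h} = 87.6\ \text{Wh}
```

That is a sizeable battery for a small camera. Year-long operation in practice therefore implies either a correspondingly large battery or an average draw well below 10 mW, for example through duty-cycling.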

These advances collectively demonstrate how computational imaging is transitioning from laboratory curiosities to practical technologies with real-world impact across industries. The convergence of novel optical designs, advanced sensors, and specialized AI algorithms continues to push the boundaries of what imaging systems can achieve. The Tsinghua team’s ultra-efficient tracking method particularly stands out for its potential to democratize high-speed 3D sensing across multiple application domains while dramatically reducing computational overhead.

The Road Ahead

Future developments promise to push boundaries even further. Quantum imaging techniques may use entanglement phenomena to surpass classical resolution limits. Neuromorphic sensors could mimic biological vision systems for unprecedented efficiency. Edge AI implementations will move more processing directly into camera hardware for faster response times. As these technologies advance, robust ethical frameworks will be essential to guide responsible use of increasingly powerful tracking and sensing capabilities.

Conclusion: A New Visual Paradigm

The transformation from traditional pixel-based imaging to computational imaging represents a paradigm shift in how we perceive and interact with the world. Techniques such as single-pixel imaging and non-orthogonal light projection are building systems that are faster, more efficient, and remarkably adaptable. This revolution is not confined to a single industry but is set to impact autonomous vehicles, industrial robotics, security systems, and beyond.

The computational imaging revolution represents more than incremental improvement—it redefines the very nature of machine perception. By treating light as a rich information carrier rather than simple illumination, these systems are realizing capabilities that recently existed only in science fiction. As the technology matures, cameras will evolve from passive recording devices to intelligent sensing platforms that actively understand, predict, and interact with their environments.

For businesses and researchers, this transformation presents both extraordinary opportunities and significant challenges. Organizations that successfully adopt these new paradigms will gain unprecedented insights into their operations, products, and customers. Those slow to adapt risk being left behind as visual information technology undergoes its most radical transformation since the invention of photography. The future of imaging has arrived, and it sees far beyond the pixel.

International Defense Security & Technology Your trusted Source for News, Research and Analysis

International Defense Security & Technology Your trusted Source for News, Research and Analysis