As artificial intelligence (AI) continues to reshape our digital landscape, the demand for energy-efficient AI chips in mobile devices has never been greater. The growing reliance on mobile technology for tasks ranging from basic communication to complex data analysis necessitates innovations that balance performance with energy consumption. In this blog post, we will explore the significance of energy-efficient AI chips, their impact on mobile devices, and the future trends shaping this exciting field.

The Importance of Energy Efficiency

Mobile devices, including smartphones and tablets, have become essential tools in our daily lives. With features like voice assistants, real-time translation, image recognition, and personalized recommendations, AI capabilities are increasingly integrated into these devices. However, as AI algorithms become more complex, they require significant processing power, which can lead to increased energy consumption.

Energy-efficient AI chips matter for three main reasons: battery life, thermal management, and sustainability. Battery life is the most visible benefit. Mobile users increasingly expect a device to last a full day without recharging, and AI features that run continuously, such as voice assistants, add to the load. A chip that completes the same AI workload with less energy lets users keep their smartphones, tablets, and other devices running longer, which matters most to people who rely on them for work, communication, and entertainment throughout the day.

Another critical aspect is thermal management. High-performance chips generate significant heat under sustained load, which can trigger thermal throttling: the device lowers its performance to avoid overheating, and the user experience suffers. Because nearly all the electrical energy a chip consumes is ultimately dissipated as heat, a more energy-efficient design produces less heat for the same work. Devices stay comfortable to hold and can sustain peak performance for longer before throttling.

Finally, the increasing emphasis on sustainability makes energy-efficient AI chips even more vital. As environmental concerns grow, so does the demand for sustainable technology. Energy-efficient chips lower the carbon footprint of devices by reducing the electricity they draw over their lifetime. They can also help reduce electronic waste: a device that consumes less energy needs fewer charge cycles, which slows battery wear and may extend the device’s useful life.

In summary, energy-efficient AI chips are crucial not only for improving user experience through longer battery life and better thermal management but also for supporting sustainability initiatives in the tech industry.

Innovations in AI Chip Design

Innovations in AI chip design are transforming the landscape of energy efficiency, particularly for mobile devices. One of the most significant advancements is the development of Application-Specific Integrated Circuits (ASICs). These custom-designed chips are tailored for specific applications, such as AI processing, enabling them to achieve higher performance per watt than general-purpose processors. By optimizing the chip’s architecture for particular tasks, ASICs can effectively deliver the computational power needed for AI applications while minimizing energy consumption.

Another approach is neuromorphic computing, which draws inspiration from the structure and function of the human brain. Neuromorphic chips are designed to mimic biological neural networks, often by communicating through discrete spikes rather than continuous values. Their architectures are highly parallel and event-driven: a circuit does work only when an input event arrives, so idle parts of the chip consume very little power. This can significantly reduce energy consumption for sparse, sensor-driven workloads, which makes the approach attractive for mobile devices.
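
To make the event-driven idea concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron, a simplified model widely used in neuromorphic research. It is an illustration only, not the design of any particular chip. The function name and the `threshold`, `leak`, and `weight` values are assumptions chosen for the example.

```python
def lif_spike_times(input_events, threshold=1.0, leak=0.9, weight=0.4):
    """Leaky integrate-and-fire neuron driven by a sparse list of input events.

    input_events: sorted integer time steps at which an input spike arrives.
    Returns the time steps at which the neuron itself fires.
    All parameter values are illustrative assumptions.
    """
    potential = 0.0
    last_t = None
    fired_at = []
    for t in input_events:
        if last_t is not None:
            # Apply the leak for all idle steps at once; nothing is
            # computed during the steps where no input arrived.
            potential *= leak ** (t - last_t)
        potential += weight
        if potential >= threshold:
            fired_at.append(t)
            potential = 0.0  # reset after firing
        last_t = t
    return fired_at


# Seven input events spread over 54 time steps: the loop body runs
# seven times, not 54, because silent steps cost no work.
events = [0, 1, 2, 50, 51, 52, 53]
print(lif_spike_times(events))
```

The point of the sketch is the loop structure: the work done scales with the number of input events rather than with elapsed time, which is where event-driven hardware saves energy when inputs are sparse.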

Field-Programmable Gate Arrays (FPGAs) also play a role in energy-efficient AI hardware. FPGAs are notable for their flexibility: their logic can be reconfigured after manufacturing, so developers can tailor the hardware to a specific workload and change it as models evolve. A configuration matched to the task can use less energy than running the same task on a general-purpose processor, although an FPGA is typically less efficient than an ASIC built for that task. This makes FPGAs well suited to prototyping and to AI applications whose requirements change over time.

In addition to hardware innovations, the development of low-power machine learning algorithms is essential for improving energy efficiency. Researchers are focusing on creating machine learning techniques that require fewer resources, enabling AI models to run efficiently on less powerful hardware. These advances in algorithm design complement the innovations in chip architecture, ensuring that AI applications can operate effectively while consuming less energy. Together, these approaches are paving the way for a new generation of energy-efficient AI chips, making them increasingly viable for mobile devices and beyond.

Recent Breakthroughs in Energy-Efficient AI Chips

The growing demand for AI-powered devices, from smartphones to expansive data centers, has intensified the quest for energy-efficient AI chips. Recent advancements in chip design and materials science are paving the way for a new generation of AI hardware that delivers high performance while minimizing power consumption. This evolution is essential not only for enhancing device efficiency but also for addressing the environmental challenges associated with increased energy usage in technology.

One of the most notable advancements lies in chip architecture. Neuromorphic designs, which mimic neural processing in the brain, save energy by processing data only when there is activity to respond to. Sparse neural networks, which activate only a subset of neurons at any given time, reduce both computational complexity and energy consumption, because hardware can skip the operations whose inputs are zero. Approximate computing techniques go a step further by tolerating a small degree of error in calculations, which yields substantial energy savings in applications where perfect accuracy is not essential.
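
As a rough illustration of why sparsity saves work, the following Python sketch computes a dot product while skipping zero activations and counts how many multiply-accumulate operations were actually performed. The function name and the sample values are hypothetical. Real accelerators implement this skipping in hardware rather than in a software loop.

```python
def sparse_dot(weights, activations):
    """Dot product that skips zero activations.

    Returns (result, macs), where macs is the number of
    multiply-accumulate operations actually carried out.
    """
    total = 0.0
    macs = 0
    for w, a in zip(weights, activations):
        if a == 0:
            continue  # an inactive neuron contributes nothing, so skip it
        total += w * a
        macs += 1
    return total, macs


# Hypothetical layer input in which half of the neurons are inactive.
weights = [0.5, -1.0, 2.0, 0.25]
activations = [0, 3.0, 0, 4.0]
result, macs = sparse_dot(weights, activations)
print(result, macs, "of", len(weights), "possible operations")
```

Each skipped operation is energy not spent, so the saving grows with the fraction of inactive neurons.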

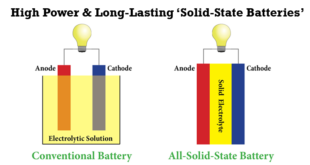

Innovative materials also play a crucial role in enhancing the energy efficiency of AI chips. Two-dimensional materials, such as graphene and transition metal dichalcogenides (TMDs), are only one or a few atoms thick, and their electrical properties can be exploited to build highly efficient transistors. These materials could enable faster and more energy-efficient chip designs, although most such devices remain at the research stage. Meanwhile, spintronics, which uses the spin of electrons rather than only their charge to store and process information, offers another promising path to lower power consumption without sacrificing performance.

In addition to architectural and material innovations, novel memory technologies contribute to energy efficiency. Phase-change memory (PCM) is non-volatile, so it retains data without power, and it offers fast reads with better endurance than flash memory, which makes it attractive for AI applications in energy-sensitive environments. Similarly, memristors store information in their resistive state and can support computation close to where the data is stored, reducing the need for frequent data transfers between memory and processing units. Because moving data often costs more energy than computing on it, minimizing data movement lowers energy consumption and improves overall efficiency.

Optimized algorithms further enhance the energy efficiency of AI systems. Quantization reduces the numerical precision of the weights and activations in a neural network. Storing weights as 8-bit integers instead of 32-bit floating-point values, for example, cuts their memory footprint by a factor of four and allows cheaper integer arithmetic. Pruning removes connections that contribute little to the network’s output, which reduces the number of operations per inference. Both methods trade a small, usually acceptable loss of accuracy for models that run well on energy-constrained hardware.
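
The two techniques can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions: symmetric per-tensor quantization to the int8 range and pruning by weight magnitude. Production frameworks use more elaborate schemes. All function names and sample weights here are hypothetical.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integer codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from integer codes."""
    return [c * scale for c in codes]


def prune_by_magnitude(values, fraction):
    """Set the given fraction of smallest-magnitude weights to zero."""
    k = int(len(values) * fraction)
    smallest = sorted(range(len(values)), key=lambda i: abs(values[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else v for i, v in enumerate(values)]


# Hypothetical weights for illustration only.
weights = [0.02, -1.27, 0.5, -0.01, 0.9, 0.03]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 codes:", codes)
print("worst rounding error:", worst_error)

print("pruned:", prune_by_magnitude(weights, fraction=0.5))
```

Each integer code fits in one byte instead of the four bytes of a 32-bit float, and every pruned weight is a multiplication the hardware no longer has to perform.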

In conclusion, breakthroughs in energy-efficient AI chips are enabling broader deployment of AI across many applications, from edge devices to large-scale data centers. By addressing the energy challenges associated with AI, these advancements contribute to a more sustainable future. As research and innovation continue, we can expect further gains in energy efficiency and AI solutions that balance technological advancement with environmental responsibility.

Chinese Scientists Unveil World’s Most Energy-Efficient AI Chips for Mobile Devices

At the forefront of innovation in artificial intelligence, Chinese scientists have introduced two groundbreaking low-power AI chips at a prestigious conference in the chip design industry. These chips not only push the boundaries of performance but also set new records in energy efficiency, addressing the increasing demand for AI capabilities in mobile devices.

The first chip is designed for offline voice control, optimized for use in smart devices. It excels in keyword spotting and speaker verification, effectively recognizing voice signals even amidst environmental noise such as background music or conversations. Traditional voice recognition chips often struggle with high energy consumption during wake-up periods and frequently experience false activations, leading to inefficiencies. To overcome these challenges, the research team, led by Professor Zhou Jun from the University of Electronic Science and Technology of China (UESTC), implemented a novel architecture that features dynamic computation engines, adaptive noise suppression circuits, and an integrated keyword and speaker recognition system. This chip achieves an energy consumption of less than two microjoules per recognition instance, with an impressive accuracy rate of over 95% in quiet conditions and 90% in noisy environments. Its compact design, fitting within a 1 cm² area, makes it suitable for various applications, including smart homes, wearable devices, and smart toys.

The second chip developed by the team focuses on detecting seizures in individuals with epilepsy. Intended for use in wearable devices, it analyzes electroencephalogram (EEG) signals to identify seizures and alert users to seek immediate medical assistance. Traditional designs require extensive seizure data for training, which is often time-consuming and costly to collect. To address this issue, the researchers optimized a zero-shot retraining algorithm, enabling the pre-trained AI model to make accurate predictions on unseen data without extensive seizure signal collection. The reported accuracy exceeds 98%. The chip’s average energy consumption per recognition is approximately 0.07 microjoules, reportedly making it the most energy-efficient design of its kind in the world. It also shows potential for applications beyond seizure detection, including brain-computer interfaces and sleep monitoring.
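
To put these figures in perspective, the short Python sketch below converts energy per recognition into recognitions per joule and into average power at a given recognition rate. The two energy values come from the figures quoted above; the rate of 10 recognitions per second is a hypothetical value chosen for illustration. The calculation covers recognition energy only and ignores sensors, memory, and idle power.

```python
def recognitions_per_joule(energy_per_recognition_uj):
    """Number of recognitions one joule pays for, given microjoules each."""
    return 1e6 / energy_per_recognition_uj


def average_power_microwatts(energy_per_recognition_uj, recognitions_per_second):
    """Average power spent on recognition alone, in microwatts."""
    return energy_per_recognition_uj * recognitions_per_second


VOICE_CHIP_UJ = 2.0     # upper bound: "less than two microjoules"
SEIZURE_CHIP_UJ = 0.07  # approximate average quoted for the EEG chip

print(recognitions_per_joule(VOICE_CHIP_UJ))
print(recognitions_per_joule(SEIZURE_CHIP_UJ))

# Hypothetical rate of 10 recognitions per second.
print(average_power_microwatts(VOICE_CHIP_UJ, recognitions_per_second=10))
```

At no more than two microjoules each, one joule covers at least 500,000 voice recognitions, and at about 0.07 microjoules each it covers roughly 14 million seizure-detection recognitions.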

These advancements highlight the growing capabilities of AI chips, positioning them as critical components in the evolution of mobile technology. With significant reductions in energy consumption and enhanced functionality, these new chips promise to expand the reach of AI applications while fostering a more sustainable future.

The Future of Energy-Efficient AI Chips

The future of energy-efficient AI chips for mobile devices looks promising, driven by several key developments. One is materials science. New materials, such as graphene and other two-dimensional substances, could make chips faster and more efficient, significantly reducing power consumption while improving overall performance.

Another important trend is the closer integration of AI algorithms and hardware. When algorithms and hardware are designed together, chips can allocate resources according to workload demands, for example by running at full speed only when a demanding task requires it and dropping to a low-power state otherwise. This dynamic approach lets devices perform complex tasks without draining their batteries quickly, making on-device AI more sustainable and user-friendly.
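
One widely used mechanism for matching energy use to workload is dynamic voltage and frequency scaling, which is offered here as an illustration rather than something the sources above describe. The Python sketch below uses the standard approximation for the switching power of CMOS logic, P = activity × C × V² × f. The capacitance, voltages, and frequencies are hypothetical values, not measurements from any real chip, and leakage power is ignored.

```python
def dynamic_power_watts(capacitance_f, voltage_v, frequency_hz, activity=1.0):
    """Approximate CMOS switching power: activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


def task_energy_joules(cycles, capacitance_f, voltage_v, activity=1.0):
    """Switching energy for a fixed number of clock cycles.

    Running time is cycles / f, so frequency cancels out and the energy
    depends on the square of the supply voltage.
    """
    return activity * capacitance_f * voltage_v ** 2 * cycles


C = 1e-9  # hypothetical effective switched capacitance, in farads

# Two hypothetical operating points: a fast one and a slower, lower-voltage one.
fast = dynamic_power_watts(C, voltage_v=1.0, frequency_hz=2e9)
slow = dynamic_power_watts(C, voltage_v=0.8, frequency_hz=1e9)
print("power at fast point (W):", fast)
print("power at slow point (W):", slow)

# Energy for the same one-billion-cycle task at each voltage.
e_fast = task_energy_joules(1e9, C, voltage_v=1.0)
e_slow = task_energy_joules(1e9, C, voltage_v=0.8)
print("energy ratio slow/fast:", e_slow / e_fast)
```

Under these assumptions, lowering the voltage from 1.0 V to 0.8 V cuts the switching energy of a fixed task to about 64 percent of its original value, which is why running light workloads at a lower operating point saves battery.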

Finally, progress will depend heavily on collaboration. Hardware manufacturers, software developers, and researchers will need to work together to create solutions that prioritize energy efficiency while delivering cutting-edge AI capabilities. By sharing insights and aligning goals, these stakeholders can accelerate the development of technologies that meet the growing demand for sustainable mobile devices.

Conclusion

Energy-efficient AI chips are poised to transform the landscape of mobile technology, enabling advanced features while addressing critical concerns about battery life and sustainability. As the demand for AI integration in mobile devices continues to rise, innovations in chip design will play a crucial role in shaping the future of mobile computing. By investing in energy-efficient solutions, we can enhance user experiences, support sustainable practices, and pave the way for a smarter, more connected world.

References and Resources also include:

https://www.scmp.com/news/china/science/article/3256211/chinese-scientists-create-worlds-most-energy-efficient-ai-chips-mobile-devices

International Defense Security & Technology Your trusted Source for News, Research and Analysis
