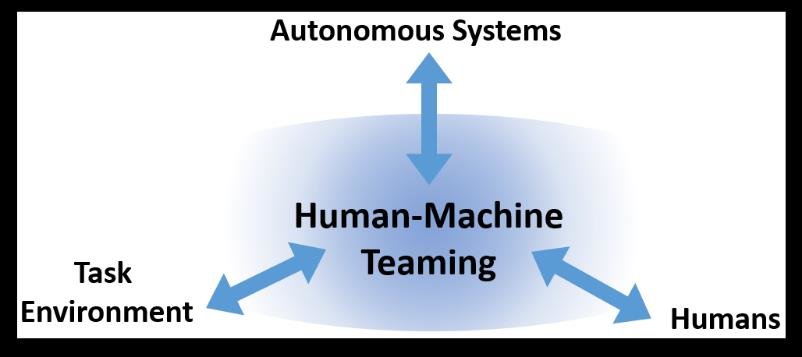

Interactions with technologically sophisticated artificial intelligence (AI) agents are now commonplace. We increasingly rely on intelligent systems to extend our human capabilities, from chatbots that provide technical support to virtual assistants like Siri and Alexa. Examples of such systems include air traffic control, aircraft cockpits, chemical processing, and the power industry. One current focus is the development of such systems for large robotic teams (10 or more robots).

Machines can possess sensory (infra-red, FLIR, sonar, etc.) and motor (flying, fast land travel, precision surgery, etc.) capabilities that humans do not possess. They can also perform tasks over and over again without becoming bored or fatigued, maintaining a level of vigilance that would be difficult for a human. In some cases, they can communicate and access resources (such as data on the web) faster and more precisely than a human, and they can possess computational capabilities (such as complex mathematical calculations and data analysis) that are beyond those of humans. A machine can also be used in dangerous or extreme environments without risk of loss of life.

Development of autonomous machines that use such capabilities to collaborate with human partners can have a transformative effect across many commercial and military applications.

Human-to-human teaming involves multiple individuals banding together in pursuit of a common goal. Sports teams, music groups, non-profits, business units, and small-scale military organizations are common examples of situations where multiple individuals work together, not in the service of a single individual, but in the service of the team.

However, today’s intelligent machines are essentially tools, not teammates. They require the undivided attention of a human user. At best, these technologies extend human capabilities, but their communicative and cognitive capabilities remain inadequate for them to act as useful and trusted teammates.

To become true teammates, intelligent machines will need to be flexible and adaptive to the states of the human teammate, as well as the environment. They will need to intelligently anticipate their human teammate’s capabilities and intentions, and to generalize specific learning experiences to entirely new situations.

In military training, autonomous AI systems (virtual agents) have been used not only to populate the battlespace with friendly and enemy units, but also as simulated co-pilots, members of nautical maintenance staff, and virtual humans. Most of these teaming situations have involved interactions with a human during execution of a constrained task through restricted natural language.

“Intelligent agents are rapidly becoming more prevalent for many applications and are expected to work in conjunction with humans to extend the team capability,” said Dr. J. Cortney Bradford, research scientist, U.S. Army Combat Capabilities Development Command, known as DEVCOM, Army Research Laboratory. “For optimal performance of the team, the human and agent must rapidly adapt to each other as well as the changing operational dynamics and objectives.” Understanding the mechanisms and factors that predict and shape mutual and stable adaptation between humans and adaptive agents across time is critical, she said.

Human-Machine Teaming Research

Human-Machine Teaming Research focuses on the human factors and software engineering aspects of integrating such teams while also incorporating artificial intelligence techniques in order to provide intelligent information and interaction capabilities. This work requires the development of interfaces that rely on artificial intelligence but also provide intuitive interaction capabilities beyond the standard graphical user interface, computer keyboard, and mouse. Examples of multimodal interaction capabilities include eyeglass displays, speech, gesture recognition, and other novel interfaces.

Key research challenges for human-machine teaming

A group of humans and machines collaborate on a common task, such as preparing a meal. The members of the team use communication to agree on the task for the team (what meal to prepare), to divide the task into subtasks (who cooks which dish), and to coordinate joint activities (emptying a large pot into a serving dish). Communication is also used to inform teammates of individual intentions, such as when a teammate will need a specific utensil or pot. The creation of a joint understanding of the situation is called establishing “common ground.”

Each team member maintains a representation of their own beliefs, desires, and intentions (labeled “self” in the diagram), but also uses communication and perception to maintain a representation of the team’s goals and plans (“team”), as well as models of teammates (“teammate”), highlighted in red for both the human and the machine.
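The three representations described above can be sketched as a small data structure. This is only an illustrative sketch: the class names `MentalModel` and `TeamMember`, the method names, and the meal-preparation messages are hypothetical and do not come from any system mentioned in this article.

```python
from dataclasses import dataclass, field


@dataclass
class MentalModel:
    """Beliefs, desires, and intentions held about one agent or about the team."""
    beliefs: dict = field(default_factory=dict)
    desires: set = field(default_factory=set)
    intentions: list = field(default_factory=list)


@dataclass
class TeamMember:
    """A human or machine teammate (hypothetical structure)."""
    name: str
    self_model: MentalModel = field(default_factory=MentalModel)   # "self"
    team_model: MentalModel = field(default_factory=MentalModel)   # "team"
    teammate_models: dict = field(default_factory=dict)            # "teammate"

    def agree_on_goal(self, goal, teammates):
        """Establish common ground: every member stores the same team goal."""
        for member in [self, *teammates]:
            member.team_model.desires.add(goal)

    def announce_intention(self, intention, teammates):
        """Record an intention locally and communicate it to each teammate."""
        self.self_model.intentions.append(intention)
        for mate in teammates:
            mate.hear_intention(self.name, intention)

    def hear_intention(self, sender, intention):
        """Update the model of the sender from a received message."""
        model = self.teammate_models.setdefault(sender, MentalModel())
        model.intentions.append(intention)


human = TeamMember("human")
robot = TeamMember("robot")
human.agree_on_goal("prepare dinner", [robot])
robot.announce_intention("use the large pot", [human])
```

After these two messages, both members hold the same team goal, and the human's model of the robot records that the robot intends to use the large pot.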

Human Capabilities: Natural Intelligence

This research seeks to better understand human cognitive capabilities in the context of complex and dynamic situations. Areas of interest include the ability to create mental representations of situations (“mental models”), of the goals and intentions of other people (“theory of mind”), and of shared knowledge with a communication partner (“common ground”). Robust learning is another key human capability that is central to human-machine teams. Humans can learn information from single events (“episodic memory”) to make predictions and generalizations in new situations and to build knowledge about situations and events that can guide reasoning and deductive inferences (“common sense”).

Human Models of Machines

This research seeks to understand what humans must know and learn about machines and their internal structure in order to interact with them effectively, including what is required in human-machine teaming to establish and maintain trust. From the machine design perspective, we will also want to understand how to make machines easier to understand and work with. We have already seen the challenge posed by the opacity of some machine learning techniques, where it is difficult for a human to understand why a machine makes a specific decision. A continuing challenge will be to create systems that are both competent and able to explain their behavior. These are fundamentally questions of psychology and neuroscience, but addressing them requires research in intelligent machines.

Machine Capabilities: Artificial Intelligence

This research seeks to improve intelligent machine capabilities in order to enable effective human-machine teams. One of our challenges is developing a science of teaming where we gain an understanding of the additional capabilities that a machine needs to be an effective teammate in the relevant tasks and environments.

These capabilities include:

Perception and Motor Control. To support joint activities, the machine must have perceptual and motor capabilities sufficient to create an internal model of the environment and act on it.

Communication. An obvious enabler of effective teaming is communication. Communication includes not only language but also gestures and even the interpretation of emotional expression. Even with the rise of personal assistants and the seeming ubiquity of AI systems that process language, supporting general language understanding and production is still beyond the state of the art.

Reasoning, Problem Solving, Planning, Common Sense, Task Expertise. A machine teammate must also have the requisite cognitive capabilities to perform its tasks and coordinate with its teammates.

Learning. Although much of a machine teammate’s knowledge can be defined offline, a machine teammate may need to learn dynamically from its environment, both to improve its task performance and to improve its model of its human teammates so that it can better anticipate their goals, beliefs, actions, and interactions.

Integrated Architectures. Beyond individual components, an ongoing research challenge is how these components work together to create coherent, effective behavior for complex problems that involve many different types of reasoning and problem solving.

Machine Models of Humans

This research seeks to establish the internal representations and processing that a machine needs for reasoning about human teammates. A machine’s model of itself and a model of the team structure are also important. The first challenge is understanding which aspects of human minds machines need to model, and to what fidelity, given the demands of the tasks. Furthermore, the machine design might be improved by modeling the limitations of human cognition and brain function. For instance, machines could tailor delivery of information and decision options based on inferences about a human teammate’s attentional capacity and emotional state.

Teamwork adds extra components, such as understanding joint attention, theory of mind, and perspective taking. Although these are active areas in AI for building machine intelligence, the vast majority of work on modeling human abilities comes from the cognitive sciences: cognitive psychology, cognitive neuroscience, linguistics, etc.

Positive feedback loops drive progress in all aspects of human-machine teaming research. Advances in our understanding of human capabilities guide advances in machine capabilities, which lead to better teaming experiments. Results from teaming experiments improve human models of machines and machine models of humans, and the cycle continues.

Army study looks at how autonomous tech adapts to Soldiers

The study’s goal is to uncover brain signals, muscle signals, movement profiles and walking performance metrics that can track the state of humans as they interact with the exoskeleton. “This will both improve our understanding of how humans adapt to intelligent systems, but also these signals carry information that could be used to help train the agent,” Bradford said. “These signals could give the exoskeleton a better understanding of the human at any moment so that it can make better decisions on how to assist the human.”

The device, known as the Dephy ExoBoot, gives the user assistance at the ankle joint while performing challenging physical tasks, such as walking with a heavily loaded rucksack. For healthy adults, walking is a relatively easy task, but for a Soldier carrying a heavy load, the benefit comes from help in conserving energy, Bradford said. The ExoBoot is not directly controlled by the user or any other human operator. It has an internal computer that serves as a controller that must figure out on its own how and when to assist the person wearing the ExoBoot. The device uses a combination of mechanical sensors, models of human behavior and machine learning to determine things like how and when to provide assistance to the user.

For example, the current version only provides assistance during walking, so it must determine whether or not the user is walking as opposed to running, skipping or dancing, then determine where the user is in their walking pattern to appropriately time assistance. Timed assistance is based on gait changes brought on by user fatigue, walking speed, load carriage or even terrain change, Bradford said.
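The two decisions described above, whether the user is walking and where they are in the walking pattern, can be illustrated with a toy controller. This is a minimal sketch under stated assumptions, not the ExoBoot's actual algorithm: the function names, the stride-duration thresholds, and the 40 to 60 percent assistance window are all hypothetical values chosen for illustration.

```python
def classify_activity(stride_times, walk_range=(0.8, 1.4), max_jitter=0.15):
    """Label recent motion as 'walking' or 'other' from stride durations (s).

    The thresholds are illustrative assumptions, not values from the study.
    """
    if len(stride_times) < 3:
        return "other"  # not enough evidence of a steady pattern
    mean = sum(stride_times) / len(stride_times)
    jitter = max(stride_times) - min(stride_times)
    if walk_range[0] <= mean <= walk_range[1] and jitter <= max_jitter:
        return "walking"
    return "other"


def gait_phase(now, last_heel_strike, stride_duration):
    """Fraction of the current stride completed, in [0, 1)."""
    return ((now - last_heel_strike) / stride_duration) % 1.0


def assist_command(now, heel_strikes, window=(0.40, 0.60), peak_torque=1.0):
    """Return a normalized ankle torque.

    Torque is nonzero only while the user is classified as walking and the
    gait phase lies inside the assumed assistance window.
    """
    strides = [b - a for a, b in zip(heel_strikes, heel_strikes[1:])]
    if classify_activity(strides) != "walking":
        return 0.0
    stride_duration = sum(strides) / len(strides)
    phase = gait_phase(now, heel_strikes[-1], stride_duration)
    return peak_torque if window[0] <= phase < window[1] else 0.0


walking_strikes = [0.0, 1.0, 2.0, 3.0]     # one heel strike per second
running_strikes = [0.0, 0.35, 0.7, 1.05]   # strides too short for walking
mid_stride = assist_command(3.5, walking_strikes)
early_stride = assist_command(3.1, walking_strikes)
while_running = assist_command(1.2, running_strikes)
```

Because the stride duration is re-estimated from recent heel strikes, the timing in this sketch shifts as the user's walking speed changes. A real controller would combine many more sensors and learned models, as the article notes.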

DEVCOM’s acting command sergeant major said Soldier input shapes Army science to ensure that it is applicable to future requirements. “It’s the real-world experience that Soldiers bring to bear,” said Sgt. Maj. Luke Blum, who is also DEVCOM ARL’s senior enlisted leader. “While ARL researchers try to anticipate the requirements that a Soldier may have, without field experience, we may fall short in that arena. This could be anything to the practical use of equipment, the employment of field tactics to considerations that go into planning an operational engagement.”

Researchers placed more than 40 reflective dots on various parts of participants’ bodies to measure how participants move, using the lab’s optical motion tracking system. Researchers also attached 128 dual-layer electroencephalography (EEG) electrodes, developed within the Cognition and Neuroergonomics Collaborative Technology Alliance, to participants’ scalps using a cap that looks like a swim cap to measure brain signals. These EEG sensors have enabled high-fidelity neuroimaging during locomotion in complex scenarios such as walking while learning to use an adaptive exoskeleton.

After a few quality checks to ensure the sensors worked, researchers invited participants to walk on the instrumented treadmill located in the Soldier Performance and Equipment Advanced Research Facility. Participants walked for about an hour both with and without assistance from the ExoBoot for comparison. This work is part of a larger ARL research focus area to integrate humans and artificial intelligence to enable teams that can survive and function in complex environments.

US Army is researching technologies to anticipate individuals’ behaviour as part of an effort to boost manned-unmanned teaming

The research looks ahead to the future battlefield, said Dr Jean Vettel, a senior neuroscientist within ARL. In the future, humans and autonomous systems will be expected to increasingly work together on complex tasks. The speed and efficiency of such teaming can be enhanced if the artificial intelligence (AI) associated with the autonomous systems can anticipate the decisions or behaviour of their human partners, she told Jane’s.

For example, Vettel said AI could help determine geographic areas in which to conduct surveillance. Current algorithms are designed with certain assumptions and have rigid rules about whether or not to include a particular image in a final set that is presented to a human operator. That process, she said, could be enhanced if the AI was aware of the task that its human partners were trying to complete, since this would enable the algorithm to be flexible and adapt to the human.

ARL is conducting wide-ranging research into ways of streamlining this process, making it easier for these various components to collaborate. This latest study is “one small sliver” of that work, Vettel said. Essentially, ARL is seeking to develop a military equivalent of the autocomplete function found in mobile phones or on e-mail applications, which can suggest the end of a sentence after the first words are typed.

The work is part of a broader research programme on adaptive technologies being pursued by the Combat Capabilities Development Command, Army Research Laboratory (CCDC ARL). Research into this ‘autocomplete’ aspect of the work was conducted with the University at Buffalo (UB), New York, as well as several other universities and research facilities, and was recently published in the journal Science Advances.

However, there are unique challenges in the military domain. Google can base its technology on massive reams of data and billions of interactions, but military users tend to operate in niche areas with comparatively sparse data. “We still have to find ways in which we can have the technology be able to use the physiology of the soldiers to help them perform missions,” Vettel said.

Accordingly, CCDC ARL’s study focused on investigating how different brains process information, with the goal of developing models that can predict a person’s behaviour. Individuals use different brain circuits in varying combinations, even when completing the same tasks, said Vettel. These different brain circuits are analogous to different routes that can be taken to navigate to a geographic location; individuals select which route to take based on idiosyncratic elements, like past experience of traffic, personal preference, and errands that need to be completed en route.

Neuroimaging enables researchers to measure brain activity and understand how different brain regions are involved and used in different tasks, from language production to problem solving to memory. In general, different regions specialise in different things, although these regions work together constantly. For example, while a certain brain region could be key to producing speech, that activity also relies on other brain regions that store knowledge of things that have been learned, such as facts or vocabulary, Vettel explained.

Therefore, there are different combinations, or networks, of brain regions that work in concert with each other when completing certain tasks. Neuroscience typically looks at what is common across large groups of people, Vettel said, focusing on the average brain regions that most humans use for certain tasks. However, separate individuals use different networks of their brains for certain tasks, she added. The aim of ARL’s recent work with UB and other institutions was to “give a better insight into how these different brain networks co-ordinate with one another to support task performance, with the idea that if we can understand how the co-ordination of networks varies between people, we would be better able to predict the changes in performance that people experience”.

The goal of the research from a military perspective is to develop technologies and frameworks that can account for the differences in brain activity between individual soldiers. ARL is looking at adaptive technologies that attempt to account for fluctuations in human performance. Data from large groups is unlikely to predict these daily fluctuations in soldier performance, so it is essential to focus specifically on individuals, she said, and this is a major hurdle to achieving the autocomplete goals.

“Whenever the brain regions are slightly different between people, we want to see if we can find a method that can capture that variability,” she said.

As part of the study, the researchers mapped how different regions of the brain were connected to one another in 30 people via tissue called white matter, according to an ARL statement. These maps were then converted into computational models of each subject’s brain, with computers used to simulate what would happen when one region of a person’s brain was stimulated. This helped the researchers estimate brain activity depending on the individual brain connections among networks known as cognitive systems, according to ARL.
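The idea of simulating stimulation on a per-person connectivity map can be illustrated with a toy model. The sketch below is an assumption-laden stand-in, not the study's method: it uses a simple leaky linear spreading model, and the function name `simulate_stimulation`, the parameters `coupling` and `decay`, and the two three-region "subjects" are hypothetical.

```python
def simulate_stimulation(connectivity, stimulated, steps=20,
                         coupling=0.2, decay=0.5):
    """Spread activity from one stimulated region over a weighted network.

    connectivity[i][j] is the connection strength between regions i and j
    (assumed symmetric here). Returns each region's activity after `steps`
    updates of a leaky linear model. All parameter values are illustrative.
    """
    n = len(connectivity)
    activity = [0.0] * n
    for _ in range(steps):
        updated = []
        for i in range(n):
            # Input arriving from connected regions, weighted by connection strength.
            inflow = sum(connectivity[i][j] * activity[j] for j in range(n))
            # Constant external drive applied only to the stimulated region.
            drive = 1.0 if i == stimulated else 0.0
            updated.append((1.0 - decay) * activity[i] + coupling * inflow + drive)
        activity = updated
    return activity


# Two hypothetical subjects whose connectivity differs only in the link
# between region 1 and region 2.
subject_a = [[0, 1, 0],
             [1, 0, 0],
             [0, 0, 0]]
subject_b = [[0, 1, 0],
             [1, 0, 1],
             [0, 1, 0]]

response_a = simulate_stimulation(subject_a, stimulated=0)
response_b = simulate_stimulation(subject_b, stimulated=0)
```

Stimulating the same region produces different activity patterns in the two subjects: region 2 stays silent in the first subject and becomes active in the second, purely because of the difference in connections. That is the kind of individual variability the article describes.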

At the study’s release, Dr Kanika Bansal, lead author on the report, noted that the brain is dynamic, with connections between different regions changing with learning or deteriorating with age or neurological disease. The connectivity between regions also varies between people, Bansal noted.

Although the work focused on studying the functions of individual brains, it could potentially be extended outside the brain, with the same principles being used to study how groups of people and autonomous systems work together, said Dr Javier Garcia, an ARL neuroscientist and one of the co-authors of the publication.

“While the work has been deployed on individual brains of a finite brain structure, it would be very interesting to see if co-ordination of soldiers and autonomous systems may also be described with this method, too,” he said. “Much how the brain co-ordinates regions that carry out specific functions, you can think of how this method may describe [how] co-ordinated teams of individuals and autonomous systems of varied skills work together to complete a mission.”

There are natural challenges associated with developing such predictive models in the military domain, Vettel said. Although autocompleting an e-mail is not a high-risk task, the soldier domain is characterised by “more high-risk, high-stress, and faster tempos … if you wanted AI, an autonomous agent, or a robot to help you, you would have to operate on a very fast timescale based on the speed of information in a mission”.

ARL is hoping to develop robust models of individual soldier physiology, including brain data as well as heart rate and other areas. The research is “looking across the physiology of the body to basically try to build an individual model of a person’s physiology to better account for fluctuations in task performance”.

Enhanced co-operation between humans and autonomous systems will be particularly important in the future environment, Vettel said. Today, there is a tendency to focus on the potential of one human to work with one autonomous agent. However, the future battlefield could be characterised by humans working with sets of autonomous agents, as well as collaborating with soldiers who are working with groups of their own autonomous agents.

“That creates a huge amount of teaming to assess, from autonomy to autonomy, human to human, and human to autonomy,” Vettel said. “The problem space is a rich source of complex challenges for multidisciplinary research that would have far-reaching implications for both the military and civilian sectors.”

References and Resources also include:

https://www.army.mil/article/245400/army_study_looks_at_how_autonomous_tech_adapts_to_soldiers