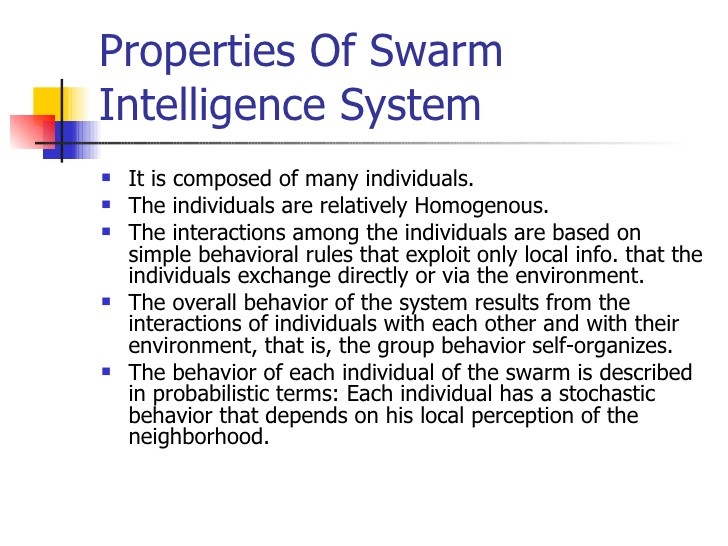

A robot swarm typically consists of tiny, simple, indistinguishable robots, each equipped with a sensor and a camera, radar and/or sonar so that it can gather information about its surrounding environment. When one robot collects data and shares it with the others in the group, the individual robots can function as a homogeneous…