Most AI advances and applications are based on a type of algorithm known as machine learning that finds and reapplies patterns in data. Deep learning, a powerful subset of machine learning, uses neural networks to find and amplify even the smallest patterns.

Generative adversarial networks (GANs) are deep neural network architectures composed of two networks that are pitted against each other (hence the “adversarial”). Today, a neural network can learn to recognize cats by analyzing several million cat photos, but humans must first carefully identify the images and label them as cat photos. GANs can support unsupervised learning: systems that learn without such heavy human involvement.

The members of Forbes Technology Council have selected GANs as the next big thing in tech. “GAN is a way of pitting two neural networks against each other in order to train one of them to produce new things. For example, it may generate realistic-looking pictures. We’re not quite there yet but we will be soon. When that happens, it could conceivably become impossible to separate real information from false. For better or worse, that will be a very big thing,” said Ben Lee of Rootstrap. GANs, a new technique in artificial intelligence that gives machines imagination, could soon be integrated into cloud services to make them cheaper and easier to use, MIT predicts.

Since 2014, when GANs became popular through the work of Ian Goodfellow (now a researcher at Google Brain), they have been used mainly to create realistic visuals, with amazing results. In 2017, the graphics microprocessor company Nvidia trained a GAN, using photographs of celebrities, to produce its own ultra-realistic images of famous people who have never existed in the real world. GANs have been used to create videos that simulate the next few seconds of a scene, change day to night or summer to winter in landscape videos, fill in gaps in images, and add years to faces, among many other spectacular demonstrations.

GANs are responsible for the first piece of AI-generated artwork sold at Christie’s, as well as the category of fake digital images known as “deepfakes.” GANs have also raised concerns. “The ability to generate realistic artificial images, often called deepfakes when images are meant to look like recognizable people, has raised concern in recent years,” said Johnson.

Security companies have rushed to embrace AI models as a way to help anticipate and detect cyberattacks. However, sophisticated hackers could try to corrupt these defenses. “AI can help us parse signals from noise,” says Nate Fick, CEO of the security firm Endgame, but “in the hands of the wrong people,” it’s also AI that’s going to generate the most sophisticated attacks. Generative adversarial networks, or GANs, which pit two neural networks against one another, can be used to try to guess what algorithms defenders are using in their AI models. Another risk is that hackers will target the data sets used to train models and poison them, for instance by switching labels on samples of malicious code to indicate that they are safe rather than suspect.

Now, images of the Earth are also subject to deepfakes. Many researchers have found GANs useful for spotting objects and sorting valid images from fake ones. In 2017, Chinese scholars used GANs to identify roads, bridges, and other features in satellite photos. The concern is that the same technique that can discern real bridges from fake ones can also help create fake bridges that AI cannot tell from the real thing. An adversary might fool computer-assisted imagery analysts into reporting that a bridge crosses an important river at a given point when no bridge is there. Forces trained to follow a certain route toward that bridge would then be surprised to find that it does not exist.

A conventional network might say, “The presence of x, y, and z in these pixel clusters means this is a picture of a cat.” But a GAN might say, “This is a picture of a cat, so x, y, and z must be present. What are x, y, and z and how do they relate?” The adversarial network learns how to construct, or generate, x, y, and z in a way that convinces the first neural network, or the discriminator, that something is there when, perhaps, it is not.

Many image-recognition systems can be fooled by small visual changes to physical objects in the environment, such as stickers added to stop signs that are barely noticeable to human drivers but that can throw off machine vision systems, as DARPA program manager Hava Siegelmann has demonstrated.

China has recently been leading efforts to use generative adversarial networks (GANs) to trick computers into seeing objects in landscapes or satellite images that are not there, according to Todd Myers, automation lead for the CIO-Technology Directorate at the US National Geospatial-Intelligence Agency.

“The Chinese are well ahead of us. This is not classified info,” Myers said Thursday at the second annual Genius Machines summit, hosted by Defense One and Nextgov. “The Chinese have already designed; they’re already doing it right now, using GANs—which are generative adversarial networks—to manipulate scenes and pixels to create things for nefarious reasons.” For example, Myers said, an adversary might fool your computer-assisted imagery analysts into reporting that a bridge crosses an important river at a given point.

The military and intelligence community can defeat GANs, Myers claims, but doing so is time-consuming and costly, requiring multiple, duplicate collections of satellite images and other pieces of corroborating evidence. “For every collect, you have to have a duplicate collect of what occurred from different sources,” he said. “The biggest thing is the funding.” U.S. officials confirmed that data integrity is a rising concern. Lt. Gen. Jack Shanahan, who runs the Pentagon’s new Joint Artificial Intelligence Center, said: “We have a strong program protection plan to protect the data. If you get to the data, you can get to the model.” But when it comes to public open-source data and images, used by everybody, the question of how to protect them remains open.

Andrew Hallman, who heads the CIA’s Digital Directorate, framed the question in terms of epic conflict. “We are in an existential battle for truth in the digital domain,” Hallman said. “That’s, again, where the help of the private sector is important and these data providers. Because that’s frankly the digital conflict we’re in, in that battle space…This is one of my highest priorities.”

Generative vs. Discriminative Algorithms

Discriminative algorithms try to classify input data; that is, given the features of a data instance, they predict a label or category to which that data belongs. For example, given all the words in an email, a discriminative algorithm could predict whether the message is spam or not_spam. Here, spam is one of the labels, and the bag of words gathered from the email supplies the features that constitute the input data. When this problem is expressed mathematically, the label is called y and the features are called x. The formulation p(y|x) is used to mean “the probability of y given x”, which in this case would translate to “the probability that an email is spam given the words it contains.”

So discriminative algorithms map features to labels. They are concerned solely with that correlation. One way to think about generative algorithms is that they do the opposite. Instead of predicting a label given certain features, they attempt to predict features given a certain label.

The question a generative algorithm tries to answer is: Assuming this email is spam, how likely are these features? While discriminative models care about the relation between y and x, generative models care about “how you get x.” They allow you to capture p(x|y), the probability of x given y, or the probability of features given a class. (That said, generative algorithms can also be used as classifiers. It just so happens that they can do more than categorize input data.)

Another way to think about it is to distinguish discriminative from generative like this: discriminative models learn the boundary between classes; generative models model the distribution of individual classes.
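The distinction between p(x|y) and p(y|x) can be made concrete with a small sketch. The code below is only an illustration under stated assumptions: the four-message corpus `TRAIN`, the helper names `fit`, `likelihood`, and `posterior`, and the use of naive Bayes with add-one smoothing are all choices made here, not something taken from the sources above. It models p(x|y) for each class (the generative view) and then applies Bayes' rule to recover p(y|x), which is how a generative model can double as a classifier.

```python
from collections import Counter

# Hypothetical toy corpus: (bag of words, label). Purely illustrative.
TRAIN = [
    (["win", "cash", "now"], "spam"),
    (["cheap", "cash", "offer"], "spam"),
    (["meeting", "at", "noon"], "not_spam"),
    (["lunch", "at", "noon"], "not_spam"),
]


def fit(train):
    """Count words per label, messages per label, and the vocabulary."""
    word_counts = {}
    label_counts = Counter()
    vocab = set()
    for words, label in train:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return word_counts, label_counts, vocab


def likelihood(words, label, word_counts, vocab):
    """Generative view: p(x|y) under naive Bayes with add-one smoothing."""
    counts = word_counts[label]
    total = sum(counts.values())
    p = 1.0
    for word in words:
        p *= (counts[word] + 1) / (total + len(vocab))
    return p


def posterior(words, word_counts, label_counts, vocab):
    """Classifier view: p(y|x) obtained from p(x|y) * p(y) via Bayes' rule."""
    n = sum(label_counts.values())
    joint = {
        label: likelihood(words, label, word_counts, vocab) * label_counts[label] / n
        for label in label_counts
    }
    evidence = sum(joint.values())  # p(x), summed over all labels
    return {label: value / evidence for label, value in joint.items()}


if __name__ == "__main__":
    wc, lc, vocab = fit(TRAIN)
    print(posterior(["cash", "now"], wc, lc, vocab))
```

On this toy corpus, the message "cash now" gets a spam posterior of 6/7, because both words appear only in the spam examples. A purely discriminative model would instead fit p(y|x) directly and never estimate how the words themselves are distributed.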

Generative Adversarial Networks (GANs)

Generative adversarial networks (GANs) are deep neural net architectures comprised of two nets, pitting one against the other (thus the “adversarial”). GANs were introduced in a paper by Ian Goodfellow and other researchers at the University of Montreal, including Yoshua Bengio, in 2014. Referring to GANs, Facebook’s AI research director Yann LeCun called adversarial training “the most interesting idea in the last 10 years in ML.”

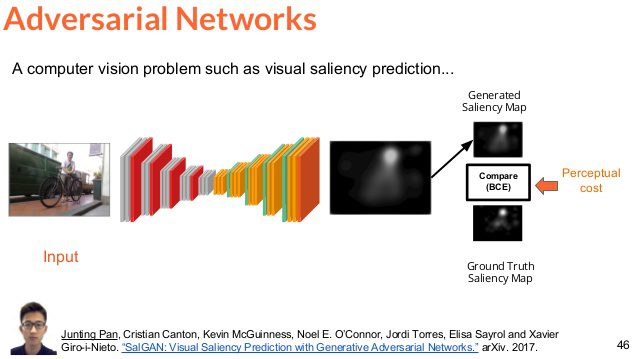

Training starts with a large set of real examples and two neural networks, each with a separate task. The first network, known as the generator, must produce artificial outputs, like handwriting, videos, or voices, that mimic the training examples. The second, known as the discriminator, is shown both real training examples and generator outputs and must determine which are real. In GANs, the two networks are trained with competing goals: one network produces answers (generative) and the other distinguishes between the real and the generated answers (adversarial).

The concept is to train these networks competitively until neither network can make further progress against the other, or until the generator becomes so effective that the adversarial network cannot distinguish between real and synthetic solutions, even with unlimited time and substantial resources. Unlike generative networks without an opponent, GANs can be trained with only a few hundred images.
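The alternating, competitive training described above can be sketched with a deliberately tiny model. Everything in this example is an assumption made for illustration: the "real data" is a one-dimensional Gaussian, the generator is the affine map G(z) = a*z + b, the discriminator is a single logistic unit D(x) = sigmoid(w*x + c), and the generator uses the common non-saturating loss -log D(G(z)). The gradients are written out by hand so that the sketch needs only the standard library. A model this small is not expected to settle cleanly into equilibrium; the point is only the shape of the loop.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate one discriminator step and one generator step per iteration."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters: G(z) = a*z + b
    w, c = 0.1, 0.0  # discriminator parameters: D(x) = sigmoid(w*x + c)

    for _ in range(steps):
        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        x_real = rng.gauss(real_mean, real_std)
        x_fake = a * rng.gauss(0.0, 1.0) + b
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        # Gradient of -[log D(x_real) + log(1 - D(x_fake))] w.r.t. w and c.
        grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: push D(fake) toward 1, i.e. try to fool D.
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b
        d_fake = sigmoid(w * x_fake + c)
        # Gradient of -log D(G(z)) w.r.t. the generated sample x_fake.
        grad_x = (d_fake - 1.0) * w
        a -= lr * grad_x * z
        b -= lr * grad_x

    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"generator: G(z) = {a:.3f}*z + {b:.3f}")
    print(f"discriminator: D(x) = sigmoid({w:.3f}*x + {c:.3f})")
```

Note that the generator never touches `x_real`: its only training signal is the discriminator's response to its own samples.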

GANs’ potential is huge, because they can learn to mimic any distribution of data. That is, GANs can be taught to create worlds eerily similar to our own in any domain: images, music, speech, prose. They are robot artists in a sense, and their output is impressive, even poignant. The ability to synthesize media and mimic other data patterns can be useful in photo editing, animation, and medicine (for example, to improve the quality of medical images and to overcome the scarcity of patient data).

For example, suppose the goal is to generate hand-written numerals like those found in the MNIST dataset, which is taken from the real world. The goal of the discriminator, when shown an instance from the true MNIST dataset, is to recognize it as authentic. Meanwhile, the generator is creating new images that it passes to the discriminator. It does so in the hope that they, too, will be deemed authentic, even though they are fake. The goal of the generator is to generate passable hand-written digits, to lie without being caught. The goal of the discriminator is to identify images coming from the generator as fake.
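These two opposing goals are usually expressed as losses on the discriminator's output probabilities. The sketch below assumes binary cross-entropy and the non-saturating form of the generator loss, which are common choices rather than anything the sources above specify; the function names are hypothetical. Each function takes plain lists of probabilities that the discriminator assigned to "this image is real".

```python
import math


def bce(prob, target):
    """Binary cross-entropy for one probability and a 0/1 target."""
    eps = 1e-12  # clamp to avoid log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(d_real, d_fake):
    """Low when real images score near 1 and generated images score near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Low when the discriminator scores generated images near 1 (fooled)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # Discriminator catching the fakes vs. being fooled by them.
    print(discriminator_loss([0.9], [0.1]), generator_loss([0.1]))
    print(discriminator_loss([0.9], [0.9]), generator_loss([0.9]))
```

The same discriminator outputs on generated images enter both losses with opposite targets, which is why an improvement for one network shows up as a penalty for the other.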

The discriminator network is a standard convolutional network that can categorize the images fed to it, a binary classifier labeling images as real or fake. The generator is an inverse convolutional network, in a sense: while a standard convolutional classifier takes an image and downsamples it to produce a probability, the generator takes a vector of random noise and upsamples it to an image. The first throws away data through downsampling techniques like max pooling, and the second generates new data. The two nets are each trying to optimize a different, opposing objective function, or loss function, in a zero-sum game.
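The opposite directions of data flow can be illustrated on plain nested lists. This is a minimal sketch with hypothetical helper names, and it swaps in nearest-neighbour upsampling for the learned transposed convolutions that real generators typically use, purely to show how the shapes change. Both helpers assume images with even height and width.

```python
def max_pool_2x2(image):
    """Downsample: keep the maximum of each non-overlapping 2x2 block."""
    return [
        [
            max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
            for c in range(0, len(image[0]), 2)
        ]
        for r in range(0, len(image), 2)
    ]


def upsample_2x(image):
    """Upsample: repeat every pixel into a 2x2 block (nearest neighbour)."""
    out = []
    for row in image:
        wide = [pixel for pixel in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))  # second copy of the widened row
    return out


if __name__ == "__main__":
    image = [
        [1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16],
    ]
    small = max_pool_2x2(image)  # 4x4 -> 2x2, three of every four values dropped
    big = upsample_2x(small)     # 2x2 -> 4x4, new pixels filled in
    print(small)
    print(big)
```

Pooling a 4x4 image and then upsampling the result does not recover the original, which is the sense in which the classifier discards information while the generator has to create it.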

“Dueling Neural Networks describes a breakthrough in artificial intelligence that allows AI to create images of things it has never seen. It gives AI a sense of imagination,” says Rotman. However, he also urges caution, as it raises the possibility of computers becoming alarmingly capable tools for digital fakery and fraud.
