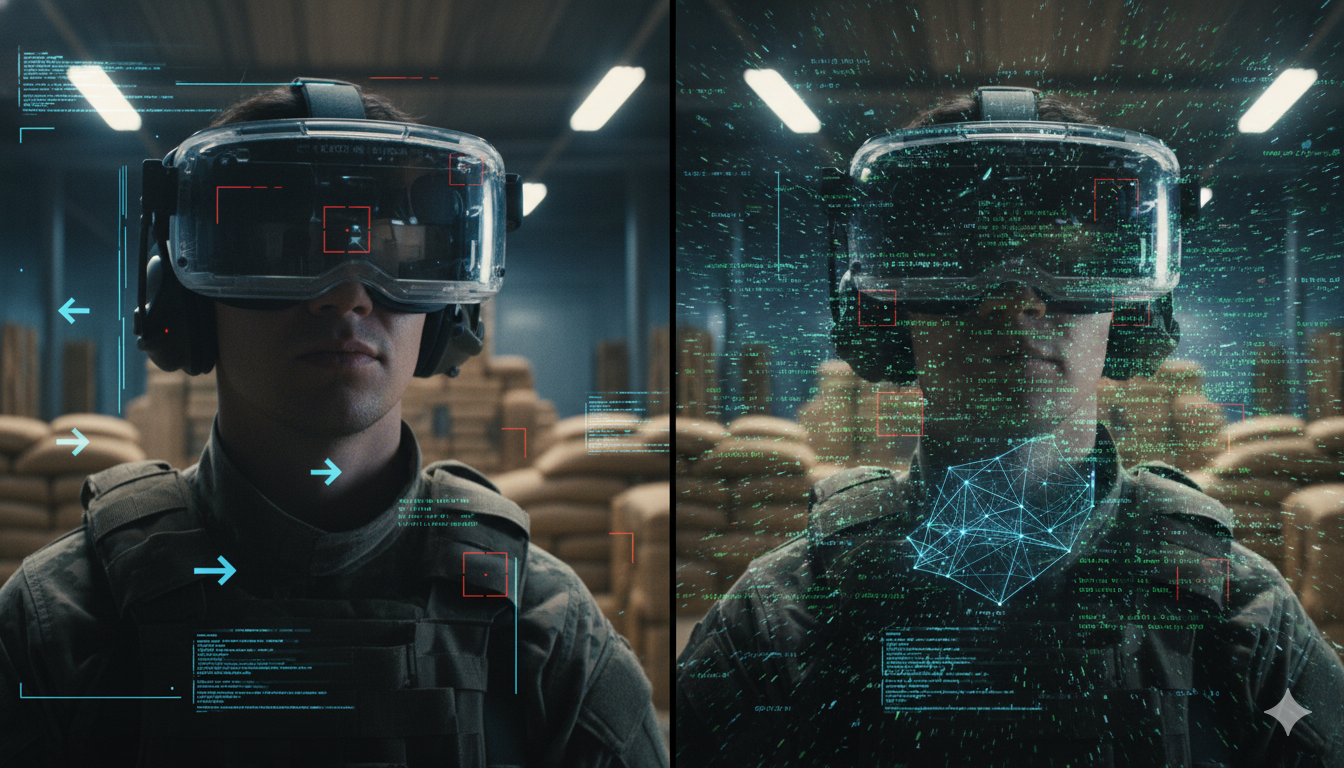

Mixed reality (MR) has rapidly evolved from a futuristic concept to a transformative force, weaving digital overlays into our physical world with applications ranging from manufacturing and medicine to education and entertainment. Within military contexts, its potential is profound: soldiers navigating urban environments aided by augmented intelligence, or technicians conducting equipment repairs guided by animated projections directly on machinery.

This physical-digital fusion, however, introduces unparalleled vulnerabilities. Traditional cybersecurity threats target systems, but MR raises a chilling possibility: an attacker might manipulate a user’s perception and cognition. This is not a distant or hypothetical threat. Researchers have already shown that malicious actors can manipulate MR systems in ways that directly disrupt human cognition. By flooding the senses with disorienting visual input, attackers can induce motion sickness or “cybersickness.” They can clutter a user’s display by highlighting irrelevant objects, distracting attention from what truly matters. False but urgent alerts may be injected into the field of view, steering operators toward fabricated scenarios. Even subtle, repeated manipulations—like generating false alarms—can gradually erode confidence in the system itself.

Such tactics are known as cognitive attacks, and their danger lies in their subtlety. Unlike traditional cyber intrusions aimed at disabling software, cognitive attacks target the operator’s mind, undermining perception, focus, and trust. The ultimate objective is not to crash a program but to compromise human performance—spreading confusion, heightening anxiety, and paving the way to mission failure.

DARPA’s Intrinsic Cognitive Security (ICS) Initiative

In response, the Defense Advanced Research Projects Agency (DARPA) launched the Intrinsic Cognitive Security (ICS) program to build resilience directly into MR systems before they become ubiquitous on battlefields. Traditional patches won’t protect the human mind; instead, ICS adopts formal methods, a mathematical approach enabling precise modeling and proof of system properties.

Applying formal methods to mixed reality is new ground: it allows security to be mathematically verified rather than simply assumed. The goal is to design MR systems with built-in protections against cognitive attacks, protections that can be proven correct long before the systems reach a battlefield or training environment. In other words, ICS is not about patching vulnerabilities after they appear but about engineering systems that are resilient by design.

At the heart of this effort is the development of cognitive models. These models attempt to formally represent how humans perceive, process, and react to information within immersive environments. By mathematically capturing the boundaries of human attention, perception, and physiological limits, researchers can anticipate where adversaries might attempt to exploit vulnerabilities. Such models serve as a bridge between neuroscience, psychology, and computer science, translating the intricacies of human cognition into a framework that machines can account for.

From these models, DARPA’s teams work on formulating concrete guarantees—precise, provable statements about system behavior. For example, a system might be guaranteed never to overload the user with more than a manageable number of high-priority alerts at once, or it might enforce orientation limits that prevent disorientation and cybersickness. These guarantees are expressed in mathematical language, ensuring that they can be rigorously tested and verified rather than left to subjective judgment.
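To make the alert example concrete, here is a minimal Python sketch of a display gate that never shows more than a fixed number of high-priority alerts at once. The class name `AlertGate`, its methods, and the cap of 3 are illustrative assumptions, not part of any ICS deliverable, and a runtime assertion is only a stand-in for the mathematical proof the program is aiming for.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AlertGate:
    """Hypothetical gate that caps simultaneous high-priority alerts."""

    max_high_priority: int = 3  # assumed cap, not a value from the ICS program
    _visible: Dict[str, bool] = field(default_factory=dict)  # id -> is high priority
    _deferred: List[str] = field(default_factory=list)       # queued high-priority ids

    def high_priority_count(self) -> int:
        return sum(1 for is_high in self._visible.values() if is_high)

    def visible_ids(self) -> List[str]:
        return list(self._visible)

    def show(self, alert_id: str, high_priority: bool) -> bool:
        """Display an alert; defer it if that would exceed the cap."""
        if high_priority and self.high_priority_count() >= self.max_high_priority:
            self._deferred.append(alert_id)
            return False
        self._visible[alert_id] = high_priority
        self._check_invariant()
        return True

    def dismiss(self, alert_id: str) -> None:
        """Remove an alert and promote one deferred alert if there is room."""
        self._visible.pop(alert_id, None)
        if self._deferred and self.high_priority_count() < self.max_high_priority:
            self._visible[self._deferred.pop(0)] = True
        self._check_invariant()

    def _check_invariant(self) -> None:
        # The guarantee itself: the cap holds after every state change.
        assert self.high_priority_count() <= self.max_high_priority


gate = AlertGate(max_high_priority=2)
gate.show("enemy-contact", high_priority=True)
gate.show("low-battery", high_priority=True)
accepted = gate.show("injected-alarm", high_priority=True)  # deferred, not shown
```

The design point is that the cap is enforced at the only place alerts enter the display, so an attacker who injects extra alerts cannot push the count past the limit. A formally verified system would prove that property for all inputs rather than check it at run time.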

The final step is to use these models and guarantees to design and build MR systems that are intrinsically secure against manipulation. By embedding safeguards directly into their architecture, the resulting systems do not rely solely on reactive defenses or user vigilance. Instead, they operate within proven safe boundaries, offering military personnel—and eventually civilians—the confidence that their augmented environments will support, rather than undermine, their performance even in high-stakes situations.

ICS is structured as a 36-month effort, divided into two phases. The first phase focuses on developing cognitive models and formal guarantees—such as constraints on alert volume or motion sickness thresholds—and expressing them in a language well-suited for mathematical proof. The second phase transitions from theory to practice, testing these guarantees by building prototypes on commercial MR hardware to validate their effectiveness in real-world scenarios.

Moving from Theory to Implementation

By 2024, DARPA had awarded contracts to leading research organizations to advance ICS into tangible solutions. Teams were tasked with developing cognitive models and formal guarantees addressing physiology, perception, attention, confidence, and system status in MR environments.

One of the most ambitious undertakings within the ICS program is Verified Probabilistic Cognitive Reasoning for Tactical Mixed Reality Systems, or VeriPro. With $8.5 million in DARPA funding, the project is led by researchers at Penn State in collaboration with several universities and industry partners. Its central aim is to create a comprehensive framework for understanding how cognitive attacks emerge in MR environments and how they can be neutralized through mathematically grounded defenses.

The VeriPro team’s work begins with building detailed models of human behavior and perception in mixed reality. By capturing how users react under varying conditions—whether during high stress, information overload, or disorientation—they can identify where MR systems are most vulnerable. These insights allow researchers to simulate different kinds of cognitive attacks, from subtle distractions to outright manipulations, and then test how formal guarantees can be applied to mitigate them.

As Professor Bin Li explains, mixed reality differs from virtual reality in that it overlays digital content onto the real world rather than replacing it entirely. This creates scenarios that are both powerful and complex. A firefighter, for example, might train in a real room while digital flames are superimposed on the walls. Such environments are invaluable for training and operations, but they also provide new entry points for adversaries who might tamper with overlays or distort situational awareness.

Over the course of three years, VeriPro will translate theory into practice by developing prototypes on commercial MR hardware. These prototypes are designed to show how mathematically proven guarantees can be embedded into real-world systems, ensuring they remain resilient against cognitive manipulation. By doing so, VeriPro not only advances the ICS program’s vision but also lays a foundation for the broader adoption of secure mixed reality in both military and civilian domains.

A parallel project, the Modeling and Analysis Toolkit for Realizable Intrinsic Cognitive Security (MATRICS), was launched to combat cybersickness and other vulnerabilities unique to MR. The initiative brings together experts from academia and industry to explore how system parameters with physiological effects, such as frame rate, latency, and optical flow, affect user stability. The work focuses on modeling these effects, verifying detection mechanisms, and ensuring that mitigation strategies can be formally proven effective.
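As a rough illustration of what a detection mechanism over frame rate, latency, and optical flow could look like, the Python sketch below flags telemetry samples that leave a comfort envelope. Every name and every threshold here is a placeholder chosen for the example. None of them comes from MATRICS or from any published comfort standard.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class FrameSample:
    frame_time_ms: float       # time taken to render one frame
    latency_ms: float          # delay between head motion and display update
    optical_flow_deg_s: float  # apparent angular speed of the rendered scene


@dataclass(frozen=True)
class ComfortLimits:
    # Placeholder thresholds for illustration only.
    min_fps: float = 72.0
    max_latency_ms: float = 20.0
    max_optical_flow_deg_s: float = 60.0


def violations(sample: FrameSample, limits: ComfortLimits) -> List[str]:
    """Return the names of the limits that this sample breaks."""
    if sample.frame_time_ms <= 0:
        raise ValueError("frame_time_ms must be positive")
    found = []
    if 1000.0 / sample.frame_time_ms < limits.min_fps:
        found.append("frame_rate")
    if sample.latency_ms > limits.max_latency_ms:
        found.append("latency")
    if sample.optical_flow_deg_s > limits.max_optical_flow_deg_s:
        found.append("optical_flow")
    return found


def sustained_violation(samples: Iterable[FrameSample],
                        limits: ComfortLimits,
                        window: int) -> bool:
    """True if `window` consecutive samples each break at least one limit."""
    run = 0
    for sample in samples:
        run = run + 1 if violations(sample, limits) else 0
        if run >= window:
            return True
    return False
```

Requiring several consecutive violations before raising a flag is one simple way to separate a single dropped frame from a sustained condition that might indicate tampering. Where to set that window is exactly the kind of question a validated cognitive model would have to answer.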

Central to this effort is the development of a knowledge base cataloging known cognitive attack patterns and potential defenses, enabling structured anticipation of threats. Formal specification tools are being adapted to express and verify cognitive guarantees, translating theories of human–machine interaction into computational models like probabilistic automata and Markov Decision Processes. This allows researchers to reason rigorously about vulnerabilities and ensure that system behaviors remain consistent and safe.
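To show the kind of question such models can answer, the following Python sketch computes the probability that a user reaches an "overloaded" state within a fixed number of steps. It uses a plain Markov chain, which is the simplest case of a Markov decision process (one with no choices left open). The three states and all transition probabilities are invented for illustration and do not come from any ICS model.

```python
from typing import Dict

Transitions = Dict[str, Dict[str, float]]

# Hypothetical user-attention model; every probability is made up.
ATTENTION_MODEL: Transitions = {
    "focused":    {"focused": 0.9, "distracted": 0.1},
    "distracted": {"focused": 0.5, "distracted": 0.4, "overloaded": 0.1},
    "overloaded": {"overloaded": 1.0},
}


def bounded_reach_probability(transitions: Transitions,
                              start: str,
                              target: str,
                              steps: int) -> float:
    """Probability of visiting `target` within `steps` transitions from `start`."""
    dist = {state: 0.0 for state in transitions}
    dist[start] = 1.0
    for _ in range(steps):
        nxt = {state: 0.0 for state in transitions}
        for state, mass in dist.items():
            if state == target:
                # Treat the target as absorbing so earlier visits stay counted.
                nxt[target] += mass
                continue
            for successor, prob in transitions[state].items():
                nxt[successor] += mass * prob
        dist = nxt
    return dist[target]


risk = bounded_reach_probability(ATTENTION_MODEL, "focused", "overloaded", steps=3)
guarantee_holds = risk <= 0.05  # example bound, also an assumption
```

A guarantee in this style would read "the probability of overload within three steps stays below a stated bound." Dedicated probabilistic model checkers answer the same question for much larger models, including ones where an adversary chooses among actions.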

Broader Research Context: Foundations for Future MR Security

Parallel academic efforts are also expanding the theoretical foundations for MR security. New frameworks are being introduced that blend insights from security, information theory, and cognitive science, offering structured approaches to understanding deception in MR environments. These models help quantify how adversarial manipulation can distort perception and decision-making, creating pathways for robust defensive strategies.

Charting the Path Forward: A Safer MR Future

By embedding security into MR systems through formal mathematical methods, the ICS program marks a paradigm shift in defense strategy. Recent progress, from contracts awarded to leading research organizations, to toolkits like MATRICS, to emerging academic frameworks, shows momentum toward MR systems designed with cognitive safety at their core.

If these initiatives succeed, the result will extend far beyond the battlefield. They will establish the foundational architecture for safe, trustworthy mixed reality that protects human cognition from adversarial interference and enables the technology to flourish in both defense and civilian domains.