Robots have already become an indispensable part of our lives. Currently, however, most robots are relatively rigid machines that make unnatural movements. In contrast, humans manipulate objects with their fingers with incredible ease: sifting through a bunch of keys in the dark, tossing and catching a cube, tying a knot, inserting a pin in a hole, or using a hand tool such as a drill. Yet these tasks are very complex and involve extremely fine finger and hand motions.

For decades, roboticists have been trying to understand and imitate dexterous human manipulation. While there has been much progress in machine perception, dexterous manipulation has remained elusive.

Fine motor skill (or dexterity) in humans comes from the coordination of small muscles in movements that usually synchronize the hands and fingers with the eyes. The complex levels of manual dexterity that humans exhibit are controlled by the nervous system.

Examples of autonomous robotic dexterous manipulation are still confined to the research laboratory. At present, autonomous, real-time dexterous manipulation in unknown environments still eludes us. In spite of the enormous challenge, autonomous robots are poised to make huge leaps in their ability to manipulate unfamiliar objects. Recent advances in machine learning, Big Data, and robot perception have put us on the threshold of a quantum leap in the ability of robots to perform fine motor tasks and function in uncontrolled environments, according to Robert Platt, a computer science professor at Northeastern.

Some companies, such as KUKA, have already developed a dexterous robot arm with sensors in every joint. This solution might be more expensive, but it makes dexterous manipulation feasible.

In 2018, the Berkeley OpenAI group demonstrated that this hurdle may finally succumb to machine learning as well. Given 200 years' worth of practice, machines learned to manipulate a physical object with amazing fluidity. This might be the beginning of a new age for dexterous robotics.

The Defense Advanced Research Projects Agency (DARPA), an agency under the U.S. Department of Defense, has been working to improve the quality of robots. It is now conducting a global competition to design robots that can perform dangerous rescue work after nuclear accidents, earthquakes and tsunamis.

The robots are tested for their ability to open doors, turn valves, connect hoses, use hand tools to cut panels, drive vehicles, clear debris and climb a stair ladder — all tasks that are relatively simple for humans, but very difficult for robots.

Future Applications of Dexterous Robots

Future applications of robotic dexterous manipulation may include tasks where fine manipulation is required, yet it is infeasible or dangerous for a human to perform the task. Examples include underwater salvage and recovery, remote planetary exploration, and retrieval of objects from hazardous environments.

Industry

Today’s best robotic hands can pick up familiar objects and move them to other places — such as taking products from warehouse bins and putting them in boxes. But robots can’t orient a hand tool properly — say, lining up a Phillips head screwdriver with the grooves on a screw, or aiming a hammer at a nail. And they definitely can’t use two hands together in detailed ways, like replacing the batteries in a remote control.

From the manufacturer’s perspective, the main challenge is to grasp as many objects of different sizes and shapes as possible, adeptly and decisively. Mark Lewandowski from P&G gave the example of the company’s high-mix production, with over 2,000 different products. To illustrate the need for dexterous manipulation, he described a production line in which bottles must be placed on a conveyor belt. The various shapes and sizes of these bottles make automation using today’s technologies infeasible. P&G is looking for flexible automation that can reproduce human capabilities.

Grasping has to be adjusted by force and sensing feedback to avoid crushing the bottles. Moreover, the grasp has to be firm enough to avoid slippage during high speed movements. Mr. Lewandowski stated that the robotic end effector has to be 99% reliable, so it is not the weakest link in the production chain. He also mentioned that the cost of universal gripping devices is easily justified by their flexibility and reliability, compared to the high cost of custom end effectors, which are designed for individual parts.
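
The trade-off described above, gripping firmly enough to prevent slippage but gently enough not to crush the bottle, can be sketched with a simple Coulomb friction model. This is only an illustration, not P&G's method: the two-finger parallel grasp, the function name `grip_force_bounds`, and all parameters (friction coefficient, crush limit, safety factor) are assumptions introduced here.

```python
G = 9.81  # gravitational acceleration in m/s^2


def grip_force_bounds(mass_kg, accel_ms2, friction_coeff, crush_limit_n,
                      safety_factor=1.5):
    """Estimate the grip force for a two-finger parallel grasp (hypothetical model).

    Assumes Coulomb friction at two contacts, so slippage is avoided when
    2 * mu * N >= m * (g + a), where a is the upward acceleration of the hand.
    Returns (minimum force, commanded force, feasible flag).
    """
    if friction_coeff <= 0:
        raise ValueError("friction coefficient must be positive")
    # Smallest normal force per finger that keeps the object from slipping.
    min_force = mass_kg * (G + accel_ms2) / (2.0 * friction_coeff)
    # Add a margin, but never exceed the force that would crush the object.
    target = safety_factor * min_force
    feasible = target <= crush_limit_n
    return min_force, min(target, crush_limit_n), feasible


if __name__ == "__main__":
    # A 0.5 kg bottle held still, then accelerated at 10 m/s^2 with a slippery surface.
    print(grip_force_bounds(0.5, 0.0, 0.5, 20.0))
    print(grip_force_bounds(0.5, 10.0, 0.2, 20.0))
```

When the feasible flag is false, no force satisfies both constraints under this model, so the motion would have to be slowed or the contact surface changed. A real end effector would close this loop with force and slip sensing rather than rely on a fixed estimate.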

Boeing

Boeing representative Craig Battles laid out the assembly challenges facing aerospace by showing examples of complex parts used in the industry. Boeing’s biggest issues for robotic dexterity in manufacturing and automation are safety, quality control, cost reduction and throughput. Each assembly task has different quality control and cost challenges, and each one includes a fastening step, a loading/unloading step and a sealing step.

Human safety is a big consideration, because there are a lot of risks involved in repetitive tasks requiring tools, along with the hazards of an automated environment. Some tasks are even performed in tight spaces where a human can barely fit. Thus, small collaborative robots might be a great solution for those kinds of tasks, especially since they can reduce safety hazards. But this brings another challenge, since those assembly robots would need to move around the large shop floor.

Home

Platt’s Helping Hands lab, in collaboration with the University of Massachusetts Lowell and the Crotched Mountain Rehabilitation Facility in New Hampshire, is building a power wheelchair with a robotic arm that can grasp items around the house or perform simple household tasks. This could enable elderly people or people with disabilities to continue to live independently in their homes.

Platt is also interested in adapting this technology for everyday use. “We hear a lot about the Alexa-style assistants that can answer questions by accessing the internet. But these assistants can’t do anything physical,” says Platt. “We want to equip these devices with a robotic body so you can say, ‘Alexa, get the newspaper,’ or ‘Alexa, clean up Jimmy’s room.’”

Military

Similar robots could take on comparable duties in areas of intense conflict and be used for dangerous operations such as defusing mines. For example, Platt and his group recently completed work under a grant from the Office of Naval Research to develop fundamental manipulation technologies that will be used aboard naval vessels.

Hazardous waste

Engineering professor Taskin Padir and his team received a grant from the Department of Energy to adapt NASA’s Valkyrie robot for hazardous waste disposal. There are more than a dozen sites scattered around the U.S. where radioactive waste was buried in tunnels during the Cold War. For autonomous robots to locate, grasp, and place this waste in safe containers, they will need fine motor skills and an ability to operate in unfamiliar environments.

Medicine

Funded by a grant from the National Science Foundation, engineering professor Peter Whitney is working with researchers at Stanford University to create a robot that can perform MRI-guided surgery.

Space exploration

Platt is working with researchers at NASA to develop robotic manipulation capabilities for handling soft objects on future NASA space missions.

“Robots that work flawlessly in the lab break down quickly when they’re placed in unfamiliar situations,” says Platt. “Our goal is to develop the underlying algorithms that will allow them to be more reliable in the real world. Ultimately, this will fundamentally change the way we think about robots, allowing them to become partners with humans rather than just machines that work in far away factories.”

Dexterous Manipulation Technology

Dexterous manipulation is an area of robotics in which multiple manipulators, or fingers, cooperate to grasp and manipulate objects. It differs from traditional robotics primarily in that the manipulation is object-centered. That is, the problem is formulated in terms of the object to be manipulated, how it should behave, and what forces should be exerted upon it. Because dexterous manipulation requires precise control of forces and motions, it cannot be accomplished with a conventional robotic gripper; fingers or specialized robotic hands must be used.
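
To make the object-centered formulation concrete, the sketch below sums the forces applied by several fingertips into the net force and torque acting on the object. It is a minimal illustration under stated assumptions: the function name `net_wrench` and the point-contact model (each finger applies a pure force at a position given relative to the object's center of mass) are introduced here and do not come from any particular robot hand.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def net_wrench(contacts):
    """Sum fingertip forces into the net force and torque on the object.

    contacts: list of (position, force) pairs, where position is the contact
    point relative to the object's center of mass (m) and force is in newtons.
    """
    force = [0.0, 0.0, 0.0]
    torque = [0.0, 0.0, 0.0]
    for position, f in contacts:
        t = cross(position, f)  # torque contributed by this fingertip
        for i in range(3):
            force[i] += f[i]
            torque[i] += t[i]
    return tuple(force), tuple(torque)


if __name__ == "__main__":
    # Two opposing fingers squeeze along x and each pushes up slightly along z.
    contacts = [((-0.02, 0.0, 0.0), (5.0, 0.0, 1.0)),
                ((0.02, 0.0, 0.0), (-5.0, 0.0, 1.0))]
    print(net_wrench(contacts))
```

In this example the squeezing forces cancel, leaving a small upward net force and no net torque. Planning in terms of that net effect on the object, and only then solving for individual finger forces, is what distinguishes the object-centered view.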

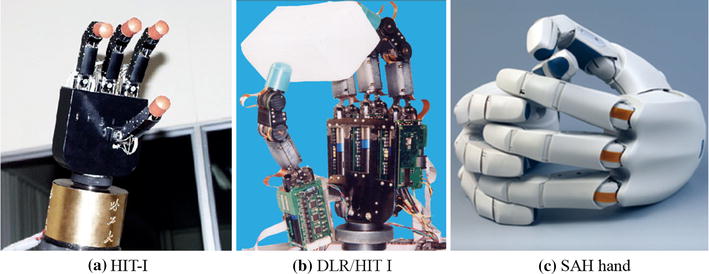

It should come as no surprise then that the majority of robot hands designed for dexterous manipulation are anthropomorphic in design. Research has also been done to classify human grasping and manipulation with an eye to providing a knowledge-based approach to grasp choice for robots.

While this approach has had some success in emulating human grasp choices for particular objects and tasks, other researchers have argued that robot hands, and the circumstances in which they work, are fundamentally different from the human condition. A model-based approach, built on the kinematics and dynamics of manipulating an object with the fingertips, has therefore dominated the field. The results of this approach are now adequate for manipulating objects in controlled environments.

Although autonomous dexterous manipulation remains impractical outside of the laboratory, a promising interim solution is supervised manipulation. In this approach, a human provides the high-level grasp and manipulation planning, while the robot performs fine (dexterous) manipulations. Another method is teaching by demonstration (gesture-based programming). The human may also perform the interpretation of tactile information in supervised remote exploration.

The miniaturization of manipulation is another area with promise. However, manipulations occurring on a very small scale are dominated by friction and van der Waals forces. Stable grasping is often not necessary; the objects will stick directly to the manipulator.

Berkeley researchers unveil “most dexterous robot ever created”

Researchers at UC Berkeley have developed and unveiled a first in robotics: a robot that combines two highly versatile limbs with the ability to reason and simulate different outcomes via two onboard neural networks. The university team claims that this combination of technologies makes it the world’s most dexterous robot.

Professor Ken Goldberg and one of his graduate students, Jeff Mahler, displayed the results of their work at EmTech Digital, an AI event in San Francisco organised by MIT Technology Review.

The two-armed robot relies on software called Dex-Net, which gives it the ability to reason and make decisions with as much dexterity as its arms are capable of moving. Via this onboard system, the robot is capable of quickly determining how best to grasp objects, based on simulations that take place within two separate, deep neural networks.

OpenAI sets new benchmark for robot dexterity

Robert Platt, a computer science professor and head of the Helping Hands robotics lab at Northeastern, and his team have trained a robot to find, grab, and remove unfamiliar objects from a pile of clutter with 93 percent accuracy. Achieving this required significant advances in machine learning, perception, and control.

The researchers used a technique called reinforcement learning in which the robot learns via trial and error. They created a simulated world in which the robot could practice picking up and manipulating objects in virtual reality. When the robot did what the researchers wanted—grabbed an object from a pile—it was given a reward. This technique allows the robot to master skills in a virtual environment and then apply them to the real world.
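
The reward-driven trial and error described above can be illustrated with a deliberately tiny example. This is not the researchers' system: it is an epsilon-greedy bandit, one of the simplest forms of reinforcement learning, in which a simulated robot repeatedly tries one of a few candidate grasps and receives a reward of 1 when the grasp succeeds. The function name `learn_grasp_preferences` and the success probabilities are hypothetical.

```python
import random


def learn_grasp_preferences(success_probs, trials=5000, epsilon=0.1, seed=0):
    """Estimate the value of each candidate grasp by simulated trial and error.

    success_probs: hidden probability that each grasp succeeds in simulation.
    Returns the learned value estimate (average reward) for each grasp.
    """
    rng = random.Random(seed)
    n = len(success_probs)
    counts = [0] * n
    values = [0.0] * n
    for _ in range(trials):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try a random grasp
        else:
            action = max(range(n), key=lambda i: values[i])  # exploit best so far
        # The simulator hands out a reward of 1 when the grasp succeeds.
        reward = 1.0 if rng.random() < success_probs[action] else 0.0
        counts[action] += 1
        # Incremental update of the running average reward for this grasp.
        values[action] += (reward - values[action]) / counts[action]
    return values


if __name__ == "__main__":
    estimates = learn_grasp_preferences([0.2, 0.5, 0.9])
    best = max(range(len(estimates)), key=lambda i: estimates[i])
    print("learned values:", estimates, "preferred grasp:", best)
```

Real systems learn over images and continuous motions rather than three fixed choices, but the loop is the same: act, observe a reward, and shift future behavior toward what worked.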

A major advance in depth perception was also essential for robots to work in an uncontrolled environment. Previously, they could only see the world as a flat field of seemingly random colors. But with this new 3-D perception, they could identify individual objects in a crowded field.

While vision is an excellent tool for guiding broad movements, fine motor skills require a sense of touch.

“Think of what you can do with gloves on,” explains Platt. “You can open the garage door, grab a shovel, and clear the driveway. But if you need to unlock the garage first, you need to take your gloves off to insert the key.”

As part of a NASA grant, Platt’s lab recently built a robotic hand equipped with tactile sensors and developed new algorithms for interpreting the tactile data.

“In order to insert a key into a lock, the robot needs to know exactly how it’s holding the key, down to the millimeter,” says Platt. “Our algorithms can localize these kinds of grasped objects very accurately.”

Platt’s lab demonstrated these new capabilities by grasping a USB connector and plugging it into a port. While this may not sound like a big deal, it’s a critical step toward creating robots that can do precise manipulation tasks such as changing the battery in a cell phone.