Remote sensing means that we aren’t physically measuring things with our hands. Instead, we use sensors that capture information about a landscape and record signals from which we can estimate conditions and characteristics. LiDAR, or light detection and ranging (sometimes also referred to as active laser scanning), is one remote sensing method that can be used to map structure, including vegetation height, density and other characteristics, across a region.

Lidars (Light Detection and Ranging) are similar to radars in that they operate by sending light pulses to targets and calculating distances from the time each pulse takes to return. Since they use light pulses with wavelengths about 100,000 times shorter than the radio waves used by radar, they have much higher resolution. Distance traveled is then converted to elevation. When LIDAR is mounted on an aircraft, these measurements rely on the other key components of a lidar system: a GPS receiver that identifies the X, Y, Z location of the light energy and an Inertial Measurement Unit (IMU) that provides the orientation of the plane in the sky.
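As a rough illustration of how a measured range becomes an elevation, here is a minimal sketch in Python. It assumes a heavily simplified geometry that is not taken from the article: the aircraft flies along the x axis, the beam swings only in the across-track (y, z) plane, and the IMU contributes a single roll angle. The function name `ground_point`, its parameters and the sign convention (positive angles tilt the beam toward +y) are hypothetical.

```python
import math


def ground_point(aircraft_xyz, range_m, scan_angle_deg, roll_deg=0.0):
    """Estimate where a lidar return hit the ground.

    aircraft_xyz   -- (x, y, z) of the sensor from GPS, in metres
    range_m        -- one-way distance from the time-of-flight measurement
    scan_angle_deg -- across-track mirror angle, 0 means straight down
    roll_deg       -- aircraft roll from the IMU (assumed sign convention)
    """
    x, y, z = aircraft_xyz
    # Total angle between the beam and straight down (nadir).
    off_nadir = math.radians(scan_angle_deg + roll_deg)
    hit_y = y + range_m * math.sin(off_nadir)  # sideways offset
    hit_z = z - range_m * math.cos(off_nadir)  # elevation of the return
    return (x, hit_y, hit_z)


if __name__ == "__main__":
    # Sensor 1000 m up, beam 10 degrees off nadir, 1100 m measured range.
    print(ground_point((0.0, 0.0, 1000.0), 1100.0, 10.0))
```

A production pipeline would apply full 3D rotations for roll, pitch and heading plus lever-arm offsets; the sketch only shows why both position and orientation are needed.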

LIDARs are rapidly maturing into very capable sensors for a number of applications, such as imaging through clouds, vegetation and camouflage, 3D mapping and terrain visualization, meteorology, navigation and obstacle avoidance for robotics, and weapon guidance. They have also proved useful for disaster management missions; emergency relief workers could use LIDAR to gauge the damage to remote areas after a storm or other cataclysmic event. LIDAR data is both high-resolution and high-accuracy, enabling improved battlefield visualization, mission planning and force protection. LIDAR provides a way to see urban areas in rich 3-D views that give tactical forces unprecedented awareness in urban environments.

LiDAR is used by a large number of autonomous vehicles to navigate environments in real time. Its advantages include impressively accurate depth perception, which allows LiDAR to know the distance to an object to within a few centimetres, up to 60 metres away. It’s also highly suitable for 3D mapping, which means returning vehicles can then navigate the environment predictably, a significant benefit for most self-driving technologies.

LiDAR is an optical sensor technology that enables robots to see the world, make decisions and navigate. Robots performing simple tasks can use LiDAR sensors that measure space in one or two dimensions, but three-dimensional (3D) LiDAR is useful for advanced robots designed to emulate humans. One such advanced robot is a self-driving car, where the human driver is replaced by LiDAR and other autonomous vehicle technologies. 3D LiDAR systems scan beams of light in three dimensions to create a virtual model of the environment. Reflected light signals are measured and processed by the vehicle to detect objects, identify objects, and decide how to interact with or avoid those objects.

However, despite these exciting advances, LiDAR has been hindered by a key factor: its significant cost. Google’s first driverless car prototype in 2012 used a US$70,000 LiDAR. In 2017, Waymo engineers declared they had brought the cost down by 90%. Today, a number of the top LiDAR manufacturers such as Luminar offer autonomous driving-grade LiDARs for under US$1,000. Prices have come down so much that the tech is found in the latest model iPhones. It’s also how robot vacuums see what’s around your home.

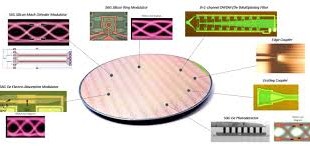

LIDAR systems

A sensing operation starts at the laser source, which provides amplitude and/or phase-modulated light. The modulated light is transmitted to the sensing target through the illumination optics, hits the target, and reflected light from the target is subsequently collected by the imaging optics. Finally, the receiver records the amplitude/phase of the light from the imaging optics, correlates it with the modulation signal, and extracts the time it took for the light to come back from the target, or laser time-of-flight (TOF), to eventually measure the distance to the target.
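The last step, turning a time of flight into a distance, can be sketched in a few lines of Python. This is only an illustration of the arithmetic (distance = speed of light × round-trip time ÷ 2); the function names are hypothetical, and the speed of light in vacuum is used as an approximation for air.

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_to_distance_m(round_trip_time_s: float) -> float:
    """Convert a round-trip time of flight into a one-way distance."""
    if round_trip_time_s < 0:
        raise ValueError("time of flight cannot be negative")
    # The pulse travels to the target and back, hence the division by two.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def distance_to_tof_s(distance_m: float) -> float:
    """Inverse helper: round-trip time for a target at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    # A return arriving 400 ns after emission is roughly 60 m away.
    print(f"{tof_to_distance_m(400e-9):.2f} m")
    # Resolving 1 cm in range means resolving about 67 ps in time.
    print(f"{distance_to_tof_s(0.01) * 1e12:.1f} ps")
```

The second print hints at why the receiver electronics matter: centimetre-level range precision requires timing on the order of tens of picoseconds.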

To successfully perform various missions, both high spatial resolution and range precision are needed, and the system often also needs to obtain high-resolution 3D images in a relatively short period of time. LIDAR systems typically employ one of two methods to obtain 3D images: a scanning type and a flash type. The scanning LIDAR system uses one or a few detector pixels and a scanner to acquire 3D images. Laser pulses are sent out from a laser system, and each laser pulse is directed to a different point on the target by the scanner; the time of flight (TOF) is then obtained for each target point using a single detector pixel. Because the system needs to scan each point in turn, a scanning LIDAR incurs a significant time overhead to acquire a high-resolution 3D image, and the acquisition time grows as the required resolution increases.
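The scaling of acquisition time with resolution can be made concrete with a small Python sketch. It rests on assumptions that are not from the article: each detector keeps only one pulse in flight at a time (so the pulse rate is capped at c / 2R for a maximum range R), and scanner mechanics add no extra delay. The function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def max_pulse_rate_hz(max_range_m: float) -> float:
    """Highest pulse rate that keeps a single pulse in flight at a time."""
    return SPEED_OF_LIGHT_M_S / (2.0 * max_range_m)


def scan_frame_time_s(columns: int, rows: int, max_range_m: float,
                      detectors: int = 1) -> float:
    """Time to measure every point of a columns x rows image by scanning."""
    points = columns * rows
    points_per_second = max_pulse_rate_hz(max_range_m) * detectors
    return points / points_per_second


if __name__ == "__main__":
    base = scan_frame_time_s(1024, 64, max_range_m=200.0)
    fine = scan_frame_time_s(2048, 128, max_range_m=200.0)
    print(f"1024 x 64 points:  {base * 1e3:.1f} ms per frame")
    print(f"2048 x 128 points: {fine * 1e3:.1f} ms per frame")
```

Under these assumptions, doubling the resolution along both axes quadruples the frame time, while adding detectors that measure in parallel divides it. That trade-off is what flash LIDAR takes to its limit.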

But mechanically steered lasers are bulky, slow and prone to failure, so newer companies are attempting other techniques, like illuminating the whole scene at once (flash lidar) or steering the beam with complex electronic surfaces (metamaterials) instead. Flash LIDAR uses a 2D array detector and a single laser pulse that illuminates the entire scene of interest to acquire 3D images. Acquiring real-time images of moving targets requires obtaining the 3D image with a single laser pulse, which is exactly what flash LIDAR does. As a result, 3D images can be acquired even when the LIDAR system or the target is in motion.

Most lidars today are based on the ToF (time of flight) principle. These ToF lidars often operate in the NIR range (e.g., 850 nm or 905 nm). These wavelengths are selected because sensitive, high-SNR, mature, Si-based avalanche photodiodes (APDs) and single-photon avalanche diodes (SPADs) are readily available. A downside of these wavelengths is that the maximum permissible exposure (MPE) level of the laser is constrained. This will likely require short nanosecond pulses from a high-power laser but will, nonetheless, ultimately limit the range.

The other choice is to operate in the SWIR range, e.g., 1550nm. This wavelength has an MPE level which is orders of magnitude higher but requires the use of non-silicon receivers. Most lidars utilizing the FMCW (frequency-modulated-continuous-wave) principle use this wavelength. Note that FMCW offers velocity information per frame, higher SNR, lower power consumption, and less susceptibility to interference, but it is more difficult to implement because it requires a highly coherent stable tunable laser, a coherent optical mixer, and so on.

Si photonics can be used in many aspects of lidar design. In emerging FMCW lidars, Si photonics can be used in coherent receivers. Interestingly, Si photonics can also be used to implement beam steering with optical phased array (OPA) technology, thus replacing the bulky rotating mechanical beam steering mechanism with a miniaturized, true solid-state solution. This would help miniaturize lidar modules, extend product lifetimes, and put lidars on a cost-reduction path akin to other solid-state technologies. This approach would replace many free-space optical components with chip-scale solutions and would create a fabless-foundry ecosystem akin to what is found in CMOS devices.

LIDAR systems are seeing many breakthroughs: longer range, software configurability, intelligence through integrated AI, and the ability to measure both range and velocity using the Doppler effect. Blackmore Sensors and Analytics develops high-performance LIDARs based on multi-beam Doppler sensors that generate both position and velocity data. The technology won a Prism Award at the SPIE Photonics West event in early 2019, and BMW and Toyota are among the major auto firms to have tested it.

Lidars are also being miniaturized. Most compact lidar solutions today are still roughly the size of a hand, and the ones ready for use in production vehicles are larger still. One of the key strengths of LiDAR is the number of areas that show potential for improvement. These include solid-state sensors, which could reduce its cost tenfold; sensor range increases of up to 200 m; and 4-dimensional LiDAR, which senses the velocity of an object as well as its position in 3-D space.

Spinning LiDAR for Obstacle detection and navigation of Autonomous Vehicles

With the explosion in development of self-driving platforms, demand for 3D LIDAR has grown drastically. LiDAR is one of the most important sensors in driverless vehicles, where it is used for obstacle detection and navigation. Lidar is most often used as a way for a car to sense things at a medium distance: far away, radar can outperform it, and up close, ultrasonics and other methods are more compact. But from a few feet to a couple hundred feet out, lidar is very useful.

By steering the transmitted light, a LIDAR can generate a millimeter-accurate 3D representation of its surroundings called a point cloud. These accurate point clouds are compared with 3D maps of the roads, known as prior maps, that are stored in memory; well-known algorithms align the two as closely as possible. That makes it possible to identify with sub-centimeter precision where the car is on the road. In robotic mapping, SLAM (simultaneous localization and mapping) involves the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of a specific point within it.
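To give a feel for the alignment step, here is a minimal Python sketch of the closed-form least-squares fit of a 2D rotation and translation between a scan and a map. It is a simplification chosen for illustration, not the method any particular vehicle uses: it works in 2D, and it assumes the correspondence between scan points and map points is already known (real pipelines such as ICP have to estimate the correspondences iteratively and work in 3D). The function name `align_scan_to_map` is hypothetical.

```python
import math


def align_scan_to_map(scan_pts, map_pts):
    """Best-fit 2D rigid transform taking scan_pts onto map_pts.

    Assumes scan_pts[i] and map_pts[i] are the same physical point.
    Returns (theta, tx, ty): rotation in radians, then translation.
    """
    n = len(scan_pts)
    if n == 0 or n != len(map_pts):
        raise ValueError("need two non-empty point lists of equal length")

    # Centroids of both point sets.
    sx = sum(p[0] for p in scan_pts) / n
    sy = sum(p[1] for p in scan_pts) / n
    mx = sum(q[0] for q in map_pts) / n
    my = sum(q[1] for q in map_pts) / n

    # Accumulate dot and cross products of the centred point pairs.
    dot = 0.0
    cross = 0.0
    for (px, py), (qx, qy) in zip(scan_pts, map_pts):
        ax, ay = px - sx, py - sy
        bx, by = qx - mx, qy - my
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    # Rotation that minimises the summed squared distances.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated scan centroid onto the map centroid.
    tx = mx - (c * sx - s * sy)
    ty = my - (s * sx + c * sy)
    return theta, tx, ty


if __name__ == "__main__":
    scan = [(1.0, 0.0), (0.0, 2.0), (-1.5, 0.5), (3.0, 1.0)]
    # Build "map" points by placing the scan at a known pose.
    c, s = math.cos(0.3), math.sin(0.3)
    world = [(c * x - s * y + 2.0, s * x + c * y - 1.0) for x, y in scan]
    print(align_scan_to_map(scan, world))
```

The recovered rotation and translation are the vehicle's pose in the map frame, which is the localization result the paragraph above describes.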

Spinning LiDARs are best identified by the telltale dome on the roof of a connected car, and spinning systems have been the prevailing form of the technology. Velodyne created the modern LiDAR industry around 2007 when it introduced a LiDAR unit that stacked 64 lasers in a vertical column and spun the whole thing around many times per second. Rotating LiDAR captures a wide field of view and a long range, but its components tend to be heavy and expensive, and the moving parts require regular maintenance. Google’s self-driving cars use Velodyne’s rotating HDL-64E LIDAR module, which consists of an array of 64 lasers, to identify objects such as cars, bicycles and pedestrians and to detect small hazards close by on the ground. Velodyne LiDAR, a developer of real-time LiDAR sensors, has announced a partnership agreement with Dibotics under which Dibotics will provide consulting services to Velodyne LiDAR customers who require 3D SLAM software.

RedTail LiDAR Systems unveiled its innovative new mapping system that uses tech licenced from the US Army Research Laboratory. The system, named the RTL-400, utilises a microelectromechanical system (MEMS) mirror-based laser scanner to map out small objects from high altitudes by distributing laser pulses rapidly and accurately over the ground.

The technology company, a division of 4D Tech Solutions, Inc., claims that the system boasts unprecedented resolution thanks to a laser pulse rate of 400,000 pulses per second. RedTail says that applications for the RTL-400 will range from agriculture to construction site monitoring. Brad DeRoos, president and CEO of the company, said: “We are very excited to introduce RedTail LiDAR System’s RTL-400. This product has been optimized for use on small drones, providing high-quality point clouds to meet the needs found in the numerous fields where LiDAR is used.” RedTail LiDAR Systems unveiled the new mapping tech as part of the Group Pitch in the Exhibit Hall Theatre at the Commercial UAV Expo in Las Vegas in October 2019.

Damon Lavrinc of Ouster, another spinning LiDAR company, says that while spinning/mechanical systems are still the gold standard, the trend is from moving parts to silicon. “We’re taking all of old components used by legacy LiDAR companies and distilling them onto chips,” he says. And Moore’s Law is taking hold in LiDAR. In just a couple of years they moved from 16 to 32 to 64 beams of light, meaning a doubling of resolution at each increase. “And at CES, we introduced 128, the highest resolution possible.”

Quanergy, of Sunnyvale, Calif., also makes a spinning product. According to Chief Marketing Officer Enzo Signore, although the company is innovating with optical arrays in high-end applications, the spinning LiDAR used in its M8 sensor typically suits security applications. That product, he says, has a 360-degree horizontal field of view and generates 1.3 million pulses per second in the point cloud. Paired with its Qortex machine learning software, Signore says, the solution is designed to classify people and vehicles, for example counting how many people are at an airport security checkpoint. A pharmaceutical client uses it along a 400 meter fence line at a storage facility, having rejected radar due to interference issues and on-fence motion sensors due to high winds. “If an intruder is, say, 70 meters away, the LiDAR will see that person and assign an ID to that person and we can track him all around,” Signore says. “If he then enters a forbidden zone, such as too near an electric switch, you can point a camera at him.”

AEye, based in Dublin, Calif., says that its “agile LiDAR” scans, collects and processes data intelligently, with a range of more than 300 meters, well beyond most other companies’ range. Benmbarek claims that the technology can “dynamically adapt its scanning patterns to focus on ‘regions of interest’ in a single frame, enabling it to identify objects 10 to 20 times more than LiDAR-only products.”

Flash LIDAR

As described earlier, a flash LIDAR system uses a 2D array detector and a single laser pulse that illuminates the entire scene of interest, so it can acquire 3D images of moving targets even when the LIDAR system itself is in motion.

This method is less prone to image vibration and produces a much faster data capture rate. Texas Instruments notes two downsides, however. For one, reflected light blinds sensors. In addition, great power is necessary to light a scene, especially at distance. Most flash LiDAR, made by manufacturers such as Continental Automotive and LeddarTech, is being applied to autonomous vehicles. Sense Photonics, of Durham, N.C., is an exception. According to Erin Bishop, director of business development, Sense Photonics has a solution for industrial applications, Sense One, which promises up to 40 meters of range outdoors for imaging large workspaces or perimeters, 7.5x higher vertical resolution than other sensors and a 2.5x wider vertical field of view (75 degrees compared to 30 degrees).

Technology breakthrough allows self-driving cars to see in all weather conditions

Harsh weather conditions had previously made it more difficult for the sensors used in self-driving vehicles to clearly view conditions ahead. However, Draper’s Hemera LiDAR Detector has been developed in a way that allows it to collect much more information from light sources in the environment.

For example, most LiDAR systems can detect millions of photons (light particles) per second, whereas this new system can collect billions. This means car manufacturers will be able to improve their existing LiDAR, and it is another stepping stone towards full automation, when vehicles no longer require a human driver. Draper will offer the Hemera technology under licence to vehicle manufacturers and suppliers to augment their current systems.

Joseph Hollmann, Ph.D., senior scientist for computational imaging systems development at Draper, said: “Typical LiDAR sensors can be confused by photons that are scattered by obscurants. We designed it to perform on bright days, when reflected sunlight can confuse LiDARs, and to avoid spoofing, which is being fooled into thinking that obstacles are present, when in reality there are no obstacles.”

Hemera has been designed so it can augment most existing LiDAR systems so car manufacturers and suppliers can preserve existing investments in LiDAR. Sabrina Mansur, Draper’s self-driving vehicle program manager, said the company is currently working with car manufacturers to add Hemera to existing LiDAR systems.

Mansur said: “Hemera receives a lot more information from the scene, which in turn will make self-driving cars safer and more reliable. The ability to see through degraded visual environments, such as fog, snow and rain, will expand the scenarios in which autonomous cars will be able to operate.”

Volvo, Luminar show off pedestrian-analyzing lidar

While lidar is acknowledged by much of the auto industry as one of the key technologies required for fully self-driving vehicles operating at so-called “Level 5” autonomy, it is also widely viewed as expensive and not yet reliable enough for deployment. As a result, dozens of startup companies developing the technology have attracted venture capital investment with their promises to close that gap.

However, only a handful of them are working with 1550 nm light sources, which are more technologically challenging and expensive to produce but allow much higher powers to be used – extending range and resolution when compared with the more conventional laser diode wavelengths around 1 µm.

Car maker Volvo and the lidar firm Luminar say that the photonics technology they are developing is now capable of predicting the actions of pedestrians based on their poses and body language. Showing off the system, which is based around a 1550 nm laser source, they announced: “The [lidar] fidelity enabled the Volvo Cars research and development team to achieve and demonstrate the first ever lidar-based pose estimation for autonomous driving.”

The term “pose estimation” is used to describe ways that pedestrian behavior can be understood and predicted by autonomous systems, for example indicating the likelihood of a person at the side of a road stepping in front of a car. Volvo and Luminar say that this has typically been done with two-dimensional data from cameras, but that lidar yields more useful three-dimensional information. “With lidar that achieves camera-like resolution in 3D, this is one of many perception and prediction challenges that can now be reliably solved at distances near and far,” they say.

Lidar startup AEye raises $40M; claims kilometer range breakthrough

AEye, a Californian startup, is developing long-wavelength lidar technology for use in future autonomous vehicles. The AEye team says that its “iDAR” system, based around a 1550 nm laser emitter, is now able to detect and track a truck at a distance of 1000 meters, well beyond the range of 200-300 meters thought necessary for commercial effectiveness.

Different regulations relating to eye safety allow much more powerful sources to be used at the 1550 nm wavelength than at 980 nm, meaning that there is a far greater “photon budget” available to scan a car’s environment. In a statement from AEye, the startup’s “chief of staff” Blair LaCorte said: “After establishing a new standard for lidar scan speed, we set out to see just how far we could accurately search, acquire and track an object such as a truck. We detected the truck with plenty of signal to identify it as an object of interest, and then easily tracked it as it moved over 1000 meters away.”

“AEye’s test sets a new benchmark for solid-state lidar range, and comes one month after AEye announced a 100 Hz scan rate – setting a new speed record for the industry,” claims the firm. Along with Volvo-backed Luminar Technologies, AEye is one of a handful of companies in the increasingly crowded auto lidar sector to base its technology around longer-wavelength emitters fabricated on indium phosphide (InP) semiconductor wafers.

LaCorte added that he thinks it will be possible to increase that range to as much as 10 kilometers, and perhaps even greater distances, suggesting potential applications in unmanned aerial vehicles (UAVs).

Quanergy Announces Solid-State LIDAR for Cars and Robots

Quanergy, an automotive startup based in Sunnyvale, Calif., has developed the S3, a solid-state LIDAR system designed primarily to bring versatile, comprehensive, and affordable sensing to autonomous cars. The S3 is small and has no moving parts. Instead, it uses an optical phased array as a transmitter, which can steer pulses of light by shifting the phase of a laser pulse as it’s projected through the array.
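The relationship between phase shift and beam direction can be sketched with the textbook formula for a uniform linear phased array: if adjacent emitters are spaced a distance d apart and driven with a constant phase step Δφ, the main lobe points at an angle θ with sin θ = λ·Δφ / (2π·d). The Python below illustrates only that formula. The function names are hypothetical, and the 1550 nm wavelength and 2 µm emitter pitch in the example are illustrative values, not specifications of the S3 or any other product.

```python
import math


def steering_angle_deg(phase_step_rad: float, wavelength_m: float,
                       pitch_m: float) -> float:
    """Main-lobe angle for a uniform linear phased array.

    phase_step_rad -- phase difference between adjacent emitters
    wavelength_m   -- optical wavelength
    pitch_m        -- spacing between adjacent emitters
    """
    sin_theta = wavelength_m * phase_step_rad / (2.0 * math.pi * pitch_m)
    if abs(sin_theta) > 1.0:
        raise ValueError("phase step too large: no propagating main lobe")
    return math.degrees(math.asin(sin_theta))


def phase_step_for_angle_rad(angle_deg: float, wavelength_m: float,
                             pitch_m: float) -> float:
    """Phase step between adjacent emitters needed to aim at angle_deg."""
    return (2.0 * math.pi * pitch_m
            * math.sin(math.radians(angle_deg)) / wavelength_m)


if __name__ == "__main__":
    wavelength = 1.55e-6  # illustrative: 1550 nm
    pitch = 2.0e-6        # illustrative: 2 micrometre emitter spacing
    for target in (-20.0, 0.0, 10.0):
        step = phase_step_for_angle_rad(target, wavelength, pitch)
        print(f"aim {target:+.0f} deg -> phase step {step:+.3f} rad")
```

Because only electronic phase settings change between one direction and the next, there is no mechanical inertia to overcome, which is what lets such a transmitter redirect the beam from pulse to pulse.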

This allows each pulse to be steered completely independently and refocused almost instantly in a completely different direction. The field of view is 120 degrees both horizontally and vertically. The minimum range is 10 centimeters, and the maximum range is at least 150 meters at 8 percent reflectivity. At 100 meters, the distance accuracy is ±5 cm, and the minimum spot size is just 9 cm. The S3 itself is 9 cm x 6 cm x 6 cm. Produced in volume, an S3 unit will cost $250 or less.

“If you want to achieve a high degree of autonomy, you need a sensor that has a 360 degree field of view, a long range, and high resolution,” says Anand Gopalan, vice president of Velodyne’s R&D.

However, in the current sensor only the transmitter side is integrated on an ASIC. “As we move forward, I think the thought process is to build an engine that encompasses all the functionality of a lidar: the transmission and reception of light and the calculation of intensity and time of flight, all of that, in such a small form factor that now it can be used in a variety of different configurations.”

Velodyne LiDAR’s solid-state LiDAR sensors are based on monolithic gallium nitride (GaN) integrated circuit technology developed in partnership with Efficient Power Conversion (EPC). “What you’re seeing is the GaN integrated circuit that forms the heart of the solution that we’re proposing. This is the chip that does the transmit function. It’s not beamforming.”

Nano-optics startup raises $4.3M to miniaturize solid-state lidar

NYC-based LiDAR startup Voyant Photonics has raised a $4.3M investment from Contour Venture Partners, LDV Capital and DARPA. The founding team of the startup came from the Lipson Nanophotonics Group at Columbia University. In the past, attempts in chip-based photonics to send out a coherent laser-like beam from a surface of lightguides (elements used to steer light around or emit it) have been limited by a low field of view and power because the light tends to interfere with itself at close quarters. Voyant’s version of these “optical phased arrays” sidesteps that problem by carefully altering the phase of the light traveling through the chip.

“It’s a misconception that small lidars need to be low-performance,” the company says. “The silicon photonic architecture we use lets us build a very sensitive receiver on-chip that would be difficult to assemble in traditional optics. So we’re able to fit a high-performance lidar into that tiny package without any additional or exotic components. We think we can achieve specs comparable to lidars out there, but just make them that much smaller.”

“This is an enabling technology because it’s so small,” says Voyant CEO and co-founder Steven Miller. “We’re talking cubic centimeter volumes. There’s a lot of electronics that can’t accommodate a lidar the size of a softball. Think about drones and things that are weight-sensitive, or robotics, where it needs to be on the tip of its arm.”

The company has already produced a silicon optical phased array that operates with infrared light and changes the phase of the light traveling through waveguides on the chip. The result is a beam of non-visible light that can illuminate a wide swathe of the environment at high speed without moving parts.

Many existing lidars use time-of-flight at 905nm wavelength but suffer range limitations due to eye-safety considerations as well as concerns about multi-user crosstalk. These problems can be avoided using frequency modulated continuous wave (FMCW) lidar on a chip designed to operate at 1550nm wavelength.

Cepton Unveils New High-Resolution, Long-Range LiDAR

Cepton Technologies’ newest Vista LiDAR sensor, the Vista-X120, specifically targets advanced driver-assistance systems (ADAS) and autonomous applications. It offers a 120-degree horizontal field of view (FOV), 0.15-degree angular resolution, and a maximum detection range of up to 200 meters at 10% reflectivity. Powered by Cepton’s Micro Motion Technology (MMT), Vista-X120 builds on the existing Vista-P60 product currently shipping to customers worldwide.

The Vista-X120 doubles the FOV of the standard Vista sensor, enabling object perception and localization over a much wider area. According to the company, by leveraging a common core technology optimized for power and cost, the Vista-X120 enables high-volume price points in the hundreds of dollars for mass-market automotive applications. Cepton’s Vista LiDAR sensors are designed in a small form factor that operates on only 9 W of power, enabling automotive designers to aesthetically integrate LiDAR sensors into their vehicle body and electrical systems.

Indeed, in February, the company filed a patent application for incorporating LiDAR sensors into a headlamp module of a vehicle. According to the patent summary, the LiDAR submodule would be placed near the headlamp illumination module so that light could be transmitted through the same window of the headlamp housing. The LiDAR sensor would include one or more laser sources configured to emit laser beams through the window toward the scene in front of the vehicle, where the beams are reflected off one or more objects. The return beams then pass back through the window toward the LiDAR sensor, where one or more detectors receive and detect them.

The 200-meter range of the Vista-X120 allows on-board software to detect objects, then decide on and carry out the next course of action for a vehicle traveling at 65 mph. Object classification requires enough detail to tell what an object is, at close range or long range. The Vista-X120’s 0.15-degree angular resolution allows it to sense small obstacles in the road with a high level of accuracy.
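A quick back-of-the-envelope calculation shows what those figures imply. The Python sketch below uses only the numbers quoted above (200 m, 65 mph, 0.15 degrees); the function names are hypothetical, and it assumes a stationary obstacle and evenly spaced beams.

```python
import math

MPH_TO_M_S = 0.44704  # exact conversion factor


def time_to_reach_s(range_m: float, speed_mph: float) -> float:
    """Seconds until a vehicle reaches a stationary object at range_m."""
    return range_m / (speed_mph * MPH_TO_M_S)


def point_spacing_m(range_m: float, angular_resolution_deg: float) -> float:
    """Gap between neighbouring beams at a given range."""
    half_angle = math.radians(angular_resolution_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle)


if __name__ == "__main__":
    print(f"time budget at 200 m, 65 mph: {time_to_reach_s(200.0, 65.0):.1f} s")
    for r in (50.0, 100.0, 200.0):
        print(f"beam spacing at {r:.0f} m: {point_spacing_m(r, 0.15) * 100:.0f} cm")
```

Under these assumptions the vehicle has a little under seven seconds to detect, decide and act, and neighbouring beams are roughly half a metre apart at the 200 m limit, so finer detail is only available as the object gets closer.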

Vista-X120 can be combined with Cepton’s Helius LiDAR perception software, enhancing its object detection and perception capabilities for autonomous applications. Helius utilizes the accuracy of lasers combined with AI-driven detection and tracking technologies to build a detailed image of activity in a given area, regardless of lighting conditions.

LGS LIDAR can switch between wide and narrow range

LGS Innovations announced the successful completion of a two-year Laser Radar Technology (LRT) effort in partnership with the Strategic Technology Office (STO) within the Defense Advanced Research Projects Agency (DARPA). The LRT program supports the development of detector arrays and laser transmitter technologies that could improve the ability of a LIDAR system to switch between settings geared to detecting objects of interest and settings geared to homing in on a selected object and providing additional insight into it.

“This breakthrough required developing a laser with the ability to produce a wide range of optical waveforms, and the ability to change waveforms in real-time while operating at full power,” said Stephan Wielandy, Chief Scientist for Photonics Applications for LGS Innovations Advanced Research and Technology division. “To our knowledge, no laser with the ability to meet all of these waveform agility requirements has ever been made before.”

https://www.youtube.com/watch?v=7kSP29AB_DA

Multifunctional Coherent Doppler Lidar based on Laser Linear Frequency Modulation

Farzin Amzajerdian, Diego Pierrottet, Larry Petway, Bruce Barnes, and George Lockard of Langley Research Center have developed multifunctional coherent Doppler lidar based on linear frequency modulation of a continuous-wave (CW) laser beam with a triangular waveform.

The motivation for developing this technique was its application in a coherent Doppler lidar system that could enable precision safe landing on the Moon and Mars by providing accurate measurements of the vehicle altitude and velocity. The multifunctional coherent Doppler lidar is capable of providing high-resolution altitude, attitude angles, and ground velocity, and measuring atmospheric wind vector velocity. It can operate in CW or quasi-CW modes.

In linear frequency modulation of the laser beam, the modulation waveform has a triangular shape. Upon reflection from the target, the return waveform is delayed by the light round-trip time. When the delayed return waveform is mixed with the transmitted waveform at the detector, an interference signal is generated whose frequency is equal to the difference between the transmit and receive frequencies. This frequency is directly proportional to the target range. When the target or lidar platform is not stationary during the beam round-trip time, the signal frequency is also shifted by the Doppler effect. Therefore, by measuring the frequency during the “up chirp” and “down chirp” periods of the laser waveform, both the target range and velocity can be determined.
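The up-chirp/down-chirp arithmetic can be sketched as follows. This is a generic textbook model, not the Langley team's implementation, and it rests on stated assumptions: each ramp sweeps a bandwidth B in a time T, the range term of the beat frequency is f_R = 2RB / (cT), the Doppler term is f_D = 2v / λ, a target closing on the sensor lowers the up-chirp beat and raises the down-chirp beat, and f_R is larger than |f_D|. All function and parameter names are hypothetical, and the example values are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def beat_frequencies_hz(range_m, closing_speed_m_s, bandwidth_hz,
                        ramp_time_s, wavelength_m):
    """Forward model: (up-chirp beat, down-chirp beat) for one target."""
    f_range = 2.0 * range_m * bandwidth_hz / (SPEED_OF_LIGHT_M_S * ramp_time_s)
    f_doppler = 2.0 * closing_speed_m_s / wavelength_m
    # Assumed sign convention: closing targets lower the up-chirp beat.
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up_hz, f_down_hz, bandwidth_hz,
                       ramp_time_s, wavelength_m):
    """Recover (range in m, closing speed in m/s) from the two beats."""
    f_range = (f_up_hz + f_down_hz) / 2.0    # Doppler terms cancel
    f_doppler = (f_down_hz - f_up_hz) / 2.0  # range terms cancel
    range_m = SPEED_OF_LIGHT_M_S * ramp_time_s * f_range / (2.0 * bandwidth_hz)
    closing_speed_m_s = wavelength_m * f_doppler / 2.0
    return range_m, closing_speed_m_s


if __name__ == "__main__":
    # Illustrative values: 1 GHz sweep in 10 microseconds at 1550 nm.
    params = dict(bandwidth_hz=1e9, ramp_time_s=10e-6, wavelength_m=1.55e-6)
    f_up, f_down = beat_frequencies_hz(150.0, 20.0, **params)
    print(f"up-chirp beat   {f_up / 1e6:.2f} MHz")
    print(f"down-chirp beat {f_down / 1e6:.2f} MHz")
    print(range_and_velocity(f_up, f_down, **params))
```

Averaging the two beats isolates the range term and halving their difference isolates the Doppler term, which is why a single triangular sweep yields both quantities at once.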

Blackmore launches 450 m Doppler lidar for autonomous vehicle fleets

Doppler lidar manufacturer Blackmore Sensors and Analytics (Bozeman, MT) announced two new interference-free lidar product lines. The Blackmore Autonomous Fleet Doppler Lidar (AFDL) is a Doppler lidar system specifically designed for autonomous fleet deployment. And the flexible Blackmore Lidar Development Platform (LDP) is a development platform for early deployment into emerging autonomous markets.

Blackmore says that despite the more than $1 billion invested in the lidar space since 2015, it’s increasingly difficult for end-users to identify a solution that delivers as promised. This is because the majority of lidar vendors focus on using amplitude modulation (AM) pulse-based lidar technologies. As a result, OEMs and suppliers are struggling with the inadequate data created by these power-hungry AM lidar sensors. And as lidar use becomes more prevalent, interference-prone AM systems are less effective and unsafe.

To address this, in 2015 Blackmore introduced the world’s first frequency-modulation (FM) lidar systems for autonomous vehicles, which measure both range and velocity simultaneously. For context, chipset-driven industries, including cell phones, automotive radars and GPS systems, also migrated from AM to FM modulation in order to deliver interference-free data at long range, using less power. “The reality is that physics ultimately wins, no matter how much funding chases inferior alternatives,” said Randy Reibel, CEO and co-founder of Blackmore. “But more importantly, FM-based Doppler lidar sensors are safer for self-driving applications.”

Specifically, the multi-beam Doppler lidar sensor delivers instantaneous velocity and range data beyond 450 m, with power consumption and size similar to a small laptop. The system supports a 120 x 30-degree field of view, software-defined operation, precise velocity measurements with accuracy down to 0.1 m/s on objects moving up to 150 m/s (335 mph), and measurement rates in excess of 2.4 million points/second. Blackmore’s AFDL is available for pre-order and will ship to customers in Q2 for less than $20,000.

Because Blackmore’s lidar is software-defined, customers can tailor operational parameters, including field of view, range, point density and scan speed, on the fly with its flexible system. The long-range, forward-looking optical head covers a 40 x 40-degree field of view, provides two beams to increase point throughput to more than 1.2 million points/second, and can reach well beyond 500 m in range. Additional sensor heads will be introduced over time, and prices will vary depending upon configuration.

Self-driving technology company Argo AI makes lidar breakthrough

In May 2021, Argo AI announced its own lidar sensor, Argo Lidar, which it says can accurately spot and identify objects more than 1,300 feet away, about 300 feet farther than current lidar sensors, a capability that is vital for highway driving. Argo Lidar is said to be accurate enough to identify graffiti on a wall or to spot small moving objects, such as animals, among vegetation and static objects.

The innovation behind Argo Lidar is known as “Geiger-mode” sensing. Argo AI’s proprietary Geiger-mode lidar can detect the smallest particle of light, a single photon, which is key to sensing objects with low reflectivity. This, combined with longer-wavelength operation above 1400 nanometers, gives Argo Lidar its capabilities, which the company lists as longer range, higher resolution, lower-reflectivity detection and a full 360° field of view, all from a single sensor.
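To put the quoted numbers in physical terms, the sketch below computes the round-trip travel time of light to a target 1,300 feet away and the energy of a single photon at 1400 nm. Since the article says the sensor operates above 1400 nm, this value is an upper bound on the photon energy at its actual wavelength. The function names are illustrative, and nothing here reflects Argo AI's internal design.

```python
PLANCK_J_S = 6.62607015e-34        # Planck constant in joule-seconds
C = 299_792_458.0                  # speed of light in m/s
ELECTRON_VOLT_J = 1.602176634e-19  # joules per electronvolt
FOOT_M = 0.3048                    # metres per foot


def round_trip_time_s(range_m):
    """Time for a light pulse to reach a target and return."""
    return 2.0 * range_m / C


def photon_energy_ev(wavelength_m):
    """Energy of one photon, E = h * c / wavelength, expressed in electronvolts."""
    return PLANCK_J_S * C / wavelength_m / ELECTRON_VOLT_J


if __name__ == "__main__":
    range_m = 1300 * FOOT_M  # about 396 m
    print(f"range: {range_m:.1f} m")
    print(f"round trip: {round_trip_time_s(range_m) * 1e6:.2f} microseconds")
    print(f"photon energy at 1400 nm: {photon_energy_ev(1400e-9):.3f} eV")
```

A return from that distance arrives only a few microseconds after the pulse leaves, and each photon carries less than one electronvolt, which gives a sense of why single-photon sensitivity matters for dark, distant targets.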

Argo AI already has prototypes fitted with Argo Lidar testing on public roads. The testing is taking place in six U.S. cities, with more locations, including the first in Europe, to be added this year.

AEye develops AI-based agile sensor technology that may surpass lidar

Robotic perception startup AEye (Pleasanton, CA) has demonstrated what it calls a new form of intelligent data collection through optical sensors: iDAR. According to AEye, a shortcoming of traditional LiDAR is that most systems oversample less important information like the sky, road and trees, or undersample critical information such as a fast-approaching vehicle. They then have to spend significant processing power and time extracting critical objects like pedestrians, cyclists, cars, and animals. AEye’s iDAR technology mimics how a human’s visual cortex focuses on and evaluates potential driving hazards: it uses a distributed architecture and at-the-edge processing to dynamically track targets and objects of interest, while always critically assessing general surroundings.
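The idea of spending fewer samples on the sky and more on a fast-approaching vehicle can be illustrated with a simple proportional budget. The sketch below splits a fixed per-frame point budget across scene regions according to priority weights. The region names, weights and the `allocate_points` helper are hypothetical and are not drawn from AEye's implementation.

```python
def allocate_points(priorities, budget):
    """Split an integer point budget across regions in proportion to priority.

    Uses the largest-remainder method so the allocations always sum to the budget.
    """
    total = sum(priorities.values())
    if total <= 0:
        raise ValueError("priorities must sum to a positive value")
    exact = {name: budget * weight / total for name, weight in priorities.items()}
    allocation = {name: int(share) for name, share in exact.items()}
    leftover = budget - sum(allocation.values())
    # Hand the remaining points to the regions with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - allocation[name], reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


if __name__ == "__main__":
    # Hypothetical weights: an approaching vehicle matters far more than the sky.
    print(allocate_points({"sky": 1, "road": 3, "approaching_vehicle": 6}, 1000))
```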

The technology combines an “agile” micro-opto-electro-mechanical system (MOEMS) LiDAR, pre-fused with a low-light camera, and embedded artificial intelligence, creating software-definable and extensible hardware that can dynamically adapt to real-time demands. iDAR promises to deliver higher accuracy and longer range than existing lidar designs, enabling improved autonomous vehicle safety and performance at a reduced cost.

AEye’s iDAR is designed to intelligently prioritize and interrogate co-located pixels (2D) and voxels (3D) within a frame, enabling the system to target and identify objects within a scene 10-20x more effectively than LiDAR-only products. Additionally, iDAR is capable of overlaying 2D images on 3D point clouds for the creation of True Color LiDAR. Its embedded AI capabilities enable iDAR to use multiple existing and custom computer vision algorithms (the company is promising “thousands”), adding intelligence that path planning software can leverage. The introduction of iDAR follows AEye’s September demonstration of the first 360-degree, vehicle-mounted, solid-state LiDAR system with ranges up to 300 meters at high resolution.
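Overlaying a 2D image on a 3D point cloud is commonly done by projecting each point into the camera image and reading the color at that pixel. The sketch below shows this with a simple pinhole camera model, assuming the lidar and camera share one coordinate frame (x right, y down, z forward). The intrinsics `fx`, `fy`, `cx`, `cy` and both helper functions are illustrative assumptions and do not describe AEye's True Color LiDAR pipeline.

```python
def project_to_pixel(point, fx, fy, cx, cy):
    """Project a 3D point (x, y, z) to integer pixel coordinates (u, v).

    Returns None for points at or behind the camera plane (z <= 0).
    """
    x, y, z = point
    if z <= 0.0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return int(round(u)), int(round(v))


def colorize_points(points, image, fx, fy, cx, cy):
    """Attach an image color to every point that lands inside the image.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    colored = []
    for point in points:
        pixel = project_to_pixel(point, fx, fy, cx, cy)
        if pixel is None:
            continue  # point is behind the camera
        u, v = pixel
        if 0 <= u < width and 0 <= v < height:
            colored.append((point, image[v][u]))
    return colored


if __name__ == "__main__":
    # Tiny synthetic 4 x 4 image whose color encodes the pixel position.
    image = [[(u, v, 0) for u in range(4)] for v in range(4)]
    cloud = [(0.0, 0.0, 5.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0), (10.0, 0.0, 1.0)]
    for point, color in colorize_points(cloud, image, 2.0, 2.0, 2.0, 2.0):
        print(point, color)
```

In a real system the two sensors would also need an extrinsic calibration (rotation and translation) between their frames; a shared frame is assumed here to keep the example short.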

International Defense Security & Technology Your trusted Source for News, Research and Analysis
